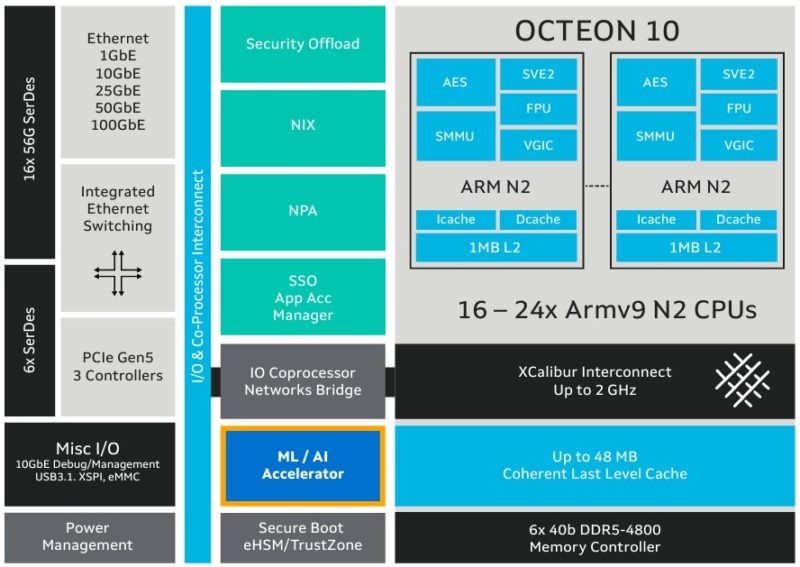

Every so often, there is a piece of hardware we get excited about, and very excited at that. One of those pieces of hardware is the Marvell Octeon 10 DPU. The product actually launched in 2021, but at OCP Summit 2022 we saw several different incarnations and were told that it is now shipping for revenue. A massive Arm Neoverse N2 compute complex, accelerators, and plenty of networking is something to get excited about.

Marvell Octeon 10 Gets Wild at OCP Summit 2022

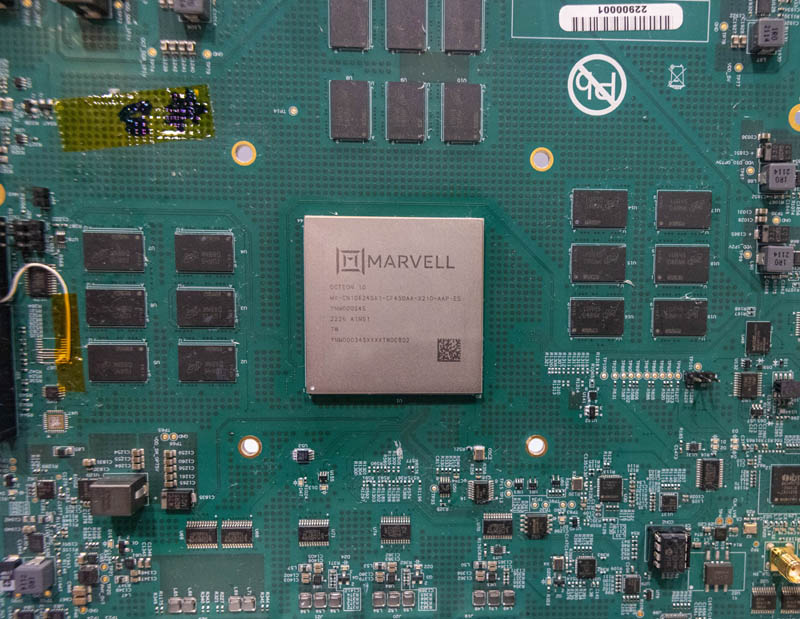

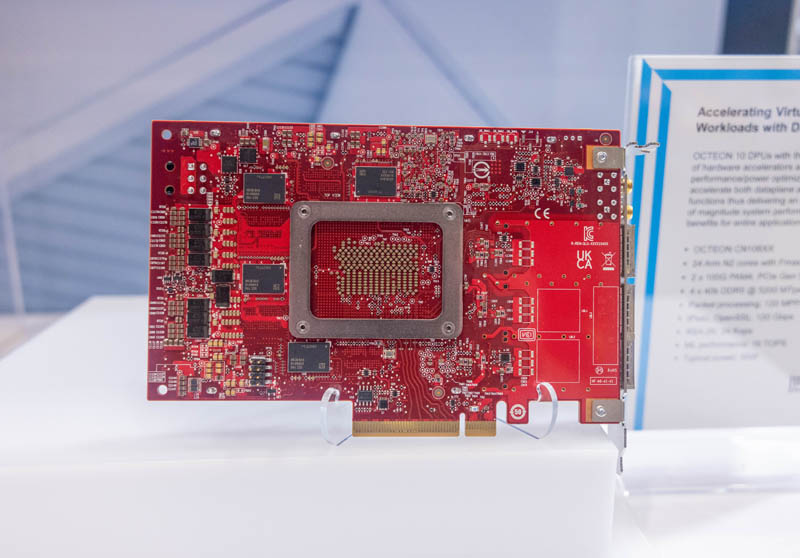

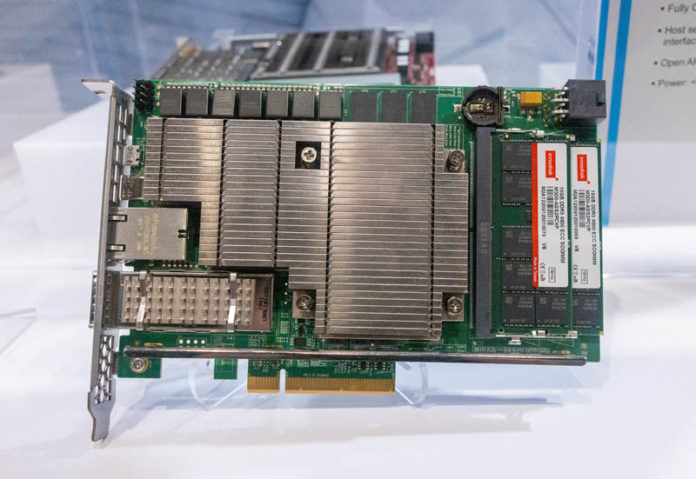

The first version we saw of the Octeon 10 DPU was on a large motherboard. Here we can see the chip flanked by memory, NICs, an ASPEED BMC, and more.

Here is the Octeon 10 DPU with memory all around it. Although this may look like a large chip, a good way to think about it is that it has 24 Arm Neoverse N2 cores, which puts it roughly in the range of a 14- to 16-core Xeon E5 v4 on many workloads, though that will vary a lot depending on which accelerators are used. It is also a 50W TDP part with networking and more built in.

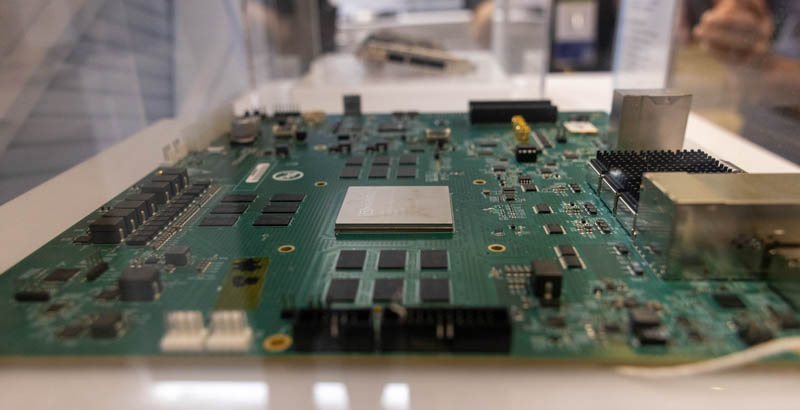

Here is a side view of the board. You may see another card in the background; we will get to that in a bit.

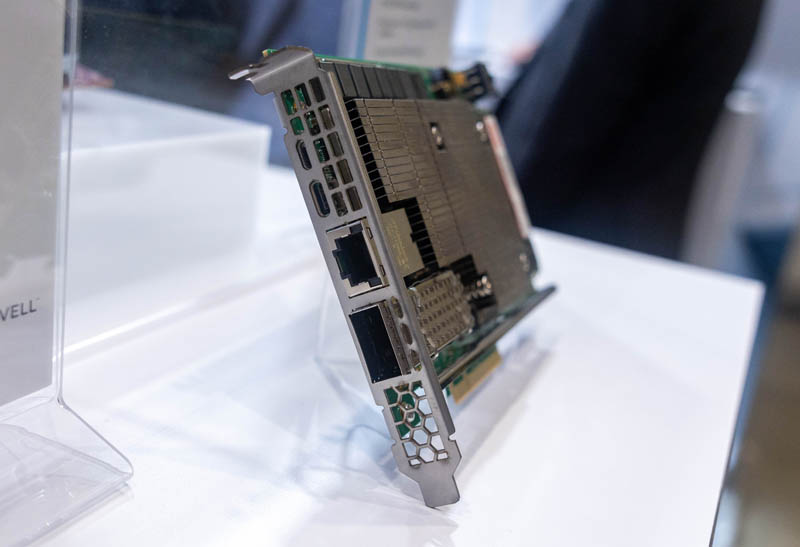

With the show’s plastic cover off, we were able to take a look at the board in more detail. Here we can see the 16x 50GbE networking in four SFP56 cages and three QSFP56 cages. This unit is the CN106XXS model, which has an integrated switch.
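The port count works out if each SFP56 cage is counted as one 50GbE lane and each QSFP56 cage as four 50GbE lanes: 4 × 1 + 3 × 4 = 16 lanes, or 800Gbps in aggregate. Here is a minimal Python sketch of that arithmetic. The one-lane and four-lane-per-cage mapping is our assumption about how the ports are broken out, not a configuration Marvell stated.

```python
# Sketch: how four SFP56 cages and three QSFP56 cages can add up to 16x 50GbE.
# Assumption (not from Marvell): each SFP56 cage carries one 50GbE lane and
# each QSFP56 cage carries four 50GbE lanes, e.g. via breakout.

LANE_GBPS = 50  # line rate of a single 50GbE lane

CAGES = {
    "SFP56": {"count": 4, "lanes_per_cage": 1},
    "QSFP56": {"count": 3, "lanes_per_cage": 4},
}


def total_lanes(cages: dict) -> int:
    """Count 50GbE lanes across all cage types."""
    return sum(c["count"] * c["lanes_per_cage"] for c in cages.values())


def aggregate_gbps(cages: dict, lane_gbps: int = LANE_GBPS) -> int:
    """Aggregate line rate in Gbps, assuming every lane runs at lane_gbps."""
    return total_lanes(cages) * lane_gbps


if __name__ == "__main__":
    lanes = total_lanes(CAGES)
    print(f"{lanes}x {LANE_GBPS}GbE = {aggregate_gbps(CAGES)} Gbps aggregate")
```

Running it prints `16x 50GbE = 800 Gbps aggregate`, which matches the 16x 50GbE figure above under that breakout assumption.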

There are also out-of-band ports, USB ports, time sync headers, and, at the end, PCIe slots. The Octeon 10 is a DDR5/PCIe Gen5 device, and per the criteria in our "What is a DPU? A Data Processing Unit Quick Primer," the Octeon 10 has root ports. DPUs are not just being used as add-in cards. They can also be used in devices like firewalls, VPN endpoints, and more.

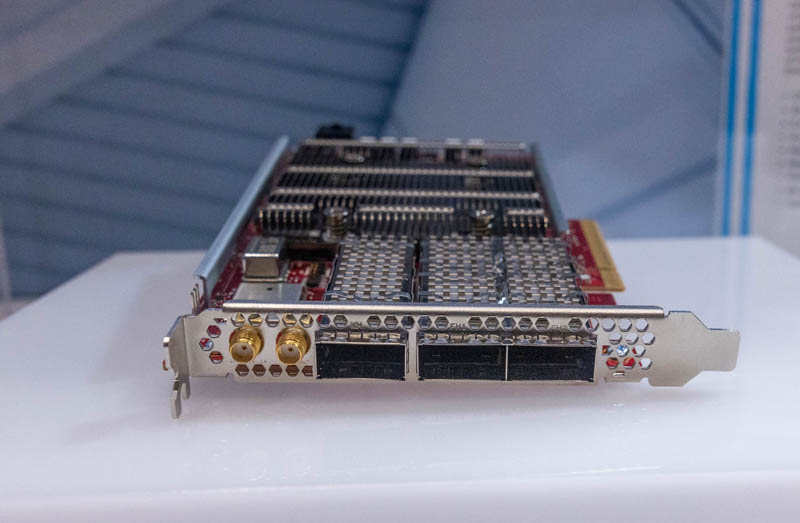

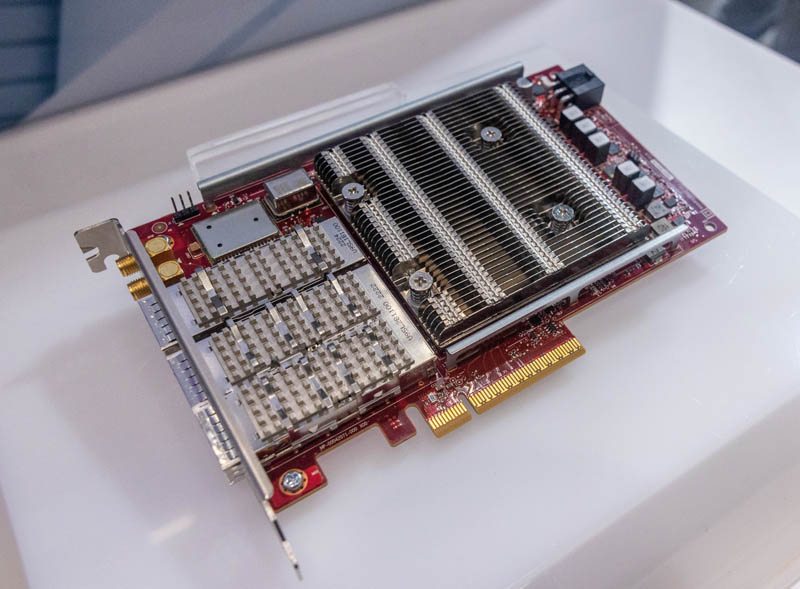

For 5G markets, one may want to use these in a card format instead, and Marvell has that option. As a quick aside, Marvell has 24-core Arm Neoverse N2 DPUs at 40W and 50W with different I/O, so these are very reasonable to cool in a full-height, single-width add-in card.

Here we can see GPS time sync as well as the QSFP28 optical networking cages.

Here is the rear of the DPU.

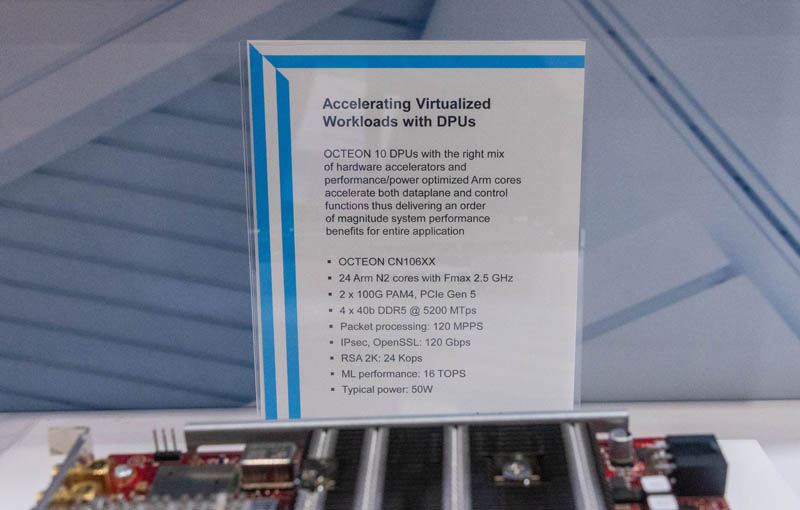

This platform looks a lot like a reference card, but likely one built for a big customer. Here is the placard next to the CN106XX (non-switch) card:

That was interesting, but there was another card that was not formally at the booth. Instead, it came out of a briefcase and an ESD bag for some photos.

Marvell’s Octeon 10 DPU with Expandable Memory

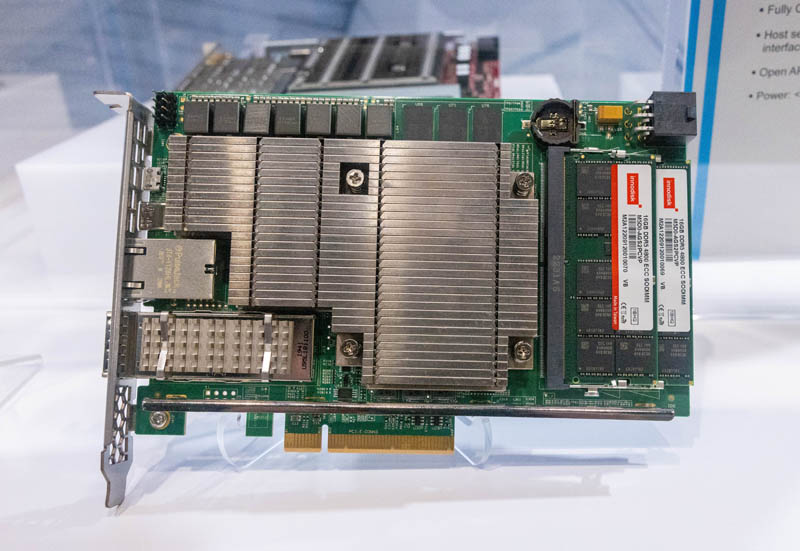

I was told this is an Octeon 10 CN106XX DPU, since the lower port count does not require a switch. This card, however, looks a lot more like a production DPU, and it has a cool feature.

The networking is a big difference here: this is a single-port card (save for the management and USB ports).

What makes this card so interesting is that it not only has onboard DRAM but also DDR5 SODIMM slots. There are two SODIMM slots here, adding another 32GB of memory. On the rear, there is also an M.2 slot.

We are excited about these DPUs because they offer not only Arm’s newest core design for this class but also a lot of offload capability. Marvell (via its Cavium acquisition) has been building network offload engines for things like cryptographic acceleration for years. Octeon 10 also has an INT8- and FP16-capable AI inferencing accelerator onboard.

This is really interesting to us because having a low to midrange Xeon-class (integer) performance CPU with expandable memory, high-speed networking, and accelerators onboard opens a lot of possibilities.

Final Words

The Marvell Octeon 10 DPU may be the fastest DPU of this generation. Marvell typically sells to hyperscale, large telco, and other large organizations, so while this is a very strong hardware platform, it is not yet easy to get outside of those customers. At the same time, which company among NVIDIA, AMD, Intel, Marvell, and others will have the dominant DPU platform is still an open question. Indeed, each of those four companies has a quite different offering in the market, and DPU generations launch on a less predictable cadence than other parts like CPUs and switch ASICs.

For those of our readers who focus on environments like VMware, Marvell is starting with larger infrastructure companies first. This was not one of the original DPUs in VMware Project Monterey and was not part of the VMware ESXi 8 announcement. At the same time, Marvell seemed aware of Project Monterey, so we hope that Marvell gets its DPUs onto VMware’s HCL.

Hopefully, we will get to show you these hands-on soon. This is one of the parts I have been most excited about in this space, as Marvell has awesome specs in this generation. I was told the green PCB PCIe card was a working sample. My hope is that Marvell gets these cards ready and into the channel so folks can use them outside of large organizations. Right now, NVIDIA is doing a better job of that than AMD, Intel, and Marvell.
