This week, Marvell held an AI-focused financial analyst day, and I was there. AI infrastructure has grown massively over the past two years or so, and Marvell wanted to make its investment case for this high-growth area. At the event, Marvell made a few big announcements and shared several market statistics that I hope STH readers will find useful, so I wanted to write up some of my key takeaways.

Accelerated Infrastructure at Marvell

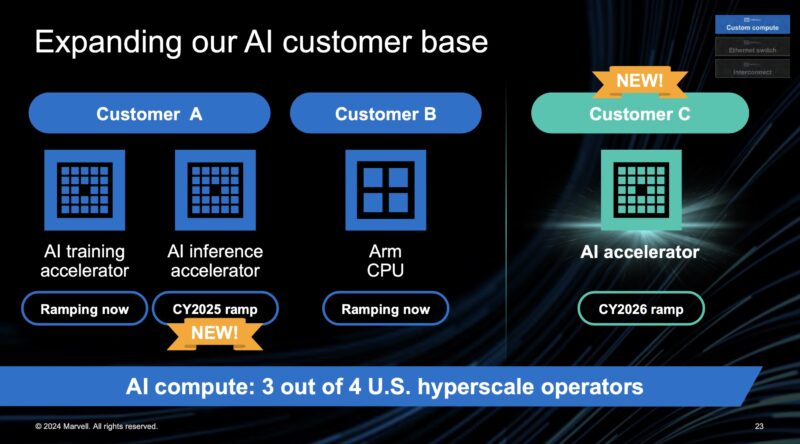

Let us start with the big one: Marvell announced a new AI accelerator win at a third US hyper-scaler. For some context, part of Marvell’s strategy is to use its IP to help hyper-scalers build big custom chips. This is generally a higher-volume but lower-margin play, and it is growing rapidly at Marvell. That 2026 accelerator joins an AI inference accelerator at another US hyper-scaler, and there is also a custom Arm CPU at “Customer B”. One of the funniest moments of the event was when an analyst from a large US bank identified that Arm CPU as Google Axion while the Marvell team sat expressionless, since hyper-scalers generally have strict non-disclosure agreements in place.

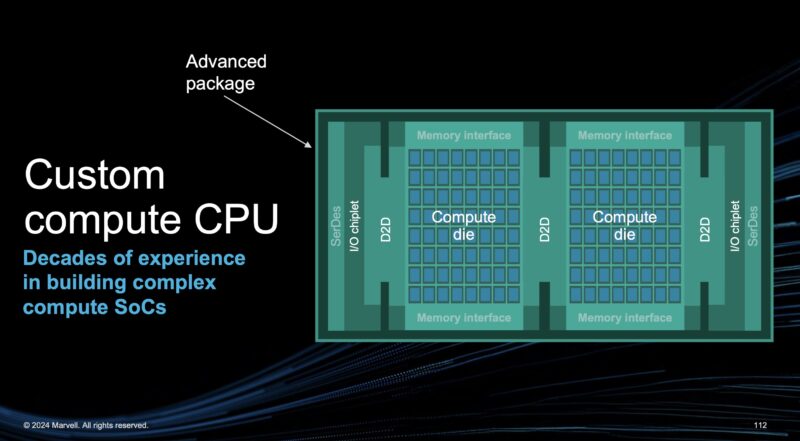

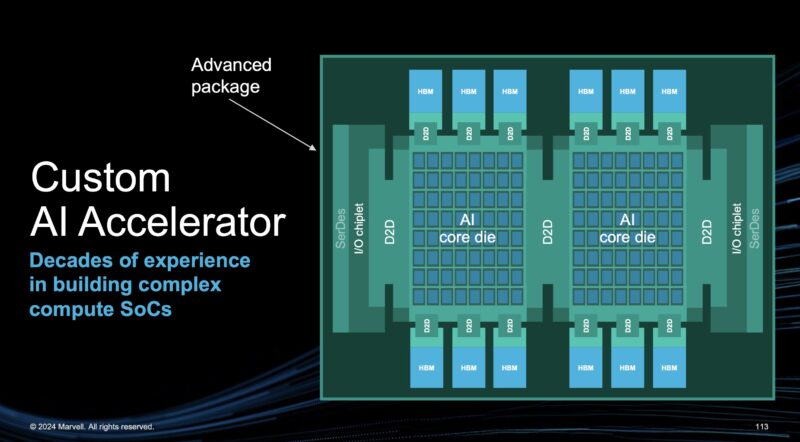

Diving deeper, Marvell talked about its IP for building packages with multiple reticle-size chips and how it works with customers to build those solutions. We will just note that Google Axion does not look like a huge chip.

The company also pointed out how similar some of those CPUs look to AI accelerators from the perspective of the IP that Marvell supplies.

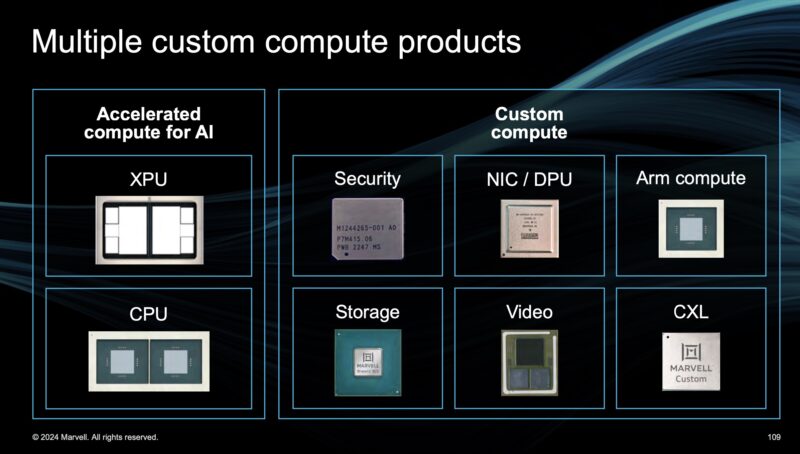

Marvell showed off a number of custom compute products that it makes.

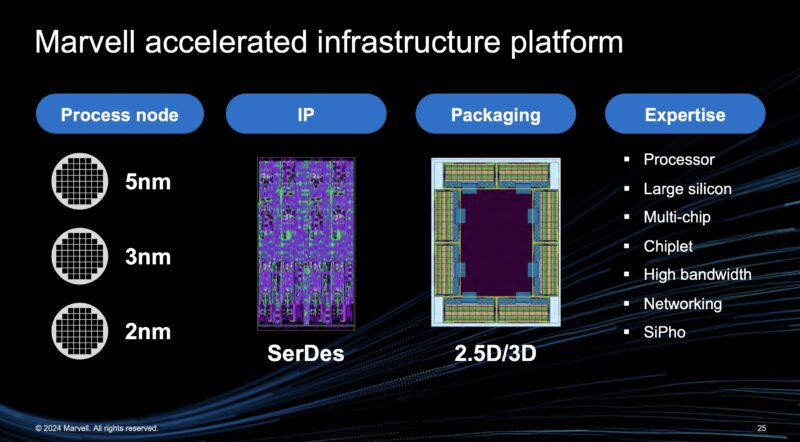

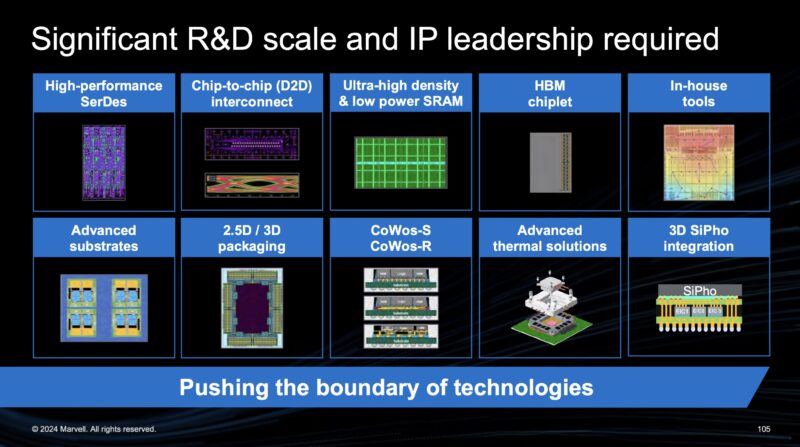

It also discussed its IP portfolio, spanning on-chip and off-chip interconnects, SerDes, photonics, and more.

A big part of Marvell’s investor message is that it believes hyper-scalers will work with Marvell because it has a lot of IP to leverage at different stages of the chip-building process.

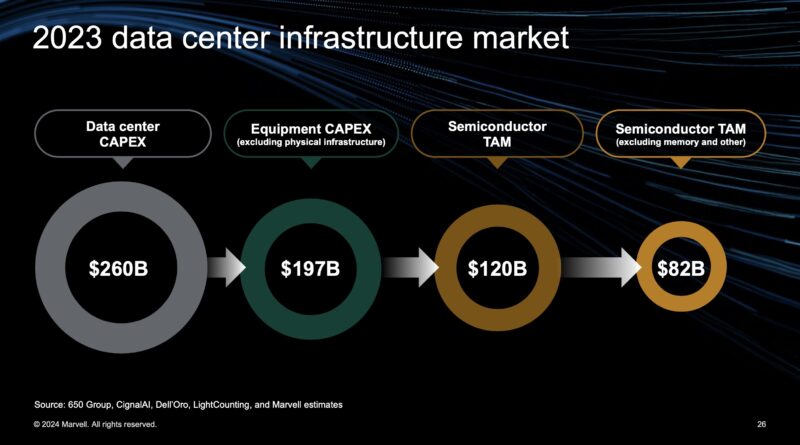

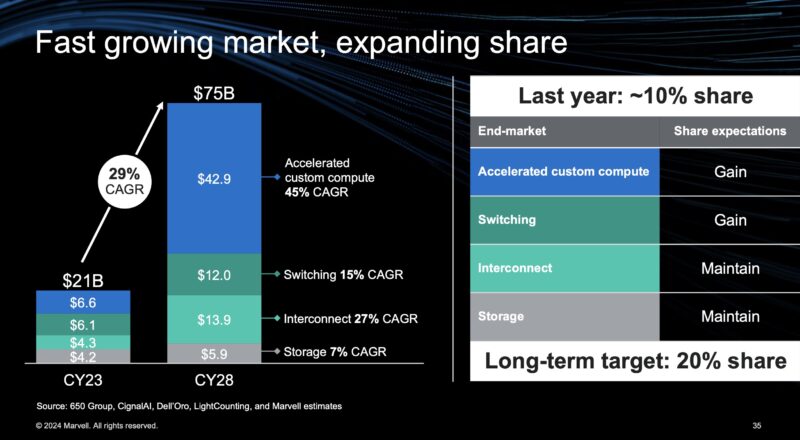

For STH readers who care about market sizes, here is how Marvell breaks down the 2023 data center infrastructure market:

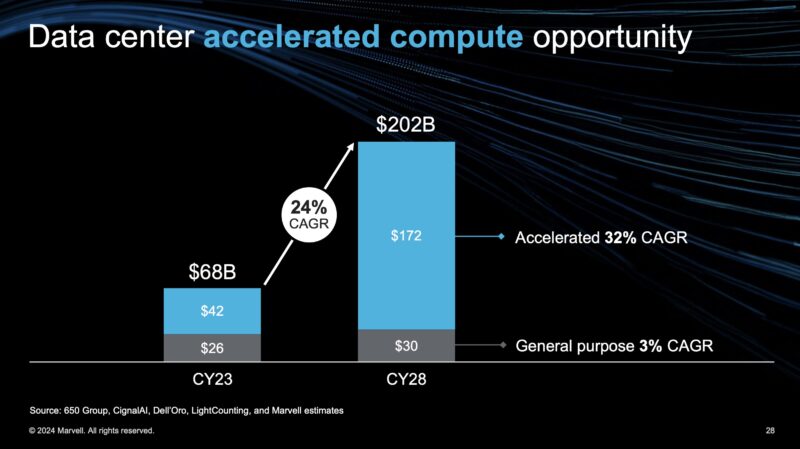

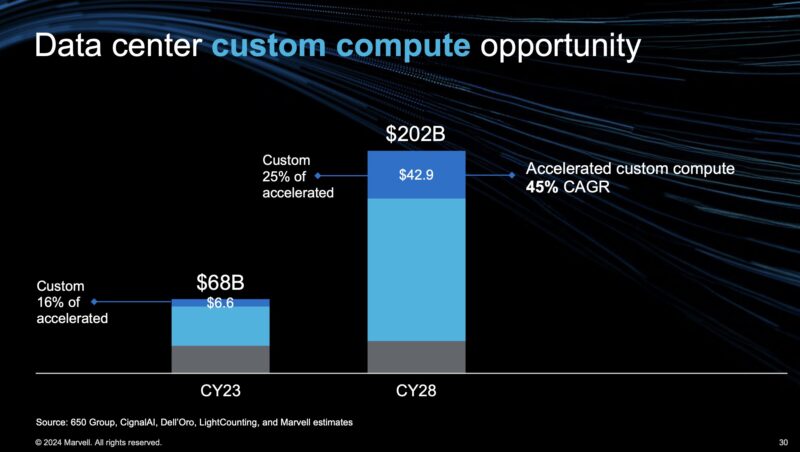

Perhaps one of the more interesting discussions was about just how big the opportunity is. Marvell thinks that traditional general-purpose compute will grow at only a 3% CAGR. That figure is significant because, with inflation at or above 3%, it is effectively flat to negative growth in real terms. By contrast, Marvell expects the accelerated compute market to grow at a huge 32% CAGR.

Within accelerated compute, Marvell expects the custom segment to grow even faster, at a 45% CAGR, and to make up a larger portion of the accelerated compute market by 2028, primarily because hyper-scalers are building their own chips.
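To make those growth rates more concrete, here is a minimal sketch of the compounding arithmetic. It assumes a five-year window (2023 base year to 2028), and both the 3.5% inflation rate and the 15% starting custom share are hypothetical placeholders chosen for illustration, not Marvell figures. Only the 3%, 32%, and 45% CAGRs come from the event.

```python
YEARS = 5  # assumption: 2023 base year to 2028 target year
HYPOTHETICAL_INFLATION = 0.035  # illustrative only; not a Marvell figure
HYPOTHETICAL_CUSTOM_SHARE_2023 = 0.15  # illustrative only; not a Marvell figure


def growth_multiple(cagr: float, years: int) -> float:
    """Total growth factor after compounding `cagr` (0.32 = 32%) for `years` years."""
    return (1.0 + cagr) ** years


def real_cagr(nominal_cagr: float, inflation: float) -> float:
    """Inflation-adjusted annual growth rate: (1 + nominal) / (1 + inflation) - 1."""
    return (1.0 + nominal_cagr) / (1.0 + inflation) - 1.0


def share_after(initial_share: float, segment_cagr: float,
                market_cagr: float, years: int) -> float:
    """Share of a market held by a segment that grows at its own CAGR."""
    return initial_share * ((1.0 + segment_cagr) / (1.0 + market_cagr)) ** years


# CAGRs cited at the event
general, accelerated, custom = 0.03, 0.32, 0.45

print(f"general-purpose: {growth_multiple(general, YEARS):.2f}x, "
      f"real CAGR {real_cagr(general, HYPOTHETICAL_INFLATION):+.1%}")
print(f"accelerated:     {growth_multiple(accelerated, YEARS):.2f}x")
print(f"custom share:    {HYPOTHETICAL_CUSTOM_SHARE_2023:.0%} -> "
      f"{share_after(HYPOTHETICAL_CUSTOM_SHARE_2023, custom, accelerated, YEARS):.0%}")
```

Over five years, 3% compounds to only about 1.16x while 32% compounds to about 4.01x. A segment growing at 45% inside a market growing at 32% multiplies its share by roughly 1.6x, so the hypothetical 15% starting share ends up near 24%.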

Here is Marvell’s breakdown of the growing market it competes in. It expects custom compute, switching, and interconnect to grow quickly and to make up larger portions of that market.

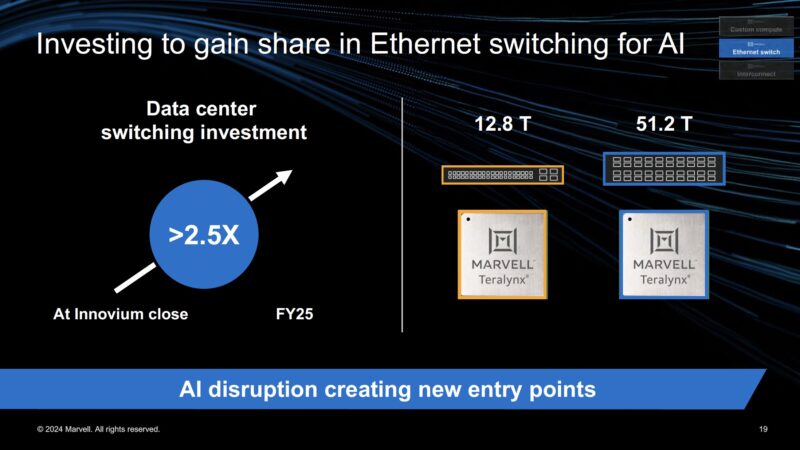

Behind the switching and some of the interconnect growth is Marvell’s investment in switch silicon. The company says it has increased its data center switching R&D by 2.5x, and its Innovium-derived Teralynx 10 51.2T switch chip is starting to ramp. Here, as in a number of markets, Marvell is going head-to-head with Broadcom. While companies like Intel have exited this market, Marvell has a hyper-scale win at 12.8T and hopes to win more at 51.2T.

As a quick note, this investment does not seem focused on lines like Prestera that we see in switches from companies like MikroTik. Those lines make a lot of money for the company but do not face as much competition. Instead, the focus is on mainstream data center switch chips like the one we looked at pre-acquisition in our Inside an Innovium Teralynx 7-based 32x 400GbE Switch piece.
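To put the 12.8T and 51.2T generations in port terms, here is a simple sketch. It assumes every port runs at full line rate with no breakout or overhead; only the 32x 400GbE configuration of the Teralynx 7 switch comes from the article, and the other splits are plain arithmetic rather than announced products.

```python
# Switch chip bandwidth in Gbps; integers avoid floating-point rounding
SWITCH_GBPS = {"12.8T (Teralynx 7 class)": 12_800, "51.2T (Teralynx 10 class)": 51_200}
PORT_SPEEDS_GBPS = (400, 800)


def port_count(switch_gbps: int, port_gbps: int) -> int:
    """Full-speed ports a switch chip can drive, ignoring breakout and overhead."""
    return switch_gbps // port_gbps


for name, gbps in SWITCH_GBPS.items():
    counts = ", ".join(f"{port_count(gbps, p)}x {p}G" for p in PORT_SPEEDS_GBPS)
    print(f"{name}: {counts}")
```

The 4x jump in bandwidth shows up either as 4x the ports at the same speed or as faster ports, and that switch radix in turn affects how many switch tiers a large cluster needs.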

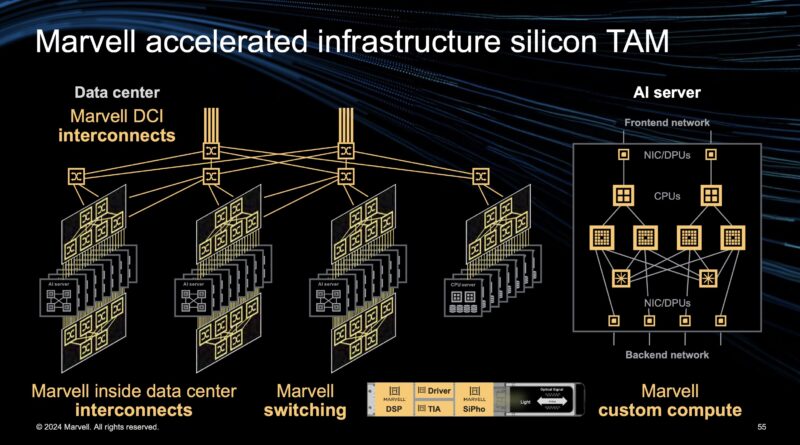

A big part of the day focused on interconnects and just how many there are in AI infrastructure. That includes in-chassis interconnects, as well as data center, front-end, and back-end networks. Beyond switches, Marvell has a big optical portfolio, DSPs, and more, so interconnect is a market where it wants to play.

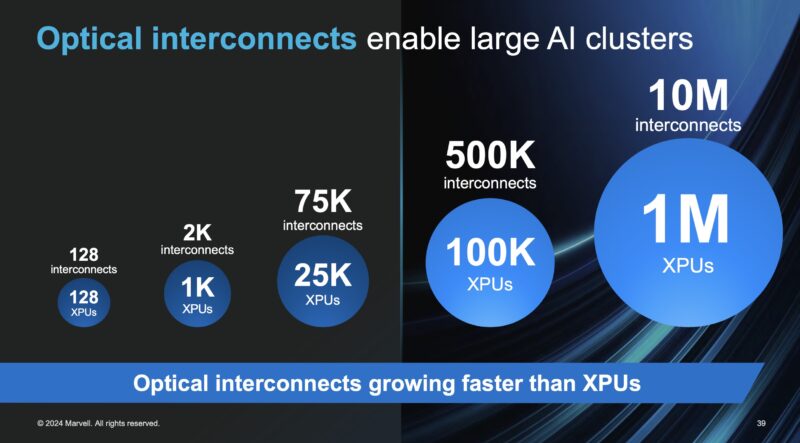

This was an interesting slide. Marvell shows that as cluster size rises, interconnect needs increase much faster. This is because larger clusters need more tiers of switching, and each additional tier adds another layer of switch-to-switch links.
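One way to see why interconnect grows faster than cluster size is a textbook non-blocking fat-tree (folded Clos) model. That topology is our assumption for illustration, not Marvell’s stated design. With switches of radix k, L tiers can connect up to 2(k/2)^L endpoints, and each tier adds roughly one more link per endpoint. The 64-port radix below (for example, a 51.2T chip run as 64x 800G) is a hypothetical choice.

```python
def tiers_needed(endpoints: int, radix: int) -> int:
    """Fewest fat-tree tiers whose capacity 2 * (radix/2)**tiers covers `endpoints`."""
    if radix < 4:
        raise ValueError("radix must be at least 4 for the tree to grow")
    half = radix // 2
    tiers = 1
    while 2 * half ** tiers < endpoints:
        tiers += 1
    return tiers


def total_links(endpoints: int, radix: int) -> int:
    """Links in a full-bisection (non-oversubscribed) fat-tree: endpoint-to-leaf
    links plus one link per endpoint at each switch-to-switch tier boundary."""
    return endpoints * tiers_needed(endpoints, radix)


RADIX = 64  # hypothetical: e.g. a 51.2T switch configured as 64x 800G

for n in (64, 1_024, 8_192, 100_000):
    links = total_links(n, RADIX)
    print(f"{n:>7} endpoints: {tiers_needed(n, RADIX)} tiers, "
          f"{links:>7} links ({links / n:.0f} per endpoint)")
```

In this model, going from 1,024 to 100,000 endpoints (about 98x) takes the design from two tiers to four, so the per-endpoint link count doubles and total links grow about 195x.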

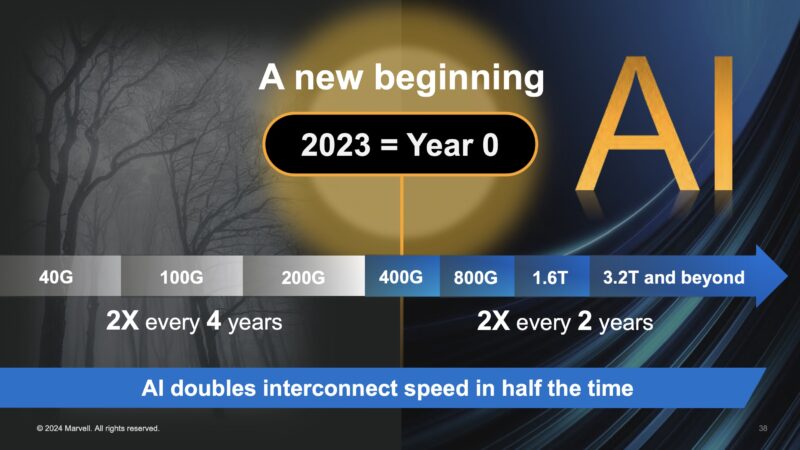

Another interesting, and very important, insight is that interconnect generations are arriving faster. Instead of speeds doubling every four years, the industry is pushing toward a two-year cadence. This is happening on the networking side, and the PCIe folks are also working to speed up generations. Each faster generation lowers the cost per bit of moving data while increasing performance, and that payoff is driving the faster cadence.
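The effect of that cadence change compounds quickly. This sketch compares speed growth over a hypothetical eight-year window with four-year versus two-year doubling, and shows why a generation that doubles bandwidth for less than double the cost lowers cost per bit. The eight-year horizon and the 1.5x cost multiplier are illustrative assumptions, not figures from the event.

```python
def speed_multiple(years: float, doubling_period_years: float) -> float:
    """How many times faster links get after `years` if speed doubles every period."""
    return 2.0 ** (years / doubling_period_years)


def cost_per_bit_change(bandwidth_multiple: float, cost_multiple: float) -> float:
    """Relative cost per bit after a generation (1.0 = unchanged, < 1.0 = cheaper)."""
    return cost_multiple / bandwidth_multiple


HORIZON_YEARS = 8  # hypothetical window
print(speed_multiple(HORIZON_YEARS, 4), speed_multiple(HORIZON_YEARS, 2))  # 4.0 16.0

# Hypothetical generation: 2x the bandwidth for 1.5x the cost
print(cost_per_bit_change(2.0, 1.5))  # 0.75
```

Halving the doubling period turns a 4x improvement into a 16x improvement over the same window, and each generation that costs less than 2x cuts the cost per bit, which is the economic pull behind the faster cadence.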

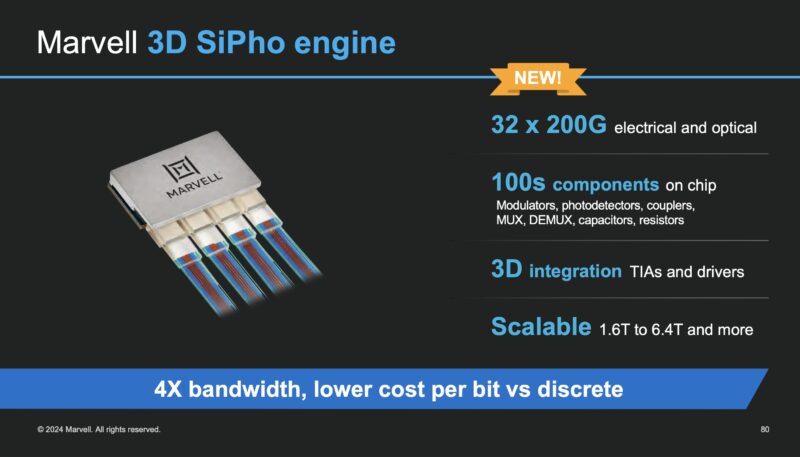

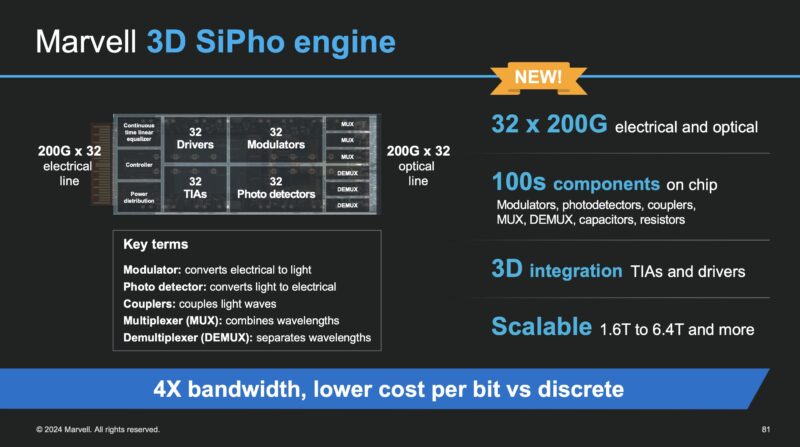

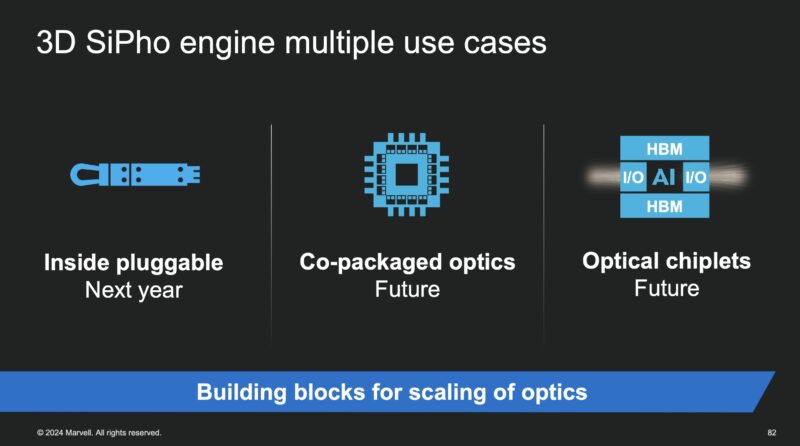

Another really interesting product announcement was the company’s 3D SiPho (silicon photonics) engine. It puts 32x 200G links on a single chip and is designed to be integrated into different applications.

Here is a quick look at the engine in a bit more detail. Notably, while Broadcom is talking about 51.2T co-packaged optics switches, Marvell says its customers are not ready for that yet, although it has much of the IP ready. It also says that as speeds increase, SiPho becomes more important for scaling at lower cost, since it uses lower-cost CW (continuous-wave) lasers and fewer components.

Marvell also discussed using these 3D SiPho engines in a number of applications, including pluggable modules next year and optical chiplets further out.

Of course, co-packaged optics is another potential use for the engine.
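The engine’s headline bandwidth, and how it might map onto a 51.2T co-packaged switch, is simple arithmetic. This sketch assumes every switch lane maps one-to-one onto a 200G optical lane with no spares or redundancy; it is an illustration, not a Marvell design.

```python
import math

LANES_PER_ENGINE = 32  # from the announcement: 32x 200G links per engine
GBPS_PER_LANE = 200


def engine_gbps(lanes: int = LANES_PER_ENGINE, lane_gbps: int = GBPS_PER_LANE) -> int:
    """Aggregate optical bandwidth of one engine in Gbps."""
    return lanes * lane_gbps


def engines_for_switch(switch_gbps: int) -> int:
    """Engines needed to carry all of a switch's bandwidth optically, assuming a
    one-to-one mapping of switch bandwidth onto optical lanes."""
    return math.ceil(switch_gbps / engine_gbps())


print(engine_gbps())               # 6400 (i.e. 6.4 Tbps per engine)
print(engines_for_switch(51_200))  # 8
```

Under that assumption, a 51.2T switch would need eight such engines around the package to take all of its bandwidth optical.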

Final Words

Marvell showed a lot at the investor event, and these were some of the highlights. It is fairly clear that Marvell is looking to take share from Broadcom over the next few years while also targeting large, fast-growing markets.

Something else I learned at the event, from talking to folks at various investment firms (banks, funds, independent research houses, and so forth), is that a lot of them use STH. About 1 in 3 people I spoke with at the event either read or watch STH. I knew we had a lot of readers in that space, but I was unsure of the magnitude. That is a big reason we started the Axautik Group: to build research reports for these investment professionals, as well as for a few other groups. Early next week, we are going to publish our most requested research item, discussing Supermicro’s rise in the AI server market compared to Dell and HPE and covering everything from the high-level view down to hardware comparisons and examples. I am getting ~5 call requests per week on that one, and I cannot spend 5 hours a week on those calls, so we will put it in a report to make life easier. If you are one of those professionals, check the Axautik Group reports next week.
