Perhaps one of the most exciting advancements coming to storage is the adoption of NVMe over Fabrics, or NVMeoF. This technology will change how organizations view storage as technology providers figure out how to extend NVMe over the network, making it more than in-node storage. At Flash Memory Summit 2019, the Marvell 88SS5000 NVMeoF controller was paired with Toshiba BiCS NAND to deliver a dual-port 25GbE NVMeoF solution.

Marvell 88SS5000 NVMeoF Controller in Toshiba SSD

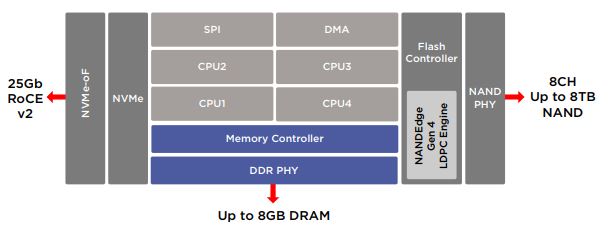

The goal of the Marvell 88SS5000 is to create a solution that combines network IP with SSD NAND controller IP. From what we have been told, the actual NVMeoF functions are relatively lightweight. As a result, Marvell, which has IP in both domains, is putting them together to make a controller that can effectively take raw NAND and put it onto an Ethernet fabric.

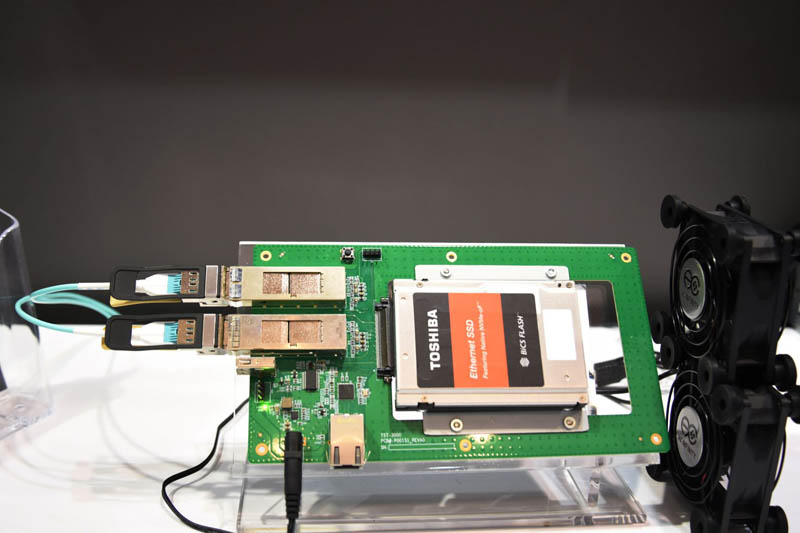

To demonstrate this, Marvell teamed with Toshiba to bring a working product to the show floor. They are showing 25GbE speeds and over 650K IOPS from the device.
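
For a rough sense of how that IOPS figure relates to the link speed, here is a back-of-the-envelope sketch. The 4KiB I/O size is an assumption (the block size used in the demo was not stated), and the calculation ignores Ethernet and RoCE protocol overhead, so treat the result as an estimate rather than a measured number.

```python
def iops_to_gbps(iops: int, io_size_bytes: int) -> float:
    """Convert an IOPS figure to payload throughput in gigabits per second."""
    return iops * io_size_bytes * 8 / 1e9


IOPS = 650_000     # figure quoted from the show-floor demo
IO_SIZE = 4096     # ASSUMPTION: 4KiB I/Os; the demo block size was not stated
PORT_GBPS = 25     # line rate of a single 25GbE port

payload_gbps = iops_to_gbps(IOPS, IO_SIZE)
port_utilization = payload_gbps / PORT_GBPS

print(f"{payload_gbps:.1f} Gb/s payload, {port_utilization:.0%} of one 25GbE port")
# prints: 21.3 Gb/s payload, 85% of one 25GbE port
```

Under that assumption, 650K IOPS would come close to filling a single 25GbE port, which is consistent with the "25GbE speeds" claim.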

Here is the actual Toshiba device using the company’s BiCS flash. As you can see, the SSD itself looks like a normal 2.5″ drive, but it is placed in a carrier to provide power as well as the dual-port 25GbE physical SFP28 cages. We expect these drives will be placed in higher-density arrays, possibly switched, so direct cabling like this will be uncommon.

As an example, we saw the Aupera array at last year’s Flash Memory Summit, which we covered in “Marvell 25GbE NVMeoF Adapter Prefaces a Super Cool Future.”

The impact of this is tangible. Using the Marvell 88SS5000, one can consolidate a traditional server’s CPUs, memory, SSD controller, and networking into a single device. It also allows companies to scale using Ethernet fabrics instead of PCIe fabrics and in a host-independent manner. A major advantage of the recent AMD EPYC 7002 series is the ability to get 128x PCIe Gen4 lanes per host to build bigger systems, but these drives from Marvell and Toshiba mitigate the need for that many PCIe lanes.

Marvell 88SS5000 Key Specs

Here are some of the key specs for the Marvell 88SS5000 NVMeoF controller:

| Domain | Features |
|---|---|
| Processor | Quad Cortex-R5 CPUs; Dynamic Branch Prediction; DMA controller |
| NVMe-oF Interface | Dual-port 25Gb RoCE v2 Interface |
| DDR Controller | Up to 8GB DDR3, DDR4, LPDDR3, LPDDR4 at speeds up to 2400MT/s with ECC support |
| Flash Controller | 8 Channels @ 800MT/s; ONFI 2.2/2.3/3.0/4.0, JEDEC mode and Toggle 1.0/2.0; Hardware RAID; Marvell NANDEdge™ Gen4 LDPC engine |
| NVMe | NVMe Standard Revision 1.3 compliance; 256 outstanding I/O commands; 132 total queue pairs; 64 Virtual Functions; MSI and MSI-X interrupt mechanisms; T-10 DIF and end-to-end protection |
| TCG | OTP support for secure drive configuration; AES encryption hardware; Media re-encryption hardware |
| Package | Available in standard 17mm x 17mm (625 ball) BGA package |
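
To put the flash and network figures from the table side by side, here is a small sketch comparing the peak NAND interface bandwidth with the combined Ethernet line rate. It assumes an 8-bit data bus per flash channel (not stated in the table) and uses raw peak rates only, so it says nothing about sustained performance.

```python
CHANNELS = 8               # flash channels, from the spec table
TRANSFERS_PER_SEC = 800e6  # 800MT/s per channel, from the spec table
BUS_WIDTH_BYTES = 1        # ASSUMPTION: 8-bit data bus per channel
ETH_PORTS = 2              # dual-port, from the spec table
ETH_PORT_GBPS = 25         # 25Gb per port, from the spec table

# Peak raw NAND interface bandwidth in gigabits per second
flash_gbps = CHANNELS * TRANSFERS_PER_SEC * BUS_WIDTH_BYTES * 8 / 1e9
# Combined Ethernet line rate in gigabits per second
network_gbps = ETH_PORTS * ETH_PORT_GBPS

print(f"Flash interface peak: {flash_gbps:.1f} Gb/s")
print(f"Network line rate:    {network_gbps} Gb/s")
```

With these assumptions the two numbers land close to each other (roughly 51Gb/s versus 50Gb/s), which suggests the flash back end and the dual 25GbE front end are sized to match.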

Update: In perhaps the most poorly written press release of 2019, Marvell noted the 88SS5000. However, we were told that Toshiba actually showed off a drive with their 88SN2400 controller. Our apologies, but when a vendor puts one model in a press release along with a demo story and does not mention the demo is using a different controller, it is very hard to know what is underneath.

I stopped at their FMS booth. My big question on the 24-drive chassis they were showing: How does the cost of that chassis with dual 100Gb switches compare to an AMD platform with a few 100Gb NICs?

The switches won’t be cheap and the base AMD platform gives you plenty of PCIe on the lowest chips to make that a meaningful competitor.

It’ll definitely be interesting to see how this market plays out.

Since these devices will run their proprietary “operating system”, I could imagine them turning into a security nightmare. Intel Management Engine similarities …

[quote]but these drives from Marvell and Toshiba mitigate the need for that many PCIe lanes.[/quote]

That should say “for storage uses”. That frees up lanes so you could, say, build VM hosts or GPU compute boxes that have tons of GPUs and a large Ethernet pipe and don’t spend any PCIe on local storage. That lets the servers get a lot more powerful for their real purpose and not pull double duty to host storage too.

I’m curious what this looks like cost-wise, moving that NVMeoF/Ethernet translation layer from the box to individual drives. As Wes mentioned, you’ll still need to perform switching somewhere.

Rather than rack scale or higher for this, I could see it being a big boon “at the edge”, where you’re space confined. That way adding storage becomes a “do I have the watts and ports” vs “do I have XYZ to support these drives?” I’m also thinking the PowerEdge MX might benefit from this.

Very curious to see if the IPv6 stack in these embedded NVMeoF RoCEv2 server chips’ firmware is any good.

RoCEv2 adds potential for IPv6 to replace non-Ethernet/IP networks in the enterprise backend.