The Wild Side That Even AMD Does Not Highlight

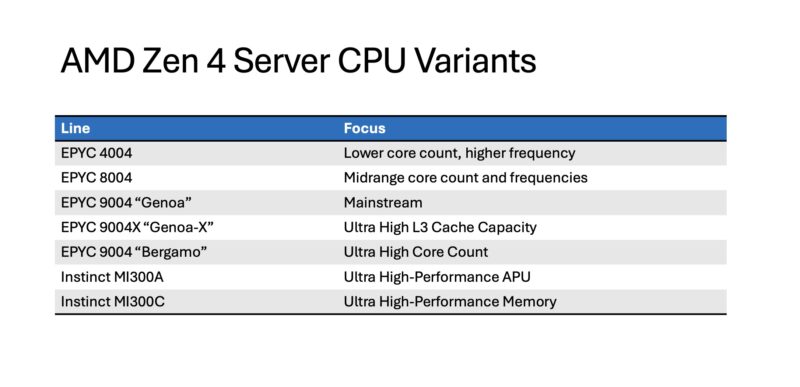

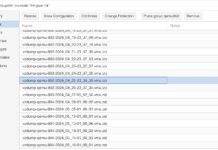

While doing this, we had a strange stretch where we were testing the popular Gigabyte server, finishing the HPE ProLiant DL145 Gen11 review, and working on some future pieces, all at once. AMD Zen 4 has an incredible breadth of platforms that all use the same instruction set. Of course, Zen 5 is now out with Turin, but here is a quick snapshot of the parts we were testing this month with the Zen 4 instruction set, whether full-cache Zen 4 or the cache- and frequency-reduced Zen 4c variants:

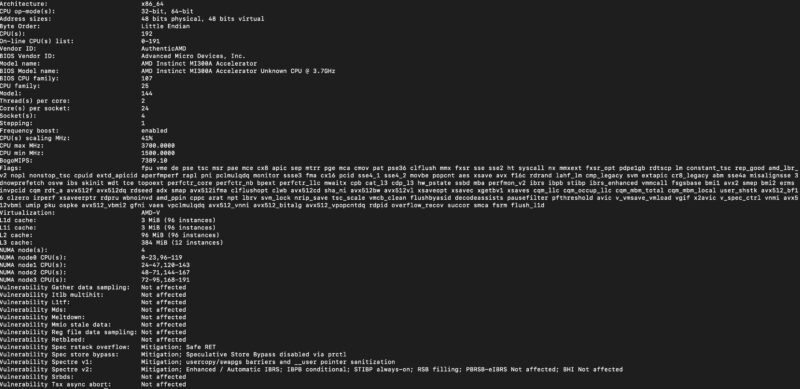

AMD’s Zen 4(c) spans all the way from a sub-$1,000 server up to high-performance AMD Instinct MI300A APUs with integrated GPU IP and HBM. Those are the parts found in El Capitan, currently the #1 supercomputer on the Top500 list. If you do not believe this, here is the lscpu output from an AMD Instinct MI300A node we are using right now, where you can see AMD-V is present.

For a long time, the industry has been focused on the challenges of Xeon to EPYC live migration, but here is the other way to look at it. Xeon E-2400 does not support AVX-512, which is an instruction set difference between it and other Xeons. High-density Sierra Forest (Xeon 6700E) uses a scaled-back instruction set and does not support SMT. By contrast, that small Gigabyte server that cost us $899 for the barebones uses the same CPU architecture as supercomputer chips, HPC chips, high-density chips, and more. This is something we took for granted, but when you see it, it is somewhat crazy to experience.

It would be silly to take a workload like a web server that runs on the AMD EPYC 4464P and run it on an AMD Instinct MI300A supercomputer APU. That is probably why you do not hear AMD trumpeting this capability. At the same time, you can do it, and that is quite cool.
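To make the instruction set point a bit more concrete, here is a minimal sketch of how one might compare the CPU feature flags of two hosts before moving a workload between them. It is not a tool we used for this piece. The function names and the three sample flag strings are hypothetical and heavily truncated for illustration; they are not real captures from the systems above. The idea is simply that a workload can rely on any flag its source host exposes, so flags missing on the destination are the ones to worry about.

```python
def parse_flags(cpuinfo_text: str) -> set[str]:
    """Return the feature flags from the first 'flags' line of
    /proc/cpuinfo-style text (x86 Linux layout is assumed)."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            return set(value.split())
    return set()


def missing_on_destination(source: set[str], destination: set[str]) -> set[str]:
    """Flags the source host exposes that the destination host lacks."""
    return source - destination


# Hypothetical, truncated flag lines for illustration only.
# HOST_A and HOST_B stand in for two hosts sharing one instruction set;
# HOST_C stands in for a host without AVX-512 and with a different
# virtualization extension (vmx instead of svm).
HOST_A = "flags\t\t: fpu sse2 avx avx2 avx512f svm"
HOST_B = "flags\t\t: fpu sse2 avx avx2 avx512f svm"
HOST_C = "flags\t\t: fpu sse2 avx avx2 vmx"

if __name__ == "__main__":
    a, b, c = (parse_flags(text) for text in (HOST_A, HOST_B, HOST_C))
    print("A -> B missing:", sorted(missing_on_destination(a, b)))
    print("A -> C missing:", sorted(missing_on_destination(a, c)))
```

On a Linux x86 host, the same `parse_flags` function could be fed the contents of `/proc/cpuinfo` instead of the sample strings. A real hypervisor does more than a set difference here, since it can also mask features from guests, so treat this as a first-pass sanity check only.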

Final Words

At STH, we get hands-on time with anything, and that is one of the reasons I have been very hard on the Intel Xeon E series. This popular Gigabyte barebones server really kickstarted a discussion within the team about licensing since Will has been deploying the EPYC 4004 series as a cost reduction measure for some time, mostly predicated on license cost savings. As we went back to our cloud-native series from 2024, we realized that this same story is playing out across a number of different product lines.

We knew from that series, for example, that the EPYC 9004 and EPYC 9005 series parts performed very well, but there were a few surprises, like the high-frequency EPYC 9575F hitting the same SPECrate2017_int_base per-core ratio as the 8-core Intel Xeon E and EPYC 4004 parts. There has always been a penalty for using higher-density chips, to the point that we have not looked at that comparison in a long time.

We reviewed the HPE ProLiant DL145 Gen11 as a low-cost and low-power edge server, which is its target market. When we looked back at the data, it feels absurd that the 48-core EPYC 8434P could offer significantly more performance from its 48 cores than a dual Xeon Gold 6252 server, with half the sockets and close to half the power, but here we are.

In the coming weeks, we will have reviews of that Gigabyte R113-C10 server and more as a result of this. It felt like it was time to take a step back and look at this more broadly from a virtualization perspective, since so many folks have projects to optimize license costs given some of the changes in that market, and also to lower the power of traditional compute to make room for more AI acceleration in data centers.

Can we get a review of the GB system? That looks neat.

We’ve got a 512 2P Xeon virtualization cluster that we’ll transition in 2H. You’re right the licensing costs more than the hardware.

Great charts! STH always has an excellent perspective. I’d like to learn more about that server too

Since VMware came out with the per core licenses I’ve bought processors in line with those licensing bands. I will have to look at buying AMD Epyc next go around with performance being that much better.

Supermicro AS -1015A-MT has better cooling and 2 x M.2 NVME

MS Server comes with 16 core license to begin with. If you need more than that they sell them in 2 core and 16 core packs for sure. Do note that Server Standard only allows for 2 VMs whereas Datacenter allows for unlimited VMs. Plus if you desire 4 servers at 16 cores each it costs the same as 2 servers with 32 cores each.

@Bob

I know that all too well. We are in the final stages of an upgrade and the VMware licensing was 1/2 of our budget since it is now per core.

@Jason

The Epyc CPUs have been very nice for a while. Before VMware went to the per core license model they were doing a 32 core license model. Basically every license was for 32 cores and if you had say dual 64 core CPUs you needed 4 licenses. At one point in time it was a “waste” of a license to do anything less than 32 CPUs as you weren’t using the full license amount and Intel topped out at 40 cores.

While there is no denying the reality, I personally find per-core licensing without regard to core strength to be weird. I also think such licensing is what led to those maladaptive IBM Power cores with 8-way SMT.

If a licensing model does not take into account the shift in Moore’s law from frequency scaling to multiple cores and as a result actively discourages the power savings obtained from running more cores at slower clocks, what kind of race to the bottom is that?

Hi Patrick… Did you know HPE recently introduced our offer for virtualization – HPE VM Essentials, based on the Morpheus Data platform? It can manage existing VMware VMs and offers the ability to migrate workloads to the KVM based HPE VM hypervisor.

We’ve licensed it per socket…

Nice to mention XCP-NG, but unless you need support, it is free of charge and fully open-source.

I found this hilarious. It also supports my alt theory that “the cloud” mostly exists because IT joes are mostly terrible at math and/or analytics.