Patrick took a first look at the LSI SAS 9207-8e host bus adapter recently. Now it’s time to see if the new card can deliver the performance we expect. In this article, we test throughput and IOPS performance in an attempt to answer the age-old question: how much faster is this little guy than its predecessors? It’s a very real question. The previous hardware refresh replaced the LSI 9200-8e with the 9205-8e, which featured a new and faster chip but delivered essentially the same performance. Both cards perform very well, to be sure, but I hoped for more. Hopefully this update won’t disappoint.

We can realistically expect better performance from the SAS 2308-based LSI SAS 9207-8e. The earlier 9200 and 9205 cards are held back considerably by the PCIe2 bus. A SAS 2008-based card scales its throughput to only five or six SSD drives, after which performance levels off. Maximum throughput for the 9200 and 9205 is about 2,600 MB/Second on most server platforms, and a bit higher, around 2,900 MB/Second, on new Xeon E5s. With the move to PCIe3, the LSI SAS 9207-8e should be able to do better.
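For context, the theoretical link rates show why PCIe2 caps these cards. The short Python sketch below computes raw per-direction bandwidth from the published signalling rates (5 GT/s with 8b/10b encoding for PCIe2, 8 GT/s with 128b/130b encoding for PCIe3). These are line-rate ceilings only; packet headers and flow control eat into them, so real cards land below the printed figures. The function name is illustrative.

```python
# Raw per-direction PCIe bandwidth, before packet/protocol overhead.
# (transfers per second per lane, encoding efficiency)
GENERATIONS = {
    "PCIe2": (5.0e9, 8 / 10),     # 5 GT/s, 8b/10b encoding
    "PCIe3": (8.0e9, 128 / 130),  # 8 GT/s, 128b/130b encoding
}

def link_mb_per_s(gen: str, lanes: int) -> float:
    """Raw payload bandwidth in decimal MB/s for one direction of a link."""
    transfers_per_s, efficiency = GENERATIONS[gen]
    bits_per_s = transfers_per_s * efficiency * lanes
    return bits_per_s / 8 / 1e6

for gen in ("PCIe2", "PCIe3"):
    print(f"{gen} x8: {link_mb_per_s(gen, 8):,.0f} MB/s")
```

A PCIe2 x8 link works out to 4,000 MB/Second raw, so the 2,600 to 2,900 MB/Second seen on PCIe2 platforms is roughly two thirds to three quarters of the link. PCIe3 x8 nearly doubles the ceiling to about 7,877 MB/Second.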

Benchmark Setup

As usual, we test the LSI 9207-8e card using two large multi-CPU servers, one Intel and one AMD. The AMD server is a quad Opteron 6172 with 48 cores; the Intel server is a dual Xeon E5 with a pair of 2.9 GHz Intel Xeon E5-2690 CPUs, for a total of 16 cores and 32 threads. The primary difference between the two systems is their PCIe architecture. The AMD system uses a separate northbridge IO controller that provides PCIe2 lanes, while the Xeon has its PCIe functionality built into the CPUs and delivers PCIe3.

For all tests, the LSI card is paired with eight Samsung 830 128 GB SSD drives. The drives are initialized but unformatted so that no operating system caching takes place. All tests are run as JBOD (eight separate drives) rather than with any RAID. In fact, while the LSI 2308 chip supports RAID 0, 1, 10, and 1E, the firmware on the 9207 card is limited to JBOD only. A version with internal connectors, the LSI SAS 9217-8i, does support these basic RAID levels (RAID 0, 1, 1E, and 10). For those wanting both basic RAID and external connectors, it is possible to re-flash the LSI SAS 9207-8e with the 9201 firmware. Beware: doing so may affect your warranty, but it should be possible.

Test Configurations

For this test we have two servers: a quad AMD Opteron 6172 machine and a dual Intel Xeon E5-2690 machine.

AMD Server Details:

- Server chassis: Supermicro SC828TQ+

- Motherboard: Supermicro MBD-H8QGi-F

- CPU: 4x AMD Opteron 6172 Magny-Cours 2.1 GHz

- RAM: 128GB (16x8GB) DDR3 1333 ECC

- OS: Windows Server 2008 R2 SP1

- PCIe Bus: PCIe2

Xeon Server Details:

- Server chassis: Supermicro SC216A

- Motherboard: Supermicro X9DR7-LN4F

- CPU: 2x Intel Xeon E5-2690 2.9 GHz

- RAM: 128GB (16x8GB) DDR3 1333 ECC

- OS: Windows Server 2008 R2 SP1

- PCIe Bus: PCIe3

Disk Subsystem Details:

- Chassis: 24-bay Supermicro SC216A with JBOD power board

- Disks: 8x Samsung 830 128GB drives

- Cables: 2x 1-meter SFF-8088 to SFF-8088 shielded cables

Benchmark Software:

- Iometer 1.1.0-RC1 64-bit for Windows

- Unless otherwise noted, all tests were run with 4 workers, a test size of 4 million sectors per disk, and a queue depth of 32. Each test run begins with a 30-second warm-up followed by a two-minute run. If we were testing the drives rather than the card, a much longer run time would be appropriate, but for measuring card capabilities a short run will do.

One important note: you do not need big, beefy servers to achieve the performance results in this article. In fact, a single-CPU machine, even one with a middling Xeon E3 processor, could match these results or even do better, since it does not have to spend time pushing data from CPU to CPU.

LSI SAS 9207-8e Benchmark Results

The four pillars of IO performance are small block random reads, small block random writes, large block sequential reads, and large block sequential writes. Small block results are usually reported as IOPS and large block results as throughput. Our tests focus on reads rather than writes for a simple reason: while hard drives and SSD drives perform very differently when reading versus writing, to a host bus adapter it’s all just data transfer. To test the basic capabilities of the 9207 host bus adapter, we need only test reads.

Our small block tests use 4KB transfers. For large transfers, we use 1MB chunks.
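Because throughput is simply IOPS multiplied by transfer size, the two block sizes stress different things. Here is a minimal Python sketch, assuming 4KB means 4,096 bytes, 1MB means 1,048,576 bytes, and MB/Second is decimal (10^6 bytes). The function names are hypothetical, and the 4,100 MB/Second and 50,000 IOPS inputs are taken from the results reported later in this article.

```python
BLOCK_4K = 4 * 1024     # assumption: "4KB" = 4,096 bytes
BLOCK_1M = 1024 * 1024  # assumption: "1MB" = 1,048,576 bytes

def mb_per_s(iops: float, block_bytes: int) -> float:
    """Convert an IOPS rate at a fixed block size to decimal MB/s."""
    return iops * block_bytes / 1e6

def iops_needed(target_mb_per_s: float, block_bytes: int) -> float:
    """IOPS required to sustain target_mb_per_s at a fixed block size."""
    return target_mb_per_s * 1e6 / block_bytes

print(f"{iops_needed(4100, BLOCK_1M):,.0f} IOPS at 1MB for 4,100 MB/s")
print(f"{iops_needed(4100, BLOCK_4K):,.0f} IOPS at 4KB for 4,100 MB/s")
print(f"{mb_per_s(50_000, BLOCK_4K):,.1f} MB/s from 50,000 IOPS at 4KB")
```

At 1MB, about 3,910 IOPS moves 4,100 MB/Second. At 4KB, the same throughput would take roughly a million IOPS, well past the card's rated 700,000. That is why small block tests are reported as IOPS and large block tests as throughput.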

Throughput Results – PCIe3

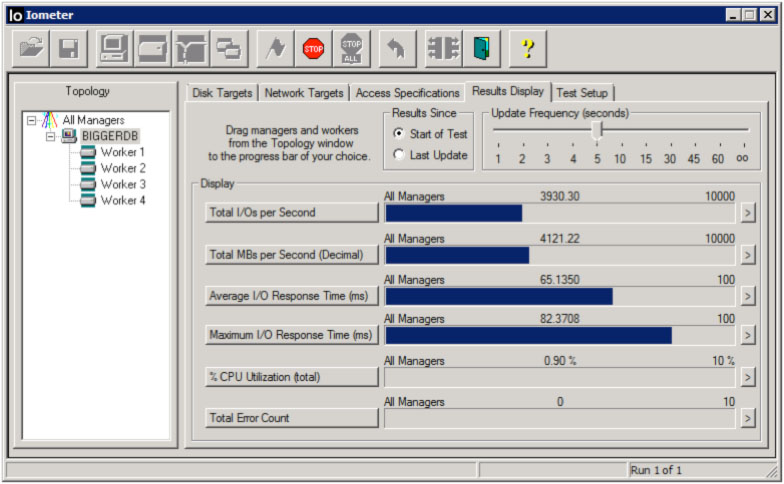

For our first test, we configure Iometer on the Intel Xeon with four workers operating over all eight disks with a transfer size of 1MB. The resulting throughput is impressive at over 4.1 GB/Second. Right from the start, the LSI SAS 9207-8e is breaking records for an 8-port card.

At this level of performance, the limiting factor is likely the eight Samsung 830 SSDs, not the card. To find the card's absolute maximum throughput, we run the test a second time after a secure erase of the Samsung drives. This time, instead of using a warm-up period and a full run, we take a snapshot of throughput at the very beginning of testing, when the drives are absolutely fresh. Throughput exceeds 4,400 MB/Second for a brief period, about 30 seconds. That works out to 550 MB/Second per drive, which is very close to the theoretical maximum for any SATA3 drive. A reasonable conclusion is that the LSI 2308 chip on which the LSI SAS 9207-8e is based has enough raw performance to handle any eight SATA3 drives. This is very good news.
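The per-drive arithmetic behind that conclusion is easy to check. This sketch assumes "SATA3" refers to the 6 Gb/s line rate with 8b/10b encoding, which gives a raw ceiling of 600 MB/Second before protocol overhead. The aggregate inputs are the 4,100 and 4,400 MB/Second figures measured above; the helper name is illustrative.

```python
SATA3_LINE_RATE_BPS = 6e9     # 6 Gb/s signalling rate
ENCODING_EFFICIENCY = 8 / 10  # SATA uses 8b/10b encoding

# Raw per-drive ceiling in decimal MB/s, before protocol overhead.
sata3_ceiling_mb_s = SATA3_LINE_RATE_BPS * ENCODING_EFFICIENCY / 8 / 1e6

def per_drive(aggregate_mb_s: float, drives: int) -> float:
    """Split an aggregate throughput evenly across the attached drives."""
    return aggregate_mb_s / drives

for label, aggregate in (("steady state", 4100), ("fresh drives", 4400)):
    each = per_drive(aggregate, 8)
    print(f"{label}: {each:.1f} MB/s per drive, "
          f"{each / sata3_ceiling_mb_s:.1%} of the raw SATA3 ceiling")
```

At 550 MB/Second per drive, the fresh-drive burst sits above 90 percent of the raw SATA3 ceiling, leaving little headroom for any SATA3 SSD to go faster.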

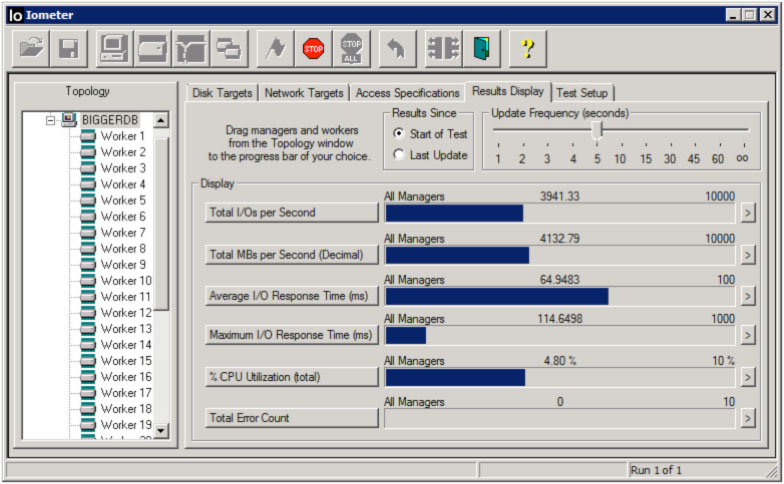

Some cards have trouble servicing a large number of IO requestors. The 9207 does not. On the Intel Xeon E5 server, configured with 32 Iometer workers as a form of torture test, throughput does not suffer at all; results are essentially identical to our four-worker test.

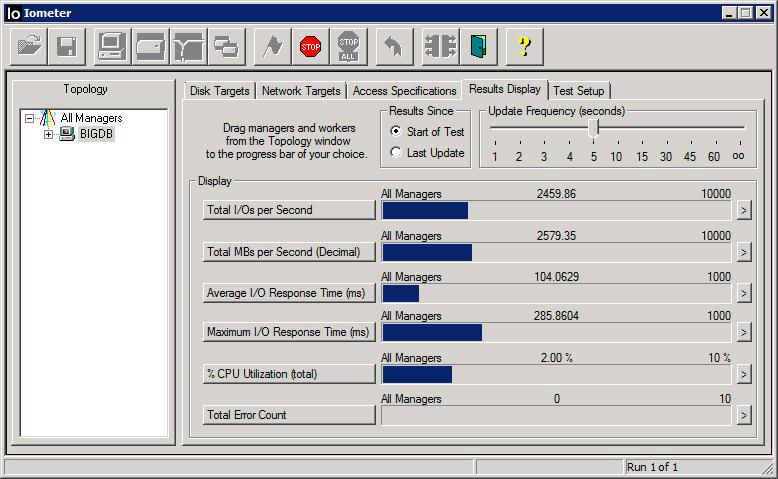

LSI SAS 9207-8e Throughput Results – PCIe2

On the AMD server, with its slower PCIe2 bus, throughput is lower at 2,580 MB/Second. In earlier tests, not reproduced here, an Intel Xeon E5 server with its slots forced to PCIe2 drove the same card to around 2,900 MB/Second. Interestingly, these results are essentially identical to those of the 9200-8e and the 9205-8e on the same platforms, evidence that the PCIe bus, not the LSI hardware, has been the limiting factor for these earlier cards. Don't expect any new card, from any vendor, to deliver significantly better throughput in PCIe2 servers.
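To put those PCIe2 numbers in perspective, this sketch compares them with the raw PCIe2 x8 link rate of 4,000 MB/Second (8 lanes at 500 MB/Second each after 8b/10b encoding). We did not measure where the remaining bandwidth goes. Protocol overhead and platform differences are the likely causes, but that is an assumption. The helper name is illustrative.

```python
# Raw PCIe2 x8 rate: 8 lanes x 500 MB/s per lane after 8b/10b encoding.
PCIE2_X8_RAW_MB_S = 8 * 500.0

observed_mb_s = {
    "AMD Opteron (PCIe2)": 2580,
    "Xeon E5 forced to PCIe2": 2900,
}

def link_utilization(observed: float, raw: float = PCIE2_X8_RAW_MB_S) -> float:
    """Fraction of the raw link rate actually delivered."""
    return observed / raw

for platform, mb_s in observed_mb_s.items():
    print(f"{platform}: {link_utilization(mb_s):.1%} of raw PCIe2 x8")
```

Both platforms deliver roughly 65 to 73 percent of the raw link. This fits the conclusion that the bus, not the SAS controller, sets the limit on PCIe2.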

IOPS Results

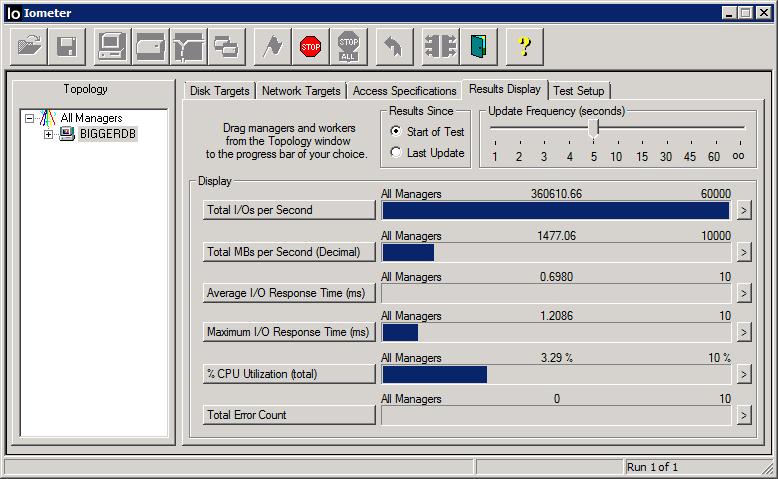

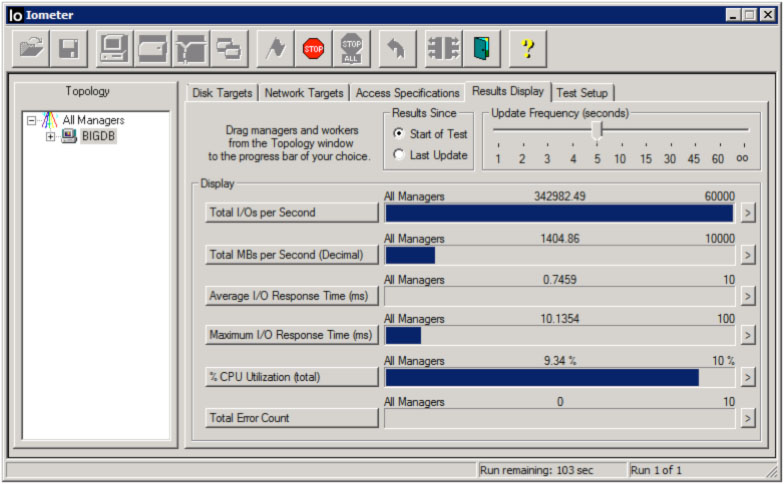

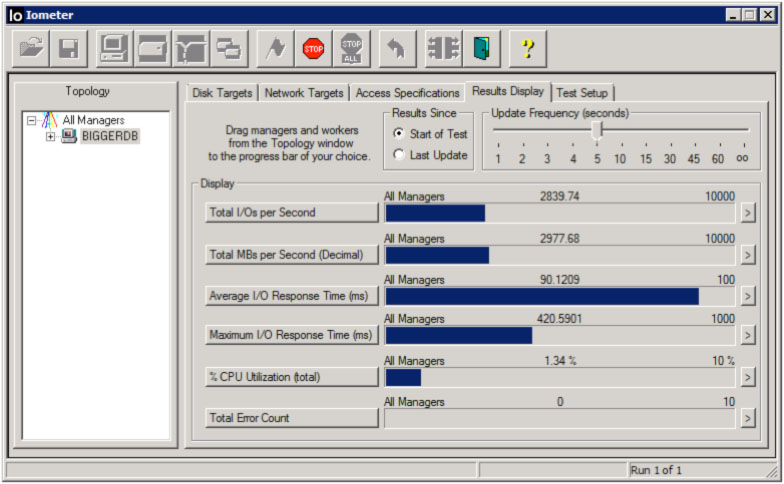

The LSI 9207 is specified to reach up to 700,000 IOPS (IO operations per second). Unfortunately, we aren’t able to verify that number. Our Samsung 830 drives are rated at 80,000 IOPS, but in real-world benchmarks using 4KB transfers they deliver around 50,000. Eight such drives should be good for about 400,000 IOPS, nowhere near enough to saturate the LSI 9207. In fact, our testing hits a ceiling at just over 361,000 IOPS on the Intel Xeon and 343,000 IOPS on the AMD. All we can say is that the new card has not lost any ground relative to the 9200 in IOPS performance. It would take faster SSD drives to test any further.
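The drive-side ceiling is easy to tally. This sketch uses only the figures quoted in the paragraph above: about 50,000 real-world 4KB IOPS per Samsung 830, eight drives, the 700,000 IOPS rating, and our measured results. The 361,000 figure is treated as a round lower bound.

```python
DRIVES = 8
REAL_WORLD_IOPS_PER_DRIVE = 50_000  # 4KB figure quoted for the Samsung 830
CARD_SPEC_IOPS = 700_000            # LSI's rating for the 9207
measured = {"Intel Xeon E5": 361_000, "AMD Opteron": 343_000}

# Best case if every drive delivered its real-world 4KB rate at once.
drive_ceiling = DRIVES * REAL_WORLD_IOPS_PER_DRIVE
print(f"drive-limited ceiling: {drive_ceiling:,} IOPS "
      f"({drive_ceiling / CARD_SPEC_IOPS:.0%} of the card's rating)")
for platform, iops in measured.items():
    print(f"{platform}: {iops:,} IOPS = {iops / drive_ceiling:.1%} "
          f"of the drive ceiling")
```

The drives top out at roughly 57 percent of the card's rating. Our measured results land at about 86 to 90 percent of that drive-side ceiling, so the card is not the bottleneck here.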

Here are the dual Intel Xeon E5 results:

Great results. The quad AMD Opteron showed fairly similar figures, though the Intel system does show slightly better performance.

Running the LSI SAS 9207-8e in an x4 Slot

Some users may be interested in running the LSI 9207, a PCIe3 x8 HBA, in a PCIe3 x4 slot. In this configuration, the LSI SAS 9207-8e performs surprisingly well, with throughput near 3,000 MB/Second and no change in maximum IOPS as measured by our setup. By contrast, a 9200-8e, which is a PCIe2 card, delivers less than 1,500 MB/Second in a PCIe2 x4 slot and cannot keep up with even three top-tier SSD drives.

IOPS in the PCIe3 x4 slot is essentially unchanged at 364,000.
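The x4 results line up with the raw link rates. This sketch assumes about 500 MB/Second for a top-tier SATA3 SSD streaming reads. That figure is for illustration and is not a measurement from this article. The observed inputs are the approximate throughput figures quoted above, and the helper name is illustrative.

```python
def link_mb_s(transfers_per_s: float, efficiency: float, lanes: int) -> float:
    """Raw per-direction PCIe bandwidth in decimal MB/s."""
    return transfers_per_s * efficiency * lanes / 8 / 1e6

PCIE2_X4 = link_mb_s(5e9, 8 / 10, 4)     # 2,000 MB/s raw
PCIE3_X4 = link_mb_s(8e9, 128 / 130, 4)  # ~3,938 MB/s raw
SSD_MB_S = 500  # assumption: one top-tier SATA3 SSD streaming reads

for name, raw, observed in (("PCIe2 x4 (9200-8e)", PCIE2_X4, 1500),
                            ("PCIe3 x4 (9207-8e)", PCIE3_X4, 3000)):
    print(f"{name}: raw {raw:,.0f} MB/s, observed ~{observed:,} MB/s, "
          f"about {observed / SSD_MB_S:.0f} SSDs' worth")
```

A PCIe2 x4 link tops out at 2,000 MB/Second raw, while PCIe3 x4 offers about 3,938 MB/Second, roughly double. That is why the 9207-8e still delivers near 3,000 MB/Second in the narrower slot.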

Conclusion

Until the release of the LSI SAS 9207-8e, there were no host bus adapters able to keep up with a full set of eight SSD drives. The LSI SAS 9207-8e changes that equation: Now there are no eight SSD drives able to keep up with the LSI SAS 9207-8e.

If you need to connect eight, or even as few as six, SSD drives to a PCIe3 server, buy the LSI SAS 9207-8e now. It’s the fastest x8 PCIe host bus adapter I’ve tested and the second-fastest overall, behind only the LSI SAS 9202-16e with its dual controllers and x16 interface. The LSI SAS 9207-8e is definitely worth the $50 premium over the SAS 2008-based LSI 9200 and 9205 cards. Few applications will need the increased IOPS, but the improved throughput will be welcome news to many.

On the other hand, users with PCIe2 servers will see little to no improvement by switching from a 9200 or 9205 card, and unless you attach more than four SSD drives, you don’t need more throughput anyway. That said, most corporate users will probably spend the extra $50 on the LSI SAS 9207-8e as inexpensive future-proofing. That is a decision I can agree with.

Super duper performance.

Do you have a benchmark IOmeter profile to download? Would like to use something similar.

I don’t have a configuration to share, but my settings are all outlined in the article. If not mentioned, I’m using the default values in IOMeter.

That is thorough. Wonder why AMD and Intel are different by so much.

Nice performance there. Great to see how these perform. And that there is a site that actually looks at LSI cards.

Anyone get this card to work in Windows Server 2012? LSI SAS 9207-8i

Have you had a chance to use more than one of these cards in a server at one time? I am running into an issue when using the 9207-8i and 9207-8e in the same server at once. It sees them at the BIOS level, but the server hangs on boot before it is able to access the drives.

I am going to open a case both with LSI and the distributor we bought them from to get some support, because it seems like a basic hardware issue. Strangely, I have not been able to access the LSI website for the last month or so.

was just going to ask a question but then read previous comments …Rolf: sure don’t mean to patronize you but have you checked all the boot options (in their BIOS) on both cards? .. i’m (still) running an Oct 2007 dual Opteron box that has two LSI SAS controllers in it and i had same problem until i made sure only one card was set to boot from, and then explicitly turned off that option on the 2nd HBA … again, no insult intended if this is too dumb a suggestion … BUT my, perhaps dumb, question is… in my old box i run all 15K Seagate Cheetahs, no RAID (so have drives C: – L:) and on my new box am interested in running SSDs and my question, based on this review, is this: by JBOD, does that mean that i can have e.g., 8 x 128gb SSDs that the OS sees as (roughly) a single 960GB that will get the read performance mentioned in this article (or, perhaps 2 x 4-drive JBODs, etc.)…i guess the complementary query is this: is JBOD basically as fast as RAID 0 but w/o any striping such that if one drive goes bad you just lose stuff on that drive and not the entire array … TIA for any advice