Performance and Power Impact

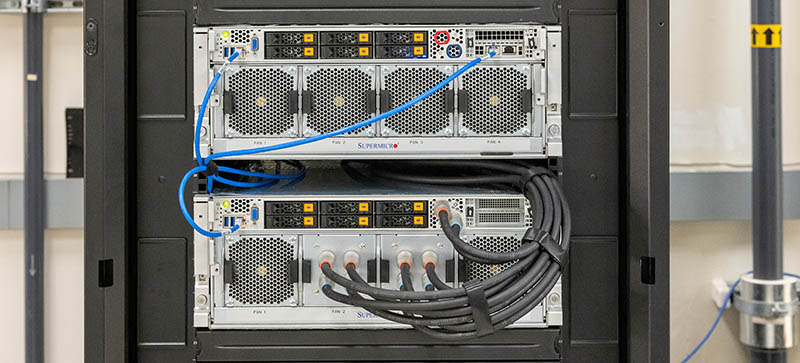

There are two main areas that we are going to focus on here, performance and power. The systems are effectively the same except for some miscellaneous network cards that are either not hooked up to transceivers or will not have an appreciable impact. These dual AMD EPYC 7713 systems are as close as we could reasonably get. That allowed us to issue the same commands to both systems with the differences just being the GPUs and cooling.

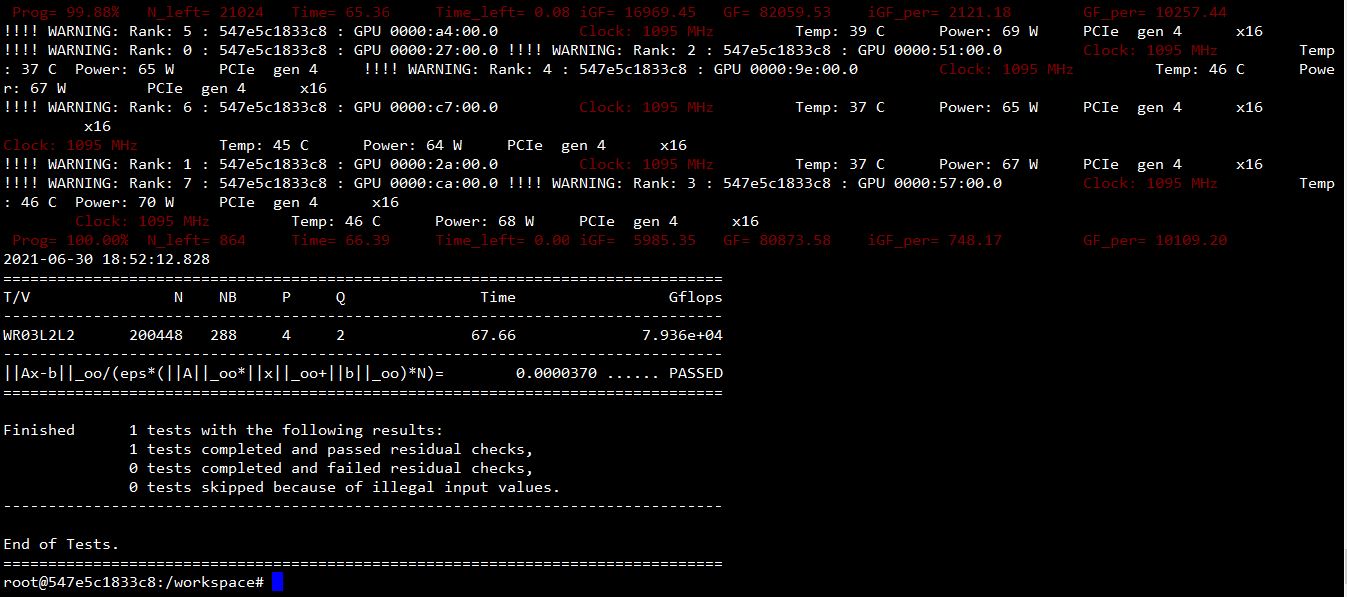

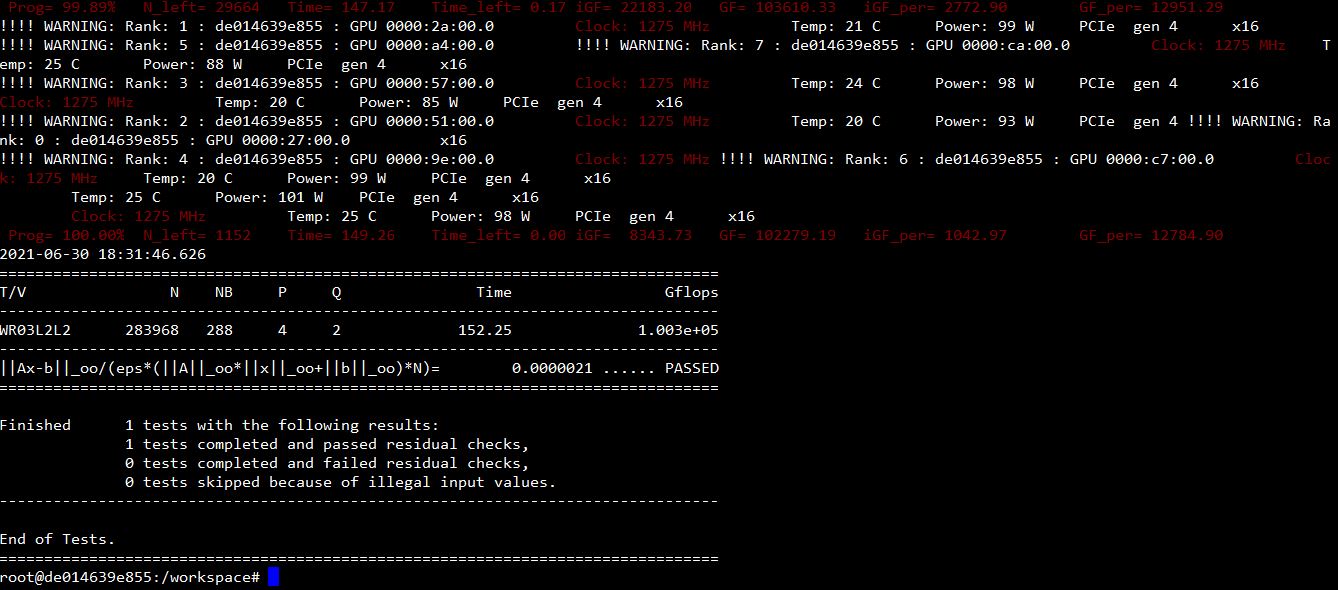

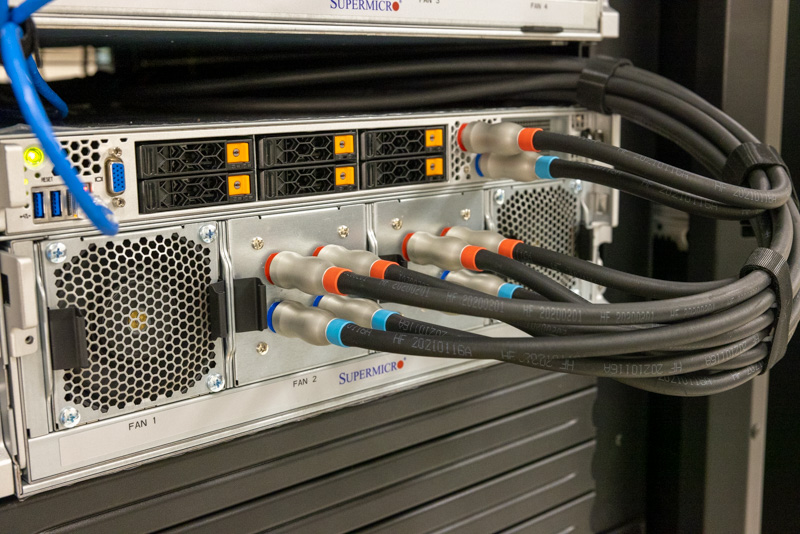

First, we ran HPL from the NVIDIA NGC repository. During the runs, we confirmed that the 400W GPUs were running at around 400W each and the 500W GPUs were pulling around 500W each. With eight GPUs, that meant we had ~3.2kW of GPUs on the air-cooled system and ~4kW of GPUs on the liquid-cooled system, so the liquid-cooled GPUs were indeed pulling a combined total of 800W more.

The performance numbers came out and were a bit shocking: we got around 100TFLOPS on the 500W system and around 80TFLOPS on the 400W system. This is likely due, in part, to the 80GB memory configuration on the 500W SXM modules. That is roughly 20% better performance. We did not expect the delta to be that big. For many buyers, getting 20% more performance on GPUs using 25% more power is perfectly reasonable. That, however, was only part of the story.
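As a quick sanity check on those ratios, here is a back-of-the-envelope sketch using only the rounded figures quoted above ("around 80TFLOPS" and "around 100TFLOPS"). The exact measured HPL results are not given, so the variable names and inputs are assumptions for illustration. With these rounded inputs the gain works out closer to 25% than 20%, which suggests the unrounded results sat somewhat closer together.

```python
def percent_increase(baseline: float, new: float) -> float:
    """Return the increase from baseline to new as a percentage of baseline."""
    return (new - baseline) / baseline * 100.0


# Rounded figures from the text; these are approximations, not exact results.
hpl_tflops_400w = 80.0
hpl_tflops_500w = 100.0

# Observed per-GPU power draw (about 400W and 500W) across eight GPUs.
gpu_power_400w = 8 * 400
gpu_power_500w = 8 * 500

perf_gain = percent_increase(hpl_tflops_400w, hpl_tflops_500w)
gpu_power_gain = percent_increase(gpu_power_400w, gpu_power_500w)

print(f"HPL gain (rounded inputs): {perf_gain:.1f}%")
print(f"GPU power gain:            {gpu_power_gain:.1f}%")
```

The baseline matters here: a 20TFLOPS gain is 25% of the 80TFLOPS system but 20% of the 100TFLOPS system.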

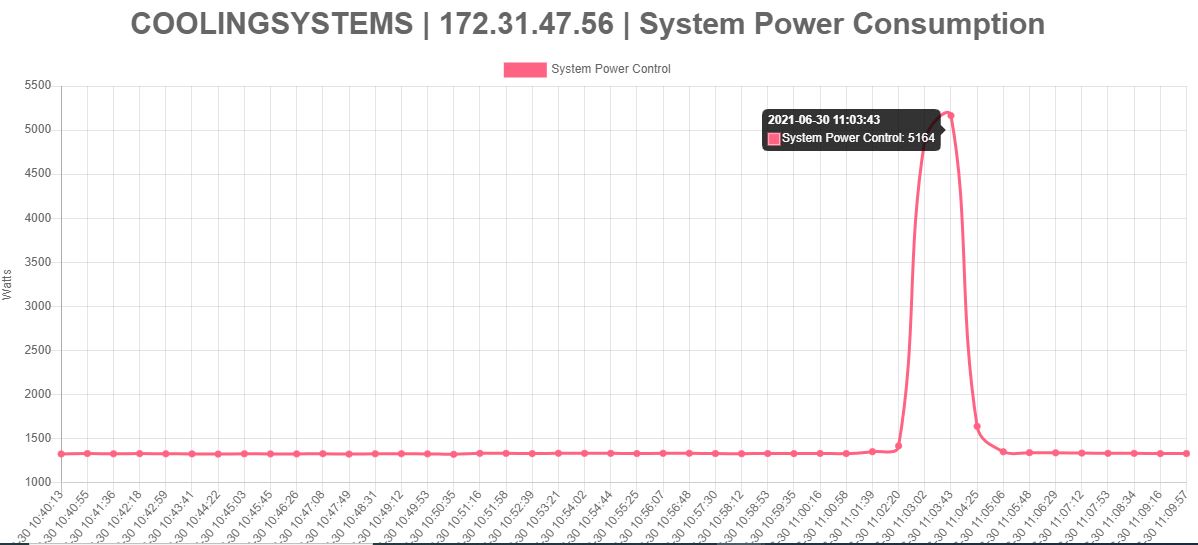

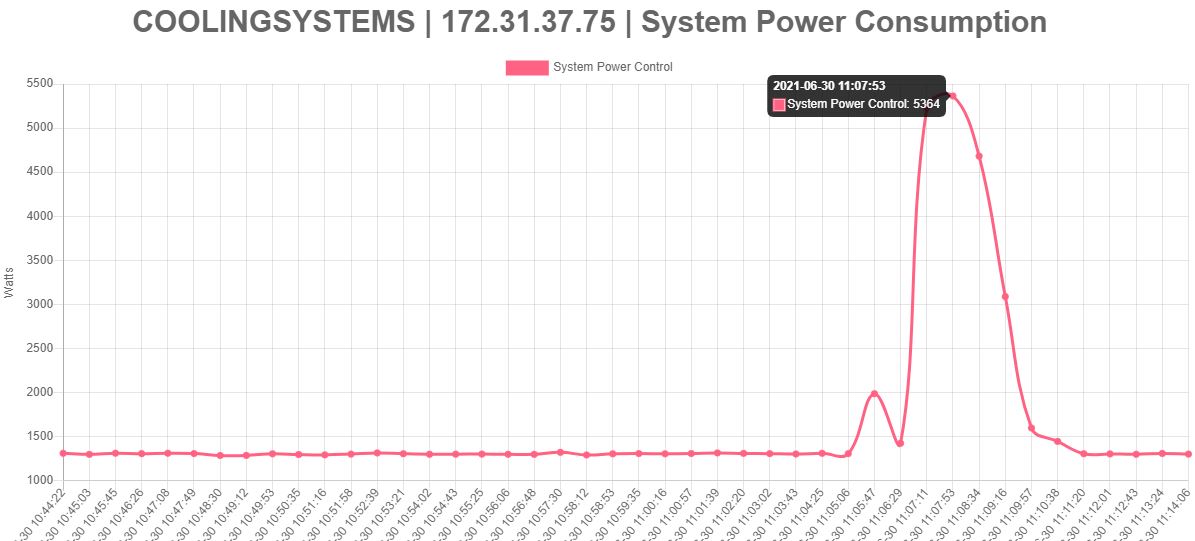

Taking a quick look at the power consumption during the runs, we could see a notable delta.

- Air-cooled 8x NVIDIA A100 40GB 400W: 5164W

- Liquid-cooled 8x NVIDIA A100 80GB 500W: 5364W

We effectively only saw a 200W difference between the systems running Linpack.

At this point, you may be wondering what is going on. We watched the eight GPUs consume 100W more each during the runs, but instead of an 800W delta, we saw only a 200W delta. The answer is perhaps the coolest part: it is liquid cooling.

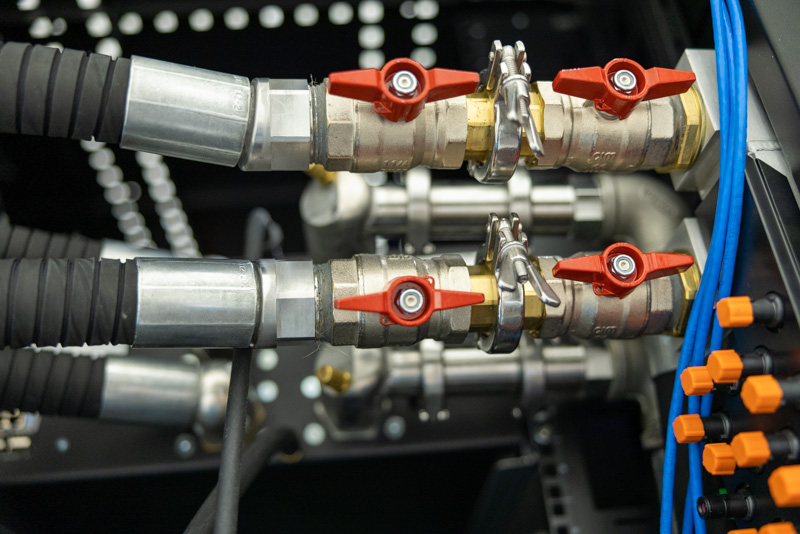

What was actually happening here is that the chassis fans were not spinning up to cool the system. At a server level, that means that even with 800W more electrical load from the GPUs, we saw a net increase of only 200W in power consumption.
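The arithmetic behind that observation can be sketched as follows. It uses the server power readings and per-GPU draw reported in this article. Attributing the entire offset to chassis fans is an assumption, since we did not measure the fans separately and other components could contribute.

```python
GPU_COUNT = 8

# Per-GPU draw observed during the HPL runs (approximate).
air_gpu_watts = 400
liquid_gpu_watts = 500

# Server-level power readings during the Linpack runs.
air_server_watts = 5164
liquid_server_watts = 5364

# Extra electrical load expected from the GPUs alone.
expected_gpu_delta = GPU_COUNT * (liquid_gpu_watts - air_gpu_watts)

# Extra power actually observed at the server level.
observed_server_delta = liquid_server_watts - air_server_watts

# Power that the liquid-cooled server is NOT spending elsewhere.
# Assumption: mostly chassis fans that no longer need to spin up.
implied_non_gpu_savings = expected_gpu_delta - observed_server_delta

print(f"Expected GPU delta:      {expected_gpu_delta}W")
print(f"Observed server delta:   {observed_server_delta}W")
print(f"Implied non-GPU savings: {implied_non_gpu_savings}W")
```

In other words, roughly three quarters of the additional GPU power was offset elsewhere in the liquid-cooled chassis.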

This is a figure that many vendors will show, but it is also a bit misleading. In the rack, there is an Asetek cooling unit, along with pumps, that uses power as well. This setup also uses water coming in from the building, so there are resources being used outside of the servers. One could, of course, argue that the air-cooled system requires air conditioning in the room or a rear heat exchanger to keep the ambient temperatures reasonable, and that requires power as well.

All told, this is exactly the type of benefit folks talk about with liquid cooling. At a server level, we managed to get approximately 20% more performance for less than 4% more power consumption. The GPUs cost a bit more (we were not given firm pricing, but NVIDIA does charge a premium), and liquid cooling costs more as well. Still, getting that extra performance in a system means we need fewer servers, NICs, switch ports, and so forth, which leads to massive cost savings. On a $100,000 server, getting 20% better performance equates to around $20,000 in benefit, which pays for a lot of quick-connect fittings.
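To put the server-level numbers in one place, here is a minimal sketch of performance per watt and of how many servers a cluster would need. It uses the rounded HPL figures and the measured server power from this article. The 1,000 TFLOPS cluster target is purely hypothetical, and the calculation ignores the rack-level cooling unit, pumps, and facility power discussed above.

```python
import math

# Rounded HPL results (TFLOPS) and measured server power (W) from the article.
air = {"tflops": 80.0, "watts": 5164}
liquid = {"tflops": 100.0, "watts": 5364}


def gflops_per_watt(system: dict) -> float:
    """Server-level efficiency in GFLOPS per watt."""
    return system["tflops"] * 1000.0 / system["watts"]


# Relative change in server power and in efficiency.
server_power_increase_pct = (liquid["watts"] - air["watts"]) / air["watts"] * 100.0
efficiency_ratio = gflops_per_watt(liquid) / gflops_per_watt(air)

# Hypothetical aggregate HPL target, chosen only for illustration.
target_tflops = 1000.0
air_servers = math.ceil(target_tflops / air["tflops"])
liquid_servers = math.ceil(target_tflops / liquid["tflops"])

print(f"Server power increase: {server_power_increase_pct:.2f}%")
print(f"Air-cooled:    {gflops_per_watt(air):.2f} GFLOPS/W")
print(f"Liquid-cooled: {gflops_per_watt(liquid):.2f} GFLOPS/W")
print(f"Efficiency ratio: {efficiency_ratio:.2f}x")
print(f"Servers for {target_tflops:.0f} TFLOPS: {air_servers} air vs {liquid_servers} liquid")
```

Every server removed from that count also removes its NICs, switch ports, and rack space, which is where the cost savings compound.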

Final Words

There are a lot of folks hesitant about liquid cooling for obvious reasons. At the same time, liquid cooling benefits are already here today, offering gains like the 20% better Linpack performance for a ~3.9% power increase.

As chips use more power and generate more heat in 2022, liquid cooling will be required for an incrementally greater portion of the server market.

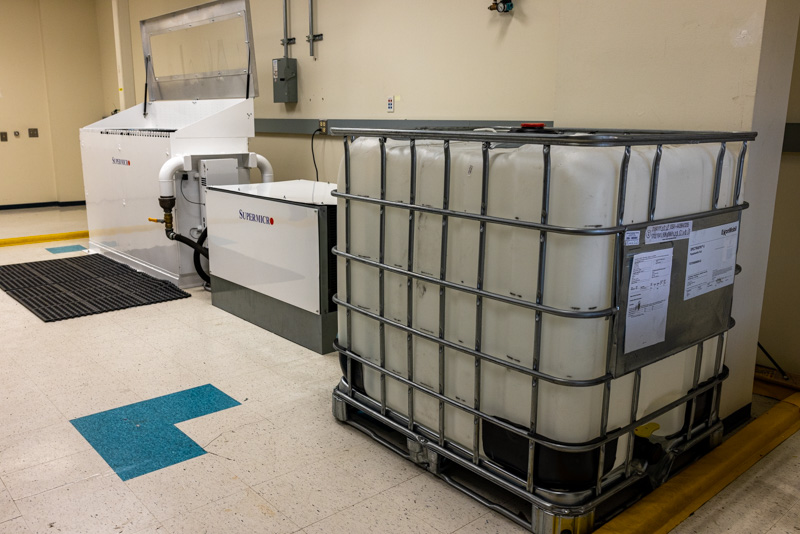

The fact is that adding liquid cooling to a data center or even just servers is not a trivial task. Each facility is different and environments are different as well based on physical location. As a result, if you want to cool top-end gear that will arrive in 2022 and beyond, you need to be thinking about that today.

I just wanted to say thank you again to Supermicro for helping me do this article and getting the lab set up for this testing. If you are looking at liquid cooling in the future, they have been providing these solutions to large customers for years and we highlighted some of both the older and newer generations in this article. They now have an entire practice set up to help customers transition to different types of liquid cooling.

For our readers, our hope is that this gives some tangible independent data on the impact of liquid cooling. It is a big project to add newer cooling to infrastructure, but it is also something that those looking to deploy high-end 2022 and beyond solutions will need to utilize. Indeed, this was the first time we could show the benefit since we had access to the top-end NVIDIA A100 80GB 500W GPUs.

The kiddies on desktop showed the way.

If data centers want their systems to be cool, just add RGB all over.

I’m surprised to see that Fluorinert® is still being used, given its GWP and direct health issues.

There are also likely indirect health issues for any coolant that relies on non-native halogen compounds. They really need to be checked for long-term thyroid effects (and not just via TSH), as well as both short-term and long term human microbiome effects (and the human race doesn’t presently know enough to have confidence).

Photonics can’t get here soon enough, otherwise, plan on a cooling tower for your cooling tower.

Going from 80TFLOP/s to 100TFLOP/s is actually a 25% increase, rather than the 20% mentioned several times. So it seems the Linpack performance scales perfectly with power consumption.

Very nice report.

Data center transition for us would be limited to the compute cluster, which is already managed by a separate team. But still, the procedures for monitoring a compute server liquid loop are going to take a bit of time before we become comfortable with them.

Great video. Your next-gen server series is just fire.

Would be interesting to know the temperature of the inflow vs. outflow. Is the warmed water used for something?

Hello

I am a tailor; I sew traditional pagnes (wrap cloths), etc.

I want to set up a workshop for the children of tomorrow.

Thank you very much.

Hi Patrick.

Liked your video Liquid Cooling High-End Servers Direct to Chip.

Have you seen ZutaCore’s innovative direct-on-chip, waterless, two-phase liquid cooling?

I will be happy to meet at the OCP Summit and give you the two-phase story.

regards,