Liquid Immersion Cooling

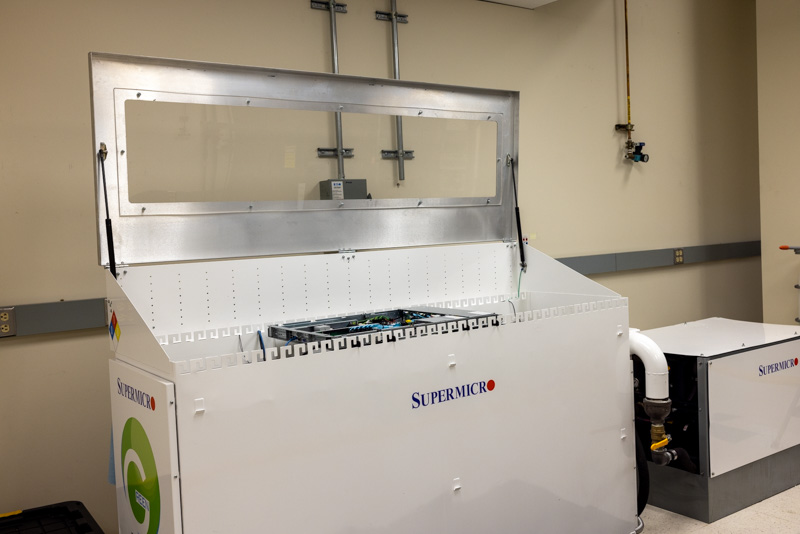

The first of the more invasive methods for cooling higher-TDP chip packages is liquid immersion cooling. The immersion tank in this room is an older design. Again, there are much newer immersion cooling tanks, but this one just happened to be in the room where the servers were.

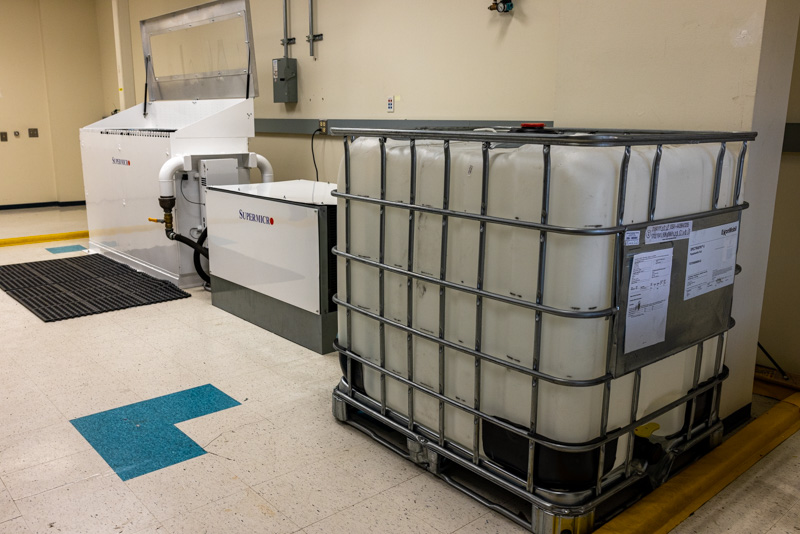

Liquid immersion cooling works by placing components in a tank or tub so that they are directly exposed to a liquid. There are a few key aspects here. First, the liquid itself must be non-conductive, and it must not corrode the components of the system. In this case, there was a large tank of ExxonMobil (the same company that makes gasoline for automobiles) fluid next to the setup. Whereas facility water could be used with the rear door heat exchanger setup, immersion cooling requires a specially formulated fluid. We also see 3M Fluorinert used quite often, and some immersion cooling manufacturers have their own formulations.

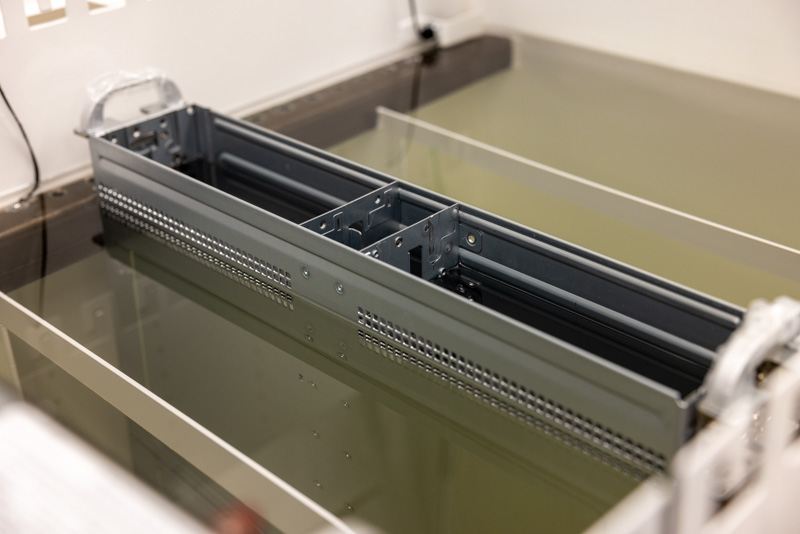

This fluid is placed into the tank or tub, and then components are placed into the fluid. Immersion cooling systems generally have some method to keep servers suspended and in place, since the fluid circulates and servers may still need servicing.

Often in production facilities like the TACC data center, we see ceiling hoists above the immersion cooling tanks to help lift servers out of the tanks vertically. Modern servers often weigh more than data center technicians, and they come out of the tank covered in fluid, so mechanical assistance is required to get servers out of the immersion tanks.

The liquid circulates in the tank and is heated by the components. Sometimes standard servers are used. More recently, custom PCBs and heatsinks have been designed for the flow of a denser liquid rather than air, increasing surface area and fluid flow. The liquid is then circulated via a pump assembly, and heat exchange can happen either to air or to facility water.
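To get a feel for what that pump assembly has to move, here is a rough sketch of the steady-state heat balance, heat load = mass flow × specific heat × temperature rise. This is a back-of-the-envelope illustration, not how any particular tank is sized. The function name, the 100 kW load, the 10 K temperature rise, and the dielectric fluid properties are all assumptions picked for illustration; only the water values are approximate textbook figures.

```python
def required_flow_lpm(heat_load_w, density_kg_m3, specific_heat_j_kg_k, delta_t_k):
    """Volumetric flow (litres/minute) needed to carry away heat_load_w
    when the fluid warms by delta_t_k between tank inlet and outlet."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    # Steady-state heat balance: Q = m_dot * c_p * dT, solved for m_dot.
    mass_flow_kg_s = heat_load_w / (specific_heat_j_kg_k * delta_t_k)
    volume_flow_m3_s = mass_flow_kg_s / density_kg_m3
    return volume_flow_m3_s * 1000.0 * 60.0  # m^3/s -> L/min


# Approximate textbook values for water near room temperature.
WATER = {"density_kg_m3": 997.0, "specific_heat_j_kg_k": 4180.0}

# Hypothetical placeholder for a dielectric fluid. These numbers are NOT
# from any datasheet; substitute the values for the fluid actually in use.
EXAMPLE_DIELECTRIC = {"density_kg_m3": 850.0, "specific_heat_j_kg_k": 2000.0}

for name, props in (("water", WATER), ("example dielectric", EXAMPLE_DIELECTRIC)):
    flow = required_flow_lpm(100_000.0, delta_t_k=10.0, **props)
    print(f"{name}: about {flow:.0f} L/min for 100 kW at a 10 K rise")
```

The takeaway is the shape of the relationship rather than the specific numbers: a fluid that holds less heat per litre than water needs proportionally more flow, or a larger temperature rise, to remove the same heat load.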

Here is the heat exchanger unit, where one can see the pumps. Again, this is just one design, and an older one that Supermicro used, but a lot of the key concepts carry over, which is why I am showing this detail.

This particular unit had a large radiator as well.

A quick note here is that the fluid choice is a big deal. Some fluids leave residue that is hard to get off. The fluids themselves cost a lot more than water, and certainly more than copper heatpipe coolers. Also, some fluids are toxic, which raises potential workplace safety concerns, especially if they evaporate and data center techs breathe in the fumes.

Finally, as one can probably tell from the TACC data center above, there is a big shift from having a vertical rack to a horizontal tank in terms of facilities. That, along with some of the cost components, is a big reason that liquid immersion cooling has seen slow adoption in mainstream data centers.

A major benefit of immersion cooling is that the cooling reaches the chip. If one recalls the rear door heat exchanger, that solved data center and rack cooling requirements but did nothing at the chip level. Liquid immersion cooling gets to the chip level. One other side benefit is noise. If you have ever been around an immersion tank, you know it can often cool 100kW+ of components while being nearly silent. A 100kW air-cooled rack, by contrast, is generally loud, with lots of vibration from spinning fans.

Next, we are going to take a look at direct-to-chip cooling before getting to the performance.

The kiddies on desktop showed the way.

If data centers want their systems to be cool, just add RGB all over.

I’m surprised to see the Fluorinert® is still being used, due to its GWP and direct health issues.

There are also likely indirect health issues for any coolant that relies on non-native halogen compounds. They really need to be checked for long-term thyroid effects (and not just via TSH), as well as both short-term and long term human microbiome effects (and the human race doesn’t presently know enough to have confidence).

Photonics can’t get here soon enough, otherwise, plan on a cooling tower for your cooling tower.

Going from 80TFLOP/s to 100TFLOP/s is actually a 25% increase, rather than the 20% mentioned several times. So it seems the Linpack performance scales perfectly with power consumption.
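For anyone who wants to check that arithmetic, here is a minimal Python sketch. The helper name `percent_change` is made up for illustration. The point is that a percentage change is always measured against the starting value, so the same 20 TFLOP/s gap is a 25% gain going up from 80 but a 20% drop coming down from 100.

```python
def percent_change(old, new):
    """Percentage change from old to new, relative to the old value."""
    return 100.0 * (new - old) / old


# The 80 and 100 TFLOP/s figures are the ones quoted in the comment above.
print(percent_change(80.0, 100.0))   # increase, measured against 80
print(percent_change(100.0, 80.0))   # decrease, measured against 100
```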

Very nice report.

Data center transition for us would be limited to the compute cluster, which is already managed by a separate team. But still, the procedures for monitoring a compute server liquid loop are going to take a bit of time before we become comfortable with them.

Great video. Your next-gen server series is just fire.

Would be interesting to know the temperature of the inflow vs. outflow. Is the warmed water used for something?

Hello

I am a tailor; I sew traditional pagnes, etc…

I want to set up a workshop for the children of tomorrow.

Thank you very much.

Hi Patrick.

Liked your video Liquid Cooling High-End Servers Direct to Chip.

Have you seen ZutaCore’s innovative direct-on-chip, waterless, two-phase liquid cooling?

I will be happy to meet at the OCP Summit and give you the two-phase story.

regards,