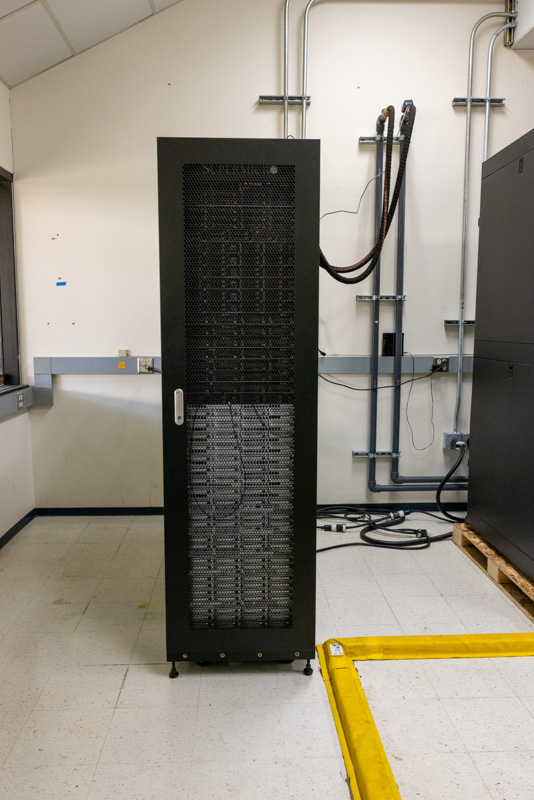

Rear Door Heat Exchanger

The first option we are going to look at is the rear door heat exchanger. This is an older version of what one may see deployed today, but it was next to the GPU systems, so I took some photos of it.

The solution uses a rack of servers in an as-is configuration. Liquid (in this case, water) is piped in from a facility feed to a large radiator. The radiator is about the size of an entire rack and covers the rear door.

There are large fans that sit at the back of the rack to pull air through the radiator. While the servers still have internal fans, these large fans are needed to get optimal airflow across the radiator.

Hot exhaust air is expelled through the rear of the servers and drawn into the radiator. The radiator removes heat from the exhaust air and returns cooler air to the data center. The heated water is then piped off the data center floor. A system of sensors monitors temperatures and helps to modulate flow as needed.
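As a rough illustration of the heat balance behind a rear door heat exchanger, the water loop has to carry away the rack's heat load, which follows Q = ṁ·c_p·ΔT. The sketch below assumes plain water (specific heat of about 4186 J/(kg·K), density of about 1 kg/L); the 30 kW rack load and 10 K temperature rise in the usage line are illustrative numbers, not measurements from the system pictured.

```python
# Minimal sketch: water flow needed to absorb a given rack heat load.
# Assumptions (not from the system shown): plain water, constant
# specific heat, and a density of 1 kg per litre.
WATER_CP_J_PER_KG_K = 4186.0  # approximate specific heat of water
WATER_DENSITY_KG_PER_L = 1.0  # approximate density of water


def required_flow_lpm(heat_load_w: float, delta_t_k: float) -> float:
    """Return water flow in litres per minute needed to remove heat_load_w
    watts with a supply-to-return temperature rise of delta_t_k kelvin.

    Rearranges Q = m_dot * c_p * delta_T into m_dot = Q / (c_p * delta_T).
    """
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    mass_flow_kg_s = heat_load_w / (WATER_CP_J_PER_KG_K * delta_t_k)
    litres_per_s = mass_flow_kg_s / WATER_DENSITY_KG_PER_L
    return litres_per_s * 60.0


if __name__ == "__main__":
    # Illustrative numbers only: a 30 kW rack and a 10 K water temperature rise.
    print(f"{required_flow_lpm(30_000, 10):.1f} L/min")
```

Because the required flow scales inversely with the allowed temperature rise, halving ΔT doubles the flow the loop must deliver.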

Something that was very cool is a leak detection sensor that runs around the piping. Most leaks do not happen in the middle of pipes; instead, they usually occur near fittings.

These sensors detect leaks and can trigger an emergency shut-off if a leak is detected.
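The leak detection behavior can be sketched as a simple polling check: if any sensor zone reports moisture, close the supply valve and keep it closed until an operator resets it. Everything here (the hypothetical `LeakMonitor` class, the zone names, and the single supply valve) is an assumption made only to illustrate the logic; it is not the vendor's control software or API.

```python
from dataclasses import dataclass, field


@dataclass
class LeakMonitor:
    """Hypothetical leak monitor for a liquid-cooled rack.

    Sensor zones are assumed to sit near pipe fittings, where most leaks
    occur. Once a leak is seen, the supply valve stays closed (latched)
    until an operator explicitly resets it.
    """
    valve_open: bool = True
    tripped_zones: list[str] = field(default_factory=list)

    def check(self, readings: dict[str, bool]) -> bool:
        """Process one poll of sensor readings (zone name -> leak detected).

        Returns True if the valve is still open after this check.
        """
        for zone in sorted(z for z, wet in readings.items() if wet):
            self.valve_open = False  # emergency shut-off
            if zone not in self.tripped_zones:
                self.tripped_zones.append(zone)
        return self.valve_open

    def reset(self) -> None:
        """Operator reset after the leak has been inspected and fixed."""
        self.tripped_zones.clear()
        self.valve_open = True


if __name__ == "__main__":
    monitor = LeakMonitor()
    print(monitor.check({"supply-fitting": False, "return-fitting": False}))
    print(monitor.check({"supply-fitting": False, "return-fitting": True}))
    print(monitor.check({"supply-fitting": False, "return-fitting": False}))
```

Latching the shut-off means a sensor that dries out does not silently reopen the valve; a person has to confirm the fitting is fixed first.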

This addresses the facility cooling and rack cooling challenges, but not necessarily the challenge of keeping the chips cool, since the servers used are still standard servers. Next, we are going to take a look at some of the solutions that cool the actual chips.

The kiddies on desktop showed the way.

If data centers want their systems to be cool, just add RGB all over.

I’m surprised to see that Fluorinert® is still being used, given its GWP (global warming potential) and direct health issues.

There are also likely indirect health issues for any coolant that relies on non-native halogen compounds. They really need to be checked for long-term thyroid effects (and not just via TSH), as well as both short-term and long-term effects on the human microbiome (and the human race doesn’t presently know enough to be confident).

Photonics can’t get here soon enough; otherwise, plan on a cooling tower for your cooling tower.

Going from 80 TFLOP/s to 100 TFLOP/s is actually a 25% increase, rather than the 20% mentioned several times. So it seems the Linpack performance scales perfectly with power consumption.
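The commenter's arithmetic is easy to check: a percentage change is measured against the starting value, so going from 80 to 100 TFLOP/s is a 25% increase, while 20% is the same 20 TFLOP/s gap measured against the larger figure (the drop going from 100 back to 80). A minimal sketch:

```python
def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new, measured relative to old."""
    return 100 * (new - old) / old


print(pct_change(80, 100))  # 25.0  (increase from 80 to 100 TFLOP/s)
print(pct_change(100, 80))  # -20.0 (decrease from 100 back to 80 TFLOP/s)
```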

Very nice report.

A data center transition for us would be limited to the compute cluster, which is already managed by a separate team. But still, the procedures for monitoring a compute server liquid loop are going to take a bit of time before we become comfortable with them.

Great video. Your next-gen server series is just fire.

It would be interesting to know the temperature of the inflow vs. the outflow. Is the warmed water used for anything?

Hello,

I am a tailor who sews traditional pagne wraps, etc.

I want to create a workshop for the children of tomorrow.

Thank you very much.

Hi Patrick.

I liked your video, Liquid Cooling High-End Servers Direct to Chip.

Have you seen ZutaCore’s innovative direct-on-chip, waterless, two-phase liquid cooling?

I would be happy to meet at the OCP Summit and give you the two-phase story.

Regards,