Lenovo ThinkSystem SR650 Performance

At STH, we have an extensive set of performance data from every major server CPU release. Running through our standard test suite generated over 1000 data points for each set of CPUs. We are cherry-picking a few to give some sense of CPU scaling. Given the CPU TIM contact we found, we used our own heatsinks and TIM with the system to get more reliable figures for each test.

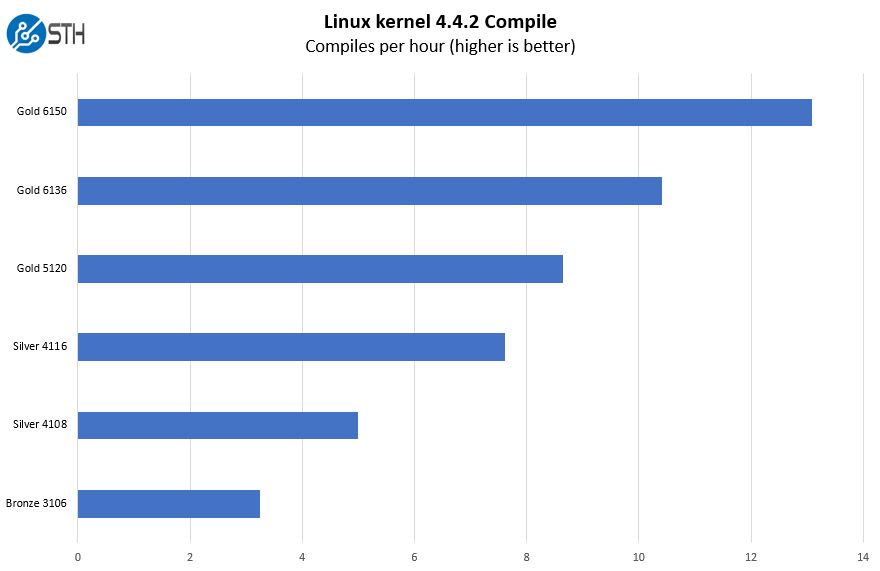

Python Linux 4.4.2 Kernel Compile Benchmark

This has been one of the most requested benchmarks for STH over the past few years. The task is simple: we take a standard configuration file and the Linux 4.4.2 kernel from kernel.org, then build the standard auto-generated configuration utilizing every thread in the system. We express results as compiles per hour to make them easier to read.
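As a rough illustration of the compiles per hour metric, here is a minimal sketch that converts the wall-clock time of one build into that figure. The function name and the timings are hypothetical and only show the arithmetic; they are not measured results from this review.

```python
def compiles_per_hour(compile_seconds: float) -> float:
    """Convert one kernel build's wall-clock time into compiles per hour."""
    if compile_seconds <= 0:
        raise ValueError("compile_seconds must be positive")
    return 3600.0 / compile_seconds


# Hypothetical build times in seconds, not measured results.
for seconds in (60.0, 120.0, 240.0):
    rate = compiles_per_hour(seconds)
    print(f"{seconds:6.1f} s per build -> {rate:5.1f} compiles/hour")
```

A higher number is better with this metric, which is why it is easier to read on a chart than raw build times.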

We wanted to show a range of results from the Intel Xeon Bronze to the Intel Xeon Gold range so that our readers can get some sense of the performance they gain by moving to higher-level SKUs.

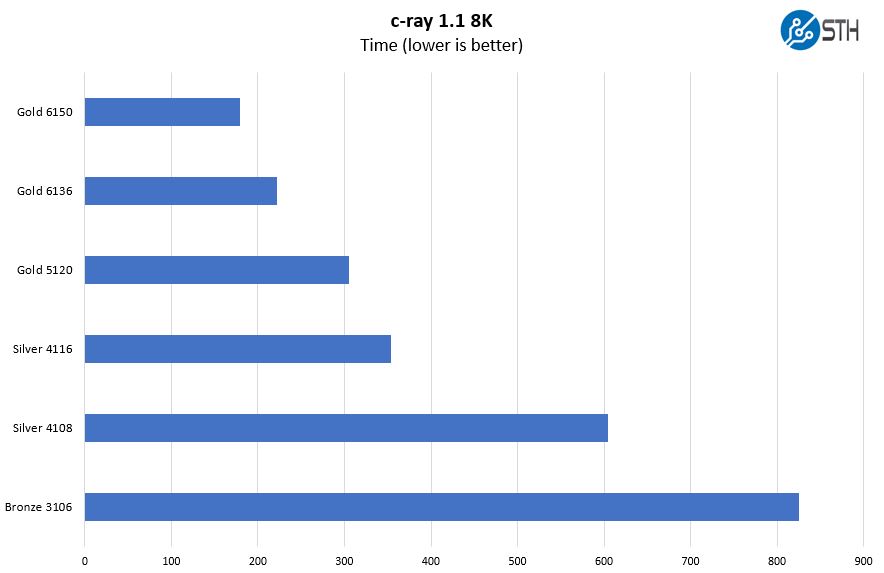

c-ray 1.1 Performance

We have been using c-ray for our performance testing for years now. It is a ray tracing benchmark that is extremely popular to show differences in processors under multi-threaded workloads. We are going to use our new Linux-Bench2 8K render to show differences.

A trend you will see is that the Intel Xeon Bronze 3106 CPUs are low on the performance scale. That means that, for a relatively low incremental cost, one can move to the Intel Xeon Silver 4108 and higher and get considerably more performance.

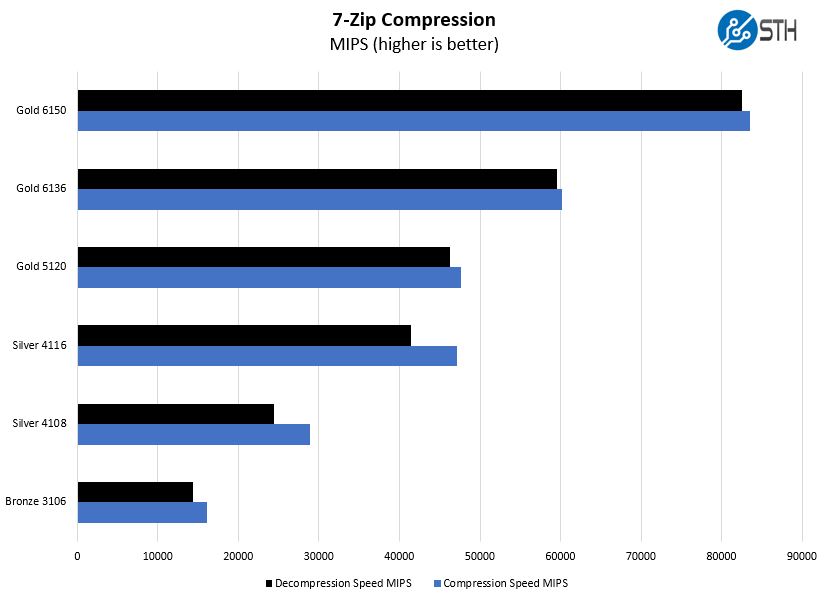

7-zip Compression Performance

7-zip is a widely used compression/decompression program that works cross-platform. We started using the program during our early days with Windows testing. It is now part of Linux-Bench.

For compression workloads, again popular in data centers, one can get 4-6x the performance of Intel Xeon Bronze by moving into the Intel Xeon Gold range. That is a massive speed increase.

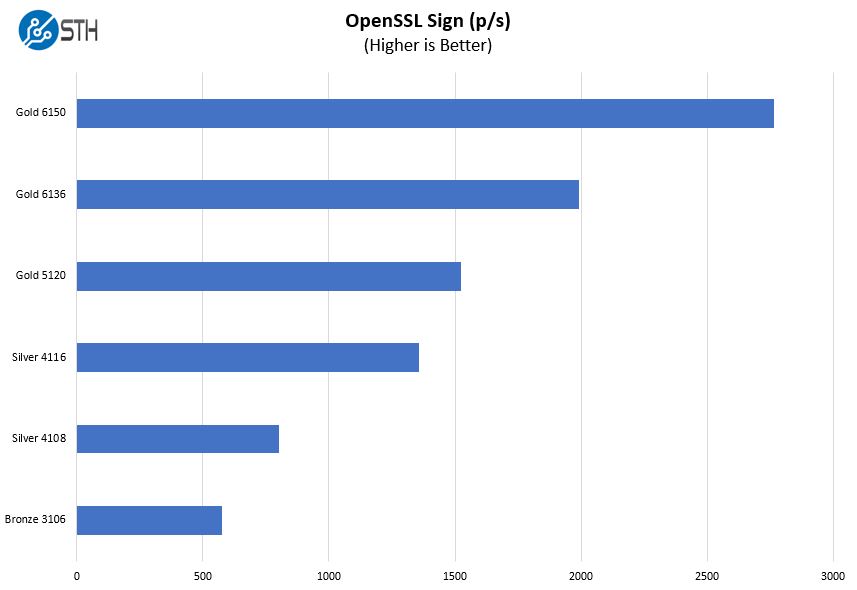

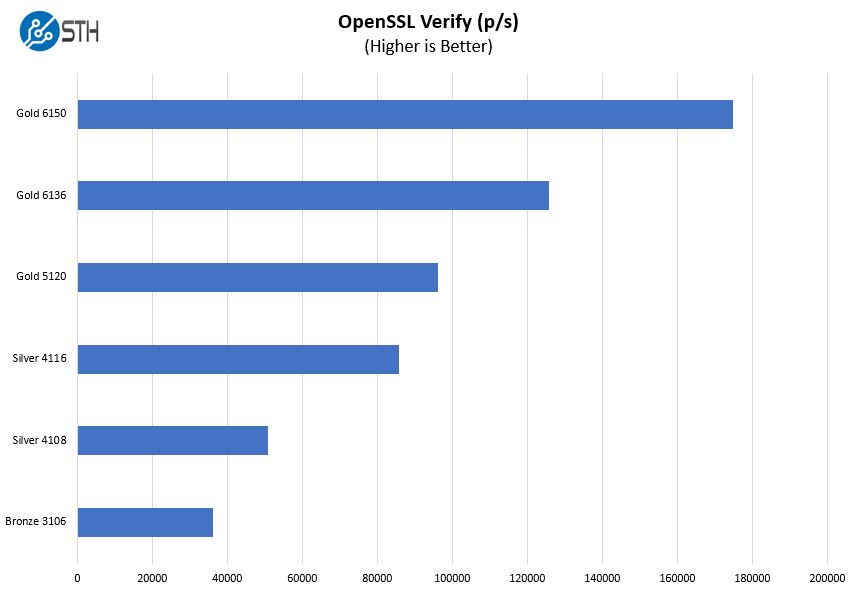

OpenSSL Performance

OpenSSL is widely used to secure communications between servers. This is an important component in many server stacks. We first look at our sign tests:

Here are the verify results:

As one can see, this is another test where performance scales well with cores and clock speeds.

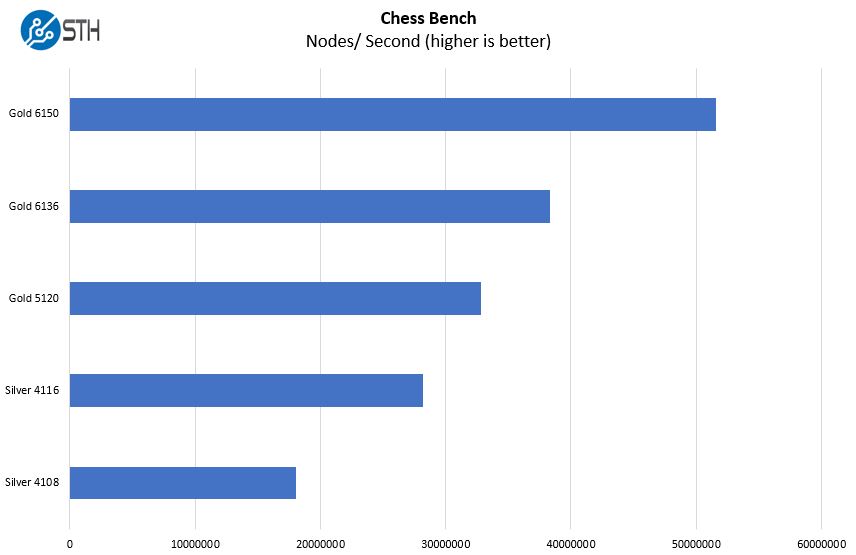

Chess Benchmarking

Chess is an interesting use case since it has almost unlimited complexity. Over the years, we have received requests to bring back chess benchmarking. We have been profiling systems and are ready to start sharing results:

For the chess and GROMACS tests (next), we did not use the Intel Xeon Bronze, since if you are running these computationally heavy workloads, you will not configure servers with Xeon Bronze.

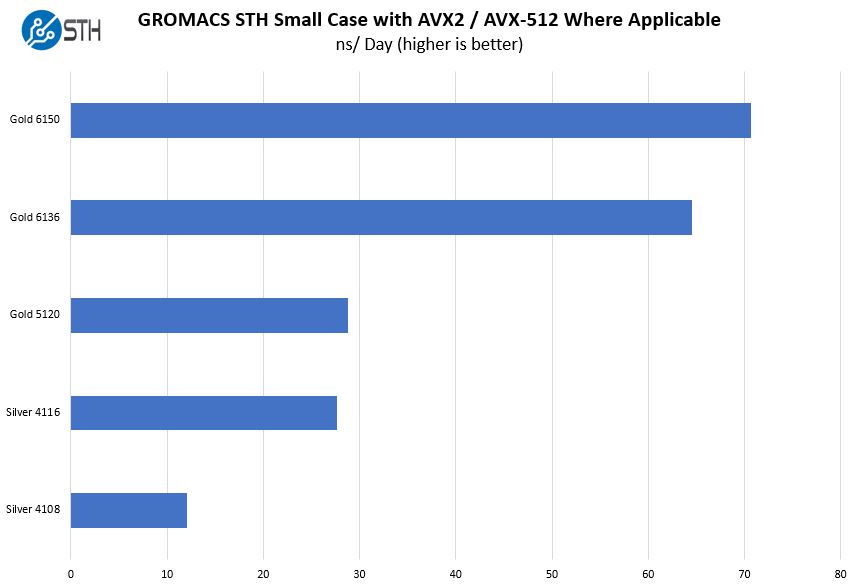

GROMACS STH Small AVX2 Enabled

We have a small GROMACS molecule simulation we previewed in the first AMD EPYC 7601 Linux benchmarks piece. In Linux-Bench2 we are using a “small” test for single and dual socket capable machines. Our GROMACS test will use the AVX-512 and AVX2 extensions if available.

AVX-512 is an Intel extension brought from supercomputers to mainstream CPUs. Higher-end Intel Xeon Gold 6100 and Platinum SKUs have dual-port FMA AVX-512. You can see the impact here as the Intel Xeon Gold 6136's lead over the Intel Xeon Gold 5120 increases to more than double the performance. That is the impact of the higher-end AVX-512 implementation. If your application relies on AVX-512, get Intel Xeon Gold 6100 or higher CPUs.
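To give a sense of why the number of AVX-512 FMA ports matters, here is a minimal sketch of the theoretical peak double-precision math. It assumes each FMA unit retires one fused multiply-add per 64-bit lane per cycle, which counts as two floating-point operations. The function names, core count, and clock speed are hypothetical placeholders rather than the specifications or measured AVX-512 clocks of the CPUs in this review.

```python
def peak_dp_flops_per_cycle(fma_units: int, vector_bits: int = 512) -> int:
    """Theoretical double-precision FLOPs per core per cycle."""
    lanes = vector_bits // 64      # 64-bit doubles per vector register
    return fma_units * lanes * 2   # an FMA counts as a multiply plus an add


def peak_dp_gflops(cores: int, clock_ghz: float, fma_units: int,
                   vector_bits: int = 512) -> float:
    """Theoretical peak GFLOPS for a whole CPU at a given sustained clock."""
    return cores * clock_ghz * peak_dp_flops_per_cycle(fma_units, vector_bits)


# Hypothetical 12-core part at a 2.0 GHz AVX-512 clock, with one vs. two FMA units.
single = peak_dp_gflops(cores=12, clock_ghz=2.0, fma_units=1)
dual = peak_dp_gflops(cores=12, clock_ghz=2.0, fma_units=2)
print(f"single FMA: {single:.0f} GFLOPS, dual FMA: {dual:.0f} GFLOPS")
```

With core count and clock held equal, the second FMA unit doubles the theoretical peak. Real workloads such as GROMACS will land below these ceilings, and differences in core counts and clocks shift the gap further.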

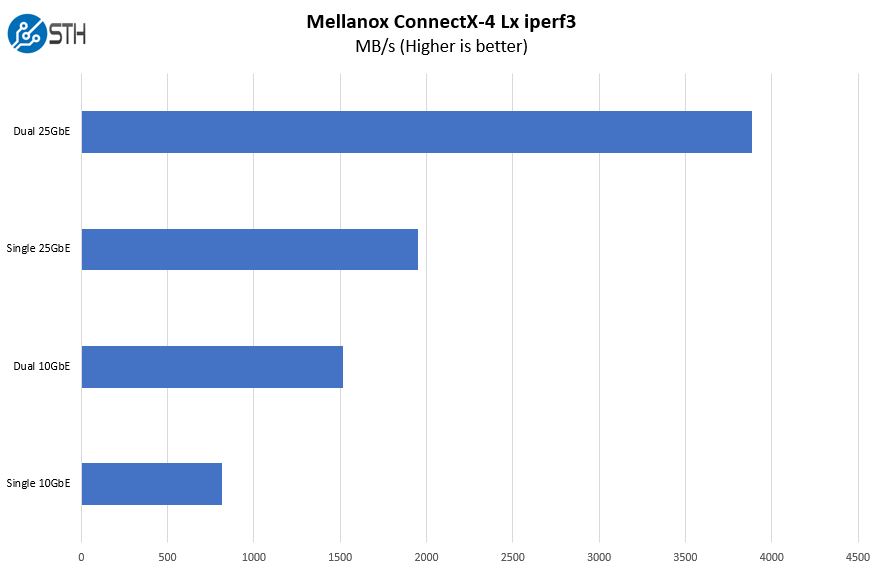

Lenovo ThinkSystem SR650 Network and Storage Performance

We used our Mellanox ConnectX-4 Lx card with our lab’s 10GbE and 25GbE networks. Here is what the basic performance looks like:

As you can see, 25GbE is the path we are recommending for new installations as it helps to maximize PCIe utilization.
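For a rough idea of what PCIe utilization means here, the sketch below compares Ethernet line rate against the usable bandwidth of a PCIe slot. It assumes a PCIe 3.0 x8 link (8 GT/s per lane with 128b/130b encoding) and a dual-port card. Both are assumptions for illustration, the function names are hypothetical, and the math ignores PCIe packet overhead.

```python
def pcie_usable_gbps(lanes: int, gt_per_s: float = 8.0,
                     encoding: float = 128 / 130) -> float:
    """Approximate usable PCIe bandwidth in Gbps, one direction."""
    return lanes * gt_per_s * encoding


def link_utilization(port_gbps: float, ports: int, lanes: int) -> float:
    """Fraction of the slot's bandwidth the NIC can fill at line rate."""
    return port_gbps * ports / pcie_usable_gbps(lanes)


# Assumed configuration: dual-port card in a PCIe 3.0 x8 slot.
for speed in (10.0, 25.0):
    share = link_utilization(port_gbps=speed, ports=2, lanes=8)
    print(f"2x {speed:.0f}GbE fills about {share:.0%} of a PCIe 3.0 x8 slot")
```

Under these assumptions, two 10GbE ports leave most of the slot's bandwidth idle, while two 25GbE ports use the bulk of it.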

Generally, in our reviews, we focus on storage performance as well. Since we had a single 480GB SATA boot drive, we did not push storage performance.

In the last part of our review, we are going to focus on power consumption and give our final thoughts on the platform.

dang, 9.2. you must have really hated this one!

I’m looking for a cheap software-defined-storage server that would run Red Hat Ceph. Would anyone recommend this or something similar for a private OpenStack cloud?

Are the connectors on the sata/sas backplane standard or proprietary?