The Lenovo HR650N is a 2U NVIDIA MGX platform that houses an NVIDIA Grace Superchip Arm processor. We saw one at NVIDIA GTC 2024. Since a few pieces are on hold while Patrick welcomes the newest STH team member, we went through the photos we had and figured we would show off this system.

Lenovo HR650N NVIDIA Grace Superchip 2U Server Shown

Taking a look at the server, there is a set of configurable bays up front. This server has 2.5″ drive bays. This configuration also has ConnectX-7 NICs and OCP NIC 3.0 cards. Notably absent, which is a bit strange for an NVIDIA-powered MGX system, is a full-height BlueField-3 DPU.

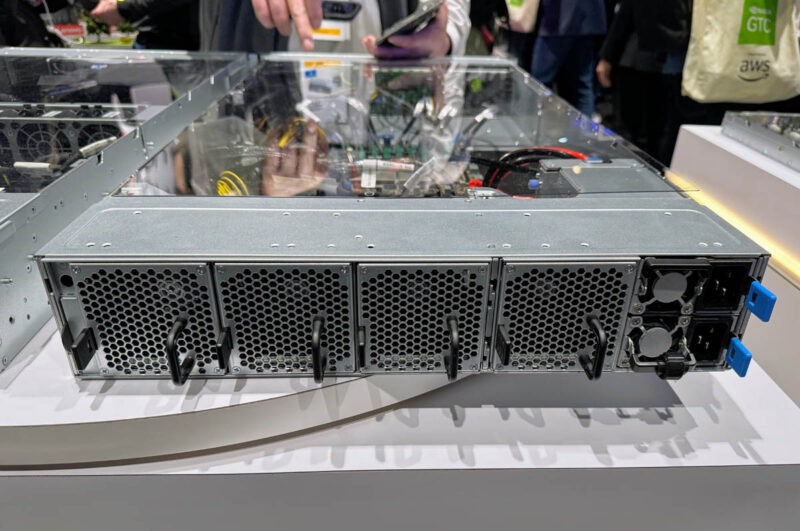

Looking at the back of the server, we see a lot of fans and power supplies.

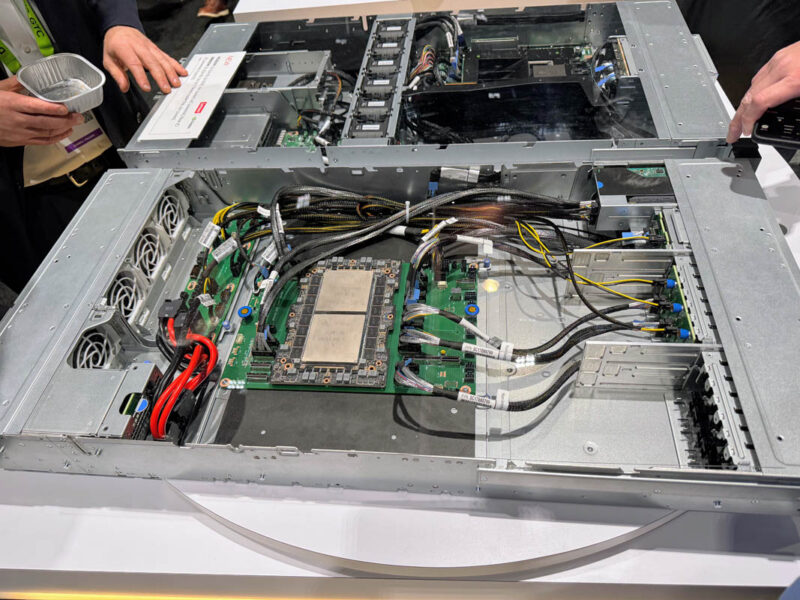

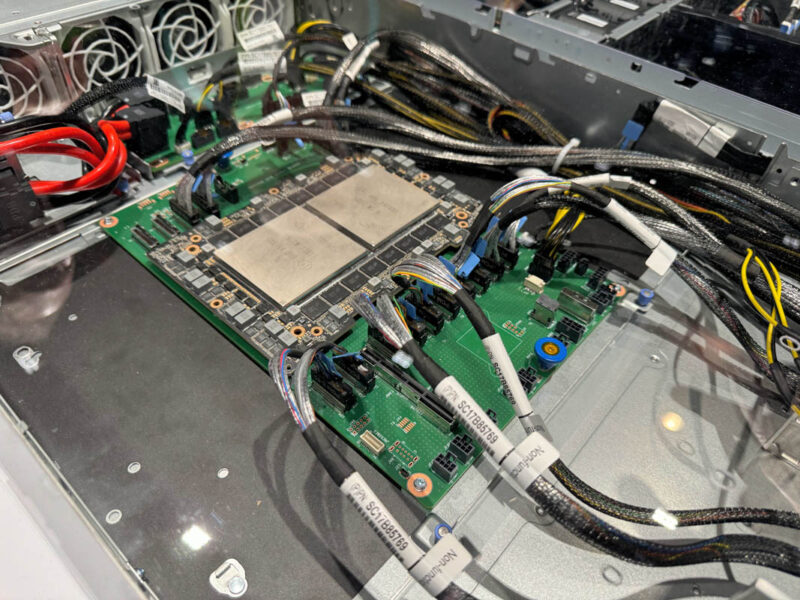

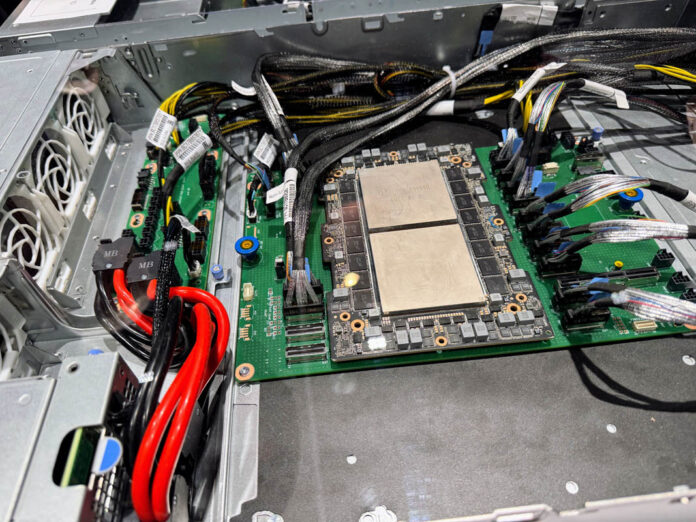

Inside the server, the main feature is the NVIDIA Grace Superchip. It offers 144 Arm Neoverse V2 cores and LPDDR5X memory in a ~500W TDP package. While 144 cores in that power envelope is already a lot, Intel's Sierra Forest at 144 and 288 cores will be a game changer for efficiency.

Some of the cool MGX platform features include the cabled PCIe Gen5 connectivity as well as the use of risers with BMCs.

Here, we can see a lot of open space. Some of it can be used for one of NVIDIA's future features. It can also be used to add a second Grace Superchip, making this a two-node chassis with a total of 288 cores.

Final Words

One of the really interesting market dynamics is that NVIDIA's MGX platform is taking away a lot of the differentiation that large legacy OEMs like Lenovo have had from one another. At the same time, NVIDIA has so much market leverage that those OEMs effectively have to use MGX. Still, it is cool to see a platform like this after Lenovo fell far behind in AI servers over the past few years. NVIDIA GTC 2024 was where Lenovo showed its new, competitive portfolio. Prior to the GTC 2024 announcements, Lenovo's main AI server was a China market-only model that it could not get GPUs to populate. The HR650N was Lenovo's Grace Superchip offering, but the company also had GH200, GB200, PCIe GPU, and HGX platforms at the show.

“Sierra Forest at 144 and 288 cores will be a game changer for efficiency”

Based on what? It’s going to use Crestmont cores, which are not revolutionary in any way – https://chipsandcheese.com/2024/05/13/meteor-lakes-e-cores-crestmont-makes-incremental-progress/

Not to mention that it's going to use 12 channels of DDR5 per socket, which takes a lot of power by itself.

I think it's wild how much technology is cycling at this point. We went to PCBs because the traces were shorter, which meant faster, easier signaling. Now we have cycled back to everything being wired together, because the PCBs can't handle the number of connections we need with good signal integrity.

Wonder how many years before someone comes up with the “new” idea of routing all the wiring on the PCB, because it’s easier? lol

Seriously though, there is a tiny SoC board in the middle, and everything else is wired. Also, why are companies no longer caring about wire routing and cleanup for good airflow?

James, that's because wire routing and cleanup never mattered that much; it's just an excuse to sell expensive cases and modular power supplies to PC gamers.

And this system doesn't have its heatsinks and airflow guides on, so you don't see the real airflow path. It's probably a lot cleaner when fully assembled.

Kyle – only part of what you have there is accurate. Hold on for a few more days.

Why is it a 2U?