Kioxia has been working on a technology that we have been following since the company was still called Toshiba: an SSD that is addressed over NVMe-oF using 25GbE. Now the Kioxia EM6 takes that drive from development to product.

Kioxia EM6 25GbE NVMe-oF SSD

The Kioxia EM6 25GbE NVMe-oF SSD uses the Marvell 88SN2400 controller. Perhaps the easiest way to think of this controller is that it speaks SSD on one end, while on the other it connects via 25GbE instead of a drive-specific interface such as SATA, SAS, or PCIe/NVMe.

Just for some historical perspective, here is the Toshiba (now Kioxia) test Ethernet SSD from Flash Memory Summit 2019.

This is the earlier version of the drive from 2018. Above, one can see the drive in an adapter board that splits out to two 25GbE interfaces. Below, we basically have an NVMe SSD with a 25GbE Marvell controller on an external PCB.

In terms of why one would want a 25GbE NVMe-oF SSD, the answer is fairly simple: a device like this can be addressed directly over the network. Instead of sitting behind a standard server, the drive can be attached directly to the network fabric.
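From a host's point of view, "addressed directly over the network" typically means connecting to the drive as an NVMe-oF target. The sketch below assumes a Linux host with the standard nvme-cli tool and uses the RDMA transport, which is what one would expect for a RoCEv2 drive. The IP address and NQN are hypothetical placeholders, not actual EM6 defaults, and the helper only builds the command rather than running it.

```python
import shlex
from typing import List

NVMF_DEFAULT_PORT = 4420  # IANA-assigned port for NVMe over Fabrics


def nvme_connect_cmd(traddr: str, nqn: str,
                     trsvcid: int = NVMF_DEFAULT_PORT,
                     transport: str = "rdma") -> List[str]:
    """Build an nvme-cli 'connect' command for one network-attached SSD.

    transport="rdma" covers RoCEv2 targets; traddr is the drive's IP address.
    """
    if transport not in ("rdma", "tcp"):
        raise ValueError(f"unsupported transport: {transport}")
    return [
        "nvme", "connect",
        f"--transport={transport}",
        f"--traddr={traddr}",
        f"--trsvcid={trsvcid}",
        f"--nqn={nqn}",
    ]


if __name__ == "__main__":
    # Hypothetical address and NQN for illustration only.
    cmd = nvme_connect_cmd("192.0.2.10", "nqn.2014-08.org.example:em6-drive-01")
    print(shlex.join(cmd))
    # To actually attach the drive (needs root and nvme-cli installed):
    #   import subprocess; subprocess.run(cmd, check=True)
```

Once connected, a Linux host would typically see the drive as a local `/dev/nvmeXnY` block device, with no server in between the drive and the fabric.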

Here is an example of such a flash box. Instead of a server complex inside, the chassis holding the drives contains a Marvell switch chip on each side. Each switch chip connects to the drives at the front of the chassis, while on the rear, 100GbE connections aggregate the drives up to the upstream switch.

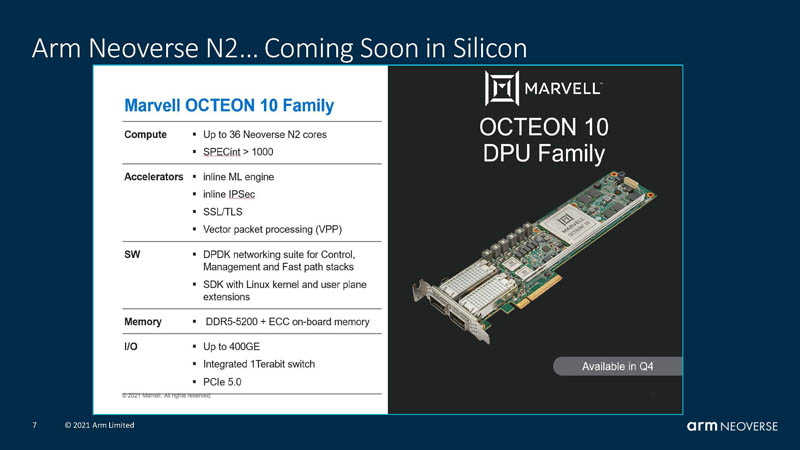
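Whether that rear-facing 100GbE aggregation becomes a bottleneck is simple arithmetic: compare the total drive-facing bandwidth on a switch chip with its uplink bandwidth. The article does not give drive or uplink counts, so the 24-drive, four-uplink configuration below is purely hypothetical, and the sketch ignores protocol overhead and dual-porting.

```python
from fractions import Fraction


def oversubscription(drive_count: int, drive_gbps: int,
                     uplink_count: int, uplink_gbps: int) -> Fraction:
    """Ratio of drive-facing bandwidth to uplink bandwidth on one switch chip.

    1 means the uplinks can carry every drive at full line rate at once;
    anything above 1 means the uplinks saturate first when all drives are busy.
    """
    downstream = drive_count * drive_gbps   # Gb/s toward the drives
    upstream = uplink_count * uplink_gbps   # Gb/s toward the network
    return Fraction(downstream, upstream)


# Hypothetical chassis: 24 drives with one 25GbE port each to this switch
# chip, and four 100GbE uplinks on the rear.
ratio = oversubscription(24, 25, 4, 100)
print(f"{ratio} ({float(ratio):.2f}:1)")
```

With those assumed numbers the result is 3/2, i.e. 1.5:1 oversubscription, which only matters when every drive streams at line rate simultaneously.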

Let us go another step into the future to see why this is a powerful solution. You may have seen our coverage of DPUs in pieces such as What is a DPU A Data Processing Unit Quick Primer and DPU vs SmartNIC and the STH NIC Continuum Framework. There are a number of different vendors out there, but let us use the Marvell Octeon 10 as an example.

Using these NVMe-oF SSDs, one can scale capacity by simply connecting more drives to the network fabric. DPUs can then allocate that capacity, perform erasure coding, and so forth, delivering storage to servers over the network.
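The simplest erasure code a DPU could apply across network-attached drives is a single XOR parity chunk (RAID-5 style). The sketch below uses that purely for illustration; which codes a given DPU actually implements (Reed-Solomon or otherwise) is not stated here, the function names are hypothetical, and a real system would also handle parity rotation, placement, and rebuild traffic.

```python
from functools import reduce
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length chunks."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode_stripe(data: bytes, k: int) -> List[bytes]:
    """Split data into k equal data chunks and append one XOR parity chunk.

    Each of the k + 1 chunks would be written to a different
    network-attached SSD (hypothetical layout for illustration).
    """
    if k < 1:
        raise ValueError("need at least one data chunk")
    chunk_len = max(1, -(-len(data) // k))  # ceiling division, at least 1 byte
    padded = data.ljust(chunk_len * k, b"\x00")
    chunks = [padded[i * chunk_len:(i + 1) * chunk_len] for i in range(k)]
    parity = reduce(xor_bytes, chunks)
    return chunks + [parity]


def rebuild(stripe: List[Optional[bytes]]) -> List[bytes]:
    """Recover at most one missing chunk (marked None) by XORing the survivors."""
    missing = [i for i, c in enumerate(stripe) if c is None]
    if len(missing) > 1:
        raise ValueError("single parity survives only one lost drive")
    restored = list(stripe)
    if missing:
        survivors = [c for c in stripe if c is not None]
        restored[missing[0]] = reduce(xor_bytes, survivors)
    return restored


if __name__ == "__main__":
    payload = b"hello network flash"
    stripe = encode_stripe(payload, k=4)   # 4 data + 1 parity = 5 drives
    stripe[2] = None                       # simulate losing the third drive
    recovered = rebuild(stripe)
    print(b"".join(recovered[:4])[:len(payload)])
```

With k = 4 the stripe spans five drives and tolerates the loss of any one of them, at the cost of one parity chunk for every four data chunks.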

Other key stats for the drives are that they come in a 2.5″ 15mm form factor, use RoCEv2, and are rated for 1 DWPD endurance in 3.84TB and 7.68TB capacities.
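DWPD (drive writes per day) converts to total write endurance with simple arithmetic: capacity × DWPD × days. The capacities and the 1 DWPD rating come from the spec above; the five-year period is an assumption, since no warranty term is given here.

```python
def daily_writes_tb(capacity_tb: float, dwpd: float) -> float:
    """Terabytes per day that the DWPD rating allows."""
    return capacity_tb * dwpd


def rated_writes_tb(capacity_tb: float, dwpd: float, years: float) -> float:
    """Total terabytes written over `years` at the full DWPD rating."""
    return daily_writes_tb(capacity_tb, dwpd) * 365 * years


ASSUMED_YEARS = 5  # assumption: the article does not state a warranty period

for capacity in (3.84, 7.68):  # EM6 capacities in TB, both rated at 1 DWPD
    print(f"{capacity} TB: {daily_writes_tb(capacity, 1):.2f} TB/day, "
          f"about {rated_writes_tb(capacity, 1, ASSUMED_YEARS):,.0f} TB over "
          f"{ASSUMED_YEARS} years")
```

Under that assumption, the two capacities work out to roughly 7 PB and 14 PB of rated writes respectively.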

Final Words

At STH, we have been patiently waiting since 2018 to get our hands on these. If there is ever an opportunity to stick an Octeon 10 (or one of the other DPUs we have) in front of these and show how NVMe-oF native SSDs can be used with server storage, you can believe we are going to jump on it. For now, it is just great to see this technology move from a rough demo to an actual product.

I’d be curious to see which way the presence of DPUs ends up pushing things in terms of putting drives directly on the network:

It certainly makes doing that more attractive, in that you can get better results than with just a basic NIC with iSCSI support; but it also makes possible DPU-based commodity-drive-to-network bridge systems (like the Fungible FS1600 you had an article on some time ago) that are basically the bare essentials of bridging a PCIe root loaded with NVMe devices to Ethernet, without depending on special drives or having to manage every single drive as an individual network device.

I’d imagine that it will come down substantially to how much it costs to add a 25GbE NIC and enough extra controller to handle NVMe-oF rather than just a PCIe interface, along with how the per-drive cost of the switched chassis compares to the per-drive cost of a slightly smarter chassis that implements the PCIe root and 100GbE interfaces but handles the intelligence for a bunch of basic NVMe drives.

Any idea how those compare?

So the ‘client’ compute element just sees these as block devices, right?