Traditional “Four Corners” Testing

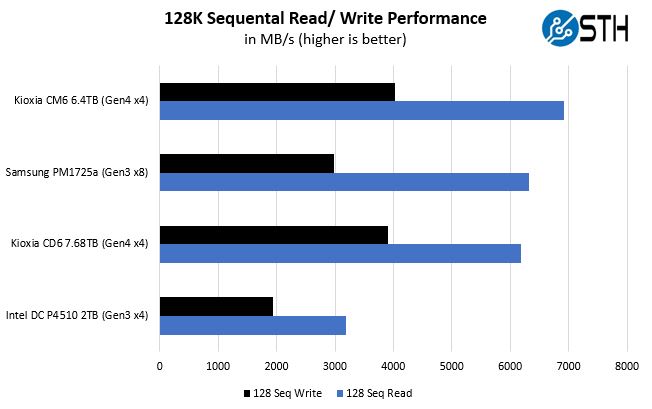

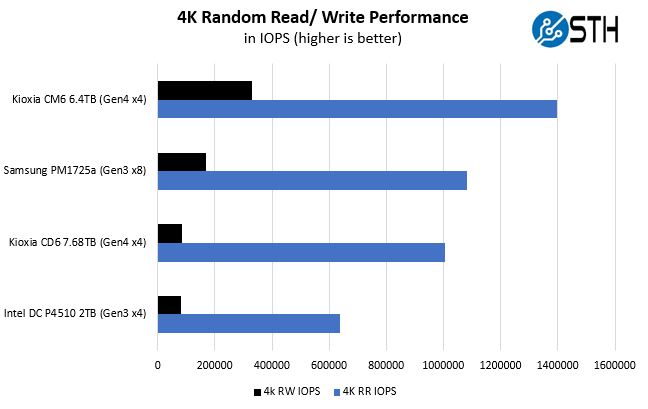

Our first test looked at sequential transfer rates and 4K random IOPS performance for the Kioxia CD6 7.68TB SSD. Please excuse the smaller-than-normal comparison set. If you need an explanation, see above as to why we are not using legacy Xeon Scalable platform results.

Here we can see what Kioxia means by this being a “read optimized” SSD. We get very good sequential performance. The one area of four corners testing that is lower is 4K random write, which makes sense given the target market. This drive is optimized for retrieving data rather than for the fast ingest of small pieces of data. We were a bit surprised that the sequential write numbers were high, but that aligns with the spec sheet.

When we compare to our baseline PCIe Gen3 drives, we can see that the closest competitor is a PCIe Gen3 x8 Samsung PM1725a 6.4TB AIC instead of a Gen3 x4 drive. This may not seem like a big deal, but using half the PCIe lanes matters in many applications. As an example, the PCIe Gen3 Samsung drive can hit similar levels of performance but is not hot-swappable due to the AIC form factor. It also uses twice as many PCIe lanes, which are a limited resource in systems.
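To put the lane comparison in numbers, here is a minimal sketch of theoretical link bandwidth. It assumes the standard per-lane signaling rates (8 GT/s for Gen3, 16 GT/s for Gen4) and 128b/130b encoding, and it ignores protocol overhead, so real drives will land below these figures. The function name is ours for illustration.

```python
# Per-lane raw signaling rate in GT/s for each PCIe generation.
GT_PER_S = {3: 8.0, 4: 16.0}

# Gen3 and Gen4 both use 128b/130b line encoding.
ENCODING_EFFICIENCY = 128 / 130


def pcie_bandwidth_gb_per_s(gen, lanes):
    """Approximate one-direction link bandwidth in GB/s.

    This is a theoretical ceiling: packet and protocol overhead
    are not modeled, so measured throughput will be lower.
    """
    bits_per_second_g = GT_PER_S[gen] * ENCODING_EFFICIENCY * lanes
    return bits_per_second_g / 8  # bits to bytes


if __name__ == "__main__":
    for label, gen, lanes in [
        ("Gen3 x4", 3, 4),
        ("Gen3 x8", 3, 8),
        ("Gen4 x4", 4, 4),
    ]:
        print(f"{label}: {pcie_bandwidth_gb_per_s(gen, lanes):.2f} GB/s")
```

Under these assumptions, a Gen4 x4 link has the same theoretical ceiling as a Gen3 x8 link while using half the lanes.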

STH Application Testing

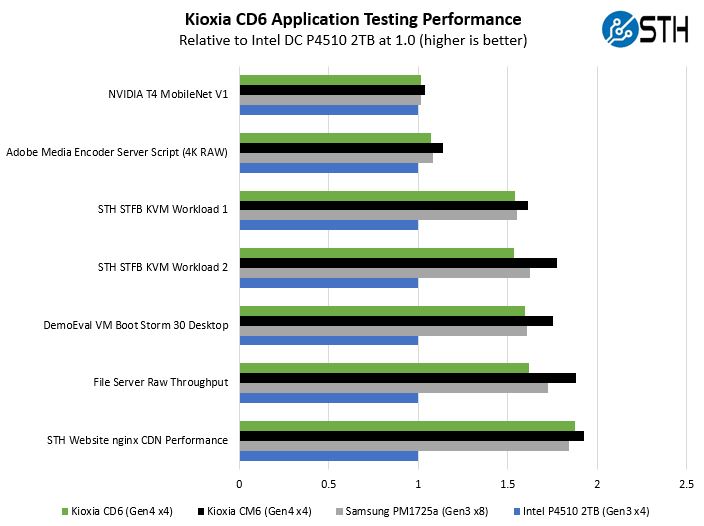

Here is a quick look at real-world application testing versus our PCIe 3.0 x4 and x8 reference drives:

As you can see, there is a lot of variability here in terms of how much impact the Kioxia CD6 and PCIe Gen4 have. Let us go through and discuss the performance drivers.

On the NVIDIA T4 MobileNet V1 script, we see a small performance impact. The key here is that we are mostly limited by the performance of the NVIDIA T4, and storage is not the bottleneck. There is a performance benefit to the newer drives, but it is not huge. Perhaps the more impactful change is that the move from Gen3 x8 to Gen4 x4 frees up more PCIe connectivity for additional NVIDIA T4s in a system, thus having a greater impact on total performance. This is a strange way to discuss system performance for storage, but it is very relevant in the AI space.
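The lane-budget argument can be sketched with simple arithmetic. The drive counts below are hypothetical and not taken from our test system; the only fixed inputs are the x8 and x4 drive link widths discussed here and the x16 slot width of the NVIDIA T4.

```python
def lanes_freed(num_drives, old_width=8, new_width=4):
    """PCIe lanes released when each drive moves from old_width to new_width."""
    return num_drives * (old_width - new_width)


def extra_accelerators(num_drives, accel_width=16):
    """How many full-width accelerator slots the freed lanes could feed.

    Assumes the freed lanes can actually be routed to slots, which
    depends on the motherboard design (an assumption, not a given).
    """
    return lanes_freed(num_drives) // accel_width


if __name__ == "__main__":
    for drives in (2, 4, 8):  # hypothetical drive counts
        print(
            f"{drives} drives: {lanes_freed(drives)} lanes freed, "
            f"room for {extra_accelerators(drives)} more x16 card(s)"
        )
```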

Likewise, our Adobe Media Encoder script times the copy to the drive, then the transcoding of the video file, followed by the transfer off of the drive. Here, we have a bigger impact because we have some larger sequential reads/writes involved, but the primary performance driver is still the encoding speed. The key takeaway from these tests is that if you are compute limited, but still need to go to storage for some parts of a workflow, there is an appreciable impact but not as big of an impact as getting more compute. Here, the CD6 performed about where we would expect given the sequential read/write numbers we saw.
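The compute-limited takeaway is essentially Amdahl's law applied to a workflow with a storage phase and a compute phase. Here is a minimal sketch; the fractions and speedups are illustrative placeholders, not measurements from our tests.

```python
def overall_speedup(storage_fraction, storage_speedup):
    """Amdahl's law: whole-workflow speedup when only the storage
    portion of the runtime gets faster.

    storage_fraction: share of total runtime spent on storage (0.0 to 1.0)
    storage_speedup:  how many times faster the storage portion becomes
    """
    compute_fraction = 1.0 - storage_fraction
    return 1.0 / (compute_fraction + storage_fraction / storage_speedup)


if __name__ == "__main__":
    # Hypothetical workflow shapes with storage that is twice as fast.
    for fraction in (0.05, 0.20, 0.60):
        gain = overall_speedup(fraction, 2.0)
        print(f"storage share {fraction:.0%}: overall speedup {gain:.2f}x")
```

The smaller the share of time spent on storage, the less a faster drive moves the total, which matches the pattern of the GPU-bound and encode-bound results.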

On the KVM virtualization testing, we see heavier reliance upon storage. KVM virtualization Workload 1 is more CPU limited than Workload 2 or the VM Boot Storm workload, so we see a strong performance gain, albeit not as much as in the other two. These are KVM virtualization-based workloads where our client is testing how many VMs it can have online at a given time while completing work under the target SLA. Each VM is a self-contained worker. We know, based on our performance profiling, that Workload 2, due to the databases being used, scales better with fast storage and Optane PMem. At the same time, if the dataset is larger, PMem does not have the capacity to scale. This profiling is also why we use Workload 1 in our CPU reviews.

We see that the Kioxia CM6 is frankly faster than the Kioxia CD6. Our sense is that this is purposeful as the CM6 is a higher cost-per-GB drive. For many, trading a few percentage points of performance is worth getting more capacity. If one can hold more VMs on the storage, then that can have a bigger TCO benefit than having slightly faster application performance. That is not true in all cases, which is why we have the mixed-use CD6 and the CM6.

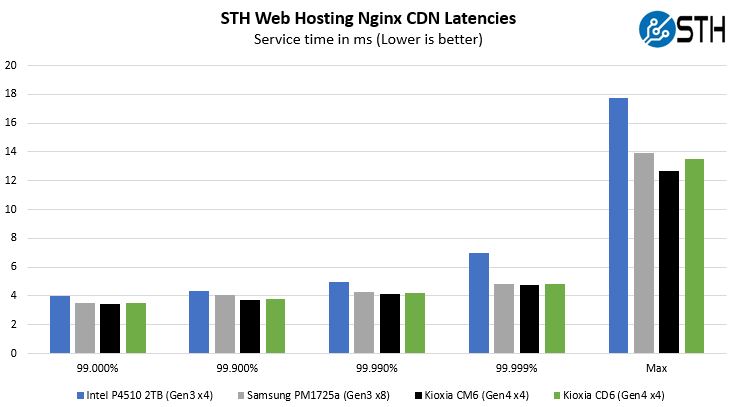

Moving to the file server and nginx CDN, we see much better QoS from the new CD6 versus the PCIe Gen3 x4 drives. Perhaps this makes sense if we think of an SSD on PCIe Gen4 as having a lower-latency link as well. On the nginx CDN test, we are using an old snapshot and access patterns from the STH website, with caching disabled, to show what the performance looks like in that case. Here is a quick look at the distribution:

Overall, we saw a few outliers, but this is excellent performance. Our performance was again not as good as we saw on the Kioxia CM6, but it was much better than our baseline PCIe Gen3 SSDs. Perhaps the key takeaway is that the CM6 is faster, but the CD6 is a better value if you need capacity and are focused on reads.
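For readers who want to examine QoS on their own drives, a latency distribution is usually summarized with tail percentiles. This is a generic nearest-rank sketch, not the tooling behind our charts, and the sample values are made up for illustration.

```python
import math


def percentile(samples, p):
    """Nearest-rank percentile: the smallest sample such that at
    least p percent of all samples are less than or equal to it."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = max(1, math.ceil(p / 100 * len(ordered)))
    return ordered[rank - 1]


if __name__ == "__main__":
    # Hypothetical request latencies in microseconds, with two outliers.
    latencies_us = [90, 95, 97, 100, 101, 103, 105, 110, 450, 900]
    for p in (50, 90, 99):
        print(f"p{p}: {percentile(latencies_us, p)} us")
```

A few outliers barely move the median but dominate the high percentiles, which is why QoS comparisons focus on the tail.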

Since this section is long already, we spent some time simplifying the results. The key takeaways are:

- If you are mostly limited by CPU/GPU performance, the CD6 (and CM6) will likely still provide some benefit, but to a lesser extent.

- The CD6 excels in sequential workloads and is generally fast, but the CD6-R is not going to be the fastest PCIe Gen4 SSD we see. That is by design as it is a capacity and read performance play rather than a raw random write performance-optimized drive.

- PCIe Gen3 x8 SSDs can be competitive with the CD6. We saw the Samsung PM1725a perform relatively close to the CD6-R. Still, Kioxia is achieving this level of performance while enabling higher density with PCIe Gen4 x4. The CD6-R is also a U.3 and 2.5″ hot-swappable SSD, while the Samsung drive we tested is neither, making it more difficult to service in the field than the Kioxia CD6 solution. Performance is one aspect, but performance in a dense and serviceable form factor is another.

Next, we are going to give some market perspective on a new variant before moving to our final words.
