While consumers are adopting PCIe Gen4 quickly, the data center market is a bit behind. Kioxia hopes the CD6 will start to change that. With PCIe Gen4, we now have a higher-bandwidth interface to work with. In this review, we are going to look at the Kioxia CD6, which is part of the first wave of data center PCIe Gen4 drives on the market. As we found with the Kioxia CM6, these new drives are able to outperform a SATA/SAS array on a PCIe Gen3 x8 controller while also fitting a small, hot-swappable 2.5″ form factor. In many ways, that is exactly the point of the Kioxia CD6 SSD.

Kioxia CD6 Overview

The Kioxia CD6 comes in a standard 2.5″ drive form factor. We will note that STH covered the latest Kioxia XD6 EDSFF PCIe Gen4 NVMe SSDs, which use a newer form factor but sit next to the CD6 in Kioxia’s product stack. You may be familiar with the 2.5″ form factor, which has been around for decades. If you want to see an EDSFF system, you can see an example here. For now, we still have a drive in a standard 2.5″ form factor because that is what many servers support in order to maintain compatibility with legacy SATA and SAS drives.

The major change with this generation is the connection. The SFF-8639 connector is wired to comply with the SFF-TA-1001 specification, also known as U.3. This is designed to help servers better handle a mix of SATA, SAS, and NVMe SSDs. As we will show in the performance section, PCIe Gen4 SSDs such as this Kioxia CD6 will make SATA and SAS largely irrelevant. Still, the drive uses the traditional layout established years ago, since the basic connector, like the 2.5″ form factor itself, was designed for spinning disks.

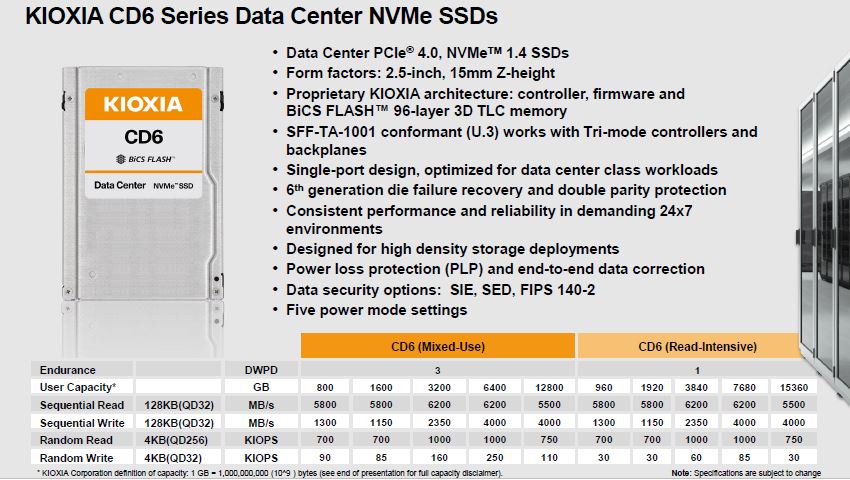

We previously discussed the key specs of the Kioxia CD6 and CM6 PCIe Gen4 SSDs, but we wanted to pull some of that spec information in here. These are the specs of the Kioxia CD6. We are specifically testing the CD6 (Read-Intensive) 7.68TB (7680GB below) version. It offers 1 DWPD endurance and performance well beyond PCIe Gen3 x4 NVMe SSDs. Other key features include power loss protection (PLP) along with a number of data recovery features, such as the ability to recover from an entire NAND die failure. The drive also has updated security options, which are important in current drives. While the Kioxia CM6 has a dual-port design, the CD6 is a single-port design that aligns more closely with the way most NVMe servers are being built and deployed.
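To put the interface comparison in rough numbers, here is a minimal sketch of theoretical per-direction link bandwidth. It uses the standard signaling rates and line encodings for each interface (128b/130b for PCIe Gen3 and Gen4, 8b/10b for SATA III and 12Gb/s SAS). The function and constant names are our own, and real drives will land below these ceilings because of protocol overhead.

```python
# Theoretical link bandwidth before protocol overhead (per direction).
# Names here are illustrative, not from any vendor tool.

PCIE_GT_PER_S = {"Gen3": 8.0, "Gen4": 16.0}  # giga-transfers per second, per lane
PCIE_ENCODING = 128 / 130                    # 128b/130b line encoding


def pcie_gb_per_s(gen: str, lanes: int) -> float:
    """Raw PCIe payload bandwidth in GB/s for a given generation and lane count."""
    return PCIE_GT_PER_S[gen] * PCIE_ENCODING / 8 * lanes


def serial_8b10b_gb_per_s(gbit_per_s: float) -> float:
    """Raw bandwidth in GB/s for an 8b/10b encoded link such as SATA III or 12Gb/s SAS."""
    return gbit_per_s * (8 / 10) / 8


if __name__ == "__main__":
    print(f"PCIe Gen3 x4: {pcie_gb_per_s('Gen3', 4):.2f} GB/s")
    print(f"PCIe Gen4 x4: {pcie_gb_per_s('Gen4', 4):.2f} GB/s")
    print(f"PCIe Gen3 x8: {pcie_gb_per_s('Gen3', 8):.2f} GB/s")
    print(f"SATA III:     {serial_8b10b_gb_per_s(6.0):.2f} GB/s")
    print(f"SAS 12Gb/s:   {serial_8b10b_gb_per_s(12.0):.2f} GB/s")
```

The takeaway is that a single Gen4 x4 drive has the same theoretical link ceiling as an entire Gen3 x8 controller, which is why one of these SSDs can stand in for a SATA/SAS array behind such a controller.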

Read-intensive means that this drive is designed for the many workloads that write some data and then frequently access what is stored. There are write-intensive drives out there that are focused on serving as log devices, where the model is to write once and read once, or in most cases never read the written data at all. For the majority of workloads, read-intensive drives are going to work fine. At STH, we purchased many second-hand drives in the SATA SSD era and used SMART data to discern how much data is actually being written to drives in the real world. We published the results in Used enterprise SSDs: Dissecting our production SSD population. As an excerpt from that piece:

This covers only a subset of the SSDs we purchased for that article, 234 drives in total. These were older drives, so they were much smaller than the 7.68TB SSD we have here. At the time, “read-intensive” was generally closer to 0.3 DWPD, and we found that 80-90% of the SSDs that we purchased were fine at that rate. With 1 DWPD and larger drives, there is a good chance the vast majority of workloads will work well on a modern large 1 DWPD drive. Many data center customers will purchase higher-end drives because they simply do not want to think about the repercussions of having to manage workloads to specific drives. These days, there are fairly well-defined segments for general purpose SSDs, log devices, and boot devices, so we see this as less of an issue than when we did that study.
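The DWPD reasoning above comes down to simple arithmetic, sketched below. The 7.68TB capacity and the 1 DWPD and 0.3 DWPD ratings come from the text. The 480GB older drive, its 100TB of host writes, and its 20,000 power-on hours are made-up example values, not figures from our study, and the function names are our own.

```python
# DWPD arithmetic sketch. The older-drive figures below are hypothetical.


def daily_write_budget_tb(capacity_tb: float, dwpd: float) -> float:
    """TB that can be written per day while staying within the rated DWPD."""
    return capacity_tb * dwpd


def effective_dwpd(host_written_tb: float, capacity_tb: float,
                   power_on_hours: float) -> float:
    """Observed drive writes per day, derived from SMART-style counters."""
    days = power_on_hours / 24
    return host_written_tb / (capacity_tb * days)


if __name__ == "__main__":
    # Kioxia CD6 7.68TB at its rated 1 DWPD.
    print(f"CD6 budget: {daily_write_budget_tb(7.68, 1.0):.2f} TB/day")

    # Hypothetical older 480GB read-intensive drive at 0.3 DWPD.
    print(f"Older drive budget: {daily_write_budget_tb(0.48, 0.3):.3f} TB/day")

    # Hypothetical SMART readout: 100TB written over 20,000 power-on hours.
    print(f"Observed: {effective_dwpd(100.0, 0.48, 20_000):.2f} DWPD")
```

Because the budget scales with both capacity and rating, a workload that strained a small 0.3 DWPD drive can use only a small fraction of the daily budget of a 7.68TB drive at 1 DWPD.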

Let us move on to performance testing and what we had to do to test the drives. We found far more than we expected, so we will first discuss what we ran into when starting Gen4 drive reviews, and then get into the performance results.

I am surprised by this phrase: “aside from databases where we do not use NAND to store them anymore”… Not even for index files?