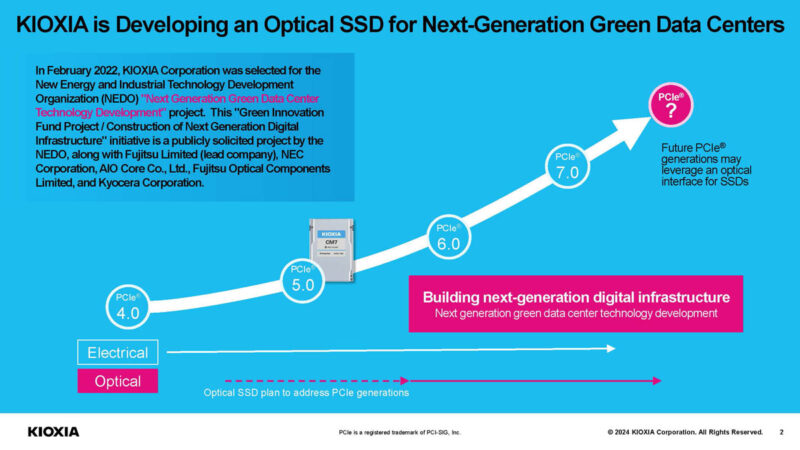

Kioxia, AIO Core, and Kyocera now have a faster PCIe Gen5 over optical SSD. The new generation builds on the previous PCIe Gen4 version but doubles the speeds. This technology is really aimed at future data centers, and we saw its previous generation at FMS 2024 in August 2024.
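The article does not state the link width, so as a rough sanity check of the "doubles the speeds" claim, here is a small sketch that assumes a typical x4 NVMe link. It uses the standard per-lane signaling rates (16 GT/s for Gen4, 32 GT/s for Gen5) and 128b/130b line encoding, and it ignores packet and protocol overhead, so real SSD throughput will be somewhat lower. The function name is purely illustrative.

```python
# Approximate one-direction PCIe link bandwidth.
# Assumption: an x4 link, as is typical for NVMe SSDs (not stated in the article).
# TLP/DLLP protocol overhead is ignored; only 128b/130b line encoding is counted.

RATES_GT_S = {"Gen4": 16.0, "Gen5": 32.0}  # raw signaling rate per lane
ENCODING_EFFICIENCY = 128 / 130            # 128b/130b encoding (Gen3 and later)


def link_bandwidth_gb_s(gen: str, lanes: int = 4) -> float:
    """Return approximate one-direction bandwidth in GB/s for a PCIe link."""
    usable_gbit_per_lane = RATES_GT_S[gen] * ENCODING_EFFICIENCY
    return usable_gbit_per_lane * lanes / 8  # convert bits to bytes


if __name__ == "__main__":
    for gen in ("Gen4", "Gen5"):
        print(f"{gen} x4: {link_bandwidth_gb_s(gen):.2f} GB/s per direction")
    ratio = link_bandwidth_gb_s("Gen5") / link_bandwidth_gb_s("Gen4")
    print(f"Gen5/Gen4 ratio: {ratio:.1f}x")
```

With these assumptions, a Gen4 x4 link works out to roughly 7.88 GB/s per direction and Gen5 x4 to roughly 15.75 GB/s, which is where the 2x figure comes from.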

Kioxia, AIO Core, and Kyocera Develop PCIe Gen5 Over Optics SSD

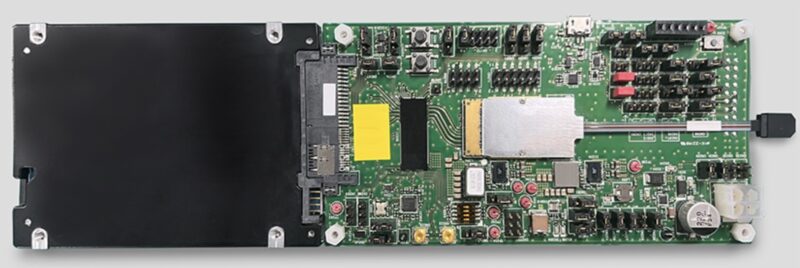

This is the new demonstration unit, which carries a Kioxia SSD’s PCIe Gen5 signaling over optical fibers.

The unit combines a Kioxia NVMe SSD, AIO Core’s IOCore optical transceiver, and Kyocera’s OPTINITY optoelectronic integration module technology. For reference, here is the FMS 2024 demo board running at the show.

Putting an NVMe SSD on optical networking is not just about carrying the data signal. The SSD also needs power, and providing it is one of the major functions of the attached PCB, aside from handling the electrical-to-optical (and optical-to-electrical) signal conversion.

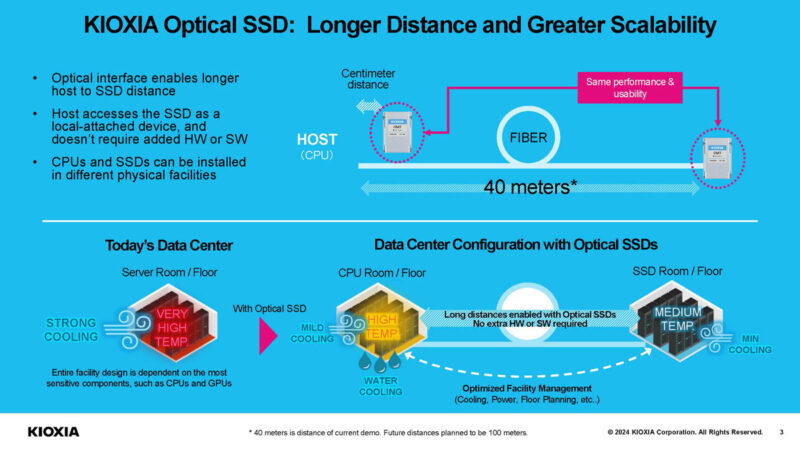

The idea is that extending the reach of PCIe lets one move the SSD out of the rack.
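Extending the reach does add some distance-dependent delay. As an illustrative sketch only, the snippet below estimates one-way light propagation delay in fiber, assuming a group index of about 1.47 (a typical value for silica fiber). The cable lengths are hypothetical examples, not figures from Kioxia, and the estimate excludes any latency added by the optical transceivers or conversion electronics.

```python
# Estimate one-way propagation delay over optical fiber.
# Assumption: group index ~1.47, typical for silica fiber; real cables vary slightly.
# Excludes transceiver, retimer, and electrical-to-optical conversion latency.

SPEED_OF_LIGHT_M_S = 299_792_458
GROUP_INDEX = 1.47


def one_way_delay_ns(length_m: float, group_index: float = GROUP_INDEX) -> float:
    """Return one-way propagation delay in nanoseconds for a fiber run."""
    return length_m * group_index / SPEED_OF_LIGHT_M_S * 1e9


if __name__ == "__main__":
    # Hypothetical distances: in-rack, next rack over, across the hall.
    for length in (2, 30, 100):
        d = one_way_delay_ns(length)
        print(f"{length:>4} m: {d:6.1f} ns one way, {2 * d:6.1f} ns round trip")
```

At roughly 4.9 ns per meter each way, even a 100 m run adds on the order of half a microsecond one way, which suggests the fiber itself is not the main obstacle to moving drives out of the rack.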

That may not seem like a big deal, but as we covered in the NVIDIA GTC 2025 Keynote and earlier this year in the Substack, companies like NVIDIA and AMD will be out with 500-600kW racks in 2027.

Getting to that power density requires not only liquid cooling but also removing components like power supplies, liquid cooling CDUs, fans, and more from racks. While NVIDIA Rubin NVL576 Kyber racks still have SSDs in the compute blades, having that many SSDs local to the compute presents another opportunity: they could be moved out of the chassis to another part of the data center or to another rack entirely.

Final Words

Our sense is that this is still a prototype product, as it seems like the push for a technology like this will come in the PCIe Gen6, Gen7, or Gen8 eras, where signaling gets even more challenging given space constraints. Using an optical interconnect also allows for switching, which may be required as the I/O needs of other components grow faster than SSD interface performance needs.

Hopefully we get to see these in action in the future, as this is a neat technology.

I’m a little unclear on what Kioxia is driving at here.

It seems unarguable that, past a certain combination of fast and far, a traditionally copper interface is going to go optical. But there’s normally a cost associated with taking that plunge: both direct BoM costs and complexity or versatility costs if what used to be a PCIe x4 interface now has some proprietary or somewhat niche optical interface slapped on (since “no extra HW or SW” is only going to be true if it’s a pre-install rather than a converter card). And there’s also the obvious but not really addressed elephant in the room: one flavor or another of storage networking.

I just look at this and it seems neither fish nor fowl. Adding an optical interface converter on both ends of the link (unless they are getting significantly greater cost effectiveness or efficiency because of how special-purpose it is) seems like a lot just to move an SSD next door, especially if it means cabling each SSD across the hall or committing exclusively to the NVMe-era equivalent of Fibre Channel HDDs. Meanwhile, it is much less versatile than just going with my preferred flavor of storage networking and being able to easily disaggregate drives from hosts and do tiered storage and all the other potentially interesting SAN stuff.

Is this a general thesis for a future of multi-host/multi-root, SR-IOV-all-the-things handling of disaggregated PCIe networks, just weirdly myopic-looking because the vendor demoing it has SSDs on the shelf? Or is it actually as conservative as it looks, basically someone looking to re-create the SAS drive shelf for NVMe (or even the simplest subset of the SAS shelf, since I don’t see anything about multihoming or some of the other things you can get involved in with the more fiddly SAS topologies)?

@fuzzy, quotes from the previous article (August 6th, 2024):

1. “One of the concepts is that this could allow for SSDs to be placed in locations far away from hot CPUs and GPUs that may be liquid-cooled. Instead, NAND can be placed in a more moderate temperature room or containment area where it performs the best.”

2. “We asked, and in theory this type of technology can be used for things like GPUs.”

3. “We asked and this demo is more like PCIe over optics (without the electrical part) rather than converting it into another protocol like Ethernet.”

Synopsys listed some benefits in their blog post “The Future of PCIe Is Optical: Synopsys and OpenLight Present First PCIe 7.0 Data-Rate-Over-Optics Demo”.

An article from last year at Tom’s Hardware describes initial working hardware (for SSDs, CXL devices, and GPUs to connect to on the motherboard): “TSMC’s silicon photonics technology relies on the Compact Universal Photonic Engine (COUPE) that combines a 65nm electronic integrated circuit (EIC) with a photonic integrated circuit (PIC) using the company’s SoIC-X packaging technology.”

Higher efficiency, higher speed, and lower latency are some of the benefits.

Seems like a home lab user could beef up CPU horsepower by using a micro/mini PC with a Ryzen 9 CPU for both their user PCs and a DIY 10GbE-or-better NAS build, then connect everything with 10Gb-or-better fiber in the same building. My vintage 2020 QNAP (10GbE plus aggregated dual 2.5GbE) and my 2019 Synology (1GbE) both have internal SSDs and external NVMe drives, and they are nice and fast, all connected with fiber except for the media converter to the NAS ports. Both servers are available to all rooms via fiber, WiFi, etc. For my next NAS upgrade I’m thinking DIY. For the user PCs, if Ryzen 9 isn’t enough, then make the jump to EPYC.