The title of this piece is harsh, but we are going to go into the reasons in more detail in the article. If you saw our “Top500 November 2022 Our New Systems Analysis: Lenovo Has More Stuffing Than A Turkey,” then we have some bad news. The list stuffing took on a whole new dimension at ISC 2023, to the point that it is starting to impact the integrity of the Top500 list. We are going to do our new systems analysis, but the results were nothing short of shocking, despite the rosy veneer many tried to put on the list. This Top500 list is the equivalent of watching the once-vibrant ocean reefs of 25 years ago die off.

For those wanting to take a trip down into the archives, you can find our previous pieces here: November 2022, June 2022, November 2021, June 2021, November 2020, June 2020, November 2019, June 2019.

Top500 New System Trends

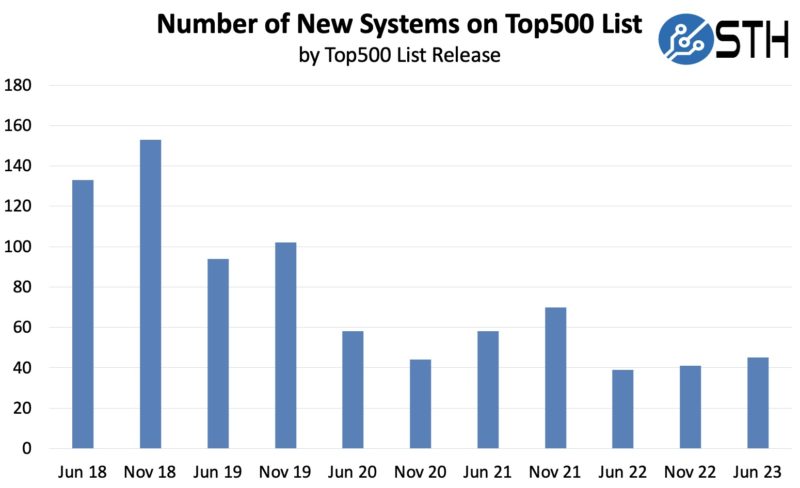

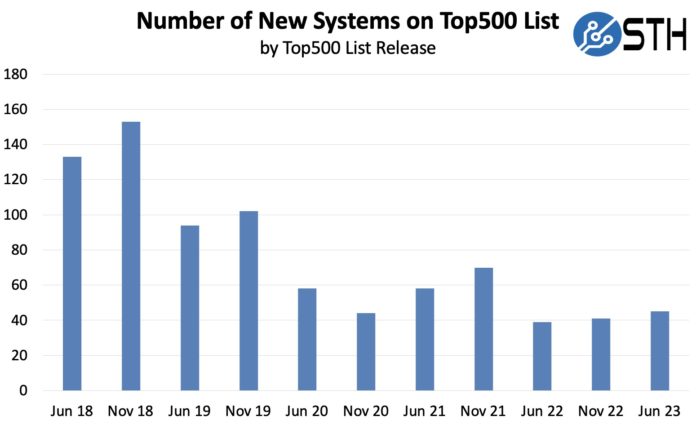

First, we highlight the sheer amount of turnover in the Top500. When we started doing this analysis in 2018, more than a quarter of the list turned over with each new publication. In November 2020, the industry attributed the drop in new systems to the pandemic. We are now post-pandemic, and it is hard to see a real rebound:

On this list, we have 44 new systems. The two prior lists had 41 and 39 new systems. One way to read this is that the list is recovering. The other way to read it is that we are exiting the pandemic with fewer new systems and no new top 10 systems on this list (for reference, June 2022 brought Frontier, still the #1 system in the world). As we will discuss later, one could argue that, absent severe gamesmanship by a vendor, the number should have been roughly 27, not 44, new systems.

The Top500 list is dying.

Top500 New System CPU Architecture Trends

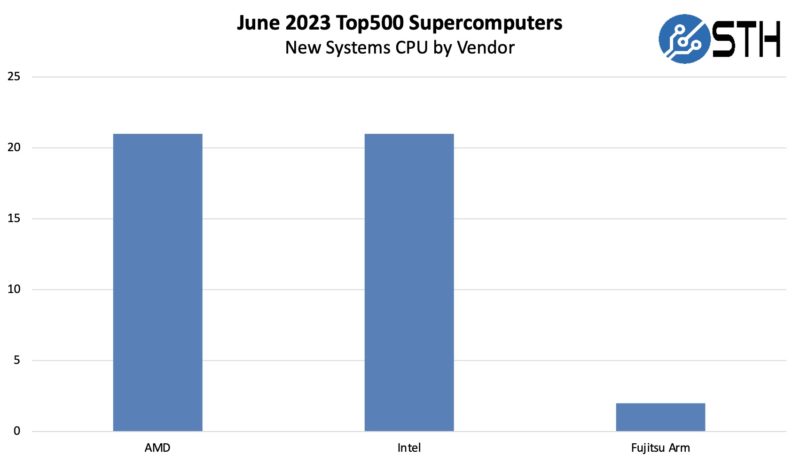

In this section, we look at CPU architecture trends through the CPUs used by the new systems entering the Top500. Let us start with the vendor breakdown.

AMD edged out Intel for the most new systems on the list. Intel’s figures on this list were significantly bolstered by a Chinese HPC vendor’s list-stuffing practices, and Intel was the primary beneficiary of that stuffing. Fujitsu’s Arm-based A64FX also added new systems to the list, which was awesome to see. Over the next 12-18 months, we expect Arm to add more than this.

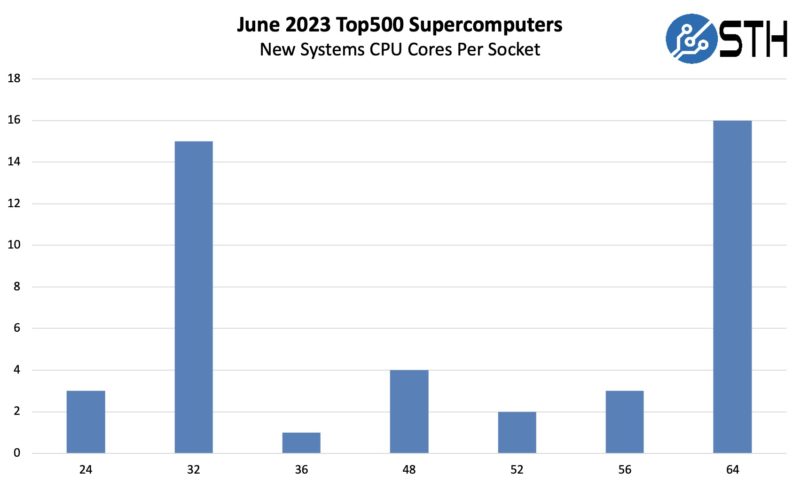

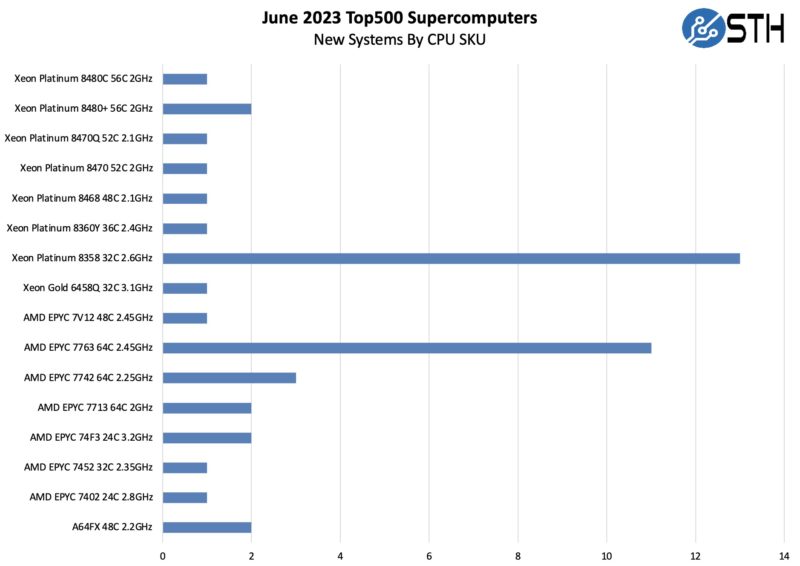

Looking at CPU cores per socket, we see a few interesting tidbits. Notably, the lowest core count is now 24, and the two most popular core counts were 64 (AMD EPYC) and 32. The 56-core parts are Sapphire Rapids.

Notably absent were 96-core parts, even though AMD launched the EPYC 9004 “Genoa” series in November 2022 (availability came later, and that is a big reason for the absence).

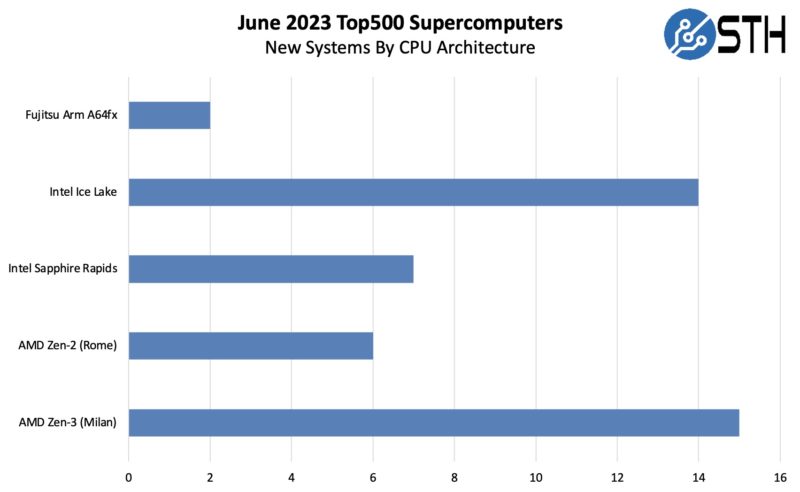

On the June 2022 list, the most popular architecture was AMD’s Zen 3, or 3rd generation AMD EPYC. Here, we can see a resurgence of 2019-generation Cascade Lake processors. This is due to the extreme stuffing that is taking place.

Here are the actual SKUs that are being used:

Perhaps the strangest part is that AMD announced its PCIe Gen5 generation (Genoa) first, yet Genoa is not on the list. Intel announced its PCIe Gen5 generation (Sapphire Rapids) second, but it has seven new systems on the list. AMD has a lot of EPYC 7003 “Milan” and EPYC 7002 “Rome” systems on the list, while Intel has a lot of Ice Lake.

Accelerators or Just NVIDIA?

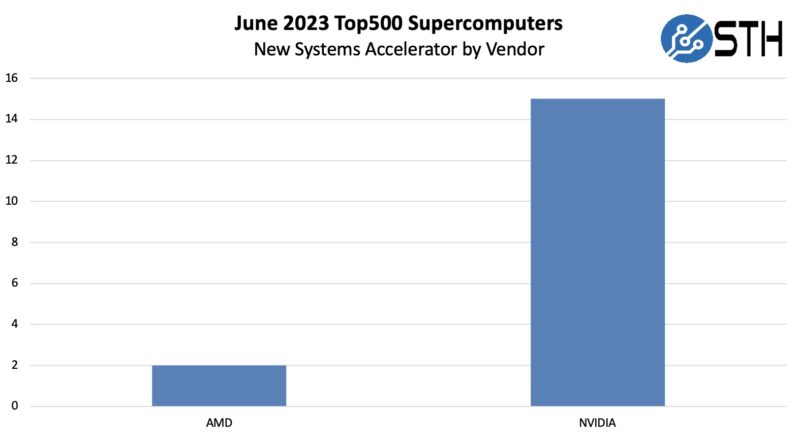

NVIDIA has been so dominant in the HPC accelerator market that this section calls it out in the title. On this list, it was not quite as dominant, but it came close:

AMD had two accelerated systems, as it did in November. NVIDIA had 15. That means 17 of the 44 new systems had accelerators. Two more are A64FX systems, which do not use accelerators. Keep in mind that we are going to discuss 19 of the remaining 25 CPU-only systems later.

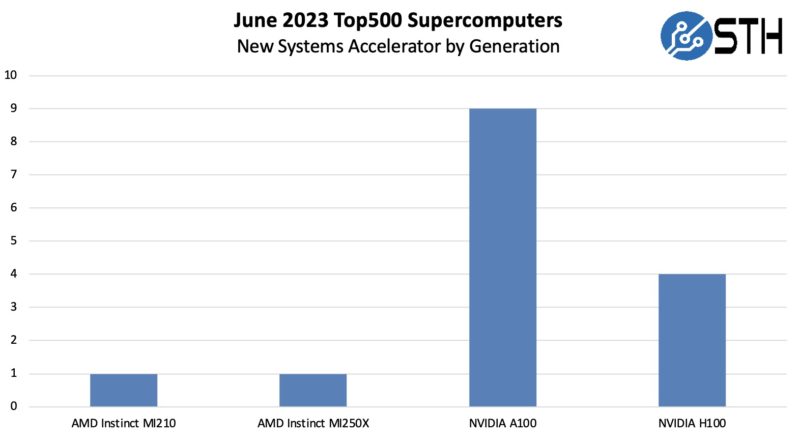

NVIDIA H100s and A100s dominated the list. Keep in mind, though, that there were only four H100 systems.

To put that into perspective, it takes somewhere around 6-8 NVIDIA DGX H100 or HGX H100 systems to make the Top500. NVIDIA just posted a huge quarter in the data center. The four H100 systems were NVIDIA’s Pre-EOS at #14, then #190, #397, and #483. Given that it only takes 128 DGX H100 (8x GPU) systems to take the #14 spot, and that is roughly 20x the performance of the systems at the bottom of the list, that gives some sense of how few H100s were actually benchmarked for the Top500.
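To make the scale argument concrete, here is a rough back-of-the-envelope sketch that uses only the figures quoted above: 128 DGX H100 systems at #14, 8 GPUs per system, and the “roughly 20x” gap to the bottom of the list. It assumes, as a simplification, that Linpack performance scales linearly with the number of DGX systems; the constant and function names are illustrative and do not come from any Top500 tool.

```python
# Rough scale estimate built only from figures quoted in the article.
# Assumption (simplification): Linpack Rmax scales linearly with DGX count.

PRE_EOS_DGX_SYSTEMS = 128   # DGX H100 systems behind the #14 entry
GPUS_PER_DGX = 8            # H100 GPUs per DGX H100
GAP_TO_LIST_BOTTOM = 20     # #14 is "roughly 20x" the bottom-of-list systems


def total_gpus(systems: int, gpus_per_system: int) -> int:
    """Number of GPUs in a cluster of identical systems."""
    return systems * gpus_per_system


def entry_level_systems(systems: int, performance_ratio: float) -> float:
    """DGX systems needed at the bottom of the list under linear scaling."""
    return systems / performance_ratio


if __name__ == "__main__":
    entry = entry_level_systems(PRE_EOS_DGX_SYSTEMS, GAP_TO_LIST_BOTTOM)
    print(total_gpus(PRE_EOS_DGX_SYSTEMS, GPUS_PER_DGX))  # 1024
    print(entry)                                          # 6.4
    print(entry * GPUS_PER_DGX)                           # 51.2
```

Under these assumptions, about 6.4 DGX H100 systems (roughly 51 GPUs) lands inside the 6-8 system entry estimate, while Pre-EOS alone accounts for 1,024 GPUs.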

At some point, we have to call this what it is: NVIDIA’s high-end GPU customers do not care about the Top500. A company buying fewer than ten DGX H100s can get onto the Top500. NVIDIA seems to have sold a lot of H100 and A100 systems; its customers just are not running Linpack to make it onto the Top500. The HPC community is smart and has figured this out. AI performance is now where the market’s focus is.

Take this a step further. Intel did not have a new AI accelerator. NVIDIA held its Grace Hopper and DGX GH200 announcements until its Computex keynote the week after ISC. The GH200 will have Top500-class performance, but the announcement was not prioritized for the HPC show. AMD, for its part, has a data center event in mid-June. AMD and NVIDIA both declined to launch HPC products at ISC 2023.

Fabric and Networking Trends

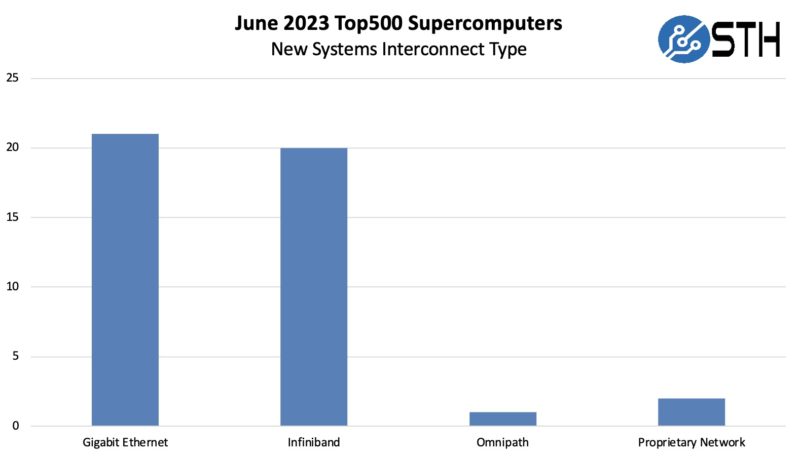

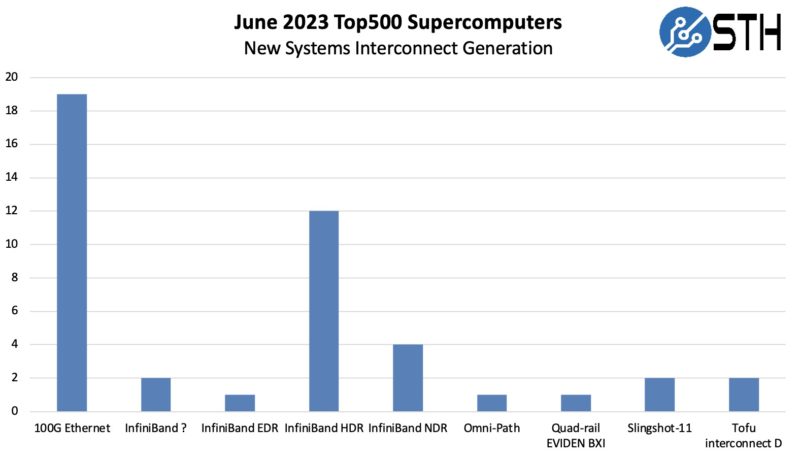

Here is one that regular readers of this piece will recognize. On the interconnect side, Ethernet had been by far the most common solution. On this list, InfiniBand made a huge comeback.

The big announcement a few days after the Top500 list was released was that NVIDIA is positioning its new Spectrum-X / Spectrum-4 Ethernet for AI clusters, where InfiniBand was previously the default for NVIDIA clusters.

Here is a look at the interconnect details by generation. The “InfiniBand ?” entry is two systems at the same site, based on the Dell PowerEdge XE8545 we reviewed, that did not list an InfiniBand type.

At least this list has much more diversity. We even have Omni-Path! Also, 25GbE did not make the list, which is an accomplishment in itself. The interconnect side is clearly evolving.

Extreme Gaming for Prestige

Let us get to the extreme gaming. HPE, Dell, and Fujitsu each had a Site ID listed with two systems entered. For example, Fujitsu’s two systems were Japan Meteorological Agency 1 and Japan Meteorological Agency 2. HPE listed the Derecho CPU and GPU partitions at the National Center for Atmospheric Research (NCAR) as two machines. Dell had those XE8545 clusters installed at a South American oil and gas company. Each of those sites had two new systems for traditional HPC clients.

Then there was Lenovo.

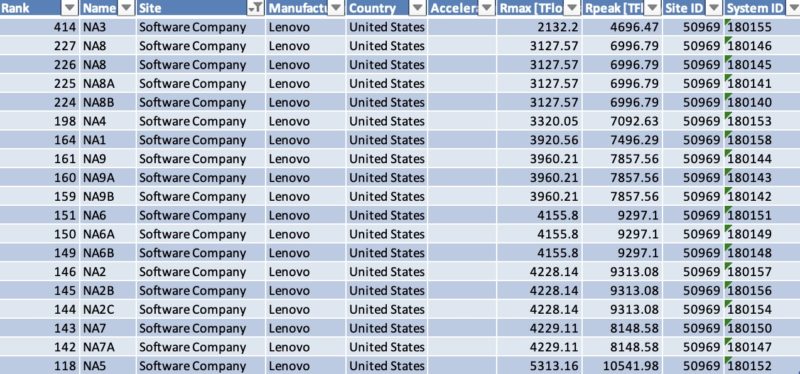

One Site ID, at a “Software Company,” had multiple CPU-only, Ethernet-only systems partitioned off from the company’s cluster and submitted as separate systems.

Normally, we see a Site ID with 1-3 systems. Nineteen is extreme, to say the least, especially when we are celebrating 44 new systems. Had these been submitted as, perhaps, one AMD cluster and one Intel cluster instead, the number of new systems would have been only 27.
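The Site ID check and the counterfactual count above can be sketched as follows. This is a minimal illustration under stated assumptions: the Site IDs are hypothetical placeholders, and only the counts come from the article (one site with 19 entries, plus three sites with two entries each for the HPE, Dell, and Fujitsu pairs). Treating the remaining 19 new systems as single-system sites is an assumption for illustration, and the 1-3 “normal” range is our rule of thumb, not an official Top500 threshold.

```python
from collections import Counter


def flag_outlier_sites(site_ids, max_normal=3):
    """Return Site IDs with more new-system entries than the usual 1-3."""
    counts = Counter(site_ids)
    return {site: n for site, n in counts.items() if n > max_normal}


def consolidated_new_systems(total_new, split_entries, clusters):
    """New-system count if `split_entries` were resubmitted as `clusters` systems."""
    return total_new - split_entries + clusters


# Hypothetical Site IDs: one site with 19 entries, three sites with 2 each
# (the HPE, Dell, and Fujitsu pairs), and 19 single-system sites (assumed).
site_ids = (["site-A"] * 19
            + ["site-B"] * 2 + ["site-C"] * 2 + ["site-D"] * 2
            + [f"single-{i}" for i in range(19)])
assert len(site_ids) == 44  # matches the 44 new systems on this list

print(flag_outlier_sites(site_ids))         # {'site-A': 19}
print(consolidated_new_systems(44, 19, 2))  # 27
```

Collapsing the 19 entries into two clusters gives 44 - 19 + 2 = 27 new systems, while the two-system sites stay under the threshold.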

These nineteen systems from the same Site ID have a couple of major impacts:

- It drastically increases the number of new US systems

- It is the reason Lenovo is the largest vendor

- It is why China looks like such a large HPC vendor nation, despite no longer submitting domestic systems

- It sets a precedent for others.

For example, if NVIDIA had partitioned its Pre-EOS system into ~20 smaller systems, it could have made a push to be the #1 HPC vendor. It did not care enough to do so and instead took the #14 spot. As we discussed earlier, NVIDIA likely has 20+ customers with more than 10 DGX H100/HGX H100 systems each that simply do not care enough to submit to the Top500.
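To see why “~20” is roughly the practical limit, here is a hypothetical sketch that splits the 128 DGX H100 systems behind Pre-EOS as evenly as possible and checks each slice against the low end of the 6-8 system entry estimate given earlier. The function name and the even-split approach are illustrative assumptions, and the sketch ignores that smaller partitions would not scale perfectly.

```python
def even_partition(nodes: int, parts: int) -> list[int]:
    """Split `nodes` into `parts` groups whose sizes differ by at most one."""
    base, extra = divmod(nodes, parts)
    return [base + 1] * extra + [base] * (parts - extra)


PRE_EOS_DGX_SYSTEMS = 128
ENTRY_MIN_SYSTEMS = 6  # low end of the article's 6-8 DGX H100 estimate

slices = even_partition(PRE_EOS_DGX_SYSTEMS, 20)
print(len(slices), sum(slices))                      # 20 128
print(slices.count(7), slices.count(6))              # 8 12
print(all(s >= ENTRY_MIN_SYSTEMS for s in slices))   # True
print(min(even_partition(PRE_EOS_DGX_SYSTEMS, 22)))  # 5
```

Twenty slices of six or seven systems each stay at or above the entry estimate; at 22 slices, some fall to five systems and would likely miss the cut.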

Final Words

The Top500 list timed for ISC 2023 should be a horrifying wake-up call to the HPC community. To recap:

- The number of new systems being added to the list is plummeting, even with a single Software Company site accounting for 19 of the 44 new systems

- A major HPC powerhouse nation does not submit its systems, only those it sells elsewhere, and often to non-HPC clients

- Supplier and country data are skewed by gaming; this has been going on for years, but it has gotten worse

- Massive investment in AI is driving mixed precision, yet Linpack is FP64

- Companies buying highly capable FP64 clusters for AI are not submitting Top500 results

- AMD and NVIDIA deprioritized ISC in favor of their own launch events within a few weeks, because they are following the market for high-performance AI computing

- The presentations at ISC discussed AI because that is where a lot of the market is moving, not towards more FP64

Perhaps the scarier part is considering the inverse. If the Top500 told Lenovo that this level of gaming would not be accepted, what would happen? The Top500 list would have perhaps 27 new systems. That would put the list on a turnover rate of more than nine years.
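The “9+ year” figure follows from simple arithmetic. Here is a minimal sketch, assuming two lists per year (June and November) and that each new system displaces exactly one existing entry at a steady rate; that is a simplification, since the real list does not turn over in strict first-in, first-out order.

```python
LIST_SIZE = 500
LISTS_PER_YEAR = 2  # the June (ISC) and November (SC) publications


def years_to_turn_over(new_per_list: int,
                       list_size: int = LIST_SIZE,
                       lists_per_year: int = LISTS_PER_YEAR) -> float:
    """Years for the whole list to be replaced at a constant rate of new entries."""
    return list_size / (new_per_list * lists_per_year)


# A quarter of the list, this list's count, and the count without the 19 entries.
for new in (125, 44, 27):
    print(new, round(years_to_turn_over(new), 2))
# 125 2.0
# 44 5.68
# 27 9.26
```

At a quarter of the list per publication, the list refreshes in about two years; at 27 new systems per list, it takes more than nine.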

In a world where NVIDIA has effectively been sold out of H100s for months in 2023, previous-generation A100s are selling like hotcakes, AMD is about to launch a new architecture, and Intel Xeon Max and GPU Max are shipping, we know there is a lot of industry progress. That progress, even with new, faster FP64 halo parts, is no longer being represented on the Top500. A crackdown on gaming would make the Top500 seem deprioritized and detached from industry progress.

To maintain the list’s integrity, the Top500 would need to cut back on gaming. The resulting reduction in new systems would, in turn, make the Top500 show a dangerously low turnover rate and therefore seem less relevant.

The way out would probably be NVIDIA working with its partners and customers to submit even smaller DGX/HGX clusters. At the same time, NVIDIA’s market cap gains are being driven by the H100’s AI performance rather than its traditional HPC performance.

Comments

Thank God for STH! The HPC community is an echo chamber. They’ve got small media in the pocket of vendors, so they’ll never call them out like this or just flatly state what we’re all seeing: NVIDIA doesn’t care about HPC, even if it says it does. The margin is in AI, so that’s where the money is for these chips.

It’s refreshing to see a big site state the obvious, even if you’re a bit late on calling this.

F I R E

There’s gonna be pitchforks for sure, but ya got it right.

I appreciate that you don’t just recite the findings and have a unique perspective.

Why no vid on this?

Your youtube videos are so popular you’d reach more with this message.

If you want change, you’ve gotta hit both audiences

Jack Dongarra, the creator of the benchmark, has been pointing out HPL’s lack of relevance to problems of scientific interest for some time. The problem is that real HPC is memory-, latency-, and bandwidth-bound, while the benchmark primarily reflects floating-point performance.

HPCG is more relevant, and it’s interesting that in 2023 Fugaku is ahead of Frontier by 14 percent on that benchmark.

I agree that benchmarks of cloud infrastructure not actually used for scientific computing, while interesting as a point of comparison, do not belong on a list of the top 500 machines devoted to research and science. To keep the noise from drowning out the signal, I would suggest retaining the 500 machines for which half the cycles are devoted to HPC. Systems that don’t satisfy this requirement could appear in order as extra, footnoted entries on the list, but without an official ranking.

Is there any point in keeping the list so long? Are there that many systems doing classical FP64 HPC anymore? I think it would be better to toss out the fake systems and list only the top 100 or so.

They’ve got to clean this up. I can’t believe they even allowed 19 Lenovo systems from the same customer and site. They’re also allowing all of these Lenovo systems to be anonymous. If you look at the data, they’re not getting classified HPC systems on the list that way. They’ve just been getting these web hosting customer systems from Lenovo.

You’re right, they’ve had to choose between having a good list and having a big list, and they chose a big list.

In reply to “Are there that many systems doing classical FP64 HPC anymore?”

There are thousands of such systems. Some have been bumped off the list by general cloud infrastructure and a certain vendor’s advertising gimmicks. Others never ran the HPL benchmark, possibly because it is irrelevant and there are real scientific computing workloads more important to optimize for performance. For example, this may be why recent Chinese exascale systems were never submitted to the list.

Advances in AI have also led to increases in HPC, because traditional HPC is often the cheapest or only way to create the training data.

The bigger death knell for the Top500 is that it doesn’t count the dozens of exascale AI training systems at hyperscalers as “real” supercomputers, since they don’t run FP64. It used to be that the hardest problems, the ones with the most compute thrown at them, were all double precision, but the elephant in the room is that 16-bit training workloads have usurped FP64 as top dog, and this happened years ago.