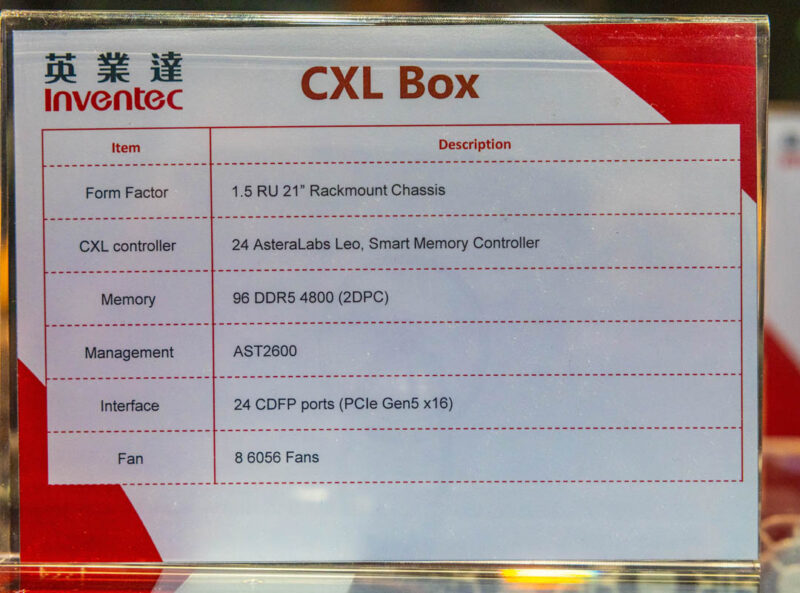

This is it. For more than a decade and a half at STH, I have been hearing server companies talk about disaggregated memory with separate storage, memory, and compute shelves. Inventec and Astera Labs have made that possible with a 96x DDR5 DIMM memory shelf. Using CXL x16 connections, it can connect to an upcoming 8-way Intel Xeon 6 Granite Rapids-SP server that itself has 128x DDR5 DIMM slots, for a whopping 224 DIMM slots in a single scale-up server. We are about to get tens of TBs of memory in a single server, which is huge for scale-up server applications.

For this, we have a short:

Inventec 96 DIMM CXL Expansion Box at OCP Summit 2024 for TBs of Memory

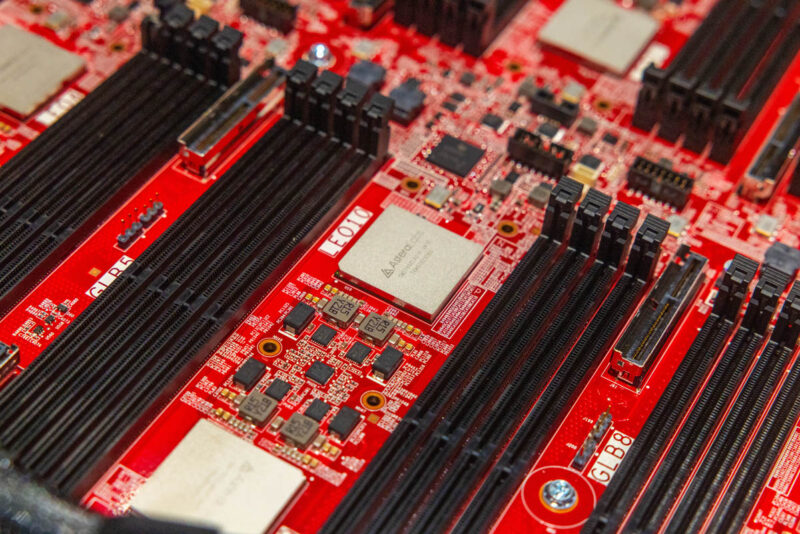

The DDR5 DIMMs are DDR5-4800, and the box uses the Astera Labs Leo, which we have covered many times on STH.

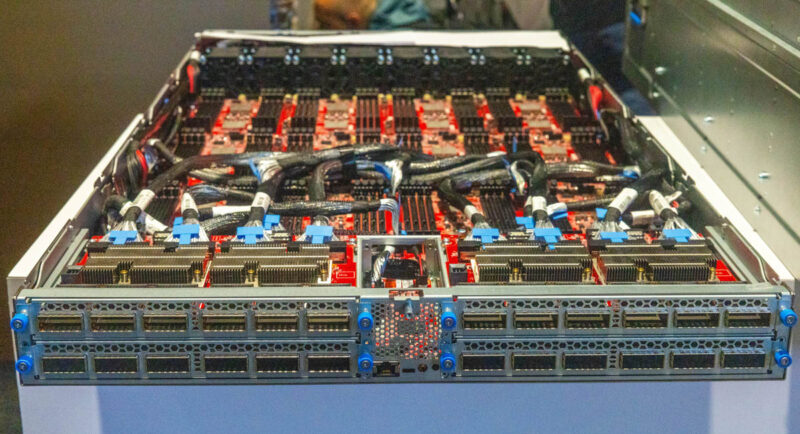

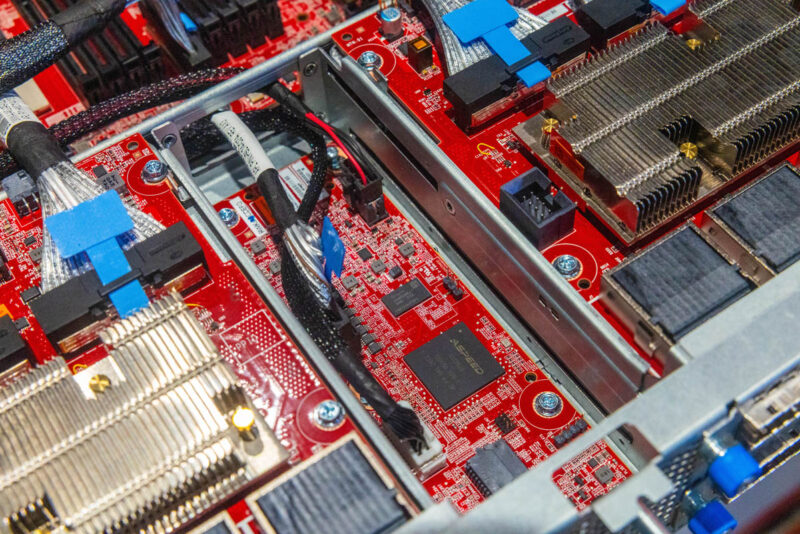

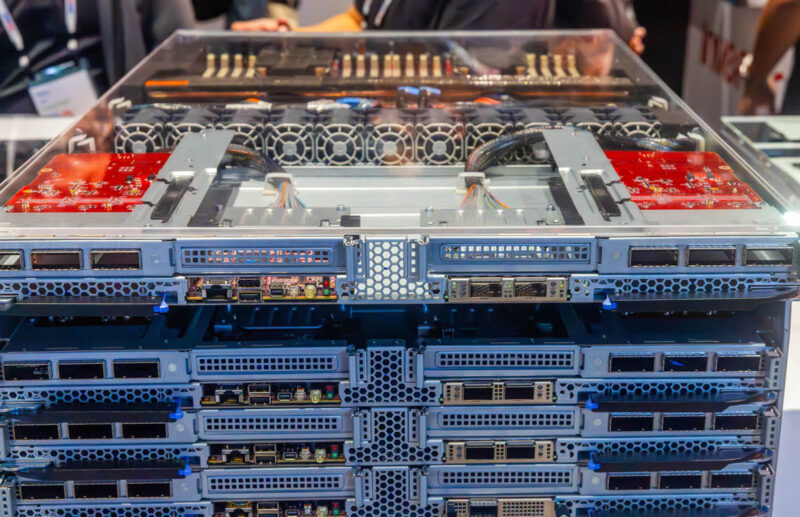

On the front of the box, we have 24 CDFP ports. Each carries a PCIe Gen5 x16 connection between the CXL box and the host server or servers.
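As a rough sanity check on what those ports can carry, here is a minimal sketch that estimates the raw one-direction bandwidth of one x16 port and of all 24 ports together. It assumes the standard PCIe Gen5 figures of 32 GT/s per lane with 128b/130b line encoding and ignores CXL protocol overhead, so usable memory bandwidth will be lower. The constant and function names are ours, purely for illustration.

```python
# Back-of-the-envelope link bandwidth for the front-panel CDFP ports.
# Assumptions (not from the article): PCIe Gen5 runs at 32 GT/s per lane
# with 128b/130b encoding; CXL flit/protocol overhead is ignored.
GT_PER_S_PER_LANE = 32
ENCODING_EFFICIENCY = 128 / 130
LANES_PER_PORT = 16   # each CDFP port carries an x16 link
PORTS = 24            # CDFP ports on the front of the box


def port_bandwidth_gb_per_s(lanes: int = LANES_PER_PORT) -> float:
    """Raw one-direction bandwidth of one port in GB/s."""
    # GT/s * efficiency gives usable Gbit/s per lane; divide by 8 for bytes.
    return GT_PER_S_PER_LANE * ENCODING_EFFICIENCY * lanes / 8


per_port = port_bandwidth_gb_per_s()
total = per_port * PORTS
print(f"per port: {per_port:.1f} GB/s, all {PORTS} ports: {total:.0f} GB/s")
```

That works out to roughly 63 GB/s per port in each direction, or about 1.5 TB/s in each direction if every port is cabled.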

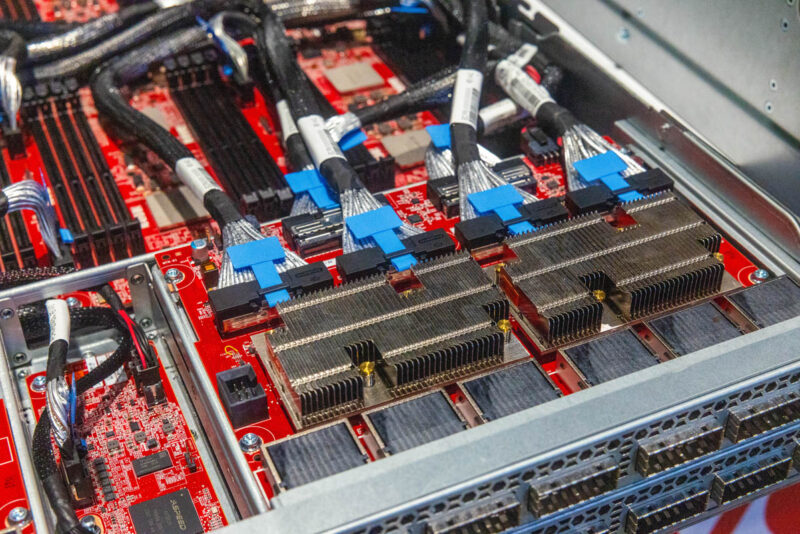

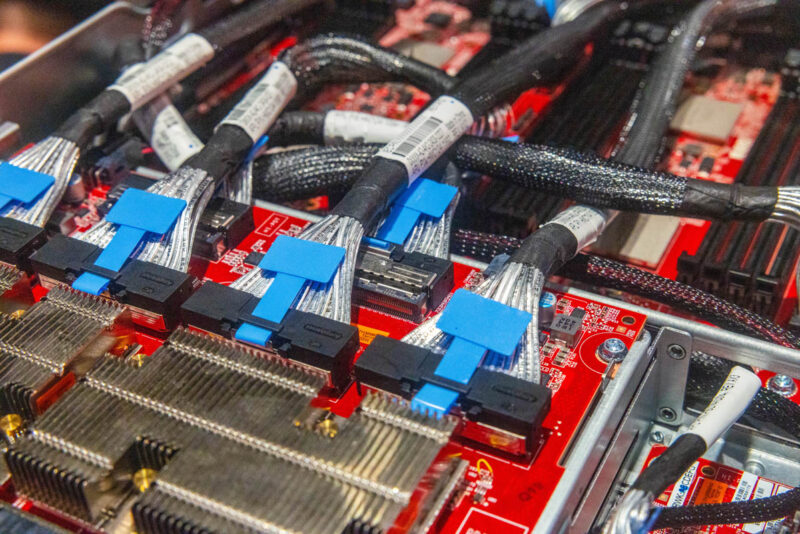

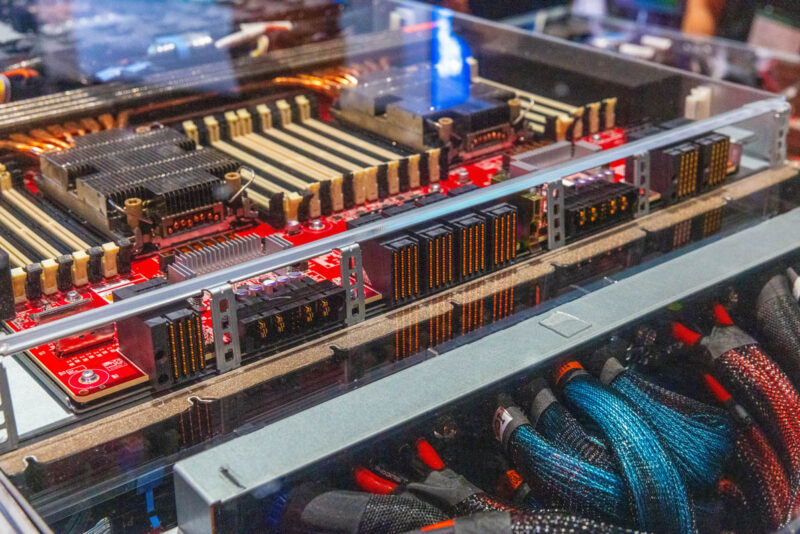

Behind the CDFP ports we have Astera Labs retimers.

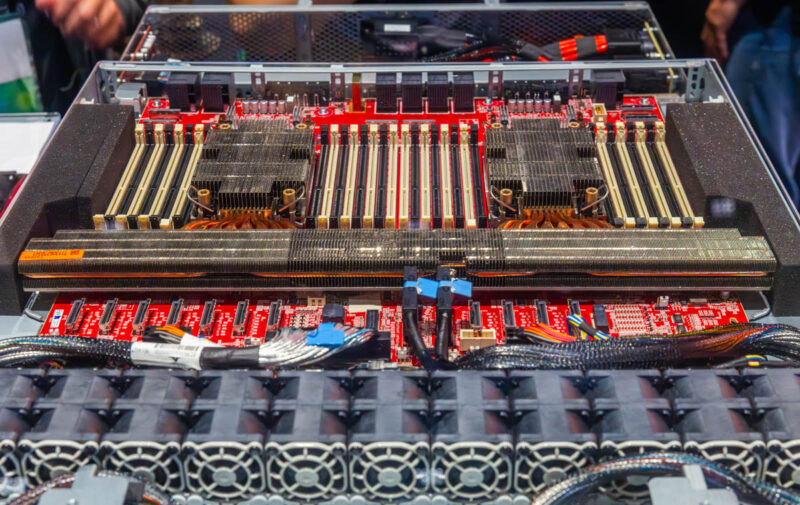

There is then an array of MCIO connectors and cables to the main memory board.

There is also an ASPEED AST2600 BMC.

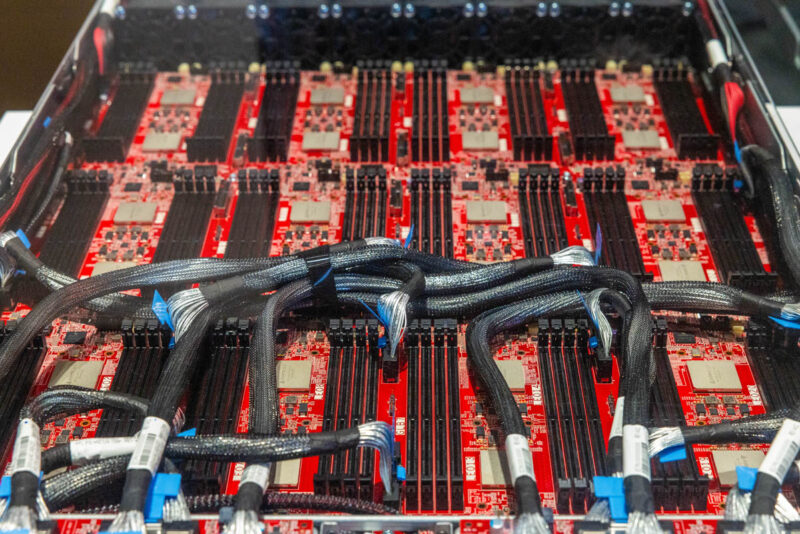

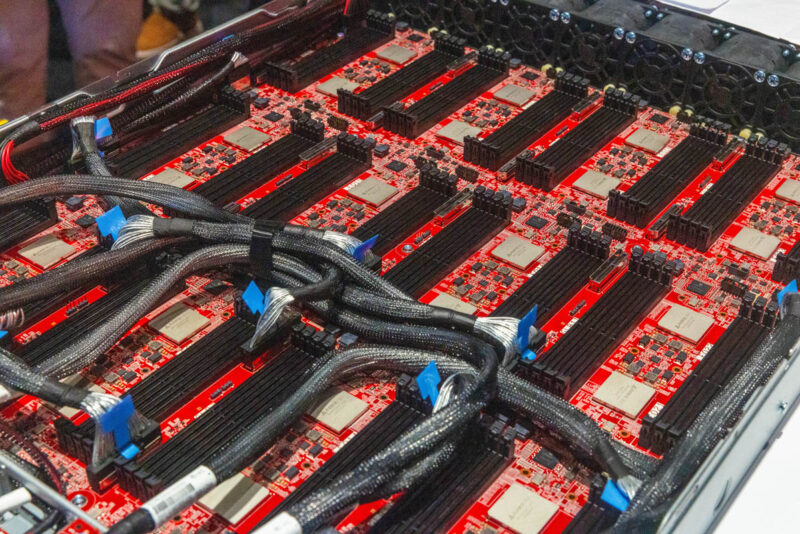

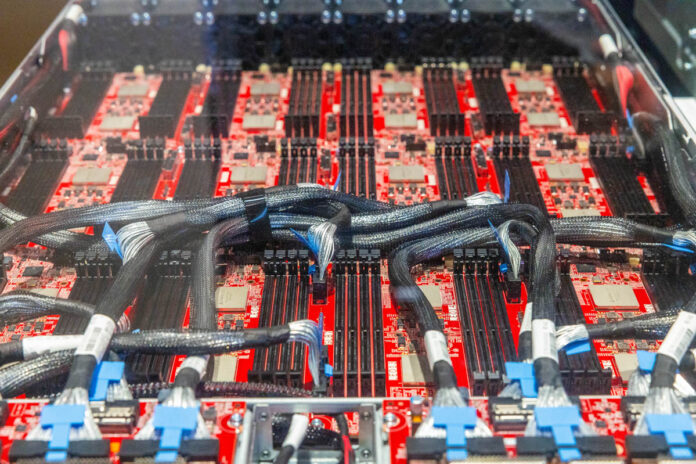

The main DIMM section of the chassis has 96 DIMM slots and 24 Astera Labs Leo controllers.

Inventec had this only half cabled so one can see the slots and controllers easily on the other half.

Each Astera Labs Leo controller has a cabled connection to the front of the chassis and serves four DDR5-4800 DIMM slots.

CXL makes this all happen. We have covered CXL extensively on STH, including how it re-purposes the physical layer of PCIe lanes so that Type-3 devices can add memory to a system.

Inventec 8-way Intel Xeon 6 Server for 224 DIMMs Total

While one option with a memory shelf like that is to attach it to multiple systems, Inventec showed something cooler. This is an 8-way Intel Xeon 6 “Granite Rapids-SP” system. Granite Rapids-SP is the P-core Xeon 6 that has 8 memory channels and can scale to 8-CPU topologies like this one.

The eight CPUs are placed on four dual-socket trays.

We can see the 3-port CXL x16 cards on the front of the server.

Each CPU socket has eight channels of DDR5 memory in a two DIMMs per channel (2DPC) configuration, so each CPU has 16 DIMM slots. That means that each dual-socket tray has 32 DIMMs. Four trays in the 8-way server give us 128 DIMMs total.
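The slot count is simple multiplication, but it is easy to lose track of the levels. A minimal sketch, using only the figures quoted above (the variable names are ours):

```python
# DIMM slot count for the 8-way server, built up level by level.
CHANNELS_PER_CPU = 8    # DDR5 channels per Granite Rapids-SP socket
DIMMS_PER_CHANNEL = 2   # 2DPC
CPUS_PER_TRAY = 2       # dual-socket trays
TRAYS = 4               # four trays joined over the UPI backplane

dimms_per_cpu = CHANNELS_PER_CPU * DIMMS_PER_CHANNEL
dimms_per_tray = dimms_per_cpu * CPUS_PER_TRAY
server_dimms = dimms_per_tray * TRAYS

print(f"{dimms_per_cpu} per CPU, {dimms_per_tray} per tray, {server_dimms} per server")
```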

The four dual CPU trays are connected with UPI over a backplane to make a single system.

This was also the first 8-way Intel Xeon 6 system we have seen.

Final Words

Between the 128 DDR5 DIMM 8-way Intel Xeon 6 server and the 96 DIMM expansion box, that gives 224 DIMMs of capacity. In other words, topping 20TB of memory in a server like this is possible, making tens of TBs of memory a reality. CXL Type-3 memory shelves are one of the most exciting developments in the computing industry. While we are showing the 8-way server designed to connect to the box, simply changing where the cables attach would let the CXL memory expansion box connect to many different servers instead. This is a flexible design that will be even more exciting as we get CXL switches.
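To put numbers on "topping 20TB," here is a rough sketch of total capacity at a few DIMM sizes, split between directly attached memory and the CXL shelf. The DIMM sizes are illustrative assumptions, not configurations Inventec confirmed, and the sketch treats 1TB as 1024GB.

```python
# Total memory capacity across the 8-way server and the CXL shelf.
SERVER_DIMMS = 128   # directly attached DDR5 slots in the 8-way server
SHELF_DIMMS = 96     # slots in the CXL expansion box
DIMM_SIZES_GB = (64, 96, 128)  # assumption: illustrative DIMM capacities


def capacity_tb(dimms: int, dimm_gb: int) -> float:
    """Capacity in TB for a number of equally sized DIMMs (1 TB = 1024 GB)."""
    return dimms * dimm_gb / 1024


for size in DIMM_SIZES_GB:
    local = capacity_tb(SERVER_DIMMS, size)
    cxl = capacity_tb(SHELF_DIMMS, size)
    print(f"{size:>3} GB DIMMs: {local:4.1f} TB local + {cxl:4.1f} TB CXL "
          f"= {local + cxl:4.1f} TB")
```

With 96GB DIMMs the combined system lands at 21TB, and with 128GB DIMMs it reaches 28TB, of which 12TB sits behind CXL.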

Super cool!

We’re witnessing the birth of the JBOR…

8 CPUs in 1 server connected via a backplane. This kind of seems like something from a bygone age that has somehow come back.

@Thomas That era did take a bit of a dip on x86 with Cooper Lake and Ice Lake, as the Intel offerings were rather thin then and software licensing was transitioning from per-socket to the per-core models used today. Now that the big software premium for scale-up licensing is not really there (i.e. it is only marginally more expensive to go with four 16-core chips vs. one 64-core product), there is increased demand for scaling up memory capacity and PCIe bandwidth alongside each other.

IBM has been IBM with their mainframes and POWER lineup, which still offer modular designs like this at the top end.

Ultimately I see a resurgence of this style of system once photonics takes off, as signals over fiber can run much longer distances (though not necessarily faster).

> In other words, topping 20TB of memory in a server like this is possible, making tens of TBs of memory a reality.

People have been running 24TB SAP HANA machines for a few years now though? All the big clouds have an offering like that.

I’m also waiting for photonics and fiber, but maybe not so much for *distance* as for connector and cable size. I mean, look at the size of the internal cables–they had to remove them to improve the pictures. This feels like the same sort of issue as with networking, where DAC cables are cheap but they’re so bulky, heavy, and stiff that I’d usually rather spend the money for optics and fiber, even for short runs.

I keep picturing a motherboard with a bunch of duplex LC connectors instead of MCIO plugs, and then having totally disaggregated slots, etc.

Mind you, I don’t know if this will ever really make financial sense for pre-built servers. It’d be cool for custom servers, but one-off standard chassis+standard motherboard+standard PSU servers are pretty much a thing of the past at this point. It’d be amazing for high-end desktops and workstations, but again — I think we’re nearing the end of the era there as well.

Is anyone working on endlessly scalable unified memory systems with this tech? If you can just scale-out memory without a big latency hit, why not also scale out compute too? Get rid of CPU interconnects, replace them with PCIe links, and let a user design their own unified N-socket machine. Unify a whole rack into a 10k core 100TB memory system? Sign me up (in 2030 when I can get it on eBay).

28TB only using 128GB DDR5. Also, these can add aggregate bandwidth. So you can get more memory bandwidth and more capacity, especially if you go with large DIMMs.

Now we are talking.

Reminds me of an old NCR server I managed that had a memory board that big which, IIRC, held a couple GB of RAM in total.

Latency and cost. It’s not interesting otherwise.

It feels like the era of 128bit processors can’t be that far off with CXL, maybe 20-30 years.

Oh my goodness