Within the Intel Xeon Silver line, the Intel Xeon Silver 4116 is the current king. It is the highest core count and one of the highest clock speed CPUs in the Intel Xeon Silver series, and also the most expensive. With twelve cores and twenty-four threads, the compute power in an 85W TDP CPU is awesome. For some perspective here, the previous generation equivalent was the Intel Xeon E5-2650 V4 which had similar core counts and clock speeds but a 105W TDP.

Lower TDP, 10% lower cost and a more scalable platform: what is not to like? One key difference is that where the Intel Xeon E5-2650 V4 supported the maximum memory clock speed of its generation, the Intel Xeon Silver series is limited to the same DDR4-2400 as the previous generation. The Intel Xeon Silver 4116 also can address only half as much memory (0.75TB instead of 1.5TB) as the previous generation part. Despite being a $1000 CPU, the Intel Xeon Silver 4116 has only a single AVX-512 FMA unit, so its AVX-512 performance is severely limited.

Key stats for the Intel Xeon Silver 4116: 12 cores / 24 threads, 2.1GHz base and 3.0GHz turbo with 16.5MB L3 cache. The CPU features an 85W TDP. Here is the ARK page with the feature set.

Test Configuration

Here is our basic test configuration for single-socket Xeon Scalable systems:

- Motherboard: Supermicro X11SPH-nCTF

- CPU: Intel Xeon Silver 4116

- RAM: 6x 16GB DDR4-2400 RDIMMs (Micron)

- SSD: Intel DC S3710 400GB

- SATADOM: Supermicro 32GB SATADOM

One of the key advantages the Intel Xeon Silver series has is the ability to use up to 12 RDIMMs per socket. Intel’s embedded platforms and quad-core Intel Xeon E3-1200 V6 series platforms cannot do this in 2017. DDR4-2400 is the fastest memory supported on the Xeon Silver platform which is why we are not using faster speeds.

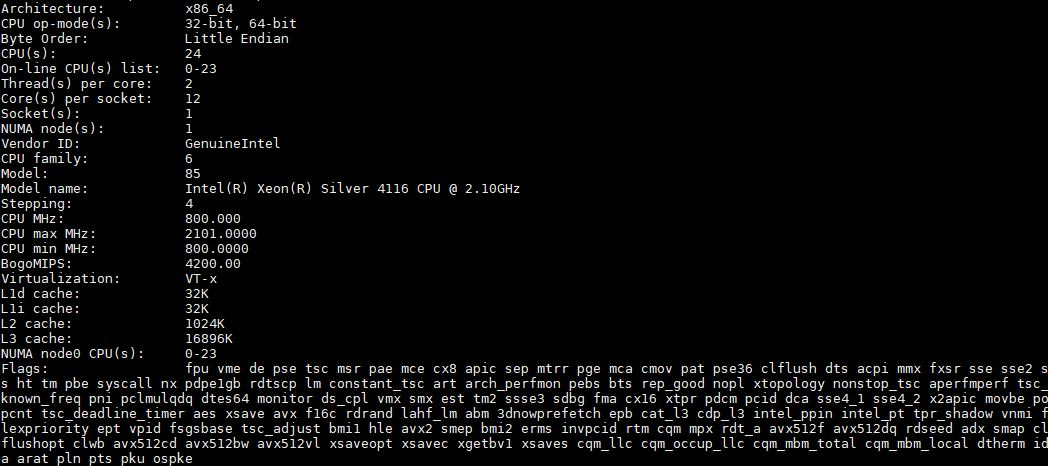

Here is the lscpu output of the chip:

Single Intel Xeon Silver 4116 Benchmarks

For this exercise, we are using our legacy Linux-Bench scripts which help us see cross-platform “least common denominator” results. We do have a full set of expanded benchmarks from our next-gen test suite (Linux-Bench2) so expect to see those results sprinkled in as we get a larger comparison data set built and a few in this review.

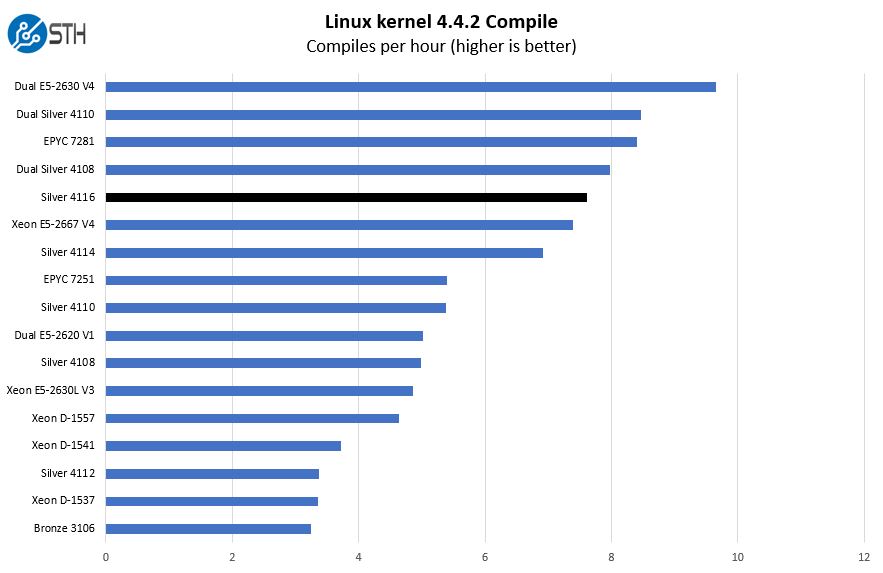

Python Linux 4.4.2 Kernel Compile Benchmark

This is one of the most requested benchmarks for STH over the past few years. The task is simple: we take a standard configuration file and the Linux 4.4.2 kernel from kernel.org, then make the standard auto-generated configuration utilizing every thread in the system. We are expressing results in terms of compiles per hour to make the results easier to read.
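As a rough illustration of the compiles-per-hour metric, here is a minimal sketch that converts the wall-clock time of one build into that unit. It assumes the figure is simply 3600 divided by the elapsed seconds of a single compile; the function name and the sample timing are hypothetical and are not taken from our scripts or results.

```python
def compiles_per_hour(elapsed_seconds: float) -> float:
    """Convert the wall-clock time of one kernel build into compiles per hour.

    Assumption: the metric is 3600 / elapsed seconds for a single build.
    """
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return 3600.0 / elapsed_seconds


# Hypothetical timing for illustration only, not a measured result.
example_seconds = 120.0
print(f"{compiles_per_hour(example_seconds):.1f} compiles per hour")
```

Expressed this way, higher is better, which lines up with the other charts in the review.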

Keeping everything on the same CPU and offering higher clock speeds helps the Intel Xeon Silver 4116 keep pace with the dual Intel Xeon Silver 4108 setup. We are going to take a moment to acknowledge the performance of the single socket EPYC 7281, which is a higher power, higher performance and lower cost part from AMD.

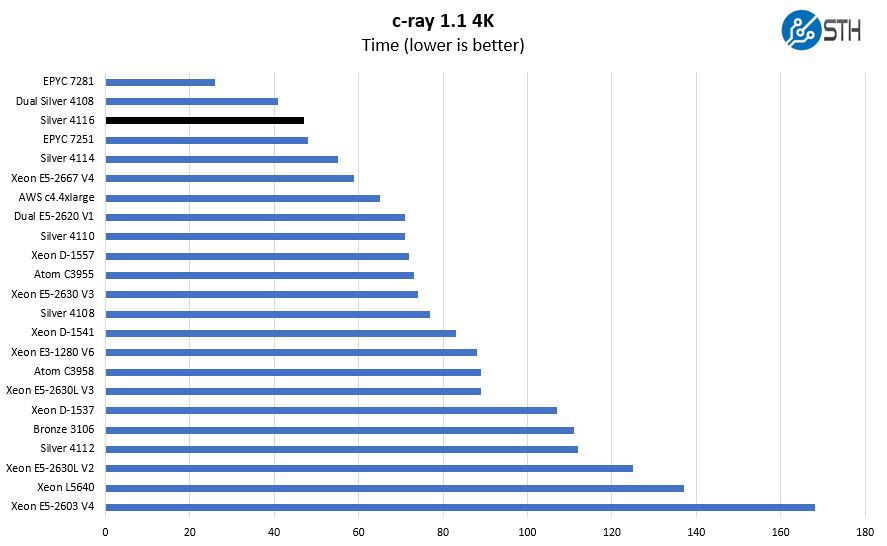

c-ray 1.1 Performance

We have been using c-ray for our performance testing for years now. It is a ray tracing benchmark that is extremely popular to show differences in processors under multi-threaded workloads.

Here the Intel Xeon Silver 4116 shows an interesting comparison point, being faster than two Intel Xeon E5-2620 V1s. For those looking to consolidate several legacy servers to a newer generation, it is now possible to transition a dual socket legacy server to a single low-power Intel Xeon Silver 4116.

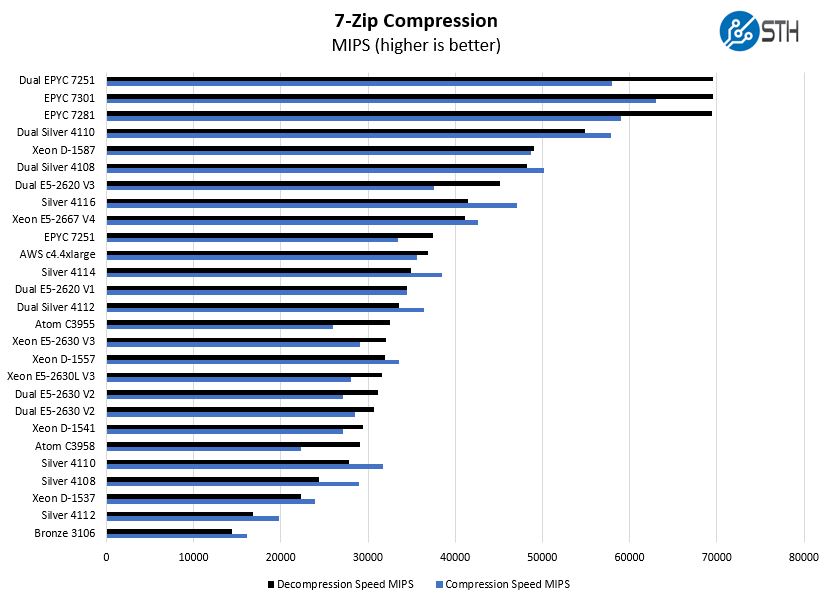

7-zip Compression Performance

7-zip is a widely used compression/ decompression program that works cross-platform. We started using the program during our early days with Windows testing. It is now part of Linux-Bench.

Here we see performance clearly ahead of the other Intel Xeon Silver offerings. We can also see the Intel Xeon Silver 4116 ahead of the AMD EPYC 7251, a 120W TDP part.

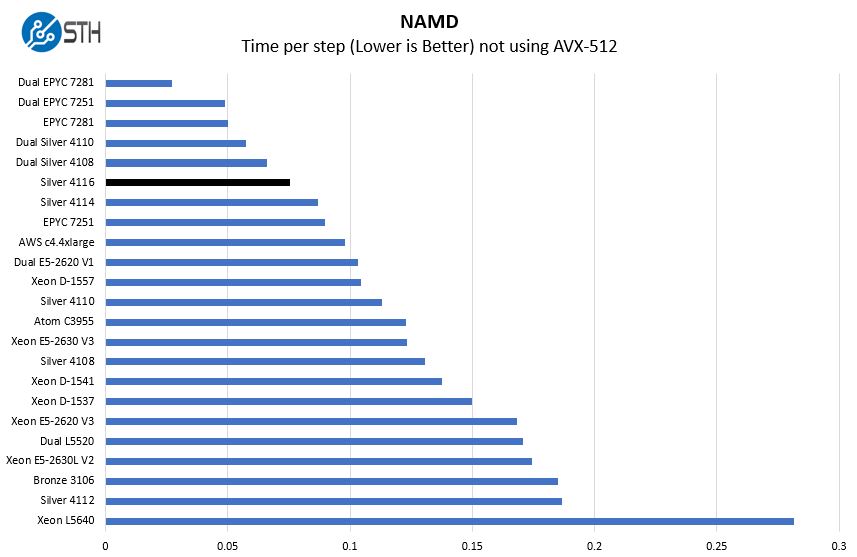

NAMD Performance

NAMD is a molecular modeling benchmark developed by the Theoretical and Computational Biophysics Group in the Beckman Institute for Advanced Science and Technology at the University of Illinois at Urbana-Champaign. More information on the benchmark can be found here. We are going to augment this with GROMACS in the next-generation Linux-Bench in the near future. With GROMACS we have been working hard to support both Intel’s Skylake AVX-512 and the AVX2-supporting AMD Zen architecture. Here are the comparison results for the legacy data set:

Here again we see strong single socket performance from the Intel Xeon Silver 4116. It falls just where we would expect it to, ahead of the other Intel Xeon Silver and Bronze CPUs.

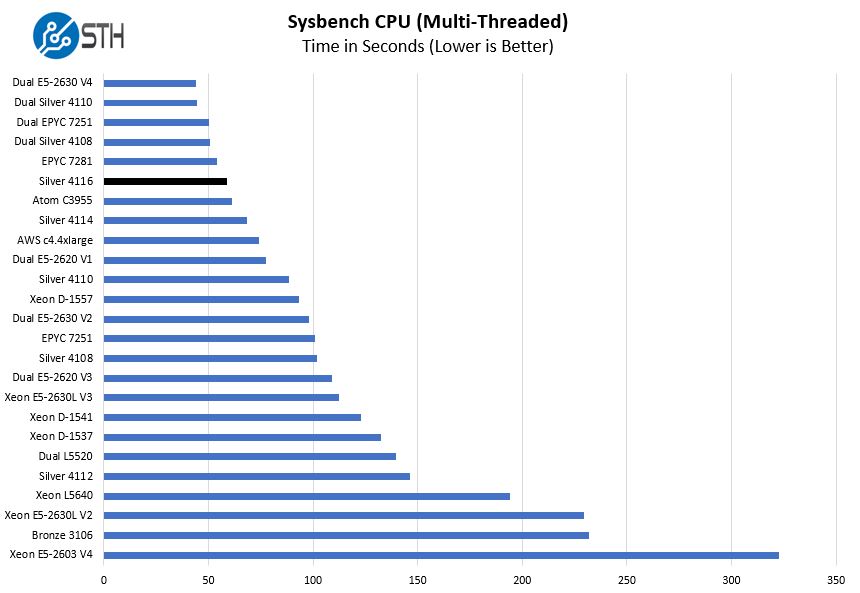

Sysbench CPU test

Sysbench is another one of those widely used Linux benchmarks. We specifically are using the CPU test, not the OLTP test that we use for some storage testing.

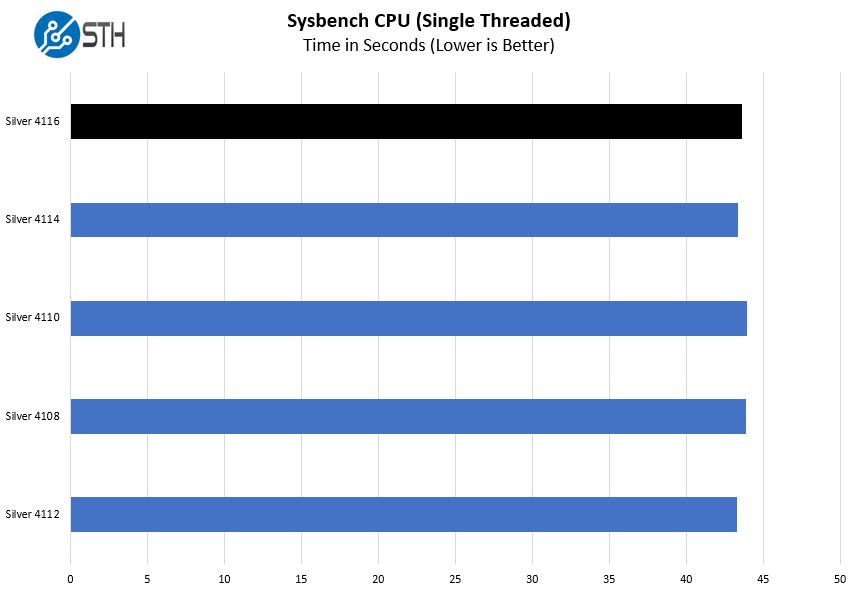

The multi-threaded test is informative, but we wanted to show a single threaded view to illustrate a more important point:

All five members of the Intel Xeon Silver family have a 3.0GHz maximum turbo clock speed. That means that the CPUs end up performing identically in single threaded tasks. This illustrates the limitation of each CPU.

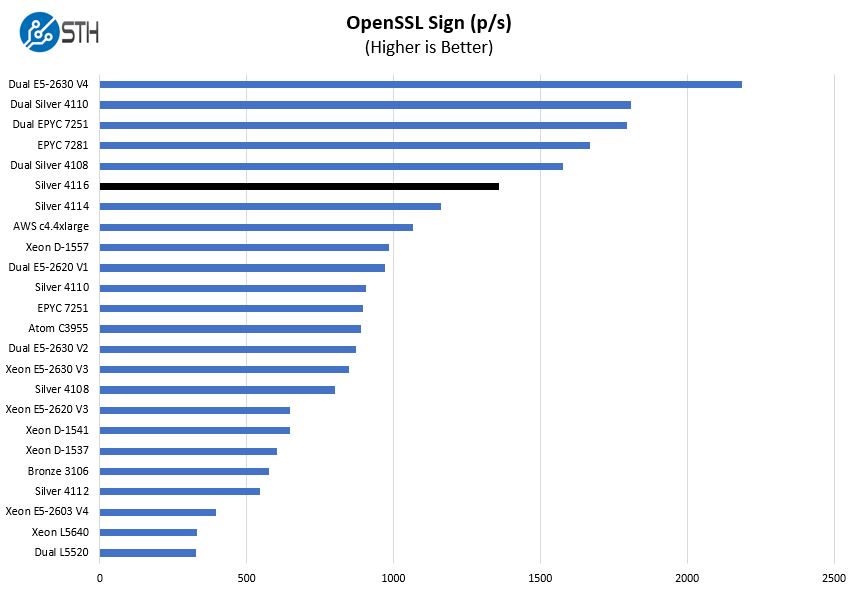

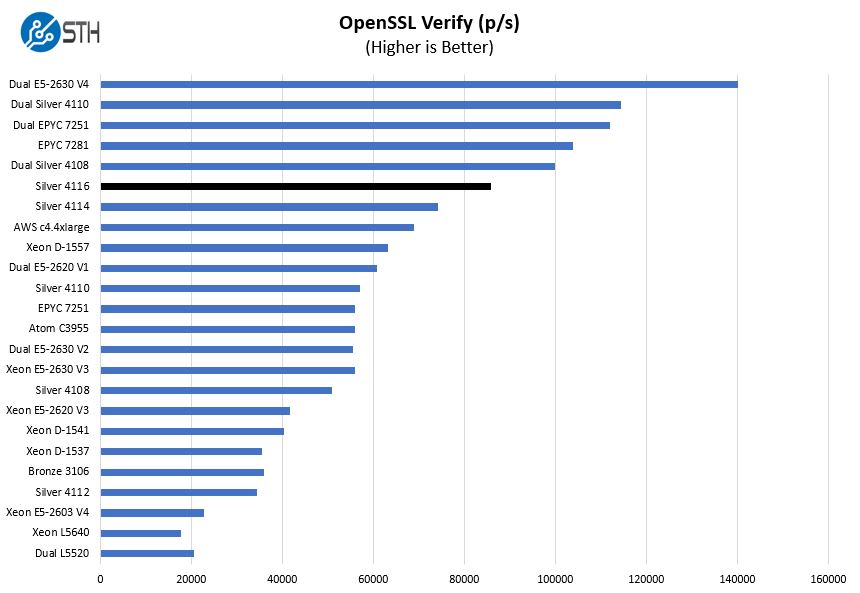

OpenSSL Performance

OpenSSL is widely used to secure communications between servers. This is an important protocol in many server stacks. We first look at our sign tests:

Here are the verify results:

Here we wanted to call attention to the Intel Xeon D comparison. The higher clock speeds and higher TDP allow the 12 core Intel Xeon Silver 4116 to outperform the Intel Xeon D-1557 by a wide margin.

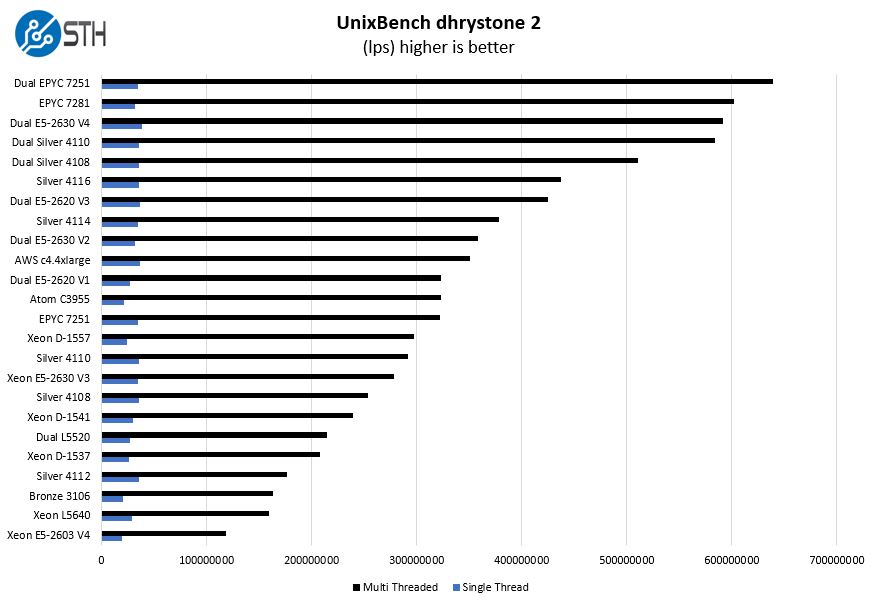

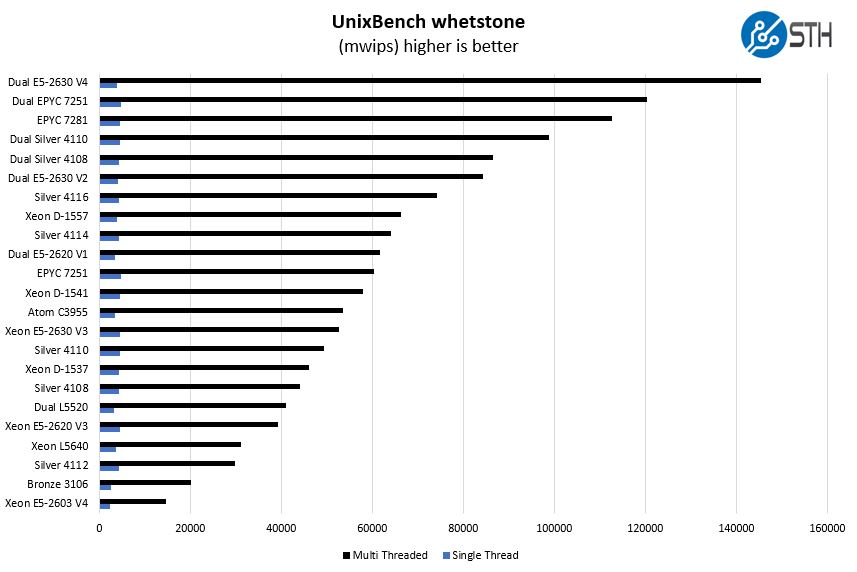

UnixBench Dhrystone 2 and Whetstone Benchmarks

One of our longest-running tests is the venerable UnixBench 5.1.3 Dhrystone 2 and Whetstone suite. The tests are certainly aging; however, we constantly get requests for them, and many angry notes when we leave them out. UnixBench is widely used so we are including it in this data set. Here are the Dhrystone 2 results:

Here are the Whetstone results:

Overall we see the impact of adding two additional cores to the mix as we have a nice curve for all of the Xeon Silver line.

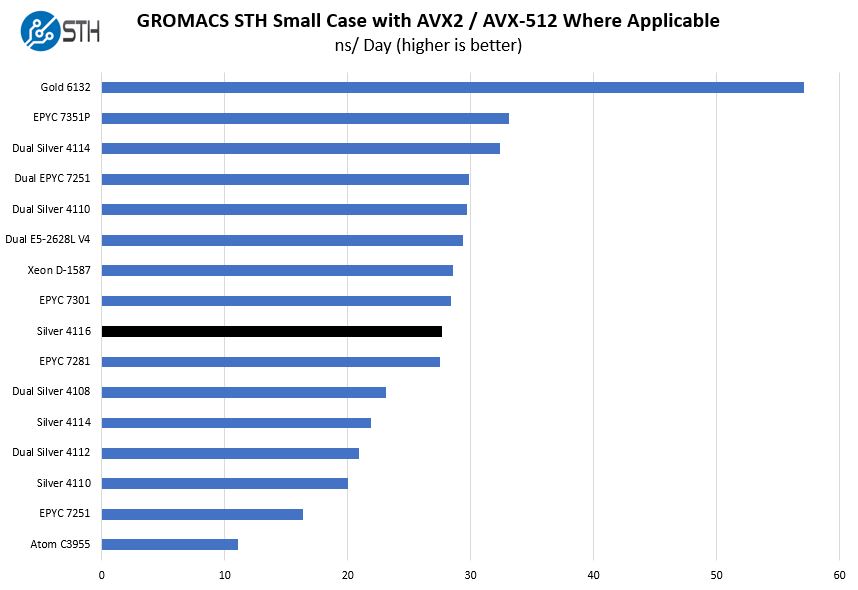

GROMACS STH Small AVX2/ AVX-512 Enabled

We have a small GROMACS molecule simulation we previewed in the first AMD EPYC 7601 Linux benchmarks piece. In Linux-Bench2 we are using a “small” test for single and dual socket capable machines. Our medium test is more appropriate for higher-end dual and quad socket machines. Our GROMACS test will use the AVX-512 and AVX2 extensions if available.

With this, we wanted to highlight an important delta. Intel is touting AVX-512 with this generation. Although the Intel Xeon Silver 4116 has the ability to execute AVX-512, the performance is not great. Unlike the Intel Xeon Gold 6100 and Platinum series, the Intel Xeon Silver series has only a single AVX-512 FMA unit. That, combined with low AVX-512 clocks, means that the Intel Xeon Gold 6100 series, which looks great with AVX-512 here, is significantly faster. If you want AVX-512 performance, just go Gold 6100 or higher in the Intel stack.
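To put the single versus dual FMA point in numbers, here is a minimal sketch of the theoretical peak double-precision throughput per core per clock cycle. It assumes each 512-bit FMA unit processes eight 64-bit values per cycle and that a fused multiply-add counts as two floating-point operations. The function name is hypothetical, and real-world results also depend on AVX-512 clock speeds and memory bandwidth, which this sketch does not model.

```python
def dp_flops_per_cycle(fma_units: int, vector_bits: int = 512) -> int:
    """Theoretical peak double-precision FLOPs per core per clock cycle."""
    lanes = vector_bits // 64      # number of 64-bit doubles per vector register
    flops_per_fma = 2              # one multiply plus one add per lane
    return fma_units * lanes * flops_per_fma


single_fma = dp_flops_per_cycle(fma_units=1)  # Xeon Silver / Gold 5100 style
dual_fma = dp_flops_per_cycle(fma_units=2)    # Xeon Gold 6100 / Platinum style

print(f"Single FMA unit: {single_fma} DP FLOPs per cycle per core")
print(f"Dual FMA units:  {dual_fma} DP FLOPs per cycle per core")
print(f"Per-clock advantage of dual FMA: {dual_fma / single_fma:.1f}x")
```

At the same clock speed and core count, the dual FMA parts have twice the theoretical AVX-512 throughput before any clock speed difference is considered.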

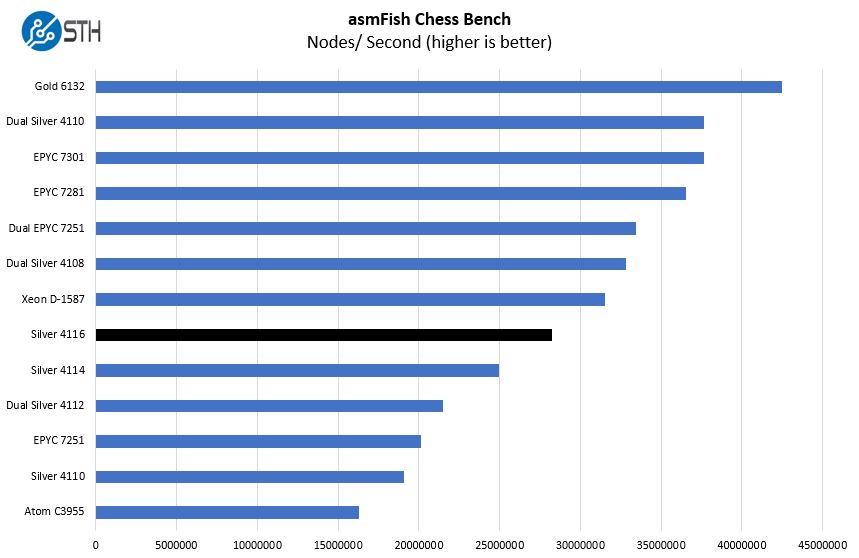

Chess Benchmarking

Chess is an interesting use case since it has almost unlimited complexity. Over the years, we have received a number of requests to bring back chess benchmarking. We have been profiling systems and are ready to start sharing results:

Here the Intel Xeon Silver 4116 has performance right where we would expect. Chess benchmarking is a well-threaded application so we can see the clear impact of higher core counts here.

A Note on Power Consumption

We normally only publish power consumption data on full systems we review. There are too many variables for this data to land in a CPU test. On the other hand, in order to get data out there, we wanted to present what we have been seeing. The Intel Xeon Silver series is highly optimized for low power consumption over performance so it is a big part of the story. The Silver 4116 was using up to 134W in our test with 10GBase-T links active, a SAS 3008 12Gbps controller and a number of drives / DIMMs in the system.

Given the overall system environment, this seems more like an 85W TDP rating CPU than the Silver 4112 with the same 85W TDP rating. Realistically, if you were to use SFP+ 10Gb networking and PCH SATA ports instead of a SAS controller you could easily get a 12 core / 24 thread Xeon Silver 4116 in a low-density 1A 120V colocation facility and not worry about power consumption.
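For readers sizing a colocation power budget, here is a minimal sketch of the arithmetic using the 134W peak figure from our test system. It assumes current is simply watts divided by volts, and it ignores power factor and any derating rule a facility may apply. The function names are hypothetical.

```python
def amps_drawn(watts: float, volts: float = 120.0) -> float:
    """Approximate current draw, ignoring power factor."""
    return watts / volts


def headroom_watts(watts: float, amp_limit: float = 1.0, volts: float = 120.0) -> float:
    """Watts left under the circuit limit; negative means over budget."""
    return amp_limit * volts - watts


measured_peak = 134.0  # peak system draw reported above, in watts

print(f"Draw at 120V: {amps_drawn(measured_peak):.2f}A")
print(f"Headroom under 1A: {headroom_watts(measured_peak):.0f}W")
```

The test system as configured lands slightly over a 1A 120V budget at peak, which is why the leaner SFP+ and PCH SATA configuration matters for that kind of deployment.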

Market Positioning

The Intel Xeon Silver 4116 has a few competitive vectors. First, it competes with other single and dual socket Intel Xeon offerings. It also has to contend with the AMD EPYC single socket platform.

Intel Xeon Silver 4116 v. Intel Xeon Silver and Gold 5100

If we were to describe the Intel Xeon Silver 4116 market positioning, it is a clear bump up in performance from the Intel Xeon Silver 4114 and can be competitive in some workloads with dual Xeon Silver 4108/ 4110 CPUs. If you need more memory capacity, two Silver 4110s would be our choice over the Silver 4116, and the power consumption of the dual Silver 4108/ 4110 setup is fairly low.

The next steps up in the Intel stack are the Intel Xeon Gold 5118 and 5120. The Intel Xeon Gold 5100 series is limited to the same single FMA AVX-512 and DDR4-2400 as the Intel Xeon Silver. The main differentiation is higher TDP and clock speed, along with price. The Xeon Gold 5118 has higher clock speeds but is still limited to 12 cores / 24 threads, and it carries a relatively small premium of approximately $270 over the Intel Xeon Silver 4116. The Intel Xeon Gold 5120 has an additional two cores for 14 cores / 28 threads for an extra $550. At this juncture, it is hard for us to recommend the step up to the Xeon Gold 5100 series if you are looking for performance. One of the primary drivers for this is that AMD is offering performance oriented options in this price segment where Intel is focused on power consumption.
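The pricing step-ups can be put on a per-core basis with a quick calculation. This is a minimal sketch that assumes the approximate figures quoted above: roughly $1000 for the Silver 4116, a premium of about $270 for the Gold 5118, and an extra $550 for the Gold 5120. Street prices vary, so treat the outputs as illustrative only.

```python
# Approximate prices derived from the figures in the text (USD); assumptions.
SILVER_4116_PRICE = 1000

cpus = {
    "Xeon Silver 4116": {"price": SILVER_4116_PRICE, "cores": 12},
    "Xeon Gold 5118": {"price": SILVER_4116_PRICE + 270, "cores": 12},
    "Xeon Gold 5120": {"price": SILVER_4116_PRICE + 550, "cores": 14},
}


def price_per_core(price: float, cores: int) -> float:
    """Approximate dollars paid per physical core."""
    return price / cores


for name, spec in cpus.items():
    cost = price_per_core(spec["price"], spec["cores"])
    print(f"{name}: ~${cost:.0f} per core")
```

On these assumed prices, both Gold 5100 parts cost noticeably more per core than the Silver 4116, so the premium is paying for clock speed and TDP rather than cheaper cores.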

Intel Xeon Silver 4116 v. AMD EPYC

At the $1000 Intel Xeon Silver 4116 price point, one can instead opt for performance and get an AMD EPYC platform. Here there are a number of options, albeit with higher power consumption, that are performance oriented. For example, for ~25% less, one can get an AMD EPYC 7351P which we showed has vastly superior performance to the 4116 due to its 16 cores / 32 threads and 64MB L3 cache. Beyond this, if you are looking for expansion, that AMD EPYC 7351P single socket platform has more PCIe lanes and 8x DDR4-2666 channels versus the Xeon Silver 6x DDR4-2400.

If you are optimizing for low power, the question would be whether you need the 12 cores of the Intel Xeon Silver 4116. If you are optimizing for performance and expandability, Intel needs a solution other than the Intel Xeon Silver 4116.
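The memory channel difference can be quantified as theoretical peak bandwidth. This is a minimal sketch assuming each DDR4 channel is 64 bits wide (8 bytes per transfer) and that every channel is populated and running at its rated speed. Sustained bandwidth in real workloads will be lower, and the function name is hypothetical.

```python
BYTES_PER_TRANSFER = 8  # a DDR4 channel has a 64-bit data bus


def peak_bandwidth_gbs(channels: int, mega_transfers_per_sec: int) -> float:
    """Theoretical peak memory bandwidth per socket in GB/s (decimal)."""
    return channels * mega_transfers_per_sec * BYTES_PER_TRANSFER / 1000.0


xeon_silver = peak_bandwidth_gbs(channels=6, mega_transfers_per_sec=2400)
epyc_7351p = peak_bandwidth_gbs(channels=8, mega_transfers_per_sec=2666)

print(f"Xeon Silver, 6x DDR4-2400: {xeon_silver:.1f} GB/s")
print(f"EPYC 7351P, 8x DDR4-2666:  {epyc_7351p:.1f} GB/s")
print(f"EPYC advantage: {epyc_7351p / xeon_silver:.2f}x")
```

On paper, the EPYC single socket platform has roughly 48% more peak memory bandwidth per socket than the Xeon Silver platform.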

Final Words

The single Intel Xeon Silver 4116 is an impressive chip, and is probably the highest-end chip you can put in 1A 120V racks. The real question one needs to answer when purchasing the 4116 is whether they would be better served with dual Silver 4108 or Silver 4110 chips instead as those options are highly price competitive and offer more performance and platform level scalability per machine for the same CPU price. Moving up the stack to the Intel Xeon Gold 5100 series exacts a high premium for marginal gains.

The Gold 5115 has the same TDP and almost the same price with a slightly different balance of clock and cores – I’m surprised you didn’t compare to it in this article. Any thoughts, or are they just unavailable to date, and will be the subject of a future review?

@T R great question and I appreciate your comment. Mentioned a bit of this in the article, but we have seen a fairly amazing lack of requests for Gold 5100 series numbers.

On the Xeon Gold 5115, the street pricing right now is close to $1400. Paying that big of a premium for a part in which we have had zero interest at STH is a bit much. Your request is the first Gold 5115 request we have logged across STH and DemoEval.

Since there are somewhere around 90 unique performance 1P and 2P combinations for EPYC and Skylake we have been prioritizing based on demand. That may seem strange, but we have a backlog of test results and a limited budget/ time to work with.

Totally understandable. At least based on retail pricing, it seems like the individual who would find the 4116 attractive (particularly due to power consumption) would also consider the 5115. But it seems that this whole segment of the Xeon product line has a market positioning problem.

It looks like the Xeon-SP is only interesting when you need a 4/8 socket (Platinum) lineup or when you like to pay a lot for low performance. Funny part is that Intel prices for i9 are a lot lower in Europe than in the US and for Threadripper it’s the opposite (still less expensive).

I don’t quite see the point of the Gold 5xxx series, only real advantage vs. Silver is 4 Socket capability. I’d rather have fewer sockets in a server (1 or 2 at the most) but faster memory is a huge plus – this is mainly for small database nodes, which the Broadwell-E were very well suited for.

Very hard to buy the Skylake-SP lineup without feeling screwed and I think at least in theory AMD is not really an option (limited memory / IO bandwidth due to NUMA and Infinity Fabric), however this I should probably benchmark.

@Nils

“I think at least in theory AMD is not really an option (limited memory / IO bandwidth due to NUMA and Infinity Fabric), however this I should probably benchmark.”

EPYC has octa-channel DDR4-2666 with up to 2048GB/socket vs. 1536GB of quad-channel DDR4-2400 per socket for Broadwell. Broadwell’s dual ring bus is so good that Intel abandoned it. Maybe you should visit a doctor.

@Misha:

I have yet to see benchmarks for database workloads on EPYC vs. Broadwell vs. Skylake, everything focused on raw CPU speed where most of the data is local which isn’t really how many real world workloads turn out to be. The ring bus has served Intel well for some time, and it’s very likely superior to Compute Clusters and MCP. As I said, benchmarks are needed.

@Nils

STH, Anandtech, phoronix, http://openbenchmarking.org/.

Ringbus was okay, double ringbus was really slow.

You may remember that Intel QPI on Broadwell was so slow that lots of companies put in InfiniBand cards to get faster communication between the sockets.

There are some nice articles on, among others, STH about the EPYC structure.

https://www.servethehome.com/amd-epyc-infinity-fabric-update-mcm-cost-savings/

@Misha

I usually read STH and Phoronix daily, but I have yet to see a comprehensive database benchmark – these would usually be done by a seasoned DBA. Let’s face it, this is not an area a lot of people concern themselves with, most clients I deal with think all their scaling needs can be solved by “The Cloud” ;)

I have yet to hear of people actually using InfiniBand for inter-socket communication. I believe the idea is to use an InfiniBand card per CPU to keep strain off the QPI, basically partitioning a 2 socket server into 2 separate systems.

I guess I’ll buy myself an EPYC and a Skylake-SP system and see for myself.