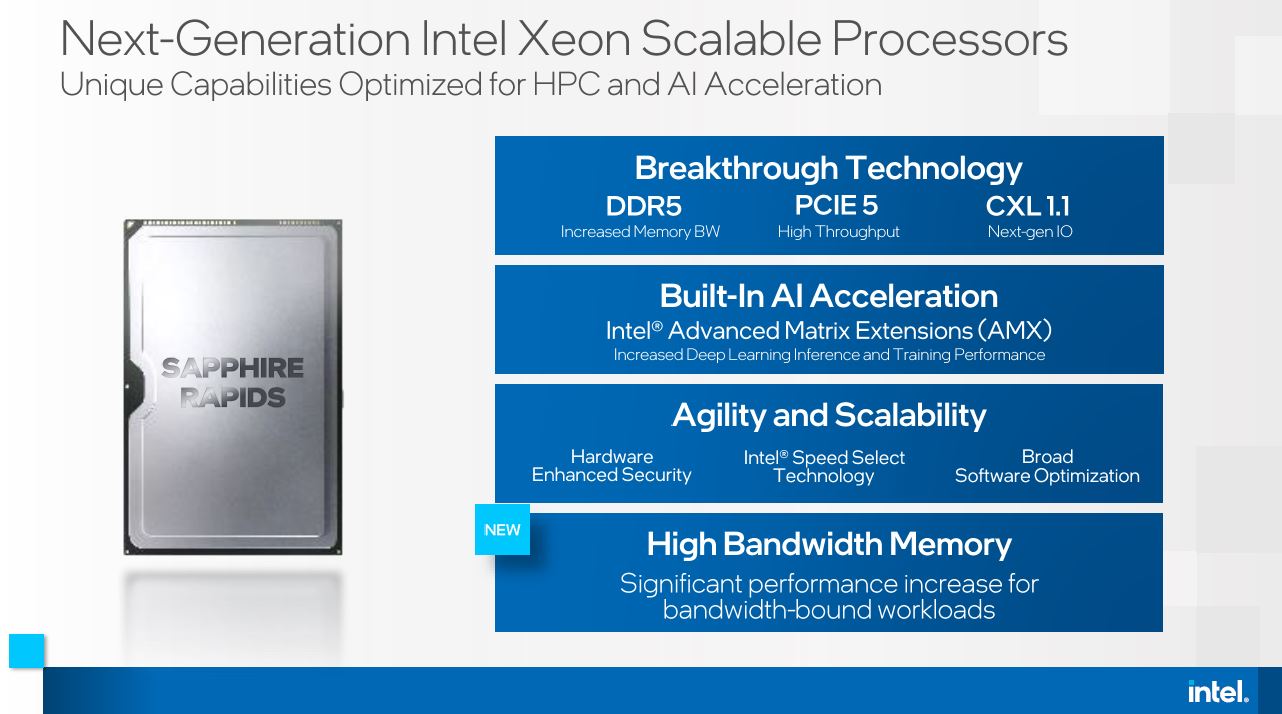

At ISC21, Intel made a number of new announcements. First that the Sapphire Rapids generation will have HBM support. Second, the new Intel Xe HPC GPU will come in the OAM form factor. Both of these will have been expected by longtime STH readers.

Intel Xeon Sapphire Rapids to Support HBM Onboard

After the 2020 3rd Generation Intel Xeon Scalable Cooper Lake and 2021 Intel Xeon Ice Lake Edition will come the Sapphire Rapids generation.

Recently, Intel said that it will have enhancements including CXL 1.1 and PCIe Gen5 along with Intel Advanced Matrix Extensions or AMX. Intel is pushing AMX for deep learning inference and training adding more matrix capabilities to traditional CPUs.

Something that was notably absent from the ISC21 slide was a lack of mention of 3rd Gen Intel Optane DC Persistent Memory. Intel has been very consistent for years mentioning PMem on its marketing slides for new generations of processors. On a slide discussing DDR5, HBM, and CXL we would have expected to see that on this slide. While Intel told us PMem 200 is still on track, omissions like these plus Micron Exiting 3D XPoint may make some in the industry nervous.

At ISC21, Intel finally disclosed publicly that Sapphire Rapids will support HBM onboard. This will act as a large and close cache to the CPU. We covered this trend in a recent STH video:

You can also read more about the overall industry trend here. The short version is that larger CPUs will put more pressure on memory subsystems and so having larger memory footprints closer to the compute complexes will be important.

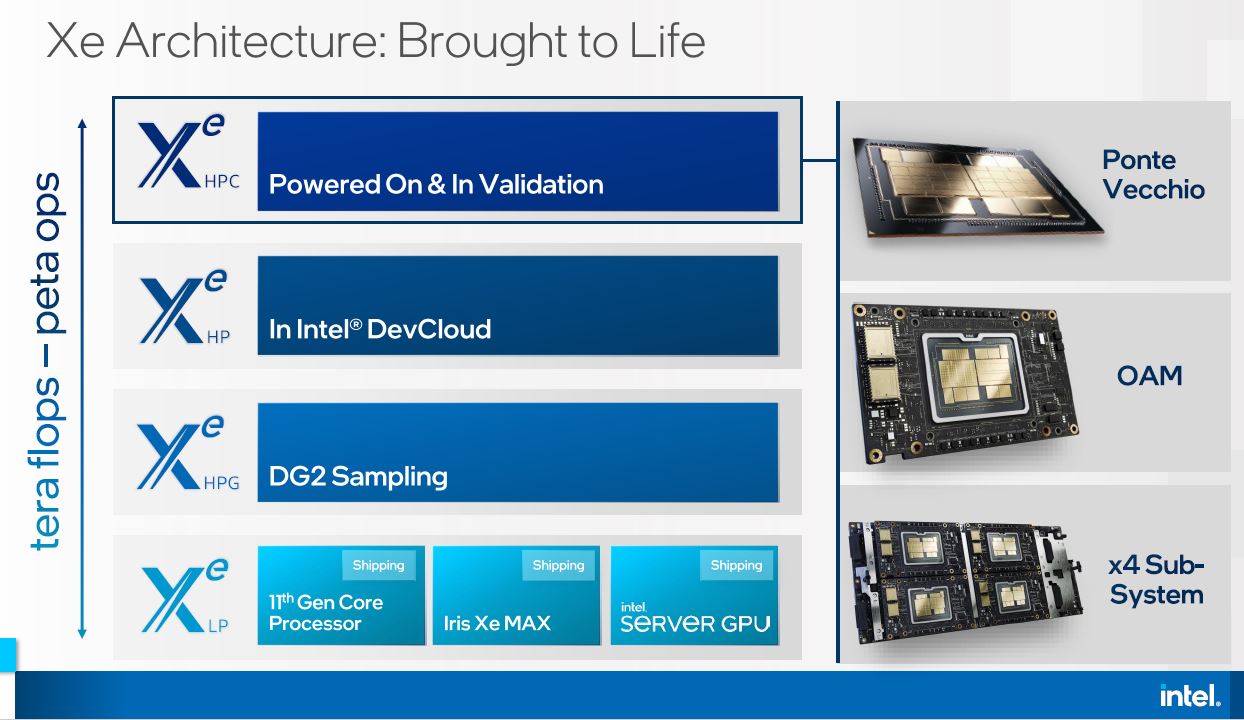

Intel Xe HPC GPU Adopts OAM

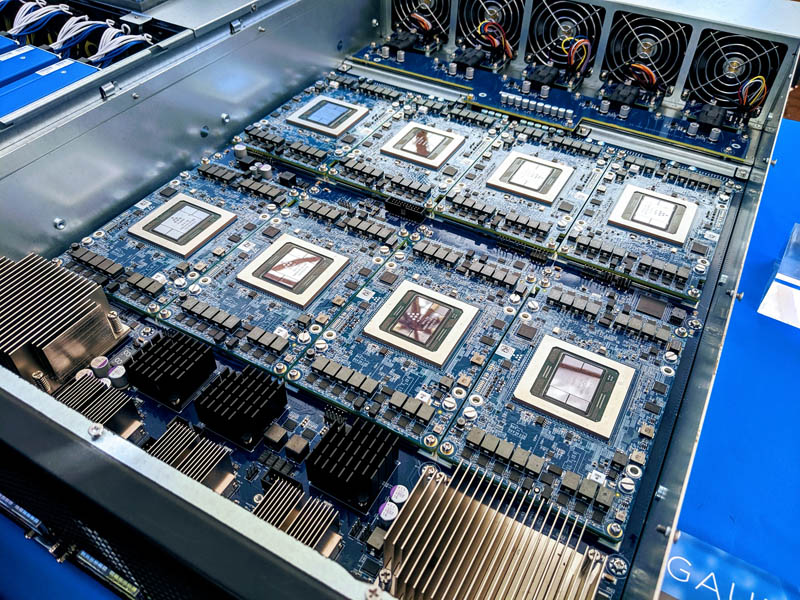

This one makes a lot of sense. We previously looked at the Facebook OCP Accelerator Module OAM Launched and Facebook Zion Accelerator Platform for OAM. Facebook and other hyper-scale customers have been pushing OAM to free the industry from NVIDIA’s SXM platforms.

If Intel using OAM sounds familiar, it should. The now canceled Intel Nervana NNP-T was slated for the OAM form factor.

Also, Intel acquired Habana Labs to replace Nervana as its AI architecture, largely because Facebook chose Habana over Intel Nervana. Habana Labs Gaudi utilized the OAM form factor as well. You can learn more about that at: Favored at Facebook Habana Labs Eyes AI Training and Inferencing.

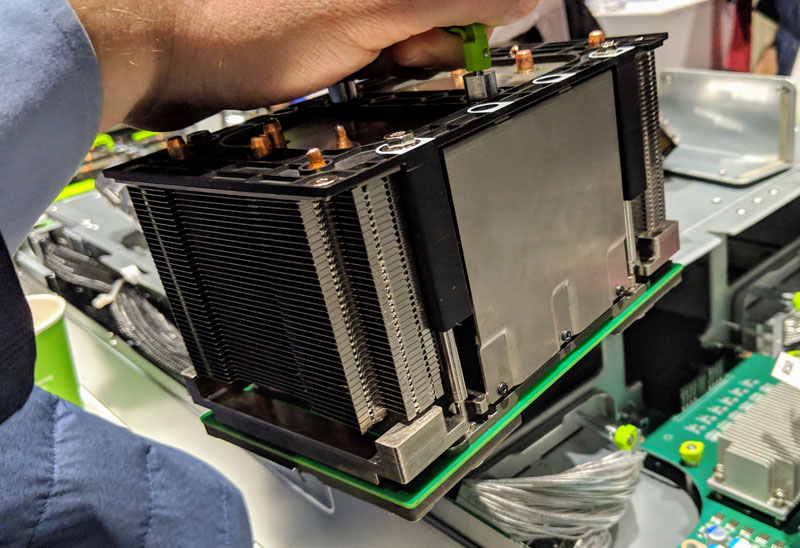

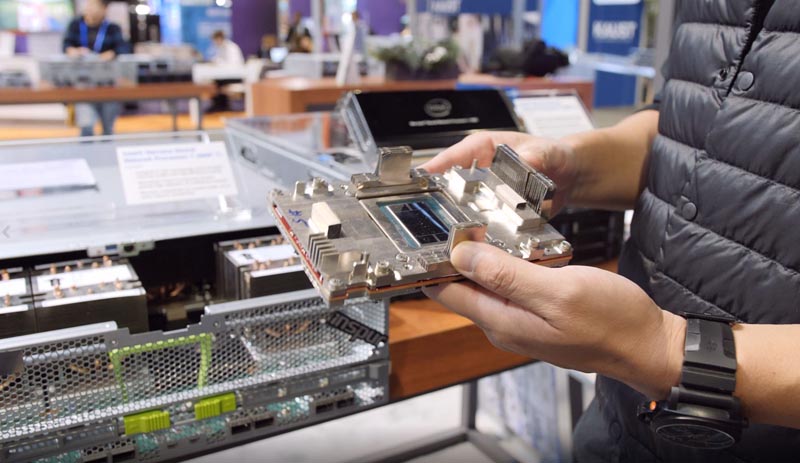

At ISC21, Intel said its new Ponte Vecchio Xe HPC GPU will utilize OAM as well.

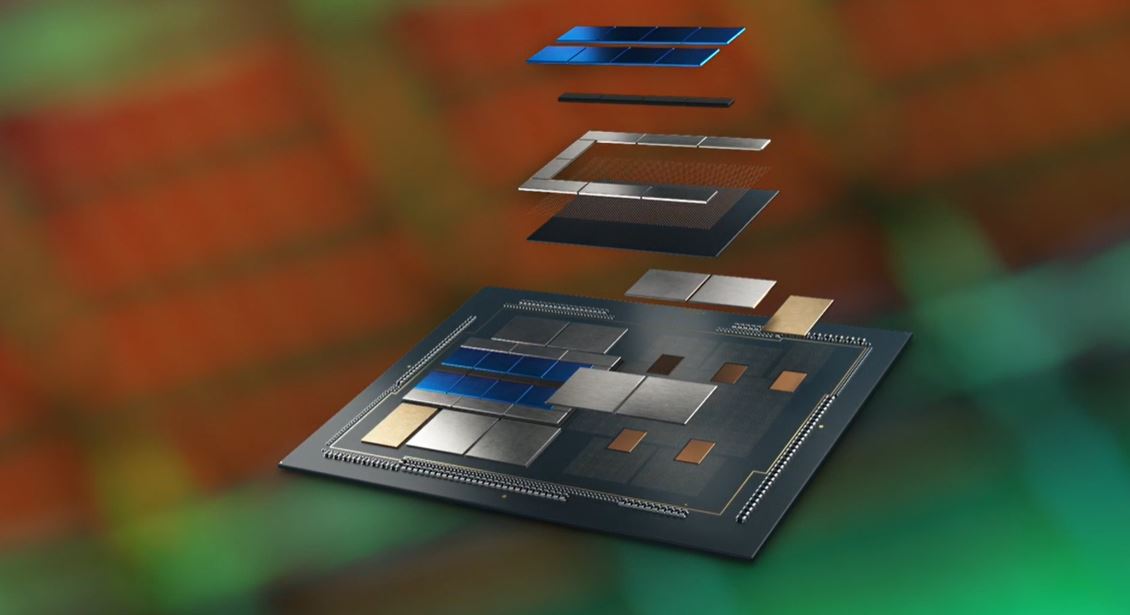

Ponte Vecchio is very exciting as it is a massive step forward for packaging. We have a bit more on that in Intel Xe HPC Ponte Vecchio Shows Next-Gen Packaging Direction.

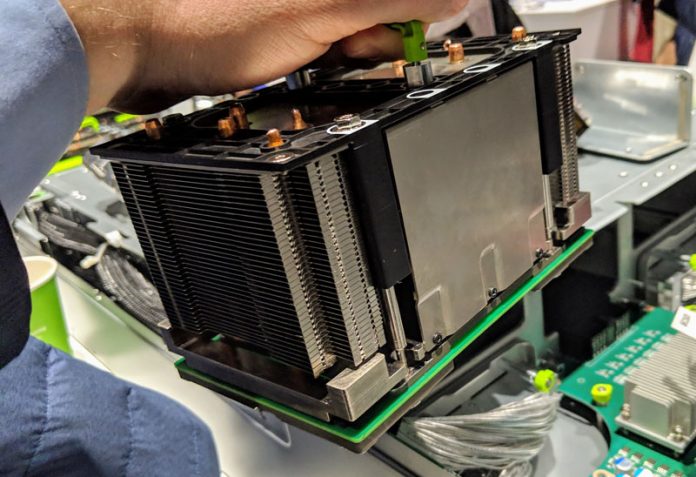

Intel is showing this both in its OAM form factor but also with a four-module subsystem. This is a big deal given the power and thermals of these next-gen accelerators. NVIDIA is already out with 4x GPU subsystems in its Redstone platform and it appears as though Intel is following NVIDIA’s lead here.

Final Words

Neither announcement should be a big surprise for STH readers. We already foreshadowed the transition to the GB era of CPUs (although the Sapphire Rapids detail was still embargoed, that is why the Arm HBM inclusion.) The omission of Intel PMem on that slide we hope Intel clarifies soon since there is an open question in the market.

On the OAM side, it should be little surprise as well that Intel is using the OAM form factor it had input on for its new accelerators. Ponte Vecchio should be a very cool architecture. Stay tuned on STH over the next few weeks as we go into the NVIDIA A100 PCIe and SXM modules including the 8x GPU HGX A100 “DELTA” and 4x GPU “Redstone” platforms. We have some awesome system reviews coming.

CPU and HBM package hugely increase server compute density for workloads that will do with out the need of DDR5. But in 2022?

ARM still has the upper hand though in terms of cost / pref.

I wonder how will this stack up against AMD’s capability to triple the L3 cache by, well, stacking. I would guess AMD’s it’s better for most workloads, especially as the number of cores goes up, because there’s less traffic that leaves the CCD. Although we have to account for the increased cache coherency traffic. Interesting times ahead!