Welcome to the next generation of Intel’s server processors, the aptly named Intel Xeon E5-2600 V4 series formerly code named “Broadwell”. We have been working over the past few weeks to get you a number of benchmarks for today’s announcement and will have those in separate pieces. We have had at least 6x dual processor E5-2600 V4 series systems running for the past few weeks non-stop to get numbers out in time. In terms of scale of effort, we collected data from close to 10,000 individual benchmark runs between the new systems and other systems we were using as comparisons. In this article, we are going to give an overview of the Intel Xeon E5-2600 V4 series and discuss the high-level architectural implications of the new processors. We are also attending Intel’s Cloud Day launch event in San Francisco and will bring any additional coverage from that event. Luckily we have had several weeks to prepare for this launch.

The TL;DR Intel Xeon E5-2600 V4 “Broadwell-EP” Launch Notes

For those looking for the extremely condensed version of the new features, here it is:

- Delivers a broad array of new capabilities across many market segments

- New (for the Intel Xeon E5-2600 series) Broadwell microarchitecture

- Built on 14nm process technology (versus 22nm for the V3 parts)

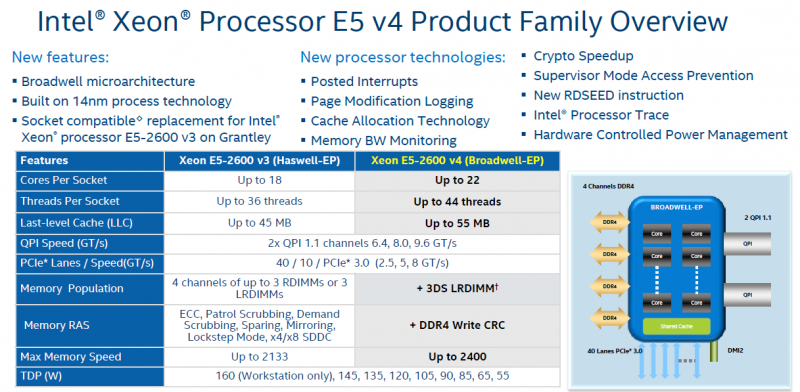

- Up to 22% more cores per socket. (22 cores/ 44 threads max per socket v. 18 cores/ 36 threads)

- Socket compatible replacement for Intel Xeon processor E5 v3 on Grantley PCH: no new PCH is required, only a BIOS upgrade

- New security improvements (New/enhanced instructions, RDSEED, SMAP)

- New and enhanced Quality-of-Service capabilities (CMT, CAT, MBM)

- New Virtualization capabilities (Posted Int, Page Modification Logging)

- New Power Management capabilities (per-core AVX, HPM)

- And More (TSX, Intel Processor Trace, DDR4 Write-CRC)

We are now going to look at many of the features behind these new CPUs.

Introducing the Intel Xeon E5-2600 V4 SKUs

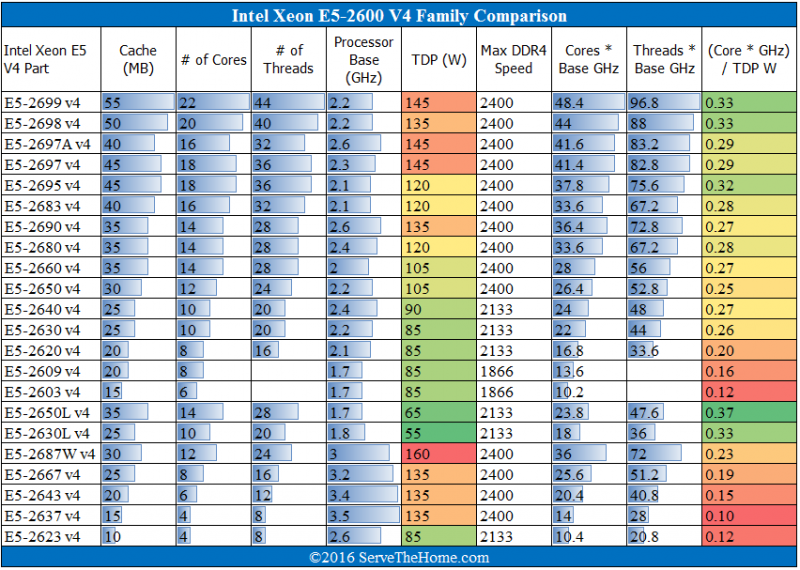

With today’s launch, Intel is releasing 22 SKUs. Intel does tend to make some modifications to SKU line-ups up until launch so please forgive this chart if we omitted or added something that is not on ARK. There are actually five embedded/ extended life SKUs that are not in this list that we will discuss in a separate piece. We added in our STH comparison colors and bars for an easy comparison among the deluge of new processors.

If you are wondering where Turbo Boost frequencies are, we have that information but instead of just publishing the maximum figures, we are going to publish them separately on a by core count basis. For example, the Intel Xeon E5-2699 V4 can operate at 2.2GHz with a 600MHz Turbo Boost (power and thermals permitting) with all 22 cores running (non-AVX) but up to 3.6GHz if a single core is being utilized. We added the efficiency figure because the power draw of a pure compute system will be heavily influenced by the processors. As one can see, in general the low power parts and the higher core count parts get you more cores * GHz per TDP Watt specified. Cores * GHz is a very crude metric but it does give a good back of the envelope relative comparison point for multi-threaded CPU performance. We also see memory speeds between DDR4-1866 and DDR4-2400 now in the lineup. There is a good reason that we published a 2133MHz versus 2400MHz DDR4 RDIMM benchmark earlier this week.
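For readers who want to reproduce the efficiency figure on their own shortlist, here is a minimal Python sketch of the cores * GHz per TDP Watt calculation. The `Sku` class and the function name are our own illustrative choices. The 22 cores and 2.2GHz for the E5-2699 V4 come from the text above, while the 145W TDP and the entire second entry are assumptions used only to show the arithmetic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sku:
    """One processor entry. Field names are illustrative, not Intel's."""
    name: str
    cores: int
    base_ghz: float
    tdp_watts: float


def cores_ghz_per_watt(sku: Sku) -> float:
    """Crude efficiency figure: (cores * base GHz) / TDP watts."""
    return sku.cores * sku.base_ghz / sku.tdp_watts


# Assumed inputs: the TDP values and the second part are placeholders.
skus = [
    Sku("E5-2699 V4", cores=22, base_ghz=2.2, tdp_watts=145.0),
    Sku("hypothetical 10-core low power part", cores=10, base_ghz=1.8, tdp_watts=55.0),
]

# Rank the parts from most to least cores * GHz per watt.
for sku in sorted(skus, key=cores_ghz_per_watt, reverse=True):
    print(f"{sku.name}: {cores_ghz_per_watt(sku):.3f} cores*GHz/W")
```

As with the chart, this ignores Turbo Boost, AVX clocks and memory speed, so treat it as a ranking aid rather than a performance prediction.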

Diving into the Intel Xeon E5-2600 V4 Family

We have the official Intel slides explaining the Intel Xeon E5-2600 V4 family so we are going to let them tell most of the story and provide some commentary along the way based on our in-person briefing. The Intel Xeon E5-2600 V4 is, at its heart, a transition to the 14nm process node and Broadwell architecture. This is much like the transition Intel desktop and mobile CPUs went through before the current Skylake generation. As a result, we have the same underlying platforms and most vendors will enable the new chips via a BIOS upgrade to existing systems. With Skylake-EP we expect to see a major platform and architecture overhaul. However, that is just educated speculation since those products are still some time from release.

The bottom line here is that if you are buying a server starting tomorrow you are going to see deep discounts on the Intel Xeon E5-2600 V3 processors to move inventory. A short time later there will be little reason to purchase a new V3 system over a V4 system. The V4 is a very similar architecture and is an incremental advancement on just about every front. On the other hand, unless you have a specific feature need that Broadwell solves, for example working TSX, there is not a lot that will make you upgrade a V3 system to V4 (or likely a V2 system, for that matter).

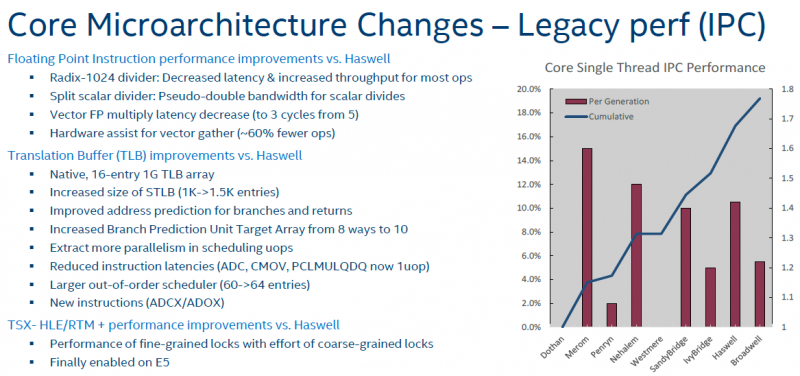

As one can see, Intel is estimating a roughly 6% overall IPC improvement over Haswell-EP. Chips like the Intel Xeon E5-2699 have base clocks that are 100MHz (about 4.5%) faster on the V3 parts, so even with the gains in IPC, some applications will perform very similarly on both generations.
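To see why the two generations can land so close together, here is a back of the envelope Python sketch. It assumes per-core performance scales linearly with IPC times base clock, which ignores Turbo Boost, memory and core count. The 2.2GHz V4 base clock is from earlier in this article, the 2.3GHz V3 figure follows from the 100MHz difference, and the function name is ours.

```python
def relative_per_core_performance(ipc_gain: float, new_ghz: float, old_ghz: float) -> float:
    """Estimate new/old per-core performance as (1 + IPC gain) * clock ratio."""
    return (1.0 + ipc_gain) * (new_ghz / old_ghz)


# ~6% IPC gain, E5-2699 V4 at 2.2GHz base versus E5-2699 V3 at 2.3GHz base.
ratio = relative_per_core_performance(ipc_gain=0.06, new_ghz=2.2, old_ghz=2.3)
print(f"V4 versus V3 per core at base clock: {ratio:.3f}x")
```

Under these assumptions the per-core advantage at base clock shrinks to roughly 1.4%, which is why the extra cores matter more than the IPC gain for the top-bin part.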

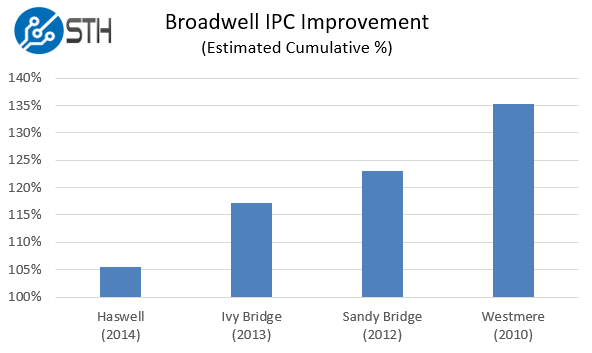

In Intel’s IPC improvement graph we see a staggering >75% IPC improvement. Then we look at the starting point and it is an 11 to 12 year old Dothan system that predates features like dual core processors. We decided to go the extra step and produce something that is more useful: a comparison based on recent architectures. We took the data from the above graph (estimated since we did not have the base data) and made a relative performance graph for each architecture:

As you can see, if you are still utilizing a 2010 vintage Westmere-EP server, you get about 35% more IPC from Broadwell. If you want evidence of this, you can see our Intel Xeon D-1528 benchmarks where we compared a Broadwell 6C/ 12T Xeon D to a Westmere-EP 6C/ 12T part, and the Broadwell was much faster. On the other hand, with only a 5-6% gain in single threaded IPC, the upgrade from Haswell looks less tantalizing. Sandy Bridge-EP had a maximum of 8 cores / 16 threads, so moving to the top end of Broadwell-EP now gives you 275% of the core count and over 20% IPC gains per core, which is very compelling.
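The Sandy Bridge-EP comparison can be turned into a rough per-socket estimate. This Python sketch assumes equal clocks and perfect scaling across cores, neither of which holds in practice, so the result is an upper bound. The function name is our own.

```python
def socket_throughput_ratio(new_cores: int, old_cores: int, ipc_gain: float) -> float:
    """Estimate new/old socket throughput as core ratio * (1 + IPC gain)."""
    return (new_cores / old_cores) * (1.0 + ipc_gain)


# 22-core Broadwell-EP versus 8-core Sandy Bridge-EP with a 20% IPC gain.
ratio = socket_throughput_ratio(new_cores=22, old_cores=8, ipc_gain=0.20)
print(f"Estimated socket throughput: {ratio:.2f}x")
```

The core count alone contributes 2.75x and the IPC gain lifts the estimate to about 3.3x, before accounting for clock speed differences or workloads that do not scale with cores.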

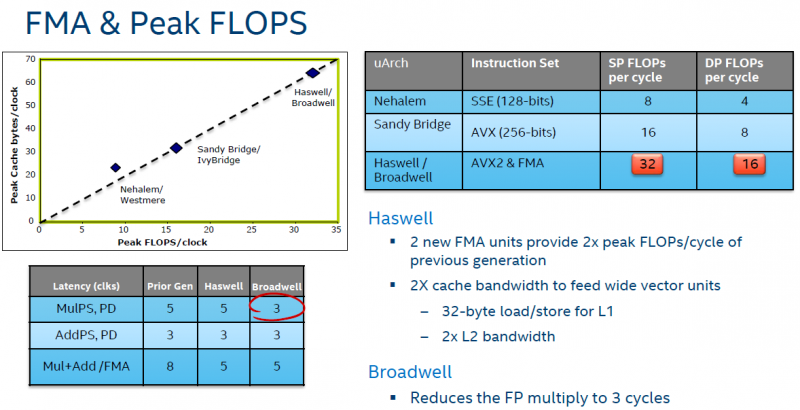

Now to get extremely technical. Haswell brought major floating point improvements versus previous versions but Broadwell lowers the latency for those operations.

Likewise, Intel did work on dividers to get some additional IPC improvements.

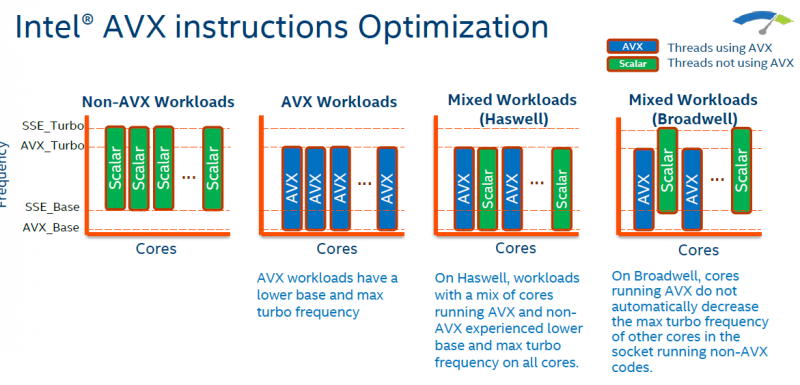

In terms of AVX and Turbo Boost, with Broadwell one can have different Turbo clocks for AVX and non-AVX workloads. That means that if one core is running AVX workloads, that core gets limited to the AVX turbo cap but the rest of the CPU can still run at standard turbo clocks (given power and thermal constraints). With Haswell, if one core was running AVX, every processor core (up to 17 others) would be limited to the AVX turbo frequencies. Why does this matter? Imagine you have an EC2 instance. On previous architectures, if someone was running an AVX workload on your CPU, the entire processor would be clock frequency limited.
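The difference between the two policies is easy to model. The Python sketch below is a simplified illustration, not a description of Intel's actual power control logic: real turbo bins also depend on the number of active cores, power and thermals. The 2.8GHz and 2.4GHz values and the function name are hypothetical.

```python
from typing import List


def core_turbo_limits(
    runs_avx: List[bool],
    turbo_ghz: float,
    avx_turbo_ghz: float,
    per_core_avx: bool,
) -> List[float]:
    """Return the turbo ceiling for each core under one of two policies.

    per_core_avx=False: one AVX core caps the whole package (Haswell-style).
    per_core_avx=True: only the cores running AVX are capped (Broadwell-style).
    """
    if per_core_avx:
        return [avx_turbo_ghz if avx else turbo_ghz for avx in runs_avx]
    cap = avx_turbo_ghz if any(runs_avx) else turbo_ghz
    return [cap] * len(runs_avx)


# Four cores, only the first one runs an AVX workload (hypothetical clocks).
cores = [True, False, False, False]
package_wide = core_turbo_limits(cores, 2.8, 2.4, per_core_avx=False)
per_core = core_turbo_limits(cores, 2.8, 2.4, per_core_avx=True)
print("package-wide AVX cap:", package_wide)
print("per-core AVX cap:    ", per_core)
```

In the package-wide case all four cores are held to the AVX ceiling, while in the per-core case the three non-AVX cores keep the standard turbo ceiling, which is the noisy neighbor benefit described above.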

Broadwell brings an incremental improvement in security features, which we will discuss shortly.

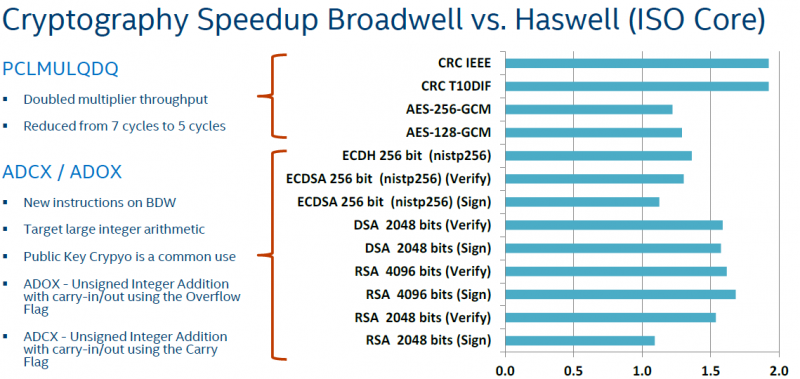

With PCLMULQDQ Intel is stretching the limits of reasonable acronyms. The bottom line here is that one can get significant crypto speedups using the new Broadwell cores.

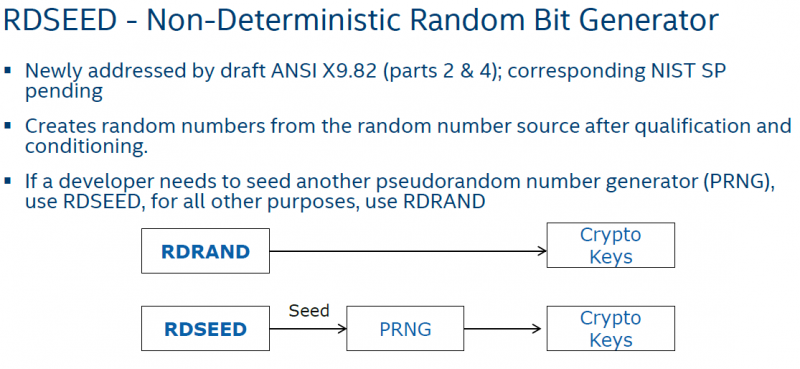

Random number generation is a big topic in cryptography. Broadwell supports RDSEED to help with random number generation.

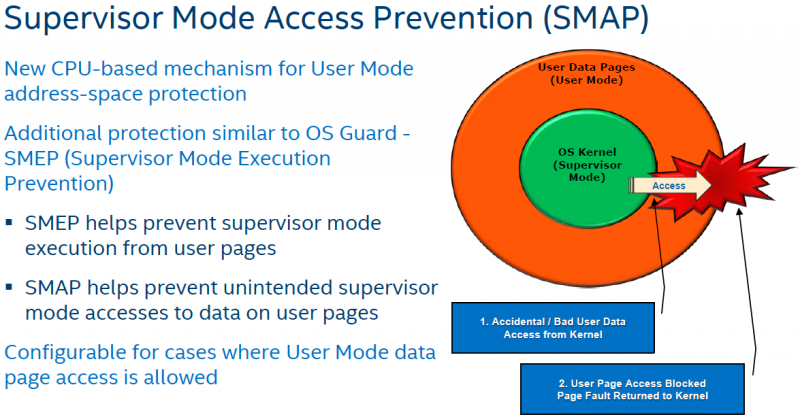

Since a huge portion of the E5-2600 processors will spend their days running multiple applications and with multiple users, the Supervisor Mode Access Prevention is a way to prevent unwanted security breaches.

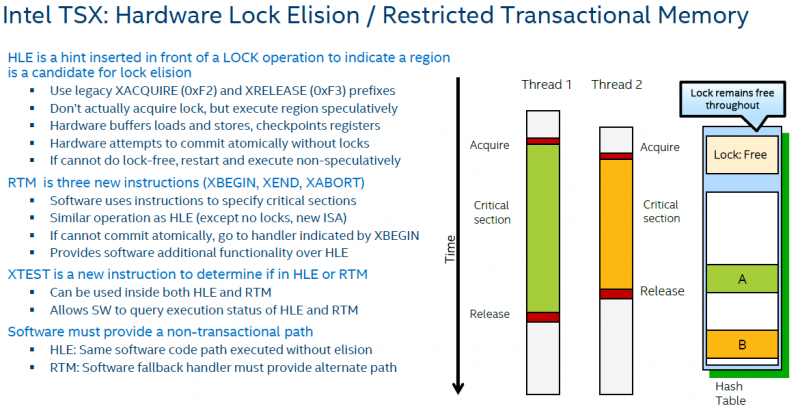

Intel TSX was supposed to be in Haswell-EP as a full-fledged feature. Instead it first appeared in the Xeon E7 and Xeon D lines due to a bug with Haswell. With Broadwell-EP, TSX, including Hardware Lock Elision, is finally a supported feature. The database crowd is very excited about this feature because it has the ability to manage locks much faster than previous methods on x86.
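If you want to confirm that a Linux system exposes the instructions covered in the last few slides, one option is to look at the `flags` line in `/proc/cpuinfo`. The Python sketch below checks for `pclmulqdq`, `rdseed`, `smap`, `hle` and `rtm` (the latter two being the TSX components). The function names are ours, and a missing flag can also mean the feature was disabled in firmware or by the kernel rather than absent from the silicon.

```python
from typing import Dict, Iterable, Set

# /proc/cpuinfo flag names for the features discussed above.
FEATURE_FLAGS = ("pclmulqdq", "rdseed", "smap", "hle", "rtm")


def parse_cpu_flags(cpuinfo_text: str) -> Set[str]:
    """Return the flag set from the first 'flags' line of cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            return set(value.split())
    return set()


def feature_report(cpuinfo_text: str, wanted: Iterable[str] = FEATURE_FLAGS) -> Dict[str, bool]:
    """Map each wanted flag name to whether the CPU reports it."""
    flags = parse_cpu_flags(cpuinfo_text)
    return {name: name in flags for name in wanted}


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as handle:
            text = handle.read()
    except OSError:
        text = ""  # Not Linux, or /proc is unavailable.
    for name, present in feature_report(text).items():
        print(f"{name:10s} {'yes' if present else 'no'}")
```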

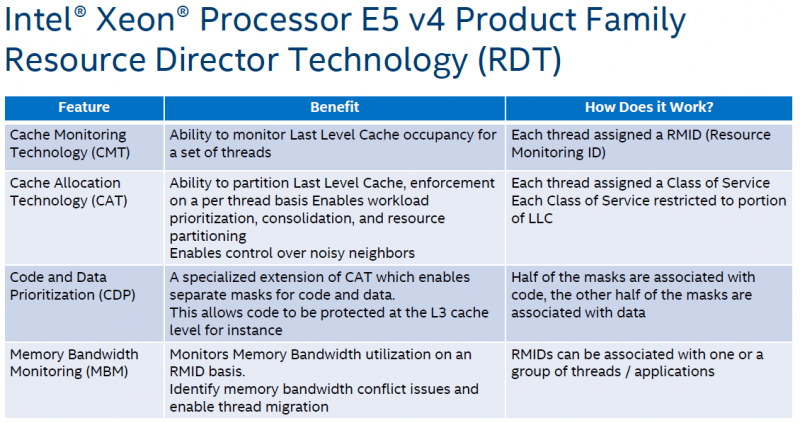

We are going to have another piece on Resource Director Technology. With public and private clouds, finding noisy neighbors has become a bigger concern, so Intel has been introducing new features with each generation dedicated to identifying and solving noisy neighbor problems.

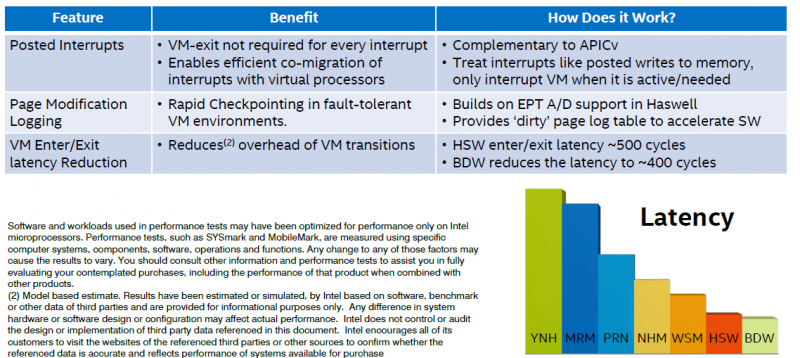

Virtualization is perhaps the most widely deployed application for a Xeon E5-2600 server. As a result, Intel does incrementally improve CPU features with each generation. The latency graph on this goes back to Yonah, a 10 year old architecture (2006). We also see Sandy Bridge and Ivy Bridge conspicuously absent from Intel’s latency graph. We did not spin our own since we were missing too much data.

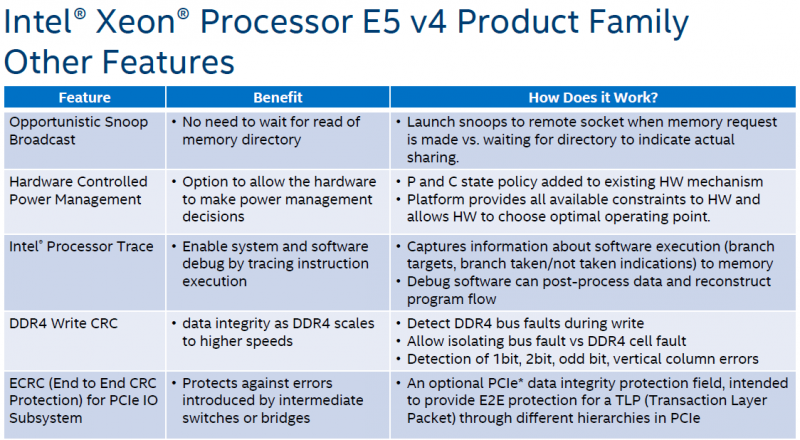

This is the part of the presentation where probably 10-20% of the technology press understands what is going on, so if you are getting lost, take a quick break. If you do not understand what is going on, congratulations, you probably do not need to know anything beyond “it is a generational incremental improvement.” Intel outlines a few new features with the E5 V4 family that we will take a look at briefly.

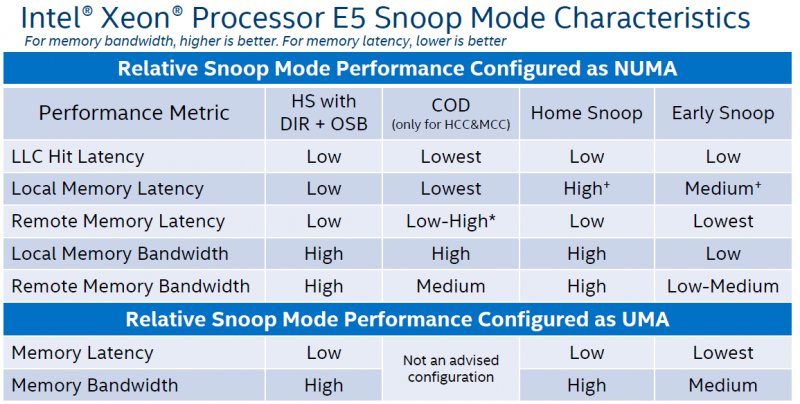

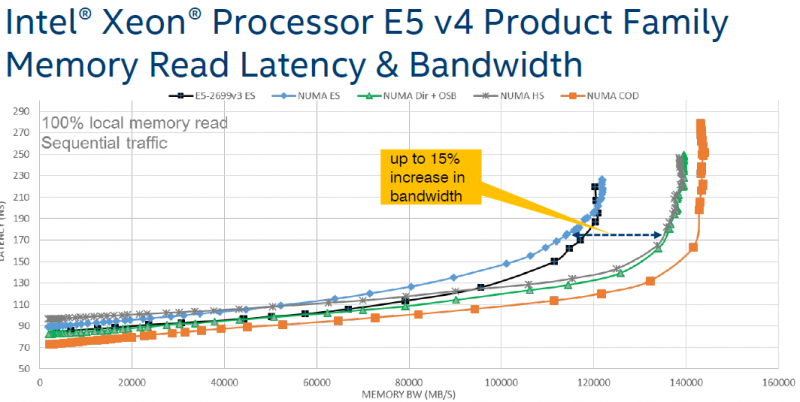

The Intel Xeon E5-2600 V4 has L1, L2 and L3 caches on die and main memory off package. That means finding data and getting it quickly to the part of the CPU that needs it can be challenging. Adding to these challenges, with two processors in a system a core on CPU1 may have to go over the QPI interface to CPU2 and look in the memory attached to CPU2. To most end users, the key takeaway is that Intel is innovating on how that process is done to optimize for latency and bandwidth.

Intel shows a significant improvement with the new hybrid snoop mode.

Intel is also continuing to innovate on its hardware controlled power management features. CPUs these days are able to monitor application performance and power consumption and intelligently throttle parts of the processor to change power characteristics. The advantage to doing this in hardware is that it can be done faster (in most cases) than in the OS and it also works across OSes.

Initial Benchmarks

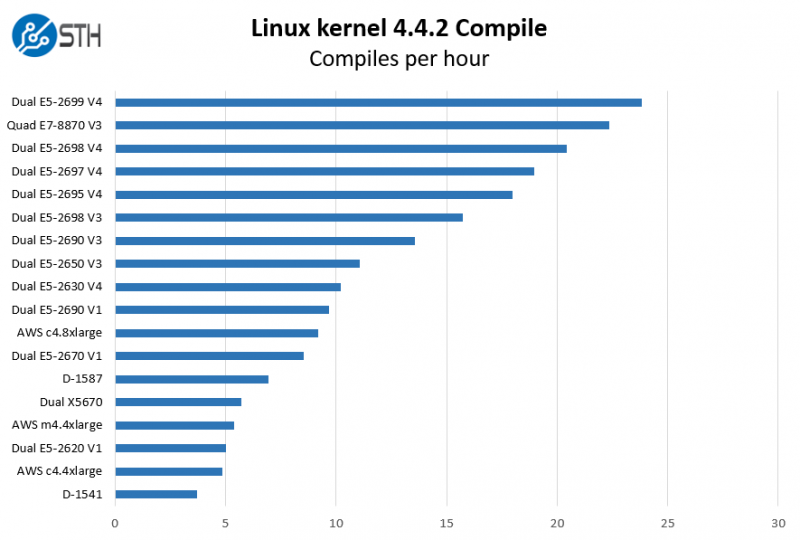

Alongside this article, we are publishing benchmark results for dual Intel Xeon E5-2699 V4 and E5-2698 V4 systems. Over the next few weeks, we will be publishing platform reviews and benchmarks of additional chips. We have had 6-10 test platforms running for weeks to get results for today. We will also use the Intel Xeon E5-2600 V4 launch to start using our new Python driven Linux Kernel compile benchmark. As a teaser of results here is the Linux kernel 4.4.2 compile speed on several of our comparison platforms, including the E5-2600 V4 parts. We are expressing results in terms of compiles per hour:
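For anyone timing their own kernel builds, converting a wall-clock time into compiles per hour is a one-liner. The Python sketch below shows only that conversion and assumes the metric is simply 3600 divided by the seconds for one compile. It is not our benchmark harness, and the 90 second input is a made-up example.

```python
def compiles_per_hour(seconds_per_compile: float) -> float:
    """Convert the wall-clock time of one compile into compiles per hour."""
    if seconds_per_compile <= 0:
        raise ValueError("seconds_per_compile must be positive")
    return 3600.0 / seconds_per_compile


# Hypothetical example: a build that takes 90 seconds of wall-clock time.
rate = compiles_per_hour(90.0)
print(f"{rate:.1f} compiles per hour")
```

Expressing results as a rate means higher is better, so the bars read the same way as most of our other benchmark charts.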

As you can see, the top end of the Intel Xeon E5-2600 V4 range in dual socket configuration is now competitive with quad CPU Intel Xeon E7-8870 V3 configurations. Of course the E7-8800 V3 series has plenty of other benefits. At the bottom end, the dual socket E5-2630 V4 configuration is a significant jump forward. We expect these to sell for around $600 per processor. For full benchmark results and discussion, see the Intel Xeon E5-2699 V4 and E5-2698 V4 benchmark pieces listed at the end of this article.

Subscribe to STH to get the latest benchmarks and platform reviews as they are published. We have a huge back log of content coming.

Final Words

Preparing for the Intel Xeon E5-2600 V4 launch has taken several days of in-person briefings and around 10,000 benchmark runs, with loads in our datacenter exceeding 3kW. Aside from new processors, we are also covering the four SSDs launched today: the Intel DC D3700 and D3600 dual port NVMe drives and the Intel DC P3520 and DC P3320 NVMe SSDs.

In the end, starting with the Intel Xeon E5-2699 V4 and Intel Xeon E5-2698 V4 benchmarks, you will see that the new platforms are indeed faster than the previous generation of chips. On the other hand, the Intel Broadwell-EP platform is very much an incremental upgrade, bringing more cores, more cache, slightly faster memory clock speeds and slightly higher IPC. While there are a few new compelling features (e.g. working TSX), at the end of the day the E5-2600 V4 will sell well simply because it will replace the V3 parts in the market over the coming weeks.

One other subtle impact is that the Intel Xeon E5-2630 V4 now provides a significant performance advantage over the Intel Xeon D-1587, and has significantly more expansion capabilities than the Xeon D platform all at a lower acquisition cost. When we broke the news of the Intel Xeon D-1587 we speculated that there was a reason Intel was holding back from publicity. Our hypothesis is that between mid-February and the end of March 2016 Intel did not want to have the Xeon D series benchmark faster than the Xeon E5 series, the company’s main server revenue stream.

You can find more of our launch day coverage here:

- Intel Xeon E5-2699 V4 Benchmarks

- Intel Xeon E5-2698 V4 Benchmarks

- Intel DC D3700 and D3600 dual port NVMe

- Intel DC P3520 and DC P3320 NVMe SSDs


I can’t remember the last time Intel introduced chips with this many security features. Makes me wonder if their 2007 partnership with Cavium to produce QuickAssist also resulted in headhunting.