Intel Xeon 6700P Performance

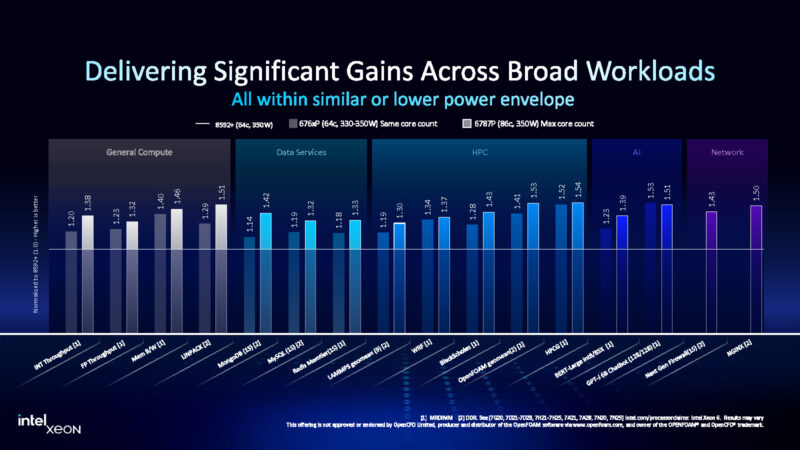

Intel showed two useful comparisons: a 64-core Xeon 6 versus a 5th Gen Xeon, and top core count versus top core count.

Unlike every server launch we have covered since Sandy Bridge (or Westmere?), we did not get a dual-socket CPU set from Intel to test. Instead, we got a last-minute single-socket R1S platform with an Intel Xeon 6781P.

We will add more results later in the day. This system had 1TB of memory installed, but spread across 16 DIMMs, which lowered the memory speed. As a result, we had to re-run our scripts starting Saturday morning before the launch.
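The memory speed drop comes down to how the DIMMs are spread across channels. Below is a minimal sketch of the population arithmetic. It assumes an eight-channel DDR5 socket and treats 1TB as 1024GB; both are our assumptions for illustration. With 16 DIMMs on eight channels you end up at two DIMMs per channel, which typically runs at a lower memory speed than one DIMM per channel.

```python
def dimm_population(total_gb: int, dimm_count: int, channels: int) -> tuple[int, int]:
    """Return (GB per DIMM, DIMMs per channel) for an evenly populated socket."""
    if dimm_count % channels:
        raise ValueError("DIMMs do not divide evenly across memory channels")
    if total_gb % dimm_count:
        raise ValueError("capacity does not divide evenly across DIMMs")
    return total_gb // dimm_count, dimm_count // channels


# Assumption: one socket with 8 memory channels, 1TB (1024GB) across 16 DIMMs.
gb_per_dimm, dimms_per_channel = dimm_population(1024, 16, 8)
print(f"{gb_per_dimm}GB per DIMM, {dimms_per_channel} DIMMs per channel")
# prints "64GB per DIMM, 2 DIMMs per channel"
```

Reaching the same 1TB with one DIMM per channel would take eight 128GB DIMMs instead.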

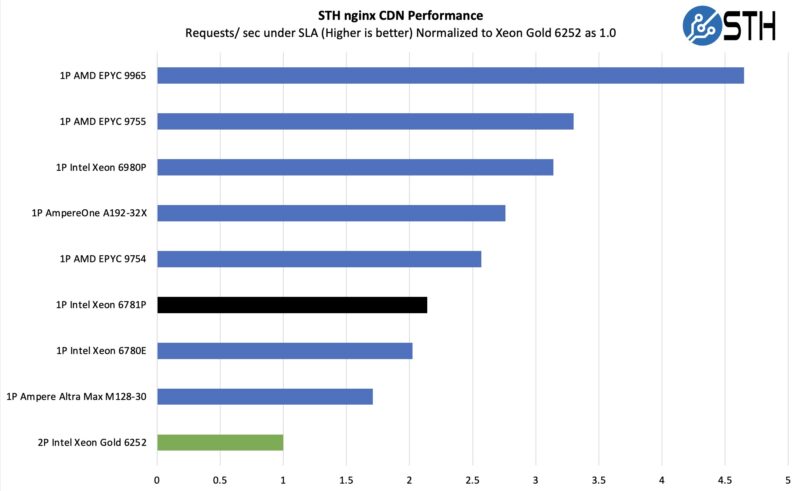

STH nginx CDN Performance

On the nginx CDN test, we use an old snapshot and access patterns from the STH website, with DRAM caching disabled, to show what performance looks like when fetching data from disks. This requires low-latency nginx operation plus an additional step of low-latency I/O access, which makes it interesting at a server level. Here is a quick look at the distribution:

Just as a quick note, the configuration we use is a snapshot of a live configuration. nginx is one of the workloads that is very well optimized for Arm and cloud-native processors. In other words, on modern CPUs, simply having more cores helps. Even so, we were a bit surprised to see this part perform slightly better than the Xeon 6780E, Intel’s cloud-native CPU for the same socket. Of course, the Xeon 6781P benefits from more threads and 20W more TDP headroom. We should also note that we are not using QAT offload here, which would be a significant boost for the Xeon 6 platforms since it can take away most of the SSL overhead.

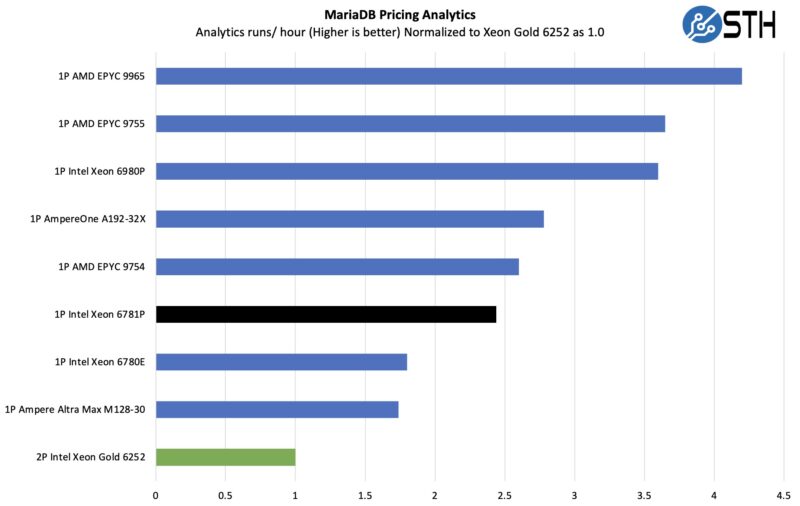

MariaDB Pricing Analytics

This is a very interesting one for me personally. The origin of this test is a workload that runs deal management pricing analytics on a data set anonymized from a major data center OEM. The application looks for pricing trends across product lines, regions, and channels to produce good-deal/bad-deal guidance based on market trends, which informs real-time BOM configurations. If this seems very specific, the big difference between this and what is deployed at a major vendor is the data we are using. This is the kind of application that has moved to AI inference methodologies, but it is a great real-world example of something a business may run in the cloud.
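To make the idea of trend-based deal guidance more concrete, here is a toy sketch. It is not the STH workload, which runs on MariaDB against a real data set. It groups historical deals by (product line, region, channel), takes the median unit price per group as the trend, and labels a new deal against that median within a tolerance band. The record layout, the median baseline, and the 5% tolerance are all hypothetical choices made for illustration.

```python
from collections import defaultdict
from statistics import median

# Hypothetical record layout: (product_line, region, channel, unit_price)
Key = tuple[str, str, str]
Deal = tuple[str, str, str, float]


def build_baselines(history: list[Deal]) -> dict[Key, float]:
    """Median historical unit price for each (product line, region, channel) group."""
    groups: dict[Key, list[float]] = defaultdict(list)
    for line, region, channel, price in history:
        groups[(line, region, channel)].append(price)
    return {key: median(prices) for key, prices in groups.items()}


def classify(deal: Deal, baselines: dict[Key, float], tolerance: float = 0.05) -> str:
    """Label a deal relative to its group's median price (hypothetical labels)."""
    line, region, channel, price = deal
    reference = baselines.get((line, region, channel))
    if reference is None:
        return "no history"
    if price < reference * (1 - tolerance):
        return "below trend"
    if price > reference * (1 + tolerance):
        return "above trend"
    return "in line"


history = [
    ("server", "NA", "direct", 100.0),
    ("server", "NA", "direct", 110.0),
    ("server", "NA", "direct", 90.0),
]
baselines = build_baselines(history)
print(classify(("server", "NA", "direct", 90.0), baselines))  # below trend
```

A production version would push the grouping into SQL and weight recent deals more heavily, which is part of why this kind of workload stresses the database server.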

Here we can see a case where the P-cores really power ahead of the E-cores. Something else worth noting: if you want to estimate the performance of the Xeon 6700P and have results for the Xeon 6980P, scaling by the core count difference is not too far off in many of these workloads, assuming you are not memory bandwidth bound. The 6781P gives us a little more performance per core, which is common on lower core count parts.
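The rule of thumb above amounts to a linear core count scaling, optionally with a small per-core bonus for the lower core count part. Here is a minimal sketch. The 128-core figure for the Xeon 6980P and the 80 cores of the Xeon 6781P are the only real inputs. The reference score and any uplift factor are hypothetical, and the estimate does not apply to memory bandwidth bound workloads.

```python
def estimate_from_core_ratio(
    reference_score: float,
    reference_cores: int,
    target_cores: int,
    per_core_uplift: float = 1.0,
) -> float:
    """Linearly scale a throughput score from one core count to another.

    per_core_uplift > 1.0 models the slightly higher per-core performance
    that lower core count parts often show. This is a naive estimate and
    ignores memory bandwidth limits.
    """
    if reference_cores <= 0 or target_cores <= 0:
        raise ValueError("core counts must be positive")
    return reference_score * (target_cores / reference_cores) * per_core_uplift


# Hypothetical normalized score of 100 for the 128-core Xeon 6980P,
# scaled to the 80-core Xeon 6781P.
print(estimate_from_core_ratio(100.0, 128, 80))  # 62.5
```

In practice, the measured 6781P result landing a bit above this estimate would be consistent with the higher per-core performance noted above.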

Our baseline here is a dual-socket Intel Xeon Gold 6252 system. We selected it for our cloud-native workload series because a major OEM told us it was the part they sold the most of in the 2nd Generation Xeon Scalable line. A 24-core part is not top bin, but it sat fairly high in that lineup since the 28-core parts were uncommon. That still points to around a 5:1 consolidation ratio, which is great.
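A consolidation ratio like this is simply the new server's throughput divided by the baseline server's throughput, and that figure then tells you how many new boxes a legacy fleet collapses into. Here is a minimal sketch, assuming throughput is the only constraint. The scores and the 20-server fleet are hypothetical numbers, not our results.

```python
import math


def consolidation_ratio(new_server_score: float, legacy_server_score: float) -> float:
    """How many legacy servers one new server replaces, by throughput alone."""
    if legacy_server_score <= 0:
        raise ValueError("legacy score must be positive")
    return new_server_score / legacy_server_score


def servers_needed(legacy_servers: int, ratio: float) -> int:
    """New servers required to absorb a legacy fleet, rounded up."""
    return math.ceil(legacy_servers / ratio)


# Hypothetical: dual Xeon Gold 6252 baseline normalized to 1.0,
# new single-socket server scoring 5.0 on the same workload.
ratio = consolidation_ratio(5.0, 1.0)
print(ratio, servers_needed(20, ratio))  # 5.0 4
```

The result is rounded up because a partial server still has to be bought as a whole one.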

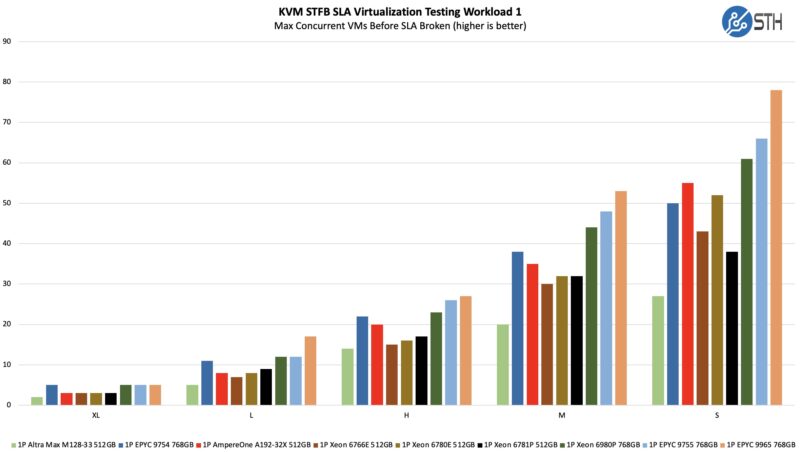

STH STFB KVM Virtualization Testing

One of the other workloads we wanted to share is from one of our DemoEval customers. We have permission to publish the results, but the application being tested is closed source. This is a KVM virtualization-based workload where our client tests how many VMs it can keep online at a given time while completing work under the target SLA. Each VM is a self-contained worker. This is very akin to VMware VMmark in terms of what it is doing, just using KVM to be more general.
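Finding the highest VM count that still meets the SLA is a search problem. If adding VMs never makes the SLA easier to hit, a binary search over the VM count will find it. The sketch below assumes exactly that kind of monotonic behavior. The `meets_sla` callback is hypothetical: in a real harness it would bring N VMs online, run the work, and check completion against the SLA. The customer's actual closed-source method may differ.

```python
from typing import Callable


def max_vms_under_sla(meets_sla: Callable[[int], bool], upper_bound: int) -> int:
    """Largest VM count in [1, upper_bound] for which meets_sla() is True.

    Returns 0 if no positive count passes. Assumes monotonicity: if N VMs
    miss the SLA, N + 1 VMs miss it too.
    """
    lo, hi = 0, upper_bound
    while lo < hi:
        mid = (lo + hi + 1) // 2  # round up so the loop always makes progress
        if meets_sla(mid):
            lo = mid  # mid VMs still meet the SLA, search higher
        else:
            hi = mid - 1  # mid VMs miss the SLA, search lower
    return lo


# Toy stand-in for a real trial run: pretend the SLA holds up to 37 VMs.
print(max_vms_under_sla(lambda n: n <= 37, 200))  # 37
```

Each probe is a full trial run, so a binary search matters here: it needs about log2(upper_bound) trials instead of one per VM count.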

Interestingly enough, the E-core Xeon does well with smaller VM sizes, or many smaller VMs. As the VM size increases, the P-core Xeon seems to make up for having only 80 cores instead of 144. We have the top-bin Ampere and AMD numbers on here, as well as the Intel Xeon 6980P. We need to reiterate that we are not using a top-bin Xeon 6700P SKU. This part has 80 cores, not 86, and it is the single-socket optimized part. Still, it is doing fairly well.

More Coming

The scripts are still running and will not finish by launch time, for a somewhat funny reason. We usually test dual-socket, not single-socket, systems, so this is the lowest core count mainstream server launch piece we have done in years, with “only” 80 cores available. Since some workloads measure the time to complete a fixed amount of work, and we have far fewer cores, they are taking longer. Give it a few hours and check back.
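Why fewer cores mean longer runs on fixed-work tests can be illustrated with Amdahl's law. We are using that model here for illustration; it is not how our scripts measure anything. Runtime is the serial part of the work plus the parallel part divided by the core count. The 1000-second single-core runtime, the 99% parallel fraction, and the 160-core comparison point are all hypothetical.

```python
def amdahl_runtime(serial_runtime: float, parallel_fraction: float, cores: int) -> float:
    """Runtime of a fixed amount of work on `cores` cores under Amdahl's law."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_part = 1.0 - parallel_fraction
    return serial_runtime * (serial_part + parallel_fraction / cores)


# Hypothetical: 1000 s of single-core work that is 99% parallel,
# comparing 80 cores against a 160-core dual-socket configuration.
for cores in (80, 160):
    print(cores, round(amdahl_runtime(1000.0, 0.99, cores), 2))
```

With a highly parallel workload, halving the core count comes close to doubling the wall-clock time, which is why these runs stretch past launch.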

In the meantime, let us get to power consumption.

Another great analysis

These’ll outsell the 6900 series many times over. They’re not sexy, but they’re enough for most people.

I still believe the issue is the price of the newer chips. We used to be able to buy mid-range servers for under $5k. Now you’re talking $15k-20k. It’s great if they’re offering consolidation, but nobody wants to open their wallet for legacy CPU compute if they’re paying as much as they did for the older servers that are all running fine.

You’ve colored me intrigued with this R1S business

Just want to point out: 8 sockets of 86-core Xeon 6700, with 4TB each.

That’s 688 cores and 32TB in a single x86 server, pulling 2800W for the CPUs alone.

Someday I’d like to see that system, not that my budgets ever include that kind of thing. What’s the use case? Some accounting program, or a day-trading bot that wants a thread per trade and somehow is coded only for x86? VDI for 600 users on a single server? I mean, you could run 1000 web servers, but why on this instead of a bunch of 1U-2U servers? Same with container apps at AWS. What’s the use case?

Supermicro did this on 4th Gen Xeon: 6U, four modular mainboard trays, vertical PCIe in the back of the thing.

Honestly single socket systems are my favorite thing.

And a single socket system with enough PCIe bandwidth to have nearly zero restrictions on throughput between storage and networking is fantastic.

MDF, it’s mostly apps like SAP HANA, where you need a huge amount of memory in a single node. That’s why the cloud companies all have 4P and 8P instances. It’s a low-volume business, but high in the almighty dollar.

@Jed J

I don’t know about that. The four customers that buy more than 50% of all servers worldwide primarily ship the highest-density processors they can get their hands on. The Xeon 6900 parts are bought mostly by hyperscalers. These small baby Xeons are designed for the rest of the market, which is becoming so small it is practically a footnote in server design. That’s why these launched so much later than the higher-volume 6900 parts did.

The P-core parts with AMX are great for AI prototyping. GPUs with large VRAM are not very accessible under $10k.

I’d like to see how fast non-distilled AI inference runs on these SKUs.

The full models have 600 billion or more parameters, require 1TB of RAM, and could demonstrate the built-in matrix hardware. A comparison with large Power10 systems, which also have built-in matrix units, and with recent EPYC processors would be interesting as well.

Xeon® 6787P: High Priority Cores 36C, Low Priority Cores 50C. P/E cores???

Eric – good idea.

argus – I have an e-mail out to Intel on that. I think it is part of the power saving mode that can be selected in the BIOS, but will confirm. If you look at the Xeon 6781P, for which we have the topology map, those are all P-cores even though it has high- and low-priority cores as well.

Regarding the high/low priority cores, this is part of Speed Select Technology. By default, all cores are set to the same priority, with the same base frequency and turbo frequency behavior. In some scenarios, the user may bind their application processes to a few cores and want those cores running at a higher base or turbo frequency. They can set these cores to high priority (HP). Basically, it is about allocating the power budget. When some cores run at a higher frequency, other cores must give up some of their power budget and run at a lower frequency. https://www.kernel.org/doc/Documentation/admin-guide/pm/intel-speed-select.rst