Intel Xeon 6700P and Xeon 6500P Series Positioning

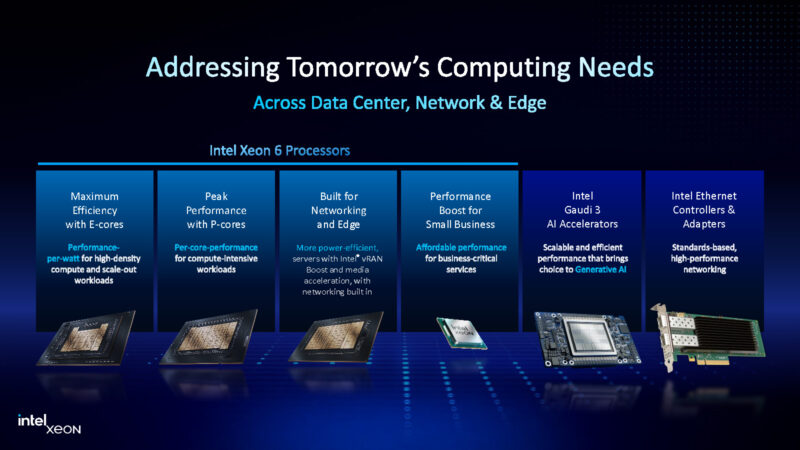

Today’s launch is part of Intel’s overall portfolio. There are, however, some holes. For example, on the AI side, Intel is not expecting Gaudi 3 to make significant market inroads, and it also said Falcon Shores was not going to be a volume part. The new Intel E830 (200GbE) and E610 (10GbE) are not exactly class-defining, as we have tested many systems with 400Gbps NICs for quite some time. Launching 10GbE and 200GbE NICs in 2025 feels strange. As a point of reference, Mellanox (now NVIDIA) launched the ConnectX-6 Dx with a single 200Gbps port in 2019.

The Intel Xeon 6900E series transitioned to a “call us” part rather than a mainstream part, as we covered a few weeks ago in Intel’s New High-Core Count CPU is Making a Major Change. We will not see the Xeon 6900E 288 core parts in OEM servers. Instead, Intel is focusing on one major hyper-scale client and perhaps a few others with Sierra Forest-AP. The Xeon 6300 series is competitively weak as well. Still, there are some highlights in the Xeon 6700E (up to 144x E-cores), Xeon 6700P, Xeon 6900P, and Xeon 6 SoC that are all quite good.

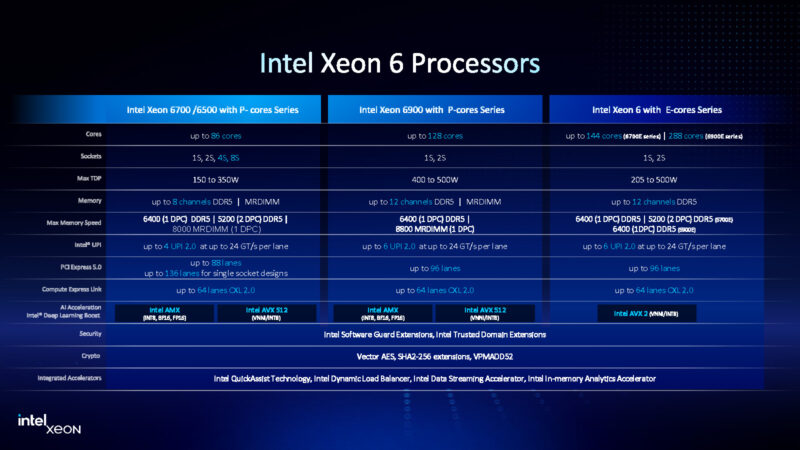

What is clear: If you are shopping for Intel Xeon, you are a long way from the days of the Xeon E5 V1/V2, when it was mostly a question of core counts and clock speeds. There are six Xeon lines with three sockets and one embedded footprint. The shared socket is between the Xeon 6700E and the Xeon 6700P/6500P, and we will focus on the 6700P/6500P today.

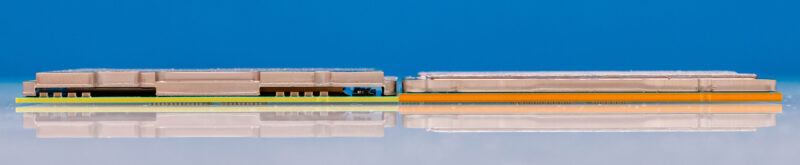

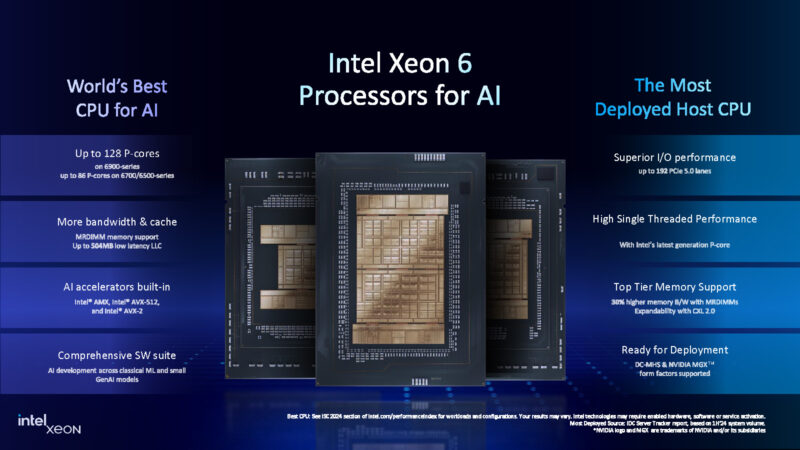

Intel is constructing the Xeon 6700P and 6500P series from two I/O tiles plus one or two compute tiles. XCC goes up to 86 P-cores with up to two compute tiles, HCC is up to 48 P-cores with a single compute tile, and LCC is up to 16 P-cores with a smaller compute tile.

Then, things get confusing when Intel tries to summarize the new series. Still, the new Xeon 6700P and Xeon 6500P series can have up to 88 PCIe lanes in multi-socket configurations, built-in accelerators, and more. Intel just has too many lines and way too much micro-segmentation to make a single slide that would make a customer immediately get it.

Like the Xeon 6700E series that shares the platform, the new parts are 8-channel DDR5 and support CXL 2.0. We wish Intel had enabled MCRDIMM/MRDIMM support at 8800MT/s on all of the P-core parts. If someone wants to pay the price premium for expensive DIMMs on a $2000 lower-core count part, why not just let them?

That brings up a really neat question. How do you even classify the Xeon 6700P? It is undoubtedly lower-end than the Xeon 6900P, which can have almost 50% more cores and 50% more memory channels. Yet the Xeon 6700P can scale to 4-socket or 8-socket configurations, arguably putting it ahead of the Xeon 6900P on scalability, since those are higher-end systems. In terms of core counts, 86 cores is more than twice what the 3rd Gen Xeon Scalable offered, but it is the third or fourth lowest core count SKU family in Intel’s portfolio today, failing to eclipse Intel’s 2024 Xeon 6 launch families.
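As a quick sanity check on those ratios, here is a minimal sketch. Only the 86-core figure and the 8-channel memory design come from this article; the 128-core top-end Xeon 6900P, its 12 memory channels, and the 40-core top-end 3rd Gen Xeon Scalable (Ice Lake) are figures we are assuming for the comparison.

```python
# Rough positioning math for the Xeon 6700P.
# Only XEON_6700P_MAX_CORES and XEON_6700P_MEM_CHANNELS come from the article;
# the other constants are assumptions used for illustration.
XEON_6700P_MAX_CORES = 86
XEON_6700P_MEM_CHANNELS = 8
XEON_6900P_MAX_CORES = 128      # assumption: top-bin Xeon 6900P
XEON_6900P_MEM_CHANNELS = 12    # assumption: 12-channel platform
XEON_3RD_GEN_MAX_CORES = 40     # assumption: top-bin 3rd Gen Xeon Scalable


def percent_more(bigger: float, smaller: float) -> float:
    """Return how much larger `bigger` is than `smaller`, in percent."""
    return (bigger / smaller - 1) * 100


core_uplift = percent_more(XEON_6900P_MAX_CORES, XEON_6700P_MAX_CORES)
channel_uplift = percent_more(XEON_6900P_MEM_CHANNELS, XEON_6700P_MEM_CHANNELS)
generational_ratio = XEON_6700P_MAX_CORES / XEON_3RD_GEN_MAX_CORES

print(f"6900P cores vs 6700P: +{core_uplift:.1f}%")
print(f"6900P memory channels vs 6700P: +{channel_uplift:.1f}%")
print(f"6700P cores vs 3rd Gen Xeon Scalable: {generational_ratio:.2f}x")
```

Under those assumptions, the core count gap lands just under 50% while the memory channel gap is exactly 50%.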

This is the other challenge with Intel’s messaging. In touting Intel AMX, which is legitimately a good feature for AI, Intel goes back to Xeon 6, but to the Xeon 6900P series, which is not socket compatible with the Xeon 6700P series launching today.

Intel now has so many SKU families, and decided to invent more with Granite Rapids-SP, that it is probably worth starting from a clean slate and stating who these are for. The fix is simple but would be a departure for Intel. Intel needs to move to a simplified stack and not artificially hold back silicon in key areas, much like it has started to do with the onboard accelerators. If it is already in the silicon, enable it and let customers make the most of it. That simple change would help present Xeon 6 in a straightforward way.

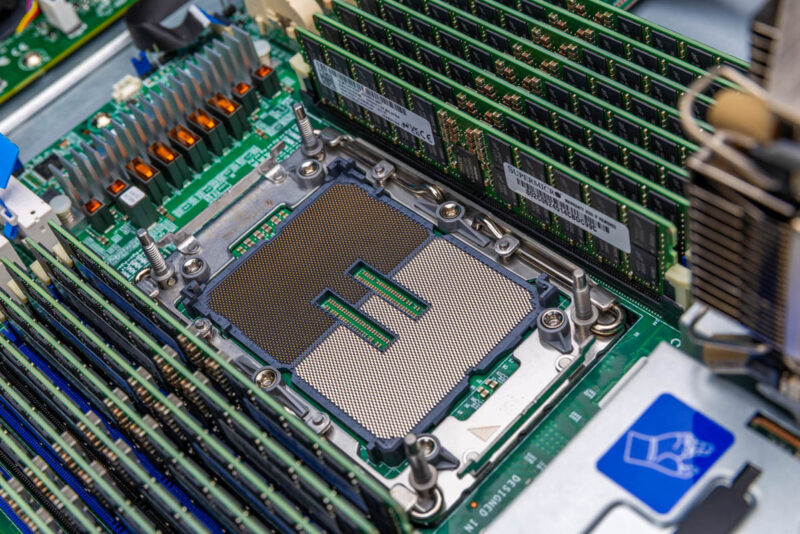

Some applications and hyper-scalers simply want the biggest CPUs at 400W-500W each. Over the next few generations, we expect CPUs to top off at 500-600W while power is allocated to GPUs and AI accelerators. Still, not every data center can handle two 500W TDP CPUs per node. Granite Rapids-SP scales from 150W to 350W each. That fits into many power budgets out there already. Further, while the top-end core counts have grown significantly, the enterprise market has grown core counts at a much more modest pace. Per-core licensing is a suppressing force. Not everyone needs a 16-core CPU with 12-channel memory as an example, so having 8-channel memory makes sense. Also, and quite importantly, the eight-channel two DIMM per channel (2DPC) design with the smaller socket fits up to 32 DIMMs and two CPUs in the width of a server. The Intel Xeon 6900P series and the AMD EPYC 9000 series platforms see two CPUs and 24 DIMMs (12 per CPU) side-by-side in a system just due to the 19″ rack width. While you can install more memory in 12-channel platforms, there is not enough room to do it side-by-side in a standard chassis.
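The DIMM slot math above can be sketched as follows. The socket, channel, and DIMM-per-channel counts come from the paragraph; the `dimm_slots` helper is just an illustrative name, not anything from a vendor tool.

```python
def dimm_slots(sockets: int, channels_per_socket: int, dimms_per_channel: int) -> int:
    """Total DIMM slots in a node for a given memory topology."""
    return sockets * channels_per_socket * dimms_per_channel


# Dual-socket Xeon 6700P/6500P: 8 channels per CPU at 2DPC.
xeon_6700p_2dpc = dimm_slots(sockets=2, channels_per_socket=8, dimms_per_channel=2)

# Dual-socket 12-channel platform with CPUs side-by-side: 1DPC.
twelve_channel_1dpc = dimm_slots(sockets=2, channels_per_socket=12, dimms_per_channel=1)

# The same 12-channel platform at 2DPC needs more slots than fit
# side-by-side across a standard 19-inch chassis.
twelve_channel_2dpc = dimm_slots(sockets=2, channels_per_socket=12, dimms_per_channel=2)

print(xeon_6700p_2dpc, twelve_channel_1dpc, twelve_channel_2dpc)
```

The 8-channel 2DPC layout ends up with more slots in a side-by-side dual-socket chassis than a 12-channel 1DPC layout, which is the capacity argument for the smaller socket.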

Intel fills that gap. Perhaps a better way to say it is that the Intel Xeon 6700P and Xeon 6500P series are for those who do not want to buy the SKUs with the highest core counts per socket.

Speaking of SKUs, let us get to that next.

Another great analysis

These’ll outsell the 6900 series by many times. They’re not sexy but they’re enough for most people.

I’m still believing that the issue is the price of the newer chips. We used to be able to buy mid-ranged servers for under $5k. Now you’re talking $15k-20k. It’s great if they’re offering consolidation, but nobody wants to open their wallet on legacy CPU compute if they’re paying as much as they did for the older servers that are all running fine

You’ve colored me intrigued with this R1S business

Just want to say 8-socket 6700/86core, 4TB each.

It’s 688 cores and 32TB in a single x86 server pulling 2800W for CPU alone.

Some day I’d like to see that system, not that my budgets ever include that kind of thing, what use case? Some accounting program, or day-trading bot that wants a thread per trade which somehow is coded only in x86. VDI for 600 users on a single server? I mean you could run 1000 web servers, but why on this instead of a bunch of 1-2U servers. Same with container apps at AWS. what’s the use case?

Supermicro did this on Xeon 4th gen – 6U, 4x mainboard modular trays, vertical pcie in the back of the thing.

Honestly single socket systems are my favorite thing.

And a single socket system with enough PCIe bandwidth to have nearly zero restrictions on throughput between storage and networking is fantastic.

MDF it’s mostly apps like SAP HANA where you need so much memory in a single node. That’s why the cloud co’s all have instances with 4P and 8P. It’s a low volume business but high in the almighty dollar

@Jed J

I don’t know about that. The four customers that buy more than 50% of all servers worldwide primarily ship the highest density processors they can get their hands on. The Xeon 6900 parts are bought mostly by hyperscalers, these small baby xeons are designed for the rest of the market which is becoming so small they’re practically a footnote in server design. That’s why these launched so much later than the higher volume 6900 parts did.

The P-core parts with AMX are great for AI prototyping. GPUs with large GRAM are not very accessible under 10k.

I’d like to see how fast non-distilled AI inference runs on these SKUs.

The full models have 600 billion or more parameters, require 1TB RAM and could demonstrate the matrix hardware built-in. A comparison to the performance of large Power10 systems that also have built-in matrix units would be interesting as well as recent EPYC processors.

Xeon® 6787P: High Priority Cores 36C, Low Priority Cores 50C. P/E core?

Eric – good idea.

argus – I have an e-mail out to Intel on that. I think it is part of the power saving mode which one can select in BIOS, but will confirm. If you look at the Xeon 6781P we have the topology map on, those are all P-cores even though that has high and low priority as well.

Regarding the high/low priority cores, this is part of Speed Select Technology. By default, all cores are set to the same priority with the same base frequency and turbo frequency behavior. In some scenarios, the user may bind their application process to a few cores and run them at a higher base or turbo frequency. They can set these cores to high priority (HP). Basically, it is about allocation of power budget. When some cores are running at a higher frequency, other cores must give up some power budget and run at a lower frequency. https://www.kernel.org/doc/Documentation/admin-guide/pm/intel-speed-select.rst