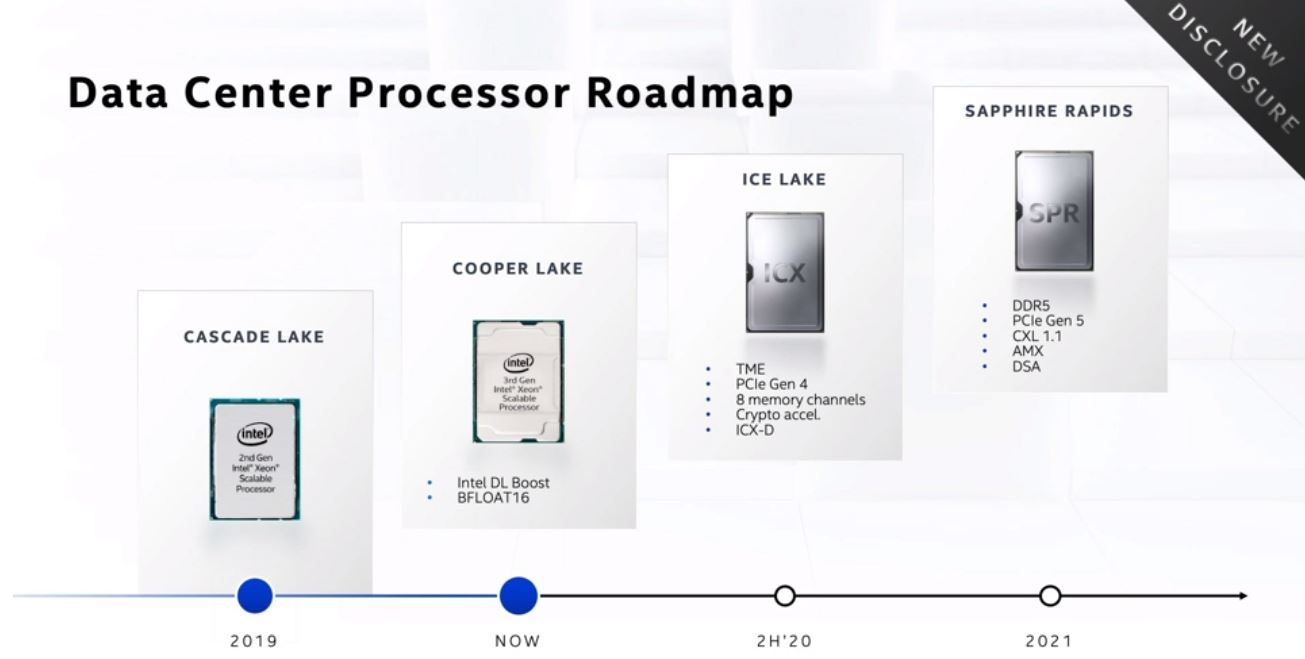

As part of Intel Architecture Day 2020, the company went into detail on its data center portfolio. Since I know many of our readers want to learn more about Ice Lake Xeons and Sapphire Rapids after reading The 2021 Intel Ice Pickle, we are going to cover those here. We will also cover the Intel Xe SG1, the company’s first data center GPU, due in 2020, along with a few of the other big disclosures from Architecture Day.

Intel Shows Ice Lake Xeon and Talks Sapphire Rapids

We are going to learn more about the Intel Ice Lake-SP Xeon generation soon. However, if you looked closely at the cover image for this story, you may have noticed something important. That is where we will start with Ice Lake Xeons.

Intel Shows Ice Lake Xeon and Whitley

That photo shows what appears to be a GPU development vehicle attached to an Intel Whitley platform.

The picture is a bit grainy, but one can see a total of 16 DIMMs per CPU (four black and four blue on each side). The socket also appears to be a Whitley socket. This is one of the first images of the Whitley platform that Intel has released, and it aligns with the roadmap Intel showed.

These are new disclosures on both the Ice Lake and Sapphire Rapids Xeon families. We have known for some time that Ice Lake Xeons would support features such as PCIe Gen4 (64 lanes) and 8x DDR4-3200 memory channels. Intel confirmed that it will offer TME, or “Total Memory Encryption,” on its next-gen Xeons. This is a big deal since AMD and Arm vendors are offering memory encryption as well. A good example is the service we covered in Google Cloud Confidential Computing Enabled by AMD EPYC SEV. Along those lines, we expect Intel to release more details about its confidential computing platform for the Ice Lake Xeon generation over the coming months. The company has been promoting SGX for secure enclaves for some time, and we expect a greatly enhanced SGX solution on Ice Lake Xeons to underpin Intel’s confidential computing platform. This goes hand in hand with the new crypto acceleration features that are part of Ice Lake’s new security feature set.
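To put the 8x DDR4-3200 figure in context, here is a quick back-of-envelope peak bandwidth calculation. It assumes standard 64-bit (8-byte) DDR4 channels, ignores ECC bits, and ignores real-world efficiency, so treat the result as a theoretical ceiling per socket rather than a measured number.

```python
# Peak theoretical DRAM bandwidth, assuming 64-bit (8-byte) DDR4 channels.
# ECC bits and real-world efficiency losses are deliberately ignored.

def peak_dram_bandwidth_gbs(channels: int, mega_transfers_per_s: int, bus_bytes: int = 8) -> float:
    """Return peak bandwidth in GB/s (10^9 bytes per second)."""
    bytes_per_s = channels * mega_transfers_per_s * 1_000_000 * bus_bytes
    return bytes_per_s / 1e9

per_channel = peak_dram_bandwidth_gbs(1, 3200)  # one DDR4-3200 channel
per_socket = peak_dram_bandwidth_gbs(8, 3200)   # eight channels, as listed for Ice Lake Xeon
print(f"per channel: {per_channel:.1f} GB/s, per socket: {per_socket:.1f} GB/s")
```

That works out to 25.6 GB/s per channel and 204.8 GB/s per socket before any overheads.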

Ice Lake Xeons with PCIe Gen4 are extremely important to the company. Intel has been pushing its portfolio strategy, and with 10nm slow to arrive, its other products such as NAND SSDs, Optane PMem 200, Columbiaville NICs, FPGAs, Habana Labs AI training accelerators, and forthcoming GPUs are all waiting on the cornerstone of that platform: a PCIe Gen4 Xeon. Interestingly, Tiger Lake client PCs will get PCIe Gen4 first.

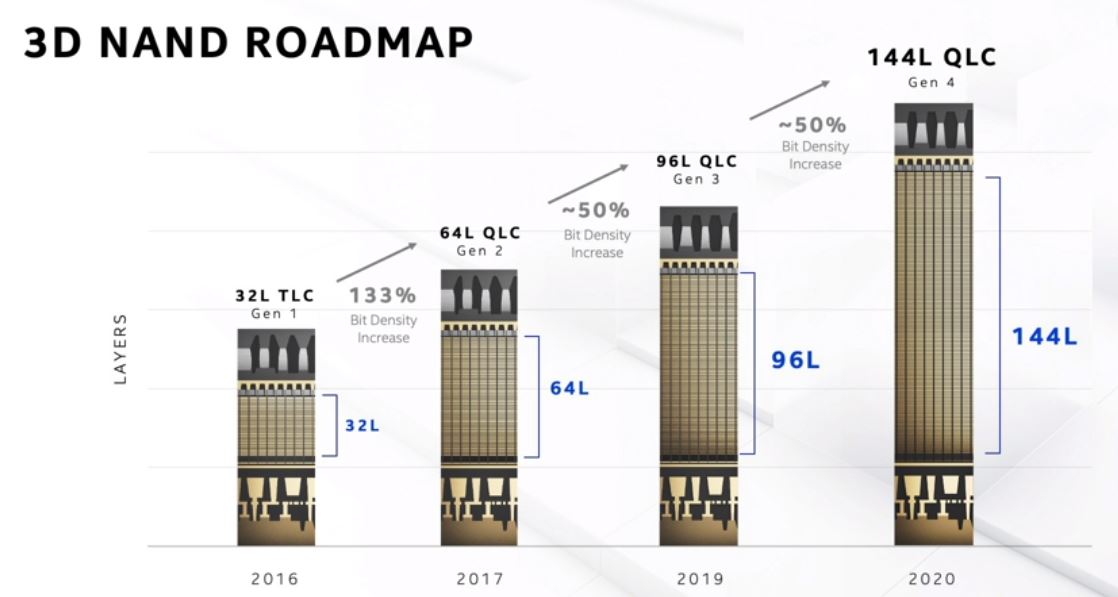

We discussed Intel’s storage strategy in our piece Next-generation Intel QLC NAND Increases Capacity and Performance from Seoul.

Next-Gen Ice Lake Xeon D

Another small notation that is easy to miss here is “ICX-D,” the codename for the next-gen Ice Lake Xeon D. Intel is confirming that this part is coming, and since it sits in the 2H’20 swimlane, we expect an announcement within the next 4.5 months.

Intel Sapphire Rapids Xeon

We have discussed this before, but the Ice Lake Xeon generation is a 10nm part designed to compete with the 2019 AMD EPYC Rome platform. As we covered in our Kioxia CM6 PCIe Gen4 SSD review, because Ice Lake Xeons were pushed out, PCIe Gen4 will be relatively short-lived. In 2021, the Sapphire Rapids Xeon generation will bring PCIe Gen5, DDR5, new instructions, and new security features, as shown in the roadmap. Perhaps the most important architectural change will be CXL 1.1.
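Since the short life of PCIe Gen4 comes down to the generational jump, a rough per-direction bandwidth comparison helps. This sketch assumes the standard signaling rates of 16 GT/s for Gen4 and 32 GT/s for Gen5 with 128b/130b encoding, and it ignores packet and protocol overhead, so real-world throughput will be somewhat lower. The 64-lane case uses the Ice Lake Xeon lane count mentioned earlier.

```python
# Raw PCIe signaling rates in GT/s; Gen4 and Gen5 both use 128b/130b encoding.
PCIE_GT_PER_S = {4: 16.0, 5: 32.0}

def pcie_gbs(gen: int, lanes: int) -> float:
    """Approximate one-direction bandwidth in GB/s after line encoding,
    before packet/protocol overhead."""
    gt = PCIE_GT_PER_S[gen]
    return gt * (128 / 130) / 8 * lanes  # 8 bits per byte

for gen in (4, 5):
    print(f"Gen{gen} x16: {pcie_gbs(gen, 16):.1f} GB/s per direction")
print(f"Gen4 x64: {pcie_gbs(4, 64):.1f} GB/s per direction")
```

This gives roughly 31.5 GB/s for a Gen4 x16 link, 63.0 GB/s for Gen5 x16, and about 126 GB/s across 64 Gen4 lanes.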

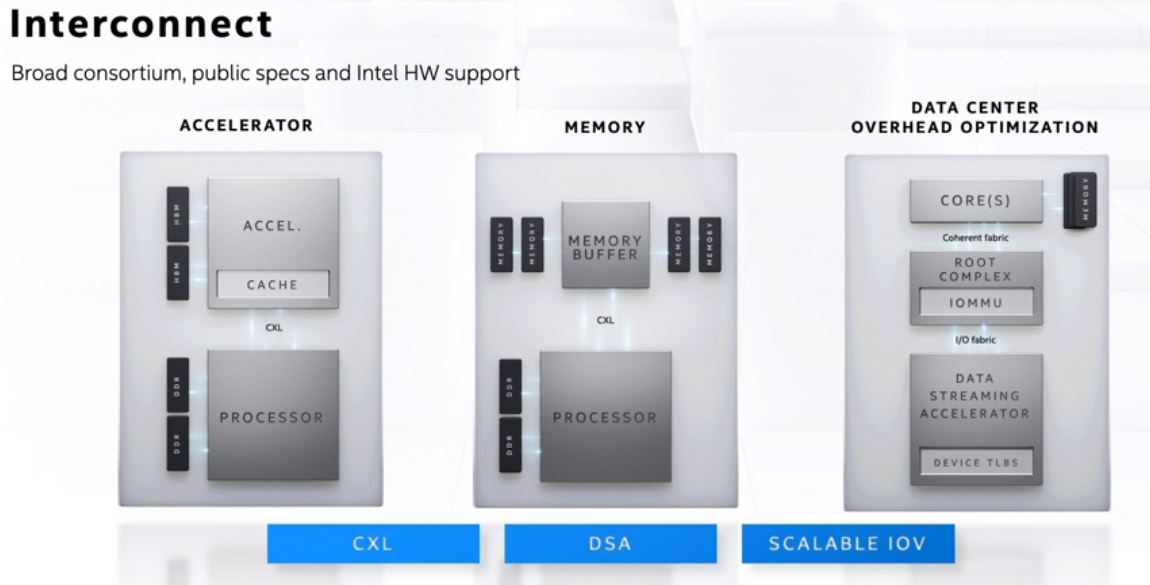

CXL runs over PCIe Gen5 physical lanes and is important because, among other things, it allows devices in a system to perform remote load/store operations. This will start to change computer system design: it has the potential to use DRAM caches more efficiently, and it is the point where we can start to see major changes in how systems and software are architected.
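The load/store capability comes from CXL’s sub-protocols. The lookup table below follows the three protocols (CXL.io, CXL.cache, CXL.mem) and three device types described in the CXL 1.1 specification. It is a simplified illustration rather than any real API, and the example device categories in the comments are typical uses, not Intel product commitments.

```python
from enum import Enum, auto

class Protocol(Enum):
    IO = auto()     # CXL.io: PCIe-based discovery, configuration, interrupts, DMA
    CACHE = auto()  # CXL.cache: device coherently caches host memory
    MEM = auto()    # CXL.mem: host issues load/store to device-attached memory

# Device types and the protocols each uses, per the CXL 1.1 specification.
DEVICE_TYPES = {
    1: frozenset({Protocol.IO, Protocol.CACHE}),               # e.g. accelerator/NIC without host-visible memory
    2: frozenset({Protocol.IO, Protocol.CACHE, Protocol.MEM}), # e.g. accelerator with its own memory
    3: frozenset({Protocol.IO, Protocol.MEM}),                 # e.g. memory expander
}

def device_caches_host_memory(device_type: int) -> bool:
    return Protocol.CACHE in DEVICE_TYPES[device_type]

def host_load_store_to_device_memory(device_type: int) -> bool:
    return Protocol.MEM in DEVICE_TYPES[device_type]

for t in sorted(DEVICE_TYPES):
    print(f"Type {t}: caches host memory={device_caches_host_memory(t)}, "
          f"host load/store to device memory={host_load_store_to_device_memory(t)}")
```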

That CXL transition will not happen overnight, but it is going to be a big deal for the industry. A great example is the SSD: a drive that relies on its own DRAM cache today may not need that local DRAM tomorrow if it can use main system memory instead. Combine that with technology such as 3D XPoint, or Optane PMem in current Intel terms, and an SSD would not need a local DRAM cache or power-loss protection. Instead, data could be pulled from main memory and placed directly on NAND.
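The SSD example can be sketched as a toy model of two write paths. This is a deliberate simplification for illustration: the stage names, and the rule that volatile on-drive buffering is what forces a drive to carry DRAM and power-loss protection (PLP), are assumptions made for this sketch. It ignores other uses of SSD DRAM, such as mapping tables, and it does not describe any shipping drive or how CXL devices will actually be built.

```python
from dataclasses import dataclass
from typing import List

@dataclass(frozen=True)
class Stage:
    name: str
    on_ssd: bool     # is this buffer physically on the drive?
    volatile: bool   # would data here be lost on a sudden power failure?

# Simplified write path today: data is staged in the drive's own DRAM
# before it is committed to NAND.
TODAY: List[Stage] = [
    Stage("host DRAM", on_ssd=False, volatile=True),
    Stage("SSD DRAM cache", on_ssd=True, volatile=True),
    Stage("NAND", on_ssd=True, volatile=False),
]

# Hypothetical CXL-era path: data sits in host persistent memory (e.g. Optane
# PMem) and the drive pulls it straight onto NAND, with no on-drive DRAM stage.
CXL_PMEM: List[Stage] = [
    Stage("host persistent memory", on_ssd=False, volatile=False),
    Stage("NAND", on_ssd=True, volatile=False),
]

def drive_needs_dram_and_plp(path: List[Stage]) -> bool:
    """Toy rule: the drive needs local DRAM plus PLP only if it buffers
    data in volatile on-drive memory before the data reaches NAND."""
    return any(s.on_ssd and s.volatile for s in path)

def transfers(path: List[Stage]) -> int:
    """Number of hops the data makes between stages."""
    return len(path) - 1

for label, path in (("today", TODAY), ("CXL + PMem", CXL_PMEM)):
    print(f"{label}: {transfers(path)} transfers, "
          f"drive needs DRAM + PLP: {drive_needs_dram_and_plp(path)}")
```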

While that may look like a small bullet point, it is by far the #1 development that will happen in the industry. You can learn more about CXL in:

- New CXL Details at Intel Interconnect Day 2019

- CXL and Gen-Z Lay Borders with a Formal MOU We Talk Impact

When we say CXL is a game-changer, some of the early examples are easy to imagine, and according to Intel, the hardware is less than 16 months away.

One will also notice that “DSA” is listed for Sapphire Rapids. The Intel team is focusing on how to move data efficiently around future systems where many devices are all requesting data. Its products will need dedicated functionality just to manage that volume of data movement, and the Data Streaming Accelerator (DSA) is part of that solution.
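Intel did not detail the DSA programming model in this presentation, so the following is only a toy illustration of the general descriptor-based offload idea: software builds work descriptors, hands them to an engine, and later checks a completion flag instead of copying bytes itself. The class names, fields, and operations are hypothetical, the engine runs synchronously here, and this is not Intel’s DSA interface.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, Optional

@dataclass
class WorkDescriptor:
    op: str                       # "copy" or "fill" in this toy model
    dst: bytearray
    src: Optional[bytes] = None   # source buffer for "copy"
    pattern: int = 0              # byte value for "fill"
    done: bool = False            # stands in for a completion record

class ToyDataMover:
    """Hypothetical engine: software queues descriptors, the engine moves the bytes."""

    def __init__(self) -> None:
        self.queue: Deque[WorkDescriptor] = deque()

    def submit(self, desc: WorkDescriptor) -> None:
        self.queue.append(desc)

    def process(self) -> None:
        # Real hardware would run asynchronously; this toy drains the queue in order.
        while self.queue:
            d = self.queue.popleft()
            if d.op == "copy":
                if d.src is None or len(d.src) > len(d.dst):
                    raise ValueError("copy needs a source no larger than dst")
                d.dst[: len(d.src)] = d.src
            elif d.op == "fill":
                d.dst[:] = bytes([d.pattern]) * len(d.dst)
            else:
                raise ValueError(f"unsupported op: {d.op}")
            d.done = True

# Usage: queue two jobs, let the engine run, then check the completion flags.
src = b"sapphire rapids"
dst = bytearray(len(src))
scratch = bytearray(8)
engine = ToyDataMover()
copy_job = WorkDescriptor("copy", dst, src=src)
fill_job = WorkDescriptor("fill", scratch, pattern=0xAB)
engine.submit(copy_job)
engine.submit(fill_job)
engine.process()
print(copy_job.done, bytes(dst), fill_job.done, scratch.hex())
```

The point of the pattern is that the CPU only builds small descriptors and checks completion status, leaving the bulk byte movement to dedicated hardware.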

Final Words

There is an amazing amount of technology that is awaiting enablement by Intel Ice Lake Xeons and PCIe Gen4. Once that is in the market, Sapphire Rapids will come with a new platform designed for CXL and change the game again. These are exciting times.

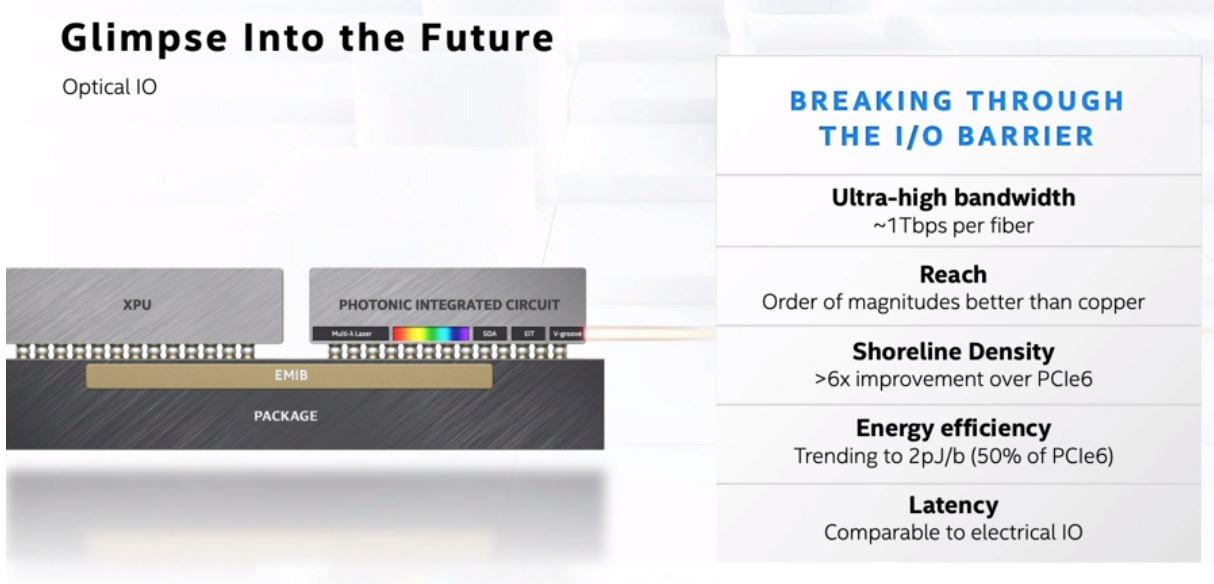

After our Hands-on with the Intel Co-Packaged Optics and Silicon Photonics Switch earlier this year (video here), I had a question after hearing Intel’s presentation, so I asked Intel’s Chief Architect, Raja Koduri, when we will see silicon photonics integrated into chips. For reference, as we move beyond Sapphire Rapids, demands on data movement will be so high, especially in a CXL environment, that higher throughput and lower latency will be needed. We are also at the point where motherboard PCBs needed to be upgraded for PCIe Gen4, and tighter constraints on trace runs are making vendors look at more cabling in servers that would previously have used PCB risers.

Raja, of course, declined to give product specifics but did say he sees the need for this type of technology in much less than 10 years, likely 5 years. He had a big smile since he knew I was fishing a bit here, so we will not hold him to five years, but this change is coming.
