Testing Intel QAT Encryption: nginx Performance

Here we are going to look at something that goes beyond QAT hardware acceleration alone: nginx HTTPS TLS handshake performance using the QAT Engine. This covers both the QAT hardware offload and the IPP crypto library path, which uses instruction-set extensions built into Ice Lake. Since performance varies a lot between these cases, we targeted 45,000 connections per second and tuned each configuration to land near that mark by dialing the thread count up and down and by testing different placements of the threads on the chips.
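The "dial threads up and down" tuning described above amounts to a search for the smallest thread count that still reaches the target rate. Here is a minimal sketch, assuming throughput rises monotonically with thread count. `measure_cps`, `min_threads_for_target`, the 128-thread upper bound, and the linear toy model are all hypothetical stand-ins for a real load-generator run, not part of our test harness. The sketch also ignores thread placement across the chips, which it does not capture.

```python
from typing import Callable, Optional


def min_threads_for_target(
    measure_cps: Callable[[int], float],
    target_cps: float,
    lo: int = 1,
    hi: int = 128,
) -> Optional[int]:
    """Smallest thread count in [lo, hi] whose measured connections/second
    reaches target_cps, or None if even `hi` threads fall short.

    Binary search is only valid if throughput never drops as threads are
    added; real runs are noisy, so each measurement should be repeated.
    """
    if measure_cps(hi) < target_cps:
        return None
    while lo < hi:
        mid = (lo + hi) // 2
        if measure_cps(mid) >= target_cps:
            hi = mid  # target met: try fewer threads
        else:
            lo = mid + 1  # target missed: need more threads
    return lo


# Hypothetical stand-in for a real benchmark run: assume linear scaling
# at the baseline's ~450 cps per thread (~45,000 cps / 100 threads).
def toy_baseline(threads: int) -> float:
    return 450.0 * threads


print(min_threads_for_target(toy_baseline, 45_000))  # 100
```

In practice, each `measure_cps` call would be a full benchmark run, which is why a search that needs only a handful of runs beats sweeping every thread count. Real scaling is not linear, which is part of why our own tuning took so much trial and error.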

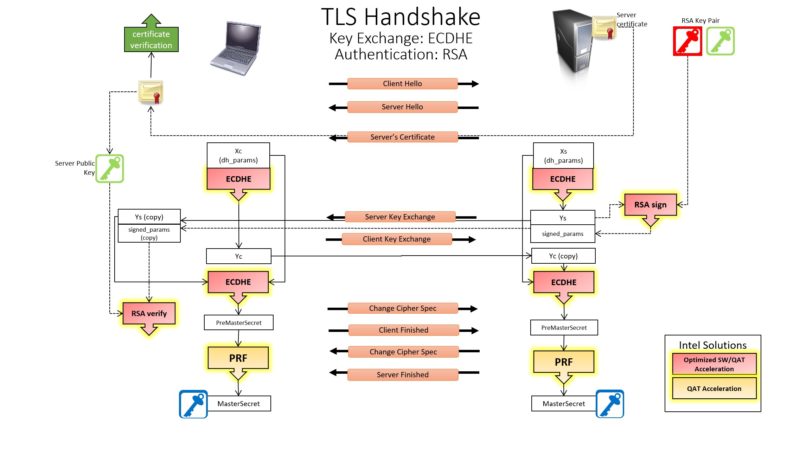

To give some sense of where the acceleration happens, here is Intel's diagram showing which parts of the HTTPS TLS handshake it accelerates:

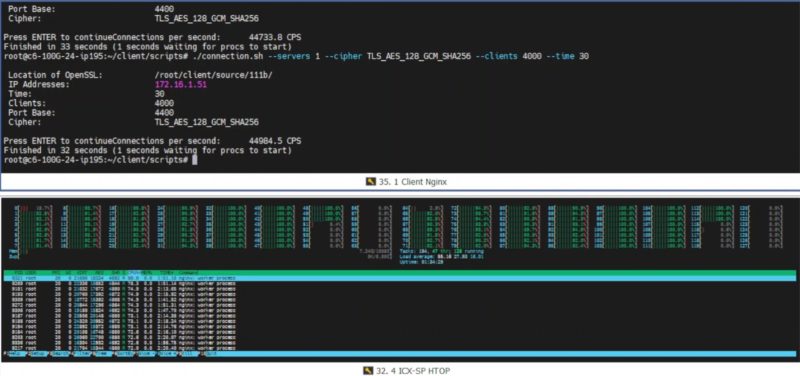

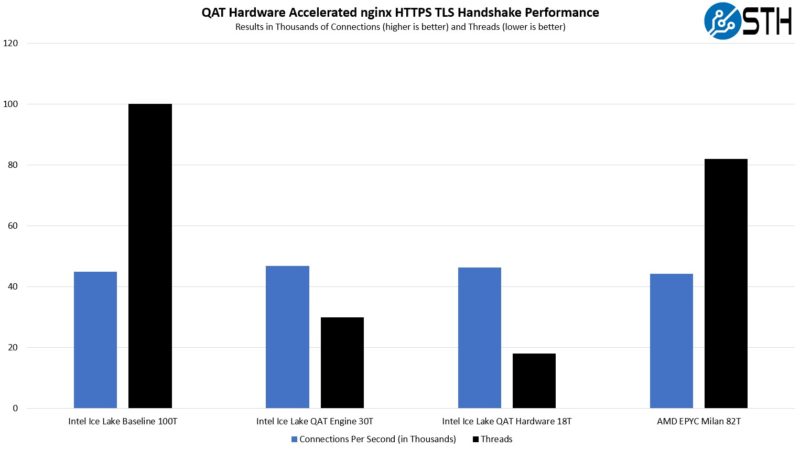

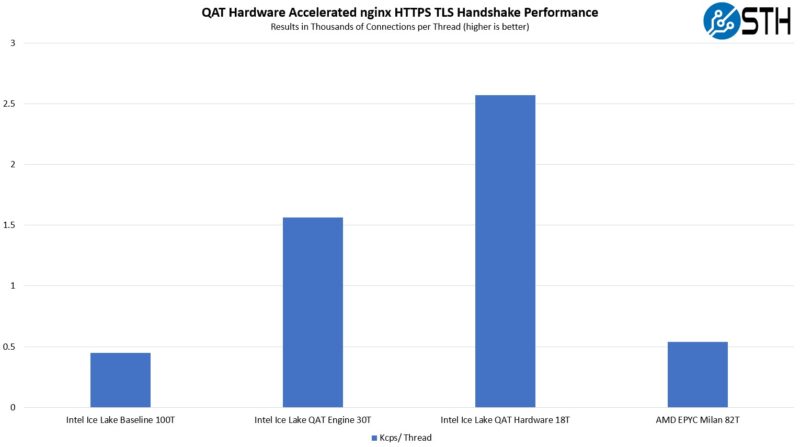

To give a sense of how taxing this is, here is a screenshot of ~45,000 connections per second without any QAT acceleration, using 100 threads. With the QAT Engine and the IPP crypto library, this drops to 30 threads while performance rises to around 46,900 connections per second. We tried dropping cores further, but lost too much performance. Again, it took a lot of trial and error to land in the ~45Kcps range.

With the QAT hardware acceleration, we are doing effectively the same work with only 18 threads.

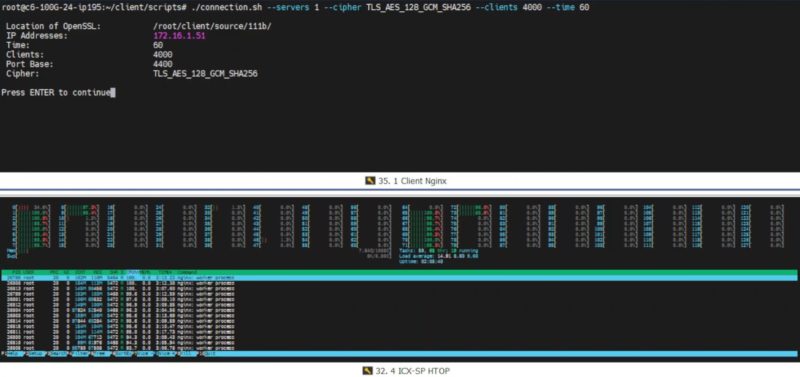

AMD needed only 82 threads to reach this ~45K connections-per-second range (44,200 cps). The AMD platform has some crypto acceleration, but not as much as we get from the more optimized QAT solutions.

What we learned here is that, thanks to its higher clocks and TDP, AMD used 18% fewer resources (82 threads vs. 100) to hold around the 45Kcps range. Once the Intel QAT Engine was added, Intel pulled ahead. Finally, with QAT hardware acceleration added, Intel delivered roughly 4.8x the performance of AMD's implementation on a connections-per-core basis, and over 5x that of our Intel Xeon baseline.
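The per-core comparison behind these ratios is simple arithmetic: divide each run's connections per second by the number of threads it used. The runs were tuned by thread count, so threads stand in for cores here. This minimal sketch uses the figures reported on this page. The QAT hardware rate is not stated exactly, so the sketch assumes a placeholder of ~45,000 cps for that run. With that placeholder, the QAT-vs-AMD ratio comes out near 4.6x rather than 4.8x, a gap that presumably reflects the measured rate. The dictionary keys and function name are illustrative only.

```python
# (cps, worker threads) as reported above. The QAT hardware run is only
# described as "effectively the same work" with 18 threads, so 45,000 cps
# is an ASSUMED placeholder, not a measured figure.
RESULTS = {
    "Xeon baseline": (45_000, 100),
    "Xeon + QAT Engine (IPP)": (46_900, 30),
    "Xeon + QAT hardware": (45_000, 18),
    "AMD EPYC": (44_200, 82),
}


def cps_per_thread(cps: float, threads: int) -> float:
    """Connections per second delivered by each worker thread."""
    return cps / threads


per_thread = {name: cps_per_thread(*run) for name, run in RESULTS.items()}
for name, value in per_thread.items():
    print(f"{name:<24} {value:8.1f} cps/thread")

# Fraction of threads AMD saved versus the Xeon baseline (82 vs. 100).
amd_thread_savings = 1 - RESULTS["AMD EPYC"][1] / RESULTS["Xeon baseline"][1]
print(f"AMD thread savings vs. baseline: {amd_thread_savings:.0%}")

qat = per_thread["Xeon + QAT hardware"]
print(f"QAT hardware vs. AMD:      {qat / per_thread['AMD EPYC']:.1f}x")
print(f"QAT hardware vs. baseline: {qat / per_thread['Xeon baseline']:.1f}x")
```

Replacing the placeholder with the measured QAT hardware rate is the only change needed to reproduce the exact ratios.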

Taking a step back, this is what makes servers notoriously difficult to benchmark. Companies promoting other architectures, such as Arm CPUs, often highlight nginx performance. What they often miss is that much of their advantage disappears once encryption and compression are turned on and the proper accelerators are used. Using IPP with the QAT Engine requires no special hardware, yet even that delivers massive performance gains.

At the same time, many folks run nginx web servers on Ice Lake without the QAT Engine/IPP offload and are therefore not seeing this performance.

Next, let us discuss the “gotchas” of QAT, and our final words.

I'm going to come back and read this in more detail later. TY for covering all this. I've been wondering whether there were updates to QAT. They're too quiet on the tech.

IPsec and TLS are important protocols and it’s nice to see them substantially accelerated. What happens if one uses WireGuard for a VPN? Does the QAT offer any acceleration or is the special purpose hardware just not applicable?

Hi Eric – check out the last page where WireGuard is mentioned briefly.

How about sticking a QAT card into an AMD Epyc box? Would be nice to see how this works and get some numbers.

I came here to post the same thing that Herbert did. Is this more 'Intel only' tech, or is it general purpose?

Also, which OSes did you test with? From the screenshot it's obvious that you used some flavour of Linux or BSD, but I'd like to know the specifics.

It would also be interesting to know if Windows Server saw the same % of benefit from using these cards. (I'm a Linux/BSD-only sysadmin, but it would still be nice to know.)

I don’t think QAT on EPYC or Ampere is supported by anyone, no?

I thought I saw in the video's screenshots that they're using Ubuntu 22.04.x?

Some of the libraries are available in standard distributions; however, it seems you must build the QAT Engine from source to use it, as there are no binary packages. I think this limits its usability for a lot of organizations. I would be especially wary if it's not possible to upgrade OpenSSL.