Testing Intel QAT Encryption: IPsec VPN Performance

IPsec VPN performance is another great example of an area where commercial providers figured out that QAT hardware acceleration offered a huge performance boost. IPsec VPNs are a mature and widely deployed technology, and encryption is a big part of establishing secure connections over the public Internet. As a result, we looked at IPsec VPN performance in a few configurations:

- Intel Ice Lake Xeon Gold 6338N with:

  - QAT Engine software acceleration using the Intel Multi-Buffer Crypto for IPsec library, which you can find on GitHub

  - QAT hardware acceleration

- AMD EPYC 7513 “Milan” with:

  - AES-NI acceleration, but not the AVX-512 VAES path, since Milan does not support AVX-512

On this one, we are including the AMD EPYC 7513 numbers mainly to stay consistent. Many of the instructions that the QAT Engine’s software acceleration uses are not present in the EPYC 7003 series. We still show the results, but perhaps the most important thing to look at is how the Intel configurations scale.

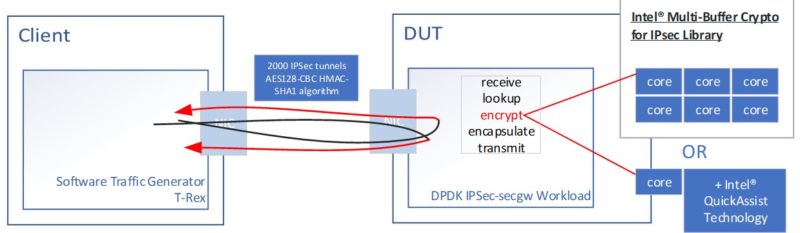

Here is the basic diagram. We are using the DPDK ipsec-secgw sample application to measure how many packets per second (and how many Gbps) we can process using IPsec. We are using the DPDK libraries here since DPDK is widely used across Intel, AMD, and Arm solutions at this point. Cisco TRex is our load generator, and we set a maximum acceptable packet drop rate of 0.00001%.
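A drop-rate limit like this implies searching for the highest offered load that still stays under the limit. The sketch below shows one common way to do that: a binary search in the style of an RFC 2544 no-drop-rate test. It is a minimal sketch under stated assumptions. The `trial` hook and the `toy_trial` device model are hypothetical stand-ins, not TRex’s API, and we are not claiming this is exactly how our runs searched for the rate. Note that 0.00001% is a loss ratio of 1e-7, or at most one dropped packet per ten million sent.

```python
from typing import Callable, Tuple

# 0.00001% as a fraction: 0.00001 / 100 = 1e-7 (one drop per ten million packets)
MAX_LOSS_RATIO = 0.00001 / 100


def loss_ratio(sent: int, received: int) -> float:
    """Fraction of offered packets that were dropped."""
    if sent <= 0:
        raise ValueError("sent must be positive")
    return (sent - received) / sent


def find_ndr(
    trial: Callable[[float], Tuple[int, int]],
    low_gbps: float,
    high_gbps: float,
    max_loss: float = MAX_LOSS_RATIO,
    resolution_gbps: float = 0.1,
) -> float:
    """Binary-search the highest offered rate whose loss stays within max_loss.

    `trial(rate)` is a hypothetical hook: it offers traffic at `rate` Gbps
    and returns (packets_sent, packets_received).
    """
    best = low_gbps
    while high_gbps - low_gbps > resolution_gbps:
        mid = (low_gbps + high_gbps) / 2
        sent, received = trial(mid)
        if loss_ratio(sent, received) <= max_loss:
            best = mid         # within the loss limit: search higher
            low_gbps = mid
        else:
            high_gbps = mid    # too many drops: search lower
    return best


def toy_trial(rate_gbps: float) -> Tuple[int, int]:
    """Hypothetical device model: lossless up to 90 Gbps, drops the excess above it."""
    sent = 10_000_000
    if rate_gbps <= 90.0:
        return sent, sent
    return sent, int(sent * 90.0 / rate_gbps)


if __name__ == "__main__":
    print(f"No-drop rate ~ {find_ndr(toy_trial, 0.0, 100.0):.1f} Gbps")
```

Binary search keeps the number of trials small (about ten here for a 0.1Gbps resolution over 0 to 100Gbps), which matters when each real trial runs traffic for some time.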

When we talk about the QAT Engine here, we mean running on the CPU cores and using features such as the VAES (vectorized AES) instructions on the Ice Lake cores. This is different from using the Intel QAT hardware accelerator, even though one could call it a form of acceleration.
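A toy model helps show why this multi-buffer style of software acceleration benefits from many independent flows. An AES block is 128 bits, so a 128-bit AES-NI instruction works on one block, while VAES can work on two blocks in a 256-bit register or four in a 512-bit AVX-512 register. In a serially dependent mode such as CBC encryption, one buffer’s blocks cannot fill those lanes by themselves, so the lanes are filled with blocks from different buffers (for example, packets on different tunnels). This is a sketch under those assumptions only: `multi_buffer_passes` is a hypothetical helper, and the model ignores instruction latency, pipelining, and the Intel library’s actual job API.

```python
AES_BLOCK_BITS = 128  # AES always operates on 128-bit blocks


def lanes_for_register(register_bits: int) -> int:
    """Number of AES blocks one vector AES instruction can hold."""
    if register_bits % AES_BLOCK_BITS:
        raise ValueError("register width must be a multiple of 128 bits")
    return register_bits // AES_BLOCK_BITS


def multi_buffer_passes(buffer_blocks: list[int], lanes: int) -> int:
    """Count lock-step passes needed to encrypt every block of every buffer.

    Each pass encrypts at most one block from each of up to `lanes` buffers,
    because within one buffer (e.g. CBC encryption) block i+1 depends on block i.
    Buffers with the most blocks left are scheduled first.
    """
    if lanes < 1:
        raise ValueError("lanes must be at least 1")
    remaining = [b for b in buffer_blocks if b > 0]
    passes = 0
    while remaining:
        remaining.sort(reverse=True)
        for i in range(min(lanes, len(remaining))):
            remaining[i] -= 1
        remaining = [b for b in remaining if b > 0]
        passes += 1
    return passes


if __name__ == "__main__":
    four_packets = [8, 8, 8, 8]  # hypothetical: four packets of 8 blocks (128 bytes) each
    for name, bits in [("AES-NI", 128), ("VAES 256-bit", 256), ("VAES 512-bit", 512)]:
        lanes = lanes_for_register(bits)
        passes = multi_buffer_passes(four_packets, lanes)
        print(f"{name}: {lanes} lane(s), {passes} passes")
    # A single serially dependent buffer gains nothing from extra lanes.
    print("one packet, 4 lanes:", multi_buffer_passes([8], 4), "passes")
```

In this model, four packets take 32 passes with one lane and 8 passes with four lanes, while a single packet takes 8 passes regardless of lane count. That is one intuition for why a test with many tunnels gives vector AES room to help.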

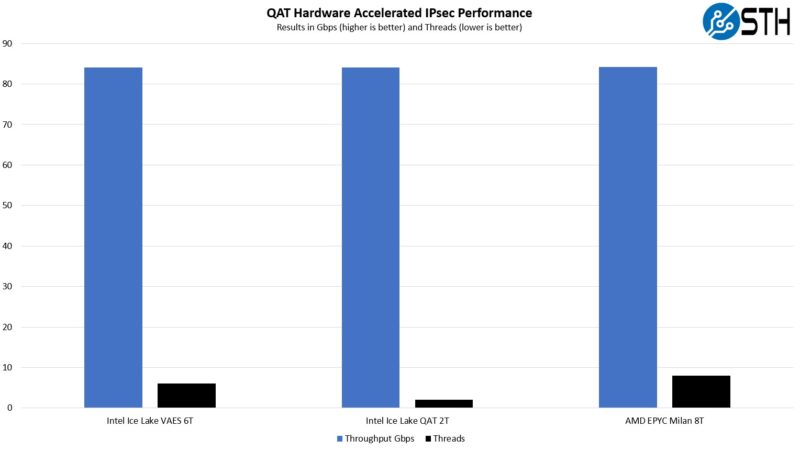

Here, we see that VAES helps Intel quite a bit. Ice Lake Xeons can hit our ~84Gbps target traffic across 2,000 IPsec tunnels using only 6 threads, while AMD needs 8 threads. To be fair, the AMD EPYC 7513 reached 84.14Gbps and 7.39Mpps here, while the Intel configurations reached 84.04Gbps and 7.38Mpps. Again, we tried different core/ thread placements. The bigger challenge was that we were using a 100Gbps link, so with 64 cores/ 128 threads in this system, the main bottleneck was the network link between the client and server.
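The reported figures also support some quick arithmetic: throughput per thread, and the average packet size implied by the Gbps and Mpps pair. This is a back-of-the-envelope sketch that uses only the numbers above. It assumes the Gbps figure counts packet bytes only (no Ethernet preamble or inter-frame gap), which the results do not state, so treat the packet size as an estimate.

```python
# Figures reported above: (throughput in Gbps, packet rate in Mpps, threads used)
RESULTS = {
    "Intel Xeon Gold 6338N (VAES)": (84.04, 7.38, 6),
    "AMD EPYC 7513 (AES-NI)": (84.14, 7.39, 8),
}
LINK_GBPS = 100.0  # link speed between the client and server


def gbps_per_thread(gbps: float, threads: int) -> float:
    """Throughput delivered per worker thread."""
    return gbps / threads


def avg_packet_bytes(gbps: float, mpps: float) -> float:
    """Average bytes per packet implied by a Gbps/Mpps pair (assumes no L1 overhead)."""
    bits_per_packet = (gbps * 1e9) / (mpps * 1e6)
    return bits_per_packet / 8


def link_utilization(gbps: float, link_gbps: float = LINK_GBPS) -> float:
    """Fraction of the link consumed by the measured traffic."""
    return gbps / link_gbps


if __name__ == "__main__":
    for name, (gbps, mpps, threads) in RESULTS.items():
        print(
            f"{name}: {gbps_per_thread(gbps, threads):.2f} Gbps/thread, "
            f"~{avg_packet_bytes(gbps, mpps):.0f} B/packet, "
            f"{link_utilization(gbps):.1%} of the {LINK_GBPS:.0f}Gbps link"
        )
```

With these inputs, Intel works out to roughly 14.0Gbps per thread versus roughly 10.5Gbps per thread for AMD. Both runs imply an average packet of roughly 1.4KB, and both sit at about 84% of the 100Gbps link.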

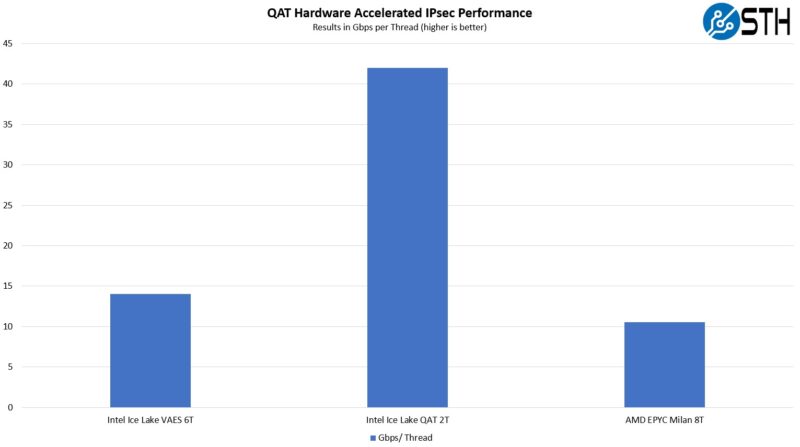

Here is a look at this result in a similar Gbps/ core view.

What we can see here is that the QAT accelerator helps a lot, as do the VAES instructions compared with traditional AES-NI acceleration, which is what the AMD EPYC Milan CPU uses.

This will matter more in a future piece we are going to show you, but for now, the jump in Gbps per thread from using QAT is much larger than the gap between Intel and AMD without acceleration.

Next, let us take a look at nginx performance.
