Intel QuickAssist Technology (QAT) offloads cryptography and compression from the CPU, which can greatly accelerate workloads such as IPsec VPNs. Check out our first Intel QuickAssist benchmarking and setup tips piece, where we went over the implications of moving to QuickAssist 1.6 and some of the nuances of setup. In this article, we wanted to take a look at IPsec VPN performance. We expect QuickAssist to be a more prevalent feature in Intel’s 2017 lineup, so it is time to start looking at the technology in more depth.

QuickAssist Hardware and Test Setup

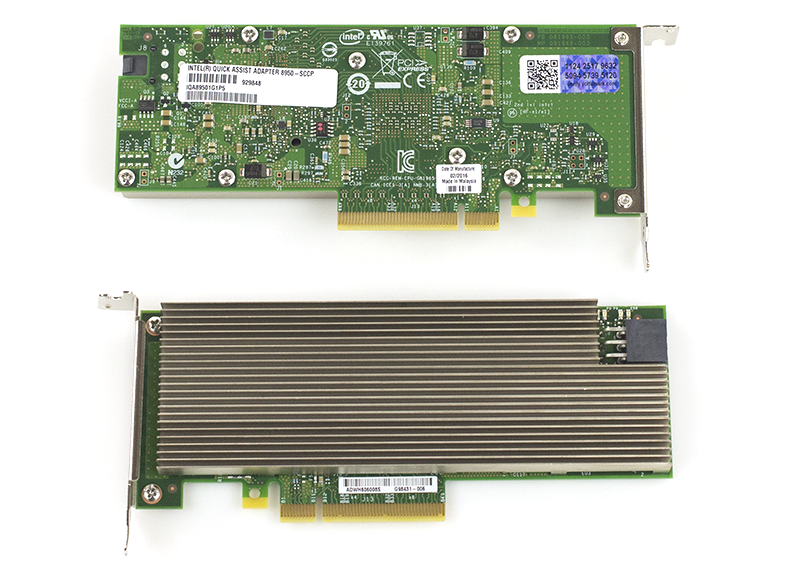

We are using the same QAT hardware we used in our original piece: two sets of cards, both based on Intel’s highest-end Coleto Creek 8955 chipset. Our primary QAT accelerators were Netgate CPIC-8955 cards. At the time of this writing, they retail for around $800, but Netgate loaned us two cards for our QAT testing. The Netgate CPIC-8955 is the highest-end “Coleto Creek” PCIe QAT accelerator on the market, rated for up to 50Gbps of QAT throughput. These are full-height cards with active cooling. Netgate provided both the cards and support for getting us started with QAT. That support made this article possible by greatly speeding up our test setup cycle. If you are thinking of embarking on QuickAssist acceleration, having a QAT guru on hand is extremely helpful.

Second, we had the Intel QuickAssist Adapter 8950 cards that Intel sent us. We have been using these cards for some time, and they will be used more heavily in one of our upcoming pieces. They also use the Intel Coleto Creek 8955 chipset. They are half-height cards and require good chassis cooling to provide airflow over the large heatsink, so we recommend them only in server chassis with appropriate airflow.

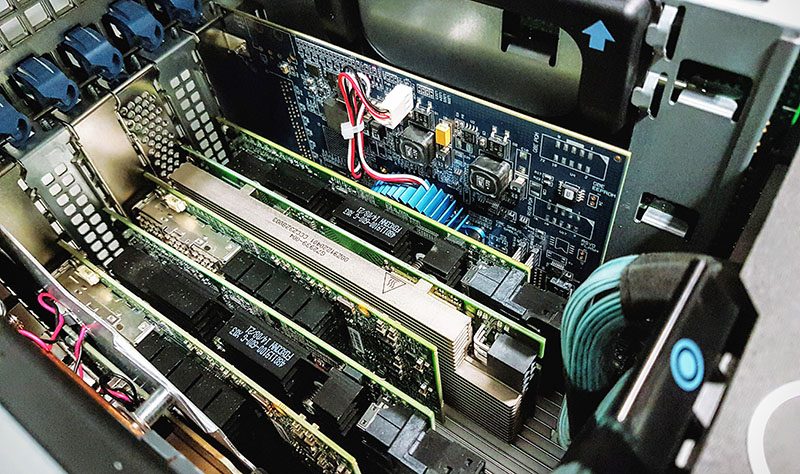

Here is a look at one of our first test beds (a Dell PowerEdge R930), which we used for QAT benchmarking dry runs with 180Gbps worth of networking and several QuickAssist accelerators:

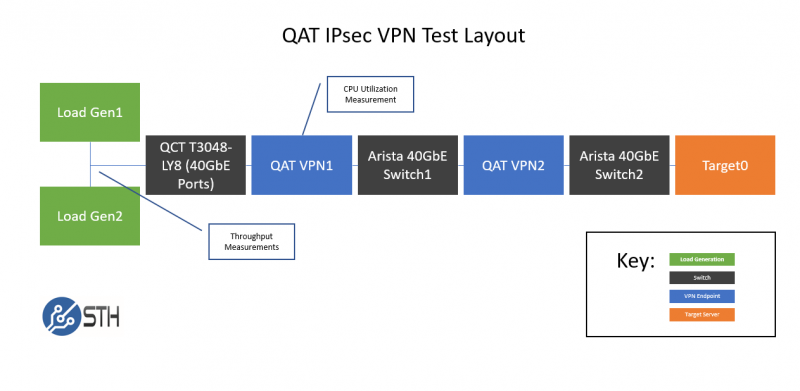

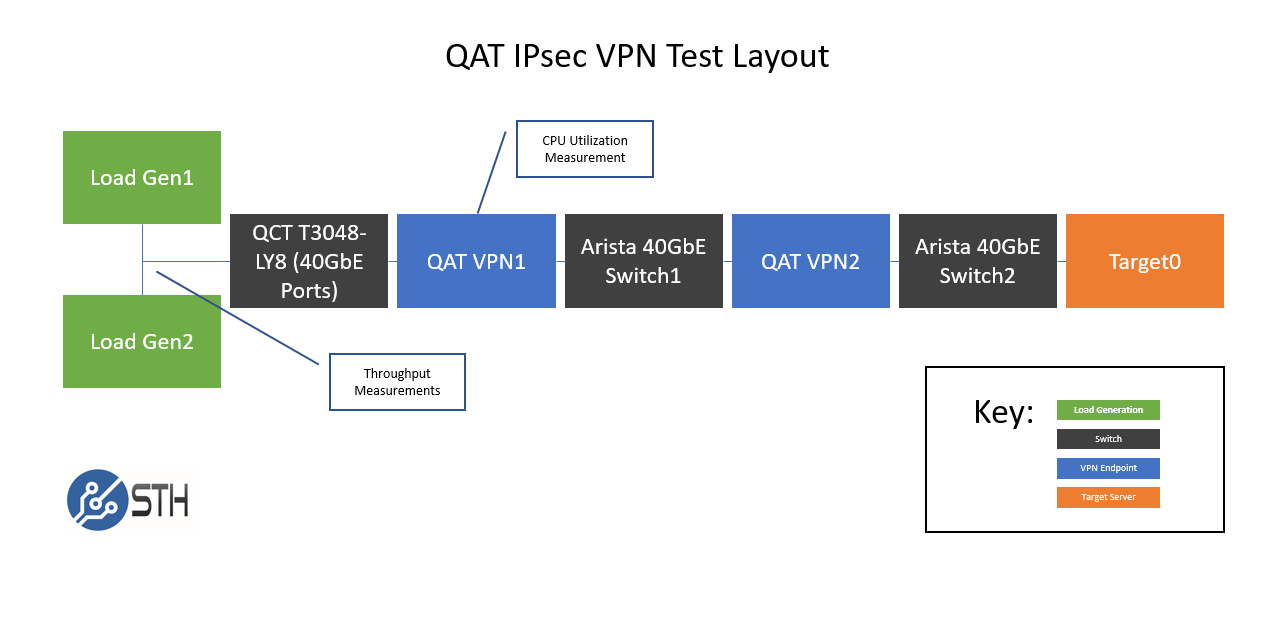

When testing the higher-end QAT cards, dual 10GbE is not sufficient for network bandwidth testing; you need at least 40GbE. We also decided against the two-node configuration we saw in some other results and instead ran a more “real-world” setup.
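The bandwidth argument above is simple arithmetic, sketched below as a sanity check. The target figures come from this article (Intel’s 40Gbps claim and the CPIC-8955’s 50Gbps rating); the helper names are hypothetical, and nominal line rate ignores Ethernet, IP and ESP overhead, so passing the check is necessary but not sufficient.

```python
INTEL_CLAIM_GBPS = 40.0      # Intel's "40Gbps on two cores" figure we set out to reproduce
CPIC_8955_RATED_GBPS = 50.0  # Netgate CPIC-8955 rated QAT throughput


def aggregate_gbps(links_gbps):
    """Sum of nominal line rates for the links feeding one side of a VPN node."""
    return float(sum(links_gbps))


def can_saturate(links_gbps, target_gbps=INTEL_CLAIM_GBPS):
    """True if nominal link capacity reaches the target throughput.

    Line rate ignores protocol overhead, so True only means the links are
    not guaranteed to be the bottleneck; False means they definitely are.
    """
    return aggregate_gbps(links_gbps) >= target_gbps


if __name__ == "__main__":
    for name, links in {"dual 10GbE": [10, 10], "single 40GbE": [40], "dual 40GbE": [40, 40]}.items():
        print(f"{name}: {aggregate_gbps(links):.0f} Gbps nominal, "
              f"meets 40Gbps claim: {can_saturate(links)}, "
              f"meets 50Gbps rating: {can_saturate(links, CPIC_8955_RATED_GBPS)}")
```

Dual 10GbE tops out at 20Gbps nominal, half of the figure being tested, which is why 40GbE links were the minimum.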

In terms of the actual layout, we used the STH/DemoEval lab to set up something closer to a “real world” environment, although a 40GbE switch sat where a WAN connection would be in the real world. When we tested Cavium’s parts, a team at Cavium advised us to use a different switch for each network segment. Each 40GbE segment also had its own 40GbE adapter (Mellanox ConnectX-3 EN Pro on the load generation nodes, two Chelsio T580-LP-CR on the target node and two Intel XL710-QDA2 in each QAT VPN node) so that we did not run into PCIe bandwidth issues. Although every 40GbE port was active and fitted with a 40GbE DAC, a PCIe 3.0 x8 card cannot sustain 40GbE on both ports of a dual-port NIC at once. That is a big reason why the entry and exit ports on the VPN nodes were on different Intel XL710 NICs. We ran this test over a weekend when DemoEval services were offline, so the three switches were under 0.01% load outside of our testing. Thanks to Patrick for clearing space.
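The PCIe limit can be checked with a quick calculation. PCIe 3.0 signals at 8 GT/s per lane with 128b/130b encoding; the sketch below computes per-direction payload bandwidth for a link and compares it with the ports behind it. The `protocol_efficiency` factor for TLP/DLLP overhead is an assumption you would tune for your platform, and the function names are hypothetical.

```python
PCIE3_GT_PER_LANE = 8.0          # PCIe 3.0 signaling rate, GT/s per lane
PCIE3_ENCODING = 128.0 / 130.0   # 128b/130b line-encoding efficiency


def pcie3_usable_gbps(lanes, protocol_efficiency=1.0):
    """Per-direction bandwidth of a PCIe 3.0 link in Gbps after line encoding.

    protocol_efficiency is an assumed factor for packet (TLP/DLLP) overhead;
    1.0 ignores it, so real usable bandwidth is lower.
    """
    return PCIE3_GT_PER_LANE * PCIE3_ENCODING * lanes * protocol_efficiency


def ports_fit(lanes, port_gbps, ports, protocol_efficiency=1.0):
    """True if all ports can run at line rate in one direction simultaneously."""
    return port_gbps * ports <= pcie3_usable_gbps(lanes, protocol_efficiency)


if __name__ == "__main__":
    print(f"x8 link: {pcie3_usable_gbps(8):.1f} Gbps per direction")
    print("one 40GbE port fits:", ports_fit(8, 40, 1))
    print("two 40GbE ports fit:", ports_fit(8, 40, 2))
```

An x8 slot works out to roughly 63Gbps per direction even before packet overhead, so one 40GbE port fits but two do not, matching the decision to split entry and exit traffic across separate NICs.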

We are interested in two primary data points. First, VPN throughput between the two networks, since that is ultimately what we are aiming to achieve. Second, the CPU requirements of the QAT VPN nodes.
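For the second data point, one common way to measure CPU load on Linux is to diff two snapshots of `/proc/stat`. The sketch below is one such approach, not necessarily the tooling we used; the function names are hypothetical. It counts idle plus iowait as idle, and uses only the first eight counters because guest time is already included in user time.

```python
import time


def read_cpu_times(stat_text):
    """Parse /proc/stat text into {cpu_name: (busy_jiffies, total_jiffies)}.

    Uses user nice system idle iowait irq softirq steal; guest/guest_nice
    are already counted in user/nice, so they are excluded.
    """
    times = {}
    for line in stat_text.splitlines():
        fields = line.split()
        if not fields or not fields[0].startswith("cpu"):
            continue  # skip intr, ctxt, btime and other non-CPU lines
        values = [int(v) for v in fields[1:9]]
        idle = values[3] + values[4]  # idle + iowait
        total = sum(values)
        times[fields[0]] = (total - idle, total)
    return times


def utilization(before, after):
    """Percent busy per CPU between two parsed snapshots."""
    result = {}
    for cpu, (busy1, total1) in after.items():
        busy0, total0 = before.get(cpu, (0, 0))
        delta_total = total1 - total0
        result[cpu] = 100.0 * (busy1 - busy0) / delta_total if delta_total else 0.0
    return result


def sample(interval_s=1.0, path="/proc/stat"):
    """Take two /proc/stat snapshots interval_s apart (Linux only)."""
    with open(path) as f:
        before = read_cpu_times(f.read())
    time.sleep(interval_s)
    with open(path) as f:
        after = read_cpu_times(f.read())
    return utilization(before, after)
```

Running `sample()` on a VPN node while iperf3 traffic flows gives a per-core busy percentage, including the aggregate `cpu` line.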

The load generation servers were dual Intel Xeon E5-2698 V4 machines with 256GB RAM. We used a quad Intel Xeon E7-8870 V3 system with 512GB of RAM as the target server. The QAT VPN nodes were single Xeon E5-2650 V3 machines with 128GB RAM, each with two XL710-QDA2 dual-port 40GbE cards installed. We picked single-CPU E5-2650 V3 nodes so we did not have to deal with two-socket NUMA effects.
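On a two-socket VPN node you would want the NICs and QAT card attached to the socket doing the work. As a minimal sketch (the function name is hypothetical), Linux exposes each network device’s NUMA node under `/sys/class/net/<iface>/device/numa_node`; a value of -1 means the kernel reports no NUMA affinity, which is typical on single-socket systems like ours.

```python
import os


def nic_numa_nodes(sysfs_root="/sys/class/net"):
    """Map each network interface to the NUMA node of its PCI device.

    Reads <sysfs_root>/<iface>/device/numa_node. Virtual interfaces such as
    lo have no device link and are skipped; -1 means no NUMA affinity reported.
    """
    nodes = {}
    for iface in sorted(os.listdir(sysfs_root)):
        path = os.path.join(sysfs_root, iface, "device", "numa_node")
        try:
            with open(path) as f:
                nodes[iface] = int(f.read().strip())
        except (FileNotFoundError, NotADirectoryError, ValueError):
            continue
    return nodes


if __name__ == "__main__":
    for iface, node in nic_numa_nodes().items():
        print(f"{iface}: NUMA node {node}")
```

Picking single-socket nodes sidesteps this check entirely, which is the simplification we chose.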

Since there is a huge number of possible configurations and options, such as packet sizes, we focused on seeing whether we could reproduce Intel’s claim of 40Gbps on two cores, so that we did not tie up over $150K worth of demo hardware for months. Configuration details matter: for example, you need to use a supported crypto algorithm for the QAT engine to work. We went over this in our original QAT benchmarking piece. We used aes128-sha1-modp1024! for our IKE and ESP proposals. If you are an engineer at a company looking to OEM VPN appliances with QAT cards (or with QAT as an upcoming onboard feature), we highly suggest contacting Intel or a partner like Netgate for help ensuring that the algorithm you want to use is supported.
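In strongSwan’s ipsec.conf syntax, a proposal is a dash-separated list of algorithms, multiple proposals are comma-separated, and a trailing `!` enables strict mode so strongSwan does not append its default proposals. The sketch below splits such a string and flags cipher or integrity algorithms missing from an allow-list. `QAT_OFFLOADED` is a hypothetical placeholder, not Intel’s supported list; fill it from your own driver documentation and what your kernel registers.

```python
# Hypothetical allow-list: placeholders only. Replace with the algorithms
# your QAT driver and kernel actually register on your system.
QAT_OFFLOADED = {"aes128", "aes256", "sha1", "sha256"}


def parse_proposal(proposal):
    """Split an ipsec.conf-style proposal string into (strict, proposals).

    "aes128-sha1-modp1024!" -> (True, [["aes128", "sha1", "modp1024"]])
    """
    strict = proposal.endswith("!")
    body = proposal.rstrip("!")
    proposals = [p.split("-") for p in body.split(",") if p]
    return strict, proposals


def unsupported(proposal, offloaded=QAT_OFFLOADED):
    """Return algorithms not in the allow-list, sorted.

    DH groups (modp*/ecp*) are skipped: this sketch only checks the bulk
    cipher and integrity algorithms.
    """
    _, proposals = parse_proposal(proposal)
    missing = set()
    for algs in proposals:
        for alg in algs:
            if alg.startswith(("modp", "ecp")):
                continue
            if alg not in offloaded:
                missing.add(alg)
    return sorted(missing)


if __name__ == "__main__":
    print(parse_proposal("aes128-sha1-modp1024!"))
    print(unsupported("aes128-sha1-modp1024!"))
```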

For our test we used CentOS 7 with strongSwan to handle the VPN connection and iperf3 to generate traffic. We also needed to tune our network for larger packet sizes. Finally, we disabled aesni_intel so that the CentOS kernel would use the QAT engine. If you do not do that, CentOS will default to AES-NI and you will NOT be using the QAT card.
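Why disabling aesni_intel matters: the Linux kernel crypto API generally picks the highest-priority implementation registered under an algorithm name, and `/proc/crypto` lists each implementation with its driver, module and priority. The sketch below parses that file and reports which driver would be preferred. Function names are hypothetical, and template-based entries such as `authenc(hmac(sha1),cbc(aes))`, the name ESP uses for AES-CBC with HMAC-SHA1, may only appear once an SA has instantiated them.

```python
def parse_proc_crypto(text):
    """Parse /proc/crypto into a list of dicts, one per registered implementation."""
    entries, current = [], {}
    for line in text.splitlines():
        if not line.strip():
            # A blank line ends one implementation's block.
            if current:
                entries.append(current)
                current = {}
            continue
        key, _, value = line.partition(":")
        current[key.strip()] = value.strip()
    if current:
        entries.append(current)
    return entries


def preferred_driver(entries, name):
    """Return (driver, module) of the highest-priority implementation of name, or None."""
    candidates = [e for e in entries if e.get("name") == name and "priority" in e]
    if not candidates:
        return None
    best = max(candidates, key=lambda e: int(e["priority"]))
    return best.get("driver"), best.get("module")


if __name__ == "__main__":
    with open("/proc/crypto") as f:
        entries = parse_proc_crypto(f.read())
    print(preferred_driver(entries, "cbc(aes)"))
    print(preferred_driver(entries, "authenc(hmac(sha1),cbc(aes))"))
```

If the module reported is aesni_intel rather than a QAT module, traffic is not going through the card.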

At this point, it should be clear why STH is the only independent hardware review site to do any QAT benchmarking. We hope that our process helps others as QAT and similar acceleration technologies are a big deal.

QuickAssist IPsec VPN Test Results

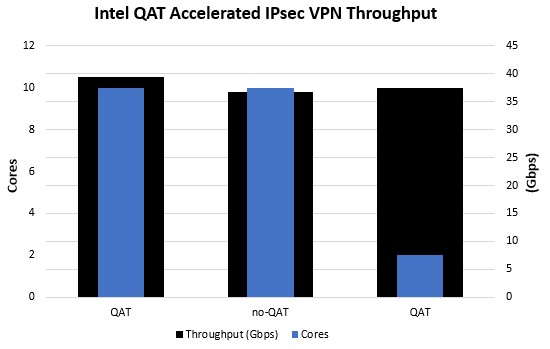

Here is what we saw in terms of results using iperf3:

After a lot of cabling and test machine setup, we think we managed to directionally validate Intel’s numbers, although we were a few percent short of what Intel’s labs were able to produce. Given that traffic was moving through three switches, two dedicated VPN nodes and three test machines, we feel we got within a reasonable margin of error. If you’re looking for a higher-speed IPsec VPN, look at QAT. Non-QAT two-core numbers were below 10GbE speeds, but we saw more run-to-run variance than we would have liked in a test result.
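Statements like “a few percent short” and “larger variance” can be quantified across repeated runs. The sketch below reads receiver-side throughput from `iperf3 -J` JSON output (TCP tests report `end.sum_received.bits_per_second`) and summarizes the runs against Intel’s 40Gbps figure. Function names are hypothetical, and the numbers in any example you feed it are your own, not our measured results.

```python
import json
import statistics


def received_gbps(iperf3_json_text):
    """Receiver-side throughput in Gbps from one `iperf3 -J` TCP run."""
    end = json.loads(iperf3_json_text)["end"]
    summary = end.get("sum_received") or end["sum"]  # "sum" appears for UDP tests
    return summary["bits_per_second"] / 1e9


def summarize(runs_gbps, reference_gbps=40.0):
    """Mean, sample stdev, coefficient of variation and shortfall vs a reference."""
    mean = statistics.mean(runs_gbps)
    stdev = statistics.stdev(runs_gbps) if len(runs_gbps) > 1 else 0.0
    return {
        "mean_gbps": mean,
        "stdev_gbps": stdev,
        "cv_percent": 100.0 * stdev / mean,
        "shortfall_percent": 100.0 * (reference_gbps - mean) / reference_gbps,
    }
```

A high coefficient of variation is a signal to run more iterations before drawing conclusions, which is the situation we hit with the non-QAT runs.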

QuickAssist Resources

Here are some of the resources that helped the STH team set up these benchmarks. QAT is still far from automatic, so there is considerable setup involved.

- Intel QAT resources on Intel.com

- Intel QAT installation debug video

- Intel QAT 01.org on GitHub (mandatory reading if you want to use QAT)

- Intel’s Getting Started Guide

- Intel’s QAT resources on 01.org

- Netgate – invaluable for getting STH QAT testing online and they are doing a lot of QAT work for products they sell

- Intel – Intel did send me some rather long (70+ pages) QAT guides so the company does have reference materials available

Of these resources, having Netgate’s assistance was by far the most helpful. Netgate is the company behind the popular pfSense network appliance distribution. The company’s QuickAssist Technology gurus had us up and running with a phone call in a matter of minutes, versus the hours we would otherwise have spent running into small “gotchas”.

Final Words

In higher-end systems, where cores cost a lot due to both hardware and software costs, QAT can be a great advantage. If you are building an Intel-based IPsec VPN appliance, we highly recommend getting QAT accelerators. These accelerators free up CPU resources for other network tasks or allow one to spec a lower-power CPU in the box. We expect our next-generation VPN appliances to have QAT. If you noticed a theme across our two QAT pieces, it is this: get help. You are not alone, and if you want to use QAT in your appliances, seeking help instead of going it alone will save you an enormous amount of time. As we progress into 2017, we expect to see more solutions based on QAT and DPDK as complementary technologies.
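To make the “lower-power CPU” point concrete, here is a back-of-the-envelope sketch. It assumes throughput scales linearly with core count, which real IPsec stacks often do not, and takes per-core rates as inputs. Intel’s 40Gbps-on-two-cores claim works out to 20Gbps per core with QAT; any software-only per-core figure should come from your own measurements. The function names are hypothetical.

```python
import math


def cores_needed(target_gbps, gbps_per_core):
    """Cores required for a target throughput, assuming linear per-core scaling."""
    if gbps_per_core <= 0:
        raise ValueError("gbps_per_core must be positive")
    return math.ceil(target_gbps / gbps_per_core)


def cores_freed(target_gbps, software_gbps_per_core, qat_gbps_per_core):
    """Cores saved by a QAT-assisted design versus a software-only one."""
    return (cores_needed(target_gbps, software_gbps_per_core)
            - cores_needed(target_gbps, qat_gbps_per_core))
```

Because the per-core inputs dominate the answer, treat the output as a sizing starting point rather than a spec.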
