Intel Optane 905P 380GB M.2 Drive Performance

Intel Optane drives tend to put up awesome low queue depth numbers, and Intel usually specs them conservatively. Optane uses 3D XPoint, which can write in place unlike NAND, so these drives do not need big DRAM caches. As a result, most of the “exciting” read and write IOPS numbers are frankly less so versus traditional NAND devices. Instead of re-hashing Intel’s spec sheet, we wanted to show three use cases for the Intel Optane 905P where its cost may be justified. We are going to test under FreeBSD, Linux, and Windows to cover some of the popular OSes that these drives will be deployed with.

Intel Optane 905P 380GB ZFS ZIL/ SLOG Test

Since the Intel Optane 905P is rated at almost 7PBW, it has enough endurance for most arrays to serve as a ZIL or SLOG write logging device for ZFS. Again, see Exploring the Best ZFS ZIL SLOG SSD with Intel Optane and NAND for more on this. The key here is that the ZIL / SLOG device is a well-known write workload. So much so that exotic solutions like the ZeusRAM were developed years ago to do what Optane can do today. While many may assume it is a constant write, there is a slight difference: the device writes for a period of time and then flushes, rather than writing constantly. For some perspective, the ZeusRAM drives were 8GB in capacity for large ZFS arrays.
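To put the "almost 7PBW" rating into perspective, here is a rough back-of-the-envelope sketch. The 5-year service window is our assumption for illustration, not a figure from this review, and 7.0PB is used as an upper bound on the rating. The function names are hypothetical.

```python
# Rough endurance arithmetic for a SLOG device.
# Assumptions (not from the review): a 5-year service window, decimal units,
# and 7.0 PB as an upper bound for the "almost 7PBW" rating.


def drive_writes_per_day(endurance_pb: float, capacity_gb: float, years: float) -> float:
    """Full-capacity writes per day that would use up the rated endurance in `years`."""
    total_bytes = endurance_pb * 1e15
    capacity_bytes = capacity_gb * 1e9
    days = years * 365
    return total_bytes / capacity_bytes / days


def sustained_write_mb_s(endurance_pb: float, years: float) -> float:
    """Average write rate in MB/s that would use up the rated endurance in `years`."""
    seconds = years * 365 * 24 * 3600
    return endurance_pb * 1e15 / seconds / 1e6


if __name__ == "__main__":
    print(f"{drive_writes_per_day(7.0, 380, 5):.1f} drive writes per day")
    print(f"{sustained_write_mb_s(7.0, 5):.1f} MB/s sustained, around the clock")
```

Under those assumptions, the rating works out to roughly ten full drive writes per day. Since a ZIL / SLOG device writes in bursts rather than constantly, most arrays will sit well below that.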

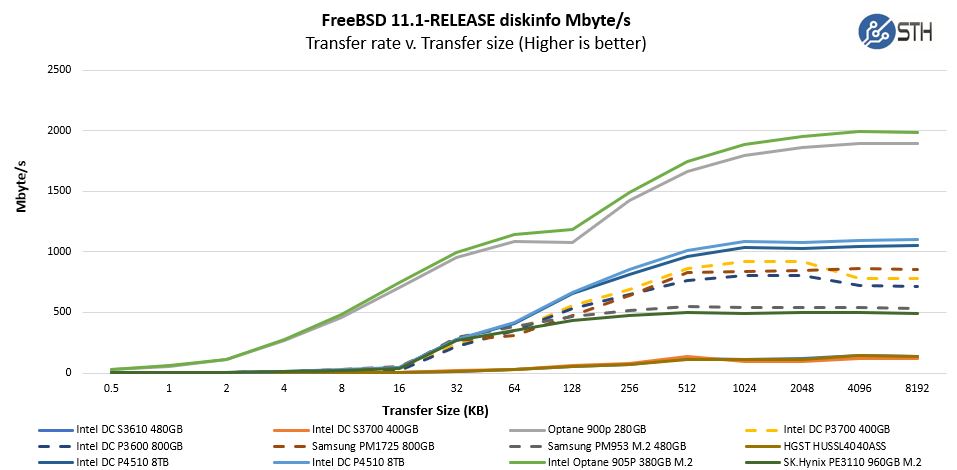

First, here is a view using various SSDs with PLP that you may consider for a ZIL / SLOG device with ZFS. We are going to express this in MB/s:

There are two main comparisons you should look at. The first is the Intel Optane 905P 380GB M.2 versus the two other M.2 22110 drives on this chart: the SK.Hynix PE3110 960GB and the Samsung PM953 M.2 480GB SSD. The second is versus the Intel Optane 900p 280GB drive, which was the first consumer Optane drive we selected. We have a version of these charts with the P4800X AIC, and you will see very similar trends.

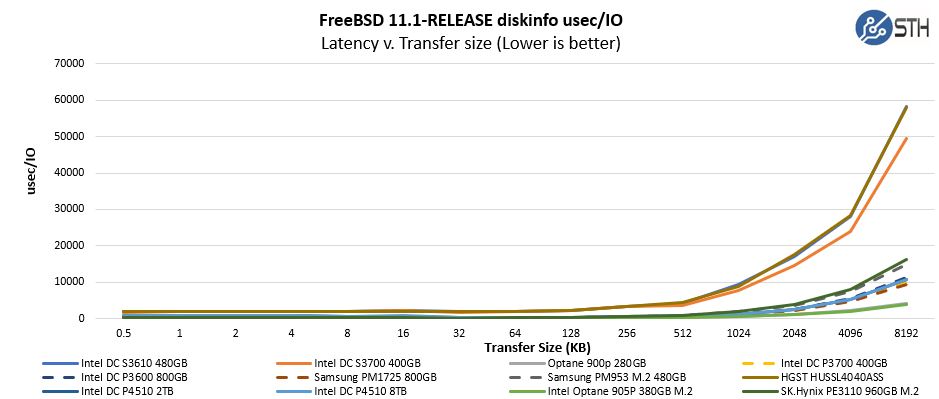

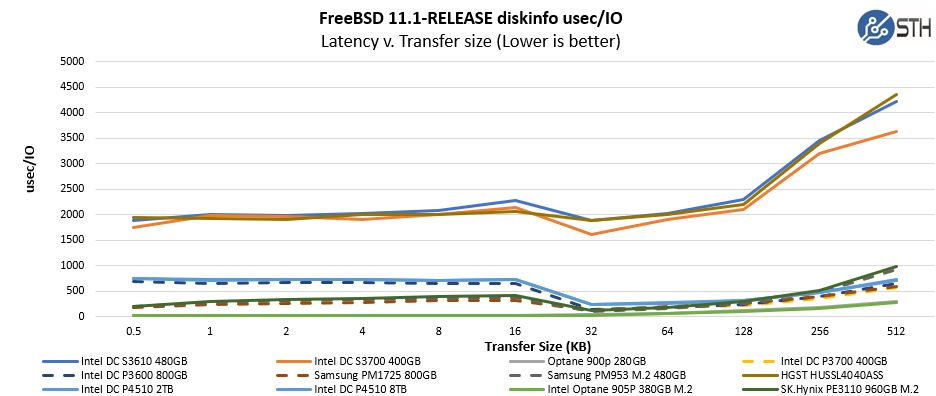

Taking a look at the latency side, the Intel Optane results are very hard to see at this scale.

We can zoom in on the chart’s smaller transfer sizes and get a better picture of what is going on.

The overall stratification here shows why you want Intel Optane versus SATA SSDs, SAS SSDs, and even NAND NVMe SSDs. We are going to caveat this a bit. If you have a NAS with 1GbE or 10GbE and, say, under 60TB that is more of a write once, read infrequently system, the Intel Optane 800P 118GB has enough endurance and performance to get you most of the benefits of Optane for 40% of the price. If you are thinking about deploying a 25GbE ZFS server and have an M.2 110mm slot to use for a SLOG device, this is what you want.
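The network speed matters because it caps how fast sync writes can arrive at the SLOG device. As a minimal sketch, this converts the link speeds mentioned above into raw line rates. It ignores Ethernet, TCP/IP, and NFS/iSCSI overhead, so real figures will be lower, and the function name is ours.

```python
# Raw line rate for the link speeds discussed above.
# Assumption: no protocol overhead at all, so treat these as upper bounds.


def line_rate_mb_s(gbit_per_s: float) -> float:
    """Raw line rate in MB/s (decimal units) for a link of `gbit_per_s` Gbit/s."""
    return gbit_per_s * 1e9 / 8 / 1e6


if __name__ == "__main__":
    for link in (1, 10, 25):
        print(f"{link}GbE: up to {line_rate_mb_s(link):.0f} MB/s of incoming sync writes")
```

A 25GbE link can deliver 2.5 times the data of a 10GbE link, which is why the faster log device starts to pay off there.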

Intel Optane 905P Sub 1ms Latency

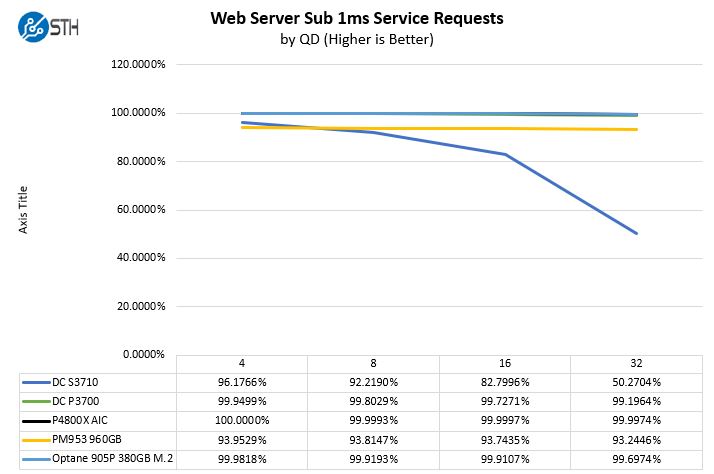

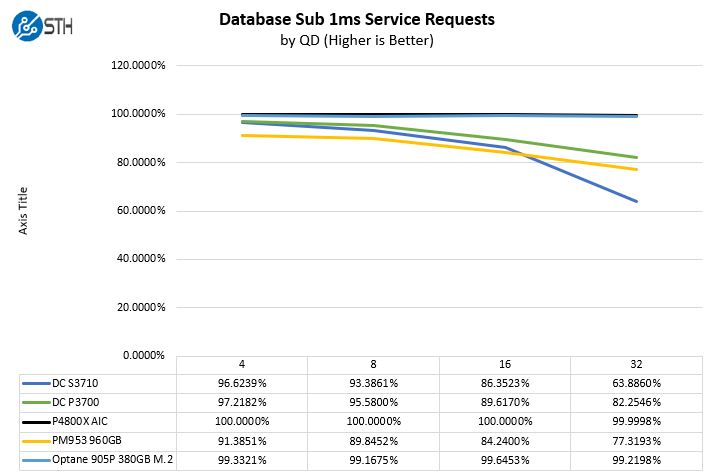

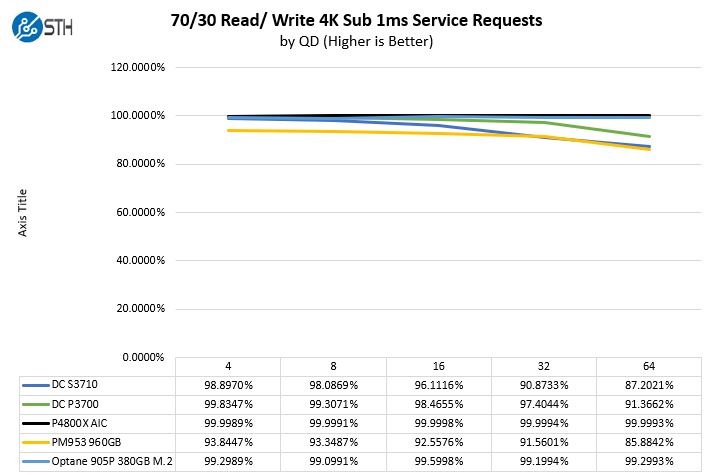

Intel Optane is too expensive to use for general purpose storage. For STH’s hosting cluster, all databases run on Optane, but our image and bulk hosting runs on NAND SSD arrays. There is a fairly easy way to visualize this using common iometer workloads. First, we are going to use our web server profile and look specifically at the sub 1ms service requests:

Here you can see a few trends. First, the Intel drives generally perform extremely well. Second, there is a delta between the Intel Optane DC P4800X and the Intel Optane 905P M.2. If you need the best performance and have the budget, you are better off with the Optane DC P4800X. The quick note here is that web server workloads are primarily driven by reads, in which case modern web applications will cache in system RAM. For those applications, there is little need for an Optane SSD.

This chart is why we use Optane for STH databases. You can see the performance figures are simply great for the Optane SSDs and translate to faster page views. Perhaps the bigger implication comes from looking at Optane versus the other M.2 110mm SSDs, which previously would have been your only option outside of consumer drives without any PLP circuits.

Again, the Intel Optane 905P performs very well, staying above two nines throughout the range, while the Samsung drives we previously used for these 110mm applications never achieve two nines of sub 1ms service requests. That is a big deal.
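For readers less familiar with the "nines" shorthand, two nines means at least 99% of requests complete in under 1ms. Here is a small sketch of how that check could be computed from a list of per-request latencies. The sample values are made up for illustration and are not data from our charts.

```python
# Checking a latency sample against an "N nines under 1ms" target.
# The sample data below is hypothetical, not measured results from this review.


def count_under(latencies_ms, threshold_ms=1.0):
    """Number of requests that completed in strictly less than `threshold_ms`."""
    return sum(1 for value in latencies_ms if value < threshold_ms)


def meets_nines(under: int, total: int, nines: int = 2) -> bool:
    """True if under/total >= 1 - 10**-nines, using integer math to avoid rounding."""
    if total <= 0:
        raise ValueError("total must be positive")
    scale = 10 ** nines
    return under * scale >= (scale - 1) * total


if __name__ == "__main__":
    sample = [0.2] * 995 + [3.0] * 5  # 99.5% of requests under 1ms
    under = count_under(sample)
    print("two nines:", meets_nines(under, len(sample), nines=2))
    print("three nines:", meets_nines(under, len(sample), nines=3))
```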

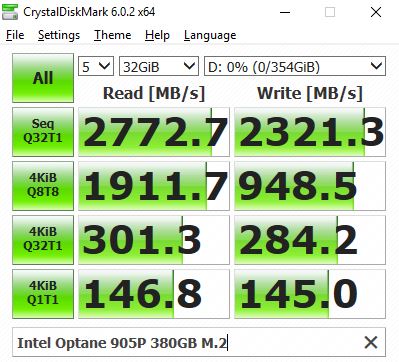

CrystalDiskMark 6 and Windows

Just to show this is not a one-trick pony for servers, we also fired up a Windows 10 test bed and ran CrystalDiskMark 6.0.2 as a simple tool to show the more traditional figures. Here are the results:

Perhaps the biggest theme we are seeing is in the sequential numbers. The performance is slightly ahead of rated specs which seems to be a pattern with Intel Optane 900P and 905P SSDs in general.

For the vast majority of desktop workstation workloads, the Intel Optane 905P is overkill. It is hard to recommend a $500 380GB SSD in that space when there are 1TB and 2TB consumer NAND drives that will work perfectly well without the need for heavy sync writes.

At the same time, there are people who overbuild workstations to a large degree just because they can. Perhaps in those situations where one wants an Optane M.2 380GB drive instead of an Intel Optane 905P 1.5TB AIC model, it makes sense.

For server write workloads such as sync writes for databases and logging devices, Intel Optane is great.
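If you want a quick feel for sync write behavior on your own hardware, a minimal sketch looks like the following. This is not the tooling used for the charts in this review, and the function name, block size, and write count are our own illustrative choices. Point the path at a filesystem on the drive you want to test, since the default temporary directory may be RAM-backed.

```python
# Minimal write + fsync latency sketch. Not the benchmark used in this review.
import os
import statistics
import tempfile
import time


def sync_write_latencies(path, block_size=4096, count=200):
    """Append `count` blocks to `path`, calling fsync after each; return latencies in ms."""
    buf = os.urandom(block_size)
    latencies = []
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    try:
        for _ in range(count):
            start = time.perf_counter()
            os.write(fd, buf)
            os.fsync(fd)  # ask the OS to push the data to stable storage
            latencies.append((time.perf_counter() - start) * 1e3)
    finally:
        os.close(fd)
    return latencies


if __name__ == "__main__":
    # Replace the temporary directory with a path on the device under test.
    with tempfile.TemporaryDirectory() as workdir:
        lat = sorted(sync_write_latencies(os.path.join(workdir, "sync_test.bin")))
    p99 = lat[min(len(lat) - 1, int(0.99 * len(lat)))]
    print(f"median {statistics.median(lat):.3f} ms, p99 {p99:.3f} ms")
```

Drives without PLP either take much longer per fsync or acknowledge writes before they are durable, which is why this pattern separates them from Optane more than headline sequential numbers do.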

Final Words

If you have a workstation or a server with U.2 drive bays or PCIe AIC card slots, just get the larger form factors. For workstation and mobile users, there are better consumer drives out there that have more capacity. 380GB is still fairly small for modern systems. Frankly, there are a lot of use cases where the Intel Optane 905P 380GB M.2 drive is not the best fit, and there are other products in the Intel portfolio that will better serve a user’s needs. In tight spaces with limited PCIe slots, such as the 2U 4-node Gigabyte H261-Z60 server we recently reviewed, the Intel Optane 905P offers something much better than we had just a few weeks ago by making it plausible to use Optane in those systems.

With that out of the way, we are going to buy a ton of these drives for STH, and we need more than our budgets will allow. At around $500 for 380GB these drives are over $1.31/GB. Once capacity is reserved for snapshots and other modern storage features, that does not leave a ton of capacity for data, which drives up the price of storage considerably. Meanwhile, NAND SSDs are larger and are seeing rapidly falling prices as QLC is introduced. For databases and write logging devices, the Intel Optane 905P 380GB is the drive to get, hands-down. As a result, we have a number of servers at STH that will have these drives onboard in the near future.
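The cost math is simple enough to sketch. The 20% reserve used below is a hypothetical figure to illustrate the effect of setting capacity aside for snapshots and similar features, not a number from our deployment.

```python
# Cost per usable GB. The 20% reserve is an illustrative assumption only.


def cost_per_gb(price_usd: float, capacity_gb: float, reserved_fraction: float = 0.0) -> float:
    """Price per GB of usable capacity after reserving `reserved_fraction` of the drive."""
    if not 0.0 <= reserved_fraction < 1.0:
        raise ValueError("reserved_fraction must be in [0, 1)")
    usable_gb = capacity_gb * (1.0 - reserved_fraction)
    return price_usd / usable_gb


if __name__ == "__main__":
    print(f"raw:         ${cost_per_gb(500, 380):.2f}/GB")
    print(f"20% reserve: ${cost_per_gb(500, 380, 0.20):.2f}/GB")
```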

That’s a lot to digest but some great data.

So if you’re constrained to 110mm

And 380GB is enough capacity

And you need high endurance or fast response times

And you can swing $500

And the power and cooling are OK for your machine

Then it’s a good drive.

I get it. It’s a niche product. I still just ordered 2

Hot drive. This review is spot on. It’s a $#$$@! to find a fast 22110 M.2

380GB is enough capacity

And you can swing $500

LOL,

better if they sell it for $400 or less.

Does this give the same kind of uber-high performance when used as a drive for MS-SQL log files? I could also see it potentially being awesome as a MS-SQL tempdb drive

4 of those in a $60 ASUS Hyper M.2 x16 x Interface Card PCI Express 3.0 in raid 10 and you have a great fast ZIL device.

I assume these drives perform just as well if they are in AMD servers? I’ve not seen much information about doing this type of setup.

Troy – that would be a great use case

Misha Engel – Perhaps. There is actually a lot of overhead pushing that much NVMe small I/O performance quickly plus RAID 10. Usually, 1 or 2 (mirror) is good enough for most ZIL / SLOG configurations

Jared Geiger – We tested in Threadripper and EPYC. So long as you are on the same NUMA node, you get the performance you would expect. NUMA node to NUMA node, you see a bit of extra latency.

That’s sexy AF

@Patrick Kennedy: 40Gbs ethernet needs a lot of speed and we need some buffer for the 1+ PB of spinning drives, lucky for us FreeBSD 12.0 is out now with good support for EPYC so we can start to build our multiple ZFS RaidZ3+1hotspare in a 16+3+1 config with EPYC7551P. We need it for large seq. R/W. (video editing and storage).

@Misha Enge I love it when I see ppl save $$ thru AMD CPUs and give it back 2 Intel thru a memory product.

@Vlad: As long as it works the way I want, I don’t care where the hardware/software comes from.

By the way, roadmaps show a 760GB M.2 905P coming for those that need greater capacities.

How does its performance compare with the 905P 480GB U.2 drive?

Igor – pretty close to the point that the form factor is going to be the reason you go M.2. Also, in the server world, a ~10W part as big as an M.2 drive is trivial to cool. In workstations, you will likely want a heatsink as there is a lot less surface area on the M.2 drive than the U.2 drive.

For things like FS journal / ZIL or database logs (WAL, doublewrite / undo log etc.), a device like this might be even more interesting: https://www.microsemi.com/product-directory/storage-boards/3690-flashtec-nvram-drives (sadly no M.2), or using NVDIMM, but I haven’t seen either in the wild, they seem to be hard to procure.

I think a separate fsync() benchmark would be very interesting, I’d wager the NAND based devices perform a lot worse here, unless they have capacitor protection for the DRAM or simply lie about durable writes:

https://www.percona.com/blog/2018/07/18/why-consumer-ssd-reviews-are-useless-for-database-performance-use-case/

Especially for small databases write+fsync performance may be all you (need to) care about.

Optane is simply superior regarding performance

What I currently miss are the typical SAS advantages “hot removable/replaceable”, “Dualpath” for HA setups and scaleable to hundreds of disks. Sadly there is no 12G Dualpath SAS disk based on Optane technology. This would be the real storage break-through.

The U.2 drives are better in many ways than the M.2 form factor. NVMeoF applications will take over. Once you can put NVMe on 25GbE the ability to provide HA clustered storage goes up tremendously. The cloud providers are dictating the next directions in storage.

I put one of these in an ASUS Gene XI motherboard in the DIMM.2 riser card paired with a 970 Pro 1TB for sequential read/writes. I’ve tested VM startup times, database backup times, game loading, unraring and copying the Windows folder. The 970 Pro only won in the unraring test; all other tests were won by the 905P. Those tests plus the fact that endurance is crazy mean I’m very happy with the purchase.

Patrick Great Review Congrats !!!

I currently use an Optane SSD 900P 280GB and 4x 2TB NVMe storage on an ASRock ULTRA QUAD M.2. I would like to upgrade my SSD 900P to 480GB but just saw this, and the SSD 905P seems faster. I can’t decide which one to go with, the 905P 380GB M.2 110 or the 905P 480GB U.2, which is a bit cheaper and has 100GB more. Are there any differences in performance between them?

Thanks a lot, all the best, Sergio!

Geez, whatever happened to the old days when we could buy an AST Ramdisk expansion board, stuff it with DRAM and have a REAL Ramdisk!? The things I’d do to get a PCIe 4.0 version of that old board. Even a PCIe 3.0 would be acceptable. Any such animals still around?