Recently I had the opportunity to chat with Bill Pearson, VP of Intel’s IoT group, about some of the company’s work with OpenVINO and its DevCloud. Bill has worked on developer solutions at Intel for quite some time and is now focused on the company’s IoT push. OpenVINO is Intel’s toolkit for vision-related neural networks at the edge. Effectively, Intel recognized that there would be an explosion of cameras at the edge and anticipated the need for an easy way to process what those cameras are seeing. OpenVINO provides the toolkit to make that happen. We often focus on the data center and edge server aspects of video analytics, but with products such as the NVIDIA Jetson series and others, it is clear that the vision is training in the data center and inferencing at the edge. The Intel DevCloud is how Intel is enabling developers to try their OpenVINO (and other) code on Intel platforms.

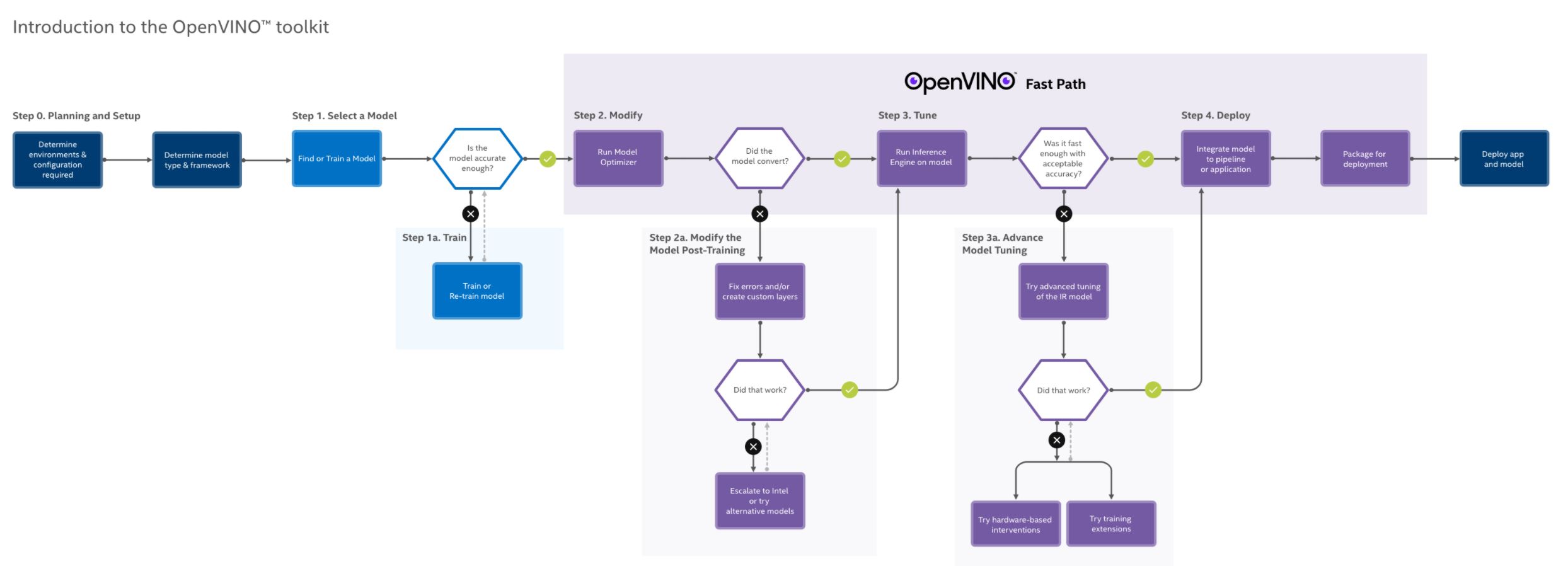

Intel OpenVINO 60 Second Overview

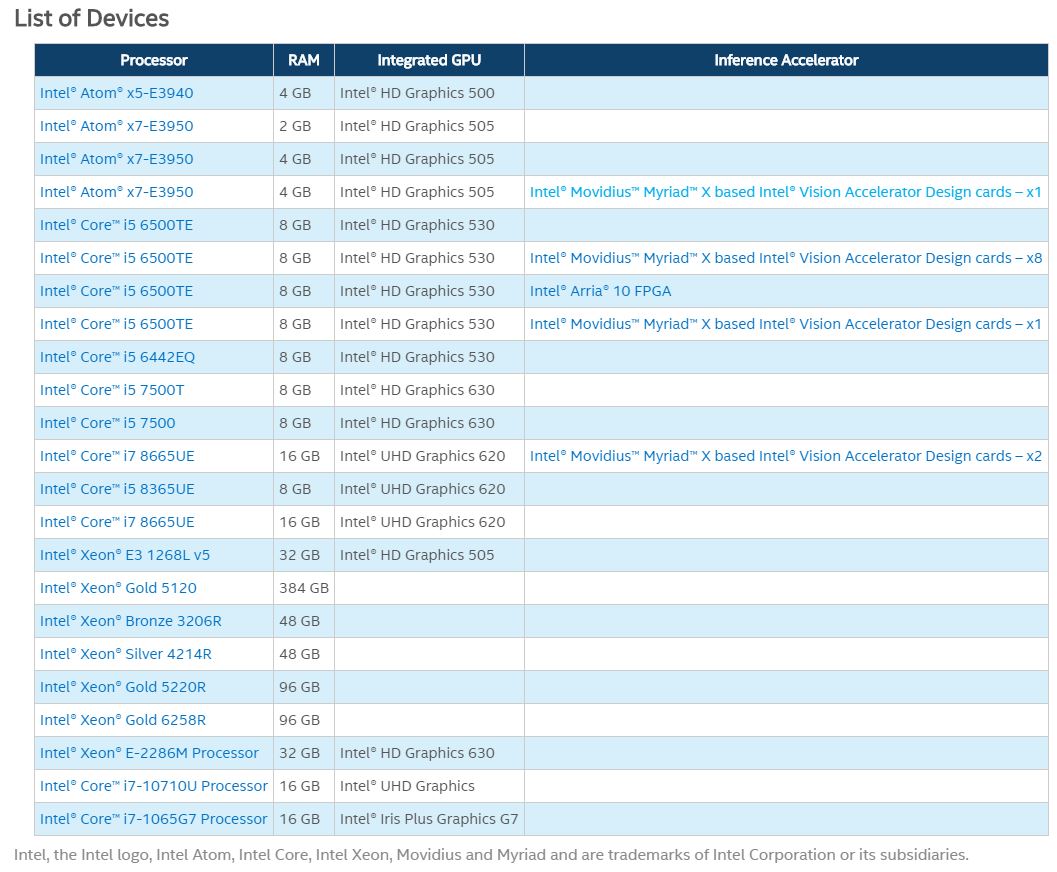

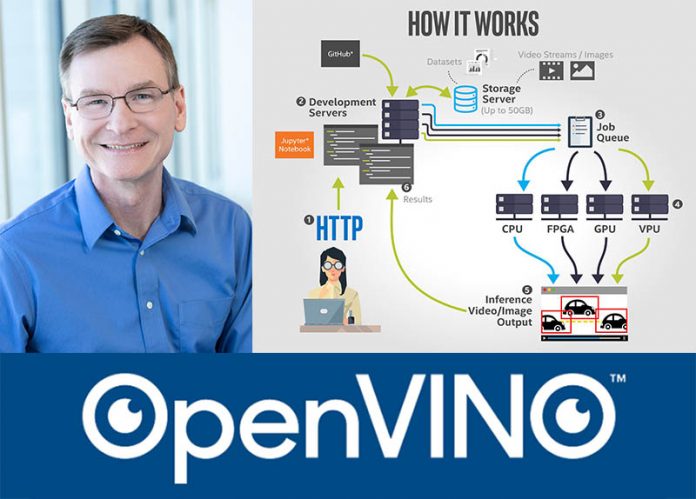

Since the OpenVINO toolkit has been around for some time, I wanted to focus on how it fits into Intel’s broader portfolio. Bill told me that OpenVINO provides API-based programming across a variety of silicon types. The idea is that one can write code once, then deploy that code on different silicon without making code changes. OpenVINO now works not just on some of the edge VPUs but across a broad array of Intel silicon, including CPUs such as 11th Gen Core, the Atom x6000E embedded series, and Xeon. It can also be used with FPGAs and Movidius VPUs. Intel has a list of supported hardware.

One of the biggest questions in AI is always the ecosystem. The market understands NVIDIA CUDA as being a major player, but I wanted to ask about the momentum for Intel. OpenVINO is an open-source effort (led by Intel), but the purpose is clearly to sell more Intel silicon. Bill told me that Intel is seeing five times the developer momentum in 2020, with revenue tracked in the hundreds of millions of dollars and growing 3x year over year. The message was that the solution is clearly growing.

Intel DevCloud for the Edge to Try OpenVINO

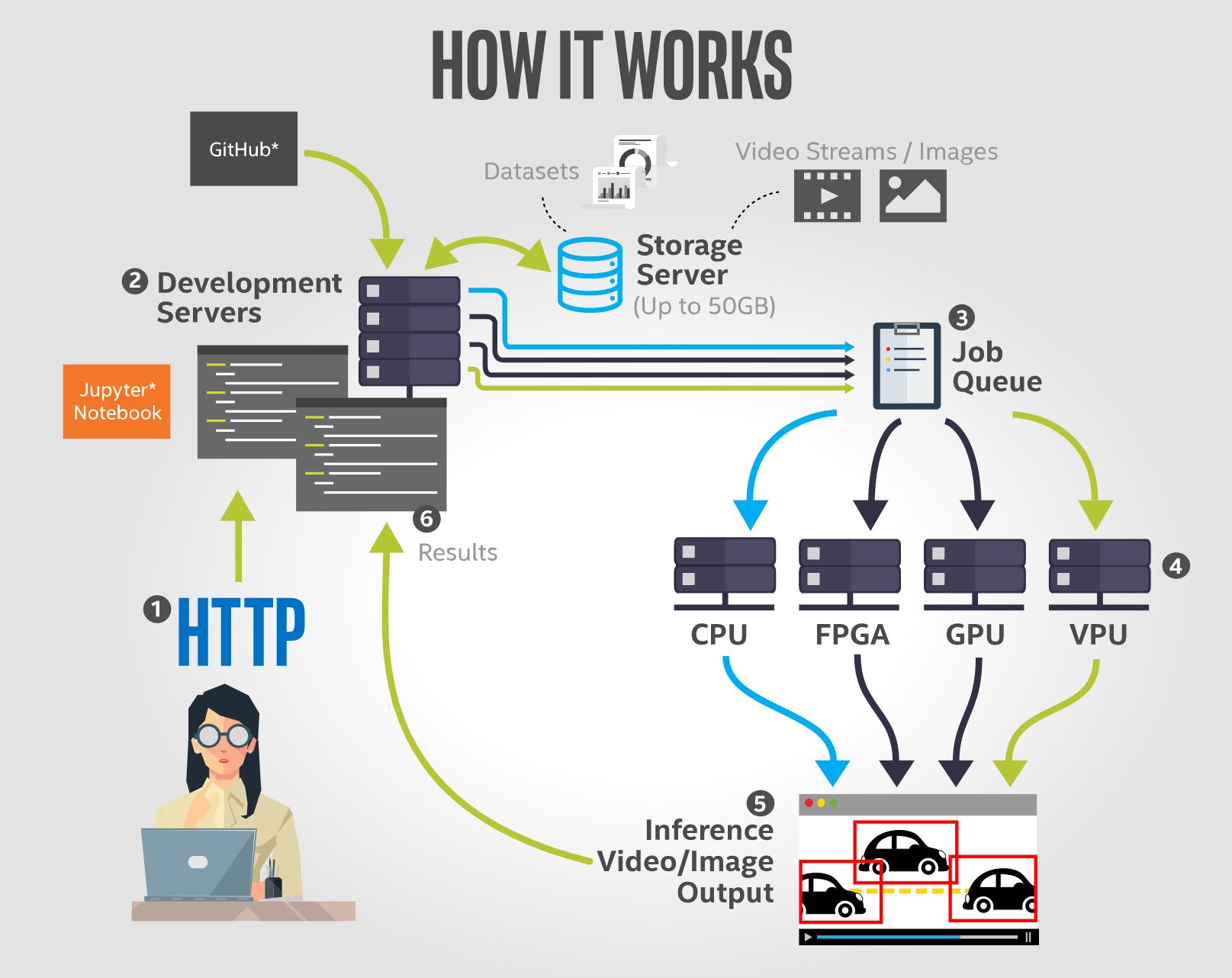

Intel is far more than x86 compute today. The company is on a multi-year journey to diversify its silicon offerings into different types of processors with different power and use profiles. While this week we looked at the Intel FPGA SmartNIC solutions spanning 75W to 225W, Intel also has solutions that are designed to run at well under 1/10th of that wattage. We see the same range even with traditional CPUs, from Xeon to Atom. Since there are now so many options in terms of types of silicon and power consumption, just finding the right option can be difficult.

Enter Intel DevCloud. The company is adding its devices to its DevCloud so that developers can try their code on a number of different pieces of Intel silicon quickly and for free.

To me, this makes a lot of sense. If you have ever ordered a Raspberry Pi or other development platform and forgotten a power adapter or some other part, you know the feeling of having a new piece of hardware sitting idle for a day or two while you wait for the next Amazon delivery to bring the part you need. If you are targeting a handful of platforms, this can be an annoyance that slows the process. If you need to test across tens or hundreds of devices, it is bound to happen and slow the process.

The Intel DevCloud is designed to address that need. Intel is a business that wants to sell its silicon, but it needs to provide a way to match silicon to an application as the types and form factors of its silicon proliferate. Bill told me that there are now over 400 different hardware configurations to test in DevCloud and that it is an area Intel is heavily investing in. When Bill described this, he framed it as a great tool for developers. I think of it more as Intel’s virtual showroom.

For developers looking at questions such as which device can process video at the best price per frame or the lowest power per frame, DevCloud makes a lot of sense. One area where, from our experience at STH, companies and startups are keenly interested is the security of their source code. Putting your code on a third-party cloud can be risky if that cloud provider decides to assimilate it (see how AWS “utilizes” open source software). Further, one has to worry that the next developer may see the code you were trying to run on the DevCloud hardware platforms. Bill told me that Intel is using security best-known methods throughout the infrastructure, including internal and external reviews. Bill assured me that Intel is not trying to take everyone’s models and that developers work on private encrypted storage.
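The price-per-frame and power-per-frame comparison mentioned above comes down to simple arithmetic once throughput, power draw, and price are known for each device. Below is a minimal Python sketch of that comparison. The device names and all numbers are hypothetical placeholders, not measurements from DevCloud, and "price per frame" is approximated here as purchase price per frame-per-second of throughput, which is an assumption on our part.

```python
from dataclasses import dataclass


@dataclass
class DeviceResult:
    """One benchmark run on one device (all values here are hypothetical)."""
    name: str         # placeholder device label, not a real product
    fps: float        # measured inference throughput, frames per second
    watts: float      # average power draw during the run
    price_usd: float  # hardware purchase price

    @property
    def joules_per_frame(self) -> float:
        # Watts are joules per second, so W / (frames/s) = joules per frame.
        return self.watts / self.fps

    @property
    def usd_per_fps(self) -> float:
        # Assumed cost metric: purchase price per unit of throughput.
        return self.price_usd / self.fps


# Hypothetical results, for illustration only.
results = [
    DeviceResult("device-a", fps=120.0, watts=60.0, price_usd=600.0),
    DeviceResult("device-b", fps=30.0, watts=6.0, price_usd=90.0),
    DeviceResult("device-c", fps=10.0, watts=1.5, price_usd=75.0),
]

most_efficient = min(results, key=lambda r: r.joules_per_frame)
best_value = min(results, key=lambda r: r.usd_per_fps)

for r in results:
    print(f"{r.name}: {r.joules_per_frame:.2f} J/frame, ${r.usd_per_fps:.2f} per fps")
print("Lowest energy per frame:", most_efficient.name)
print("Lowest price per fps:", best_value.name)
```

With these made-up numbers, the device with the lowest energy per frame is not the one with the lowest price per unit of throughput, which is the kind of trade-off that testing the same workload across many hardware configurations would surface.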

Final Words

We know we have a lot of hardware folks on STH. We are focused on the edge DevCloud and OpenVINO here, but Intel also has a data center DevCloud and an FPGA solution as well. The Intel DevCloud solutions are going to be deployed more widely, especially as the company pushes oneAPI and other tools as a way to manage code running on different architectures and packaging in the future.

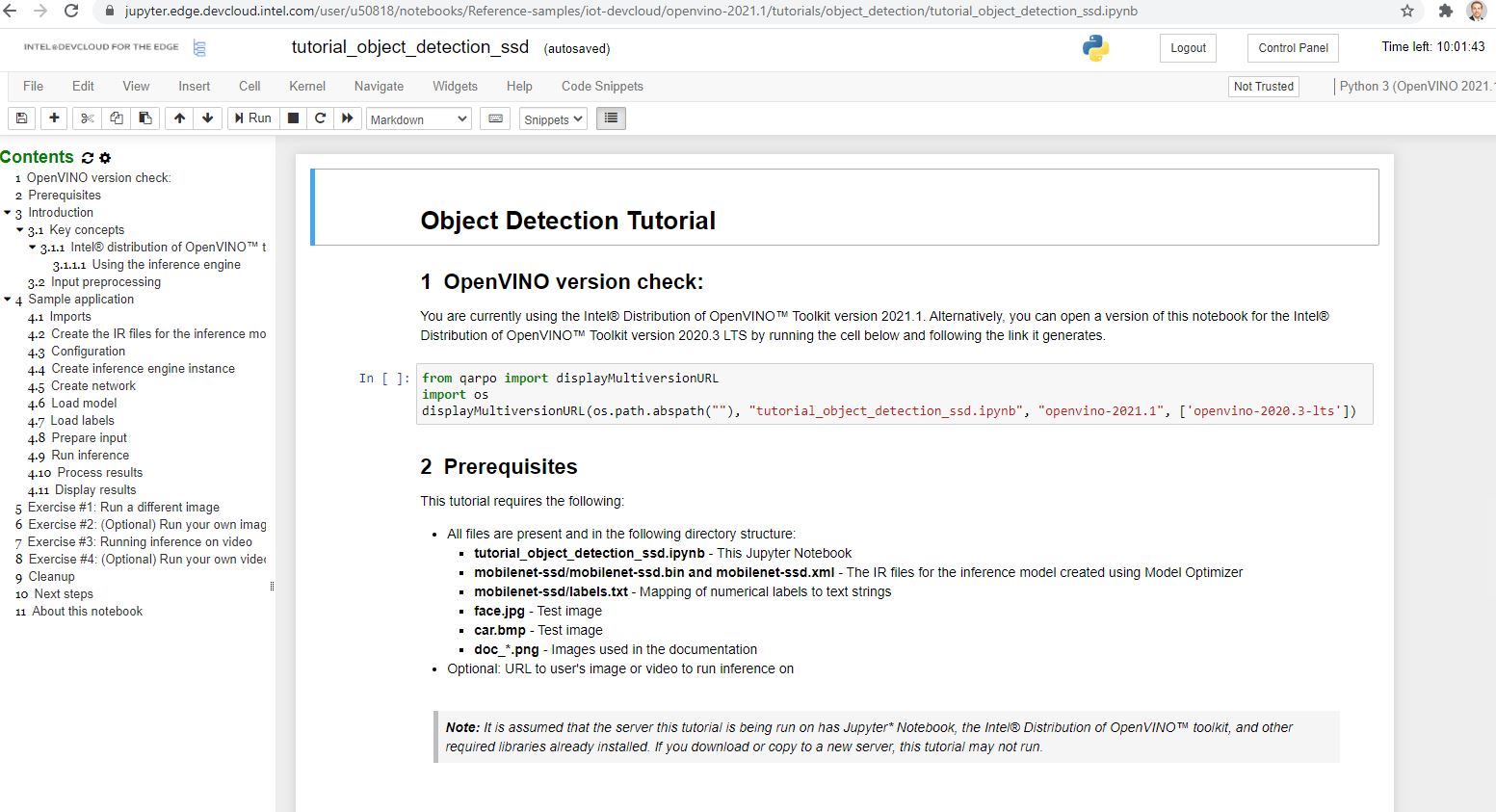

On a personal note, I wanted to say thank you to Bill for taking a few minutes out of his busy schedule to chat about the solution. As a quick note, Bill told me signing up and starting with DevCloud would be easy. It took me less than 10 minutes to sign up and get started with Jupyter-based tutorials. It was certainly faster than waiting for Amazon Prime delivery.

With this originally being released in 2019, one wonders why it hasn’t gained significant popularity. Then when you look into it, you see it’s locked to very specific hardware combos and isn’t doing a significantly better, cheaper or easier job than the alternatives. Oh well…

There was a webinar today presenting a new release of the OpenVINO Model Server, which has been rewritten in C++. During the Q&A they also stated that the backend takes advantage of oneDNN to enable execution on multiple architectures … CPU, GPU, VPU, …

https://github.com/openvinotoolkit/model_server/releases