Intel NUC9VXQNX Topology

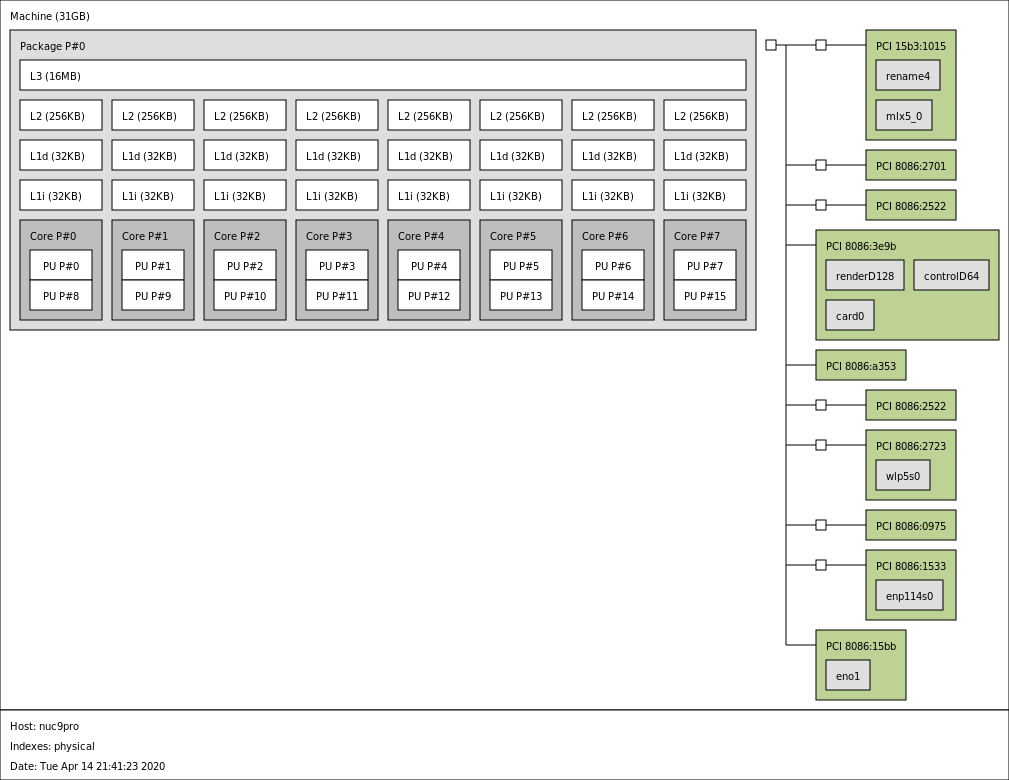

We decided to put a device in every slot that the system had so we could get a topology map. Here is what that looks like:

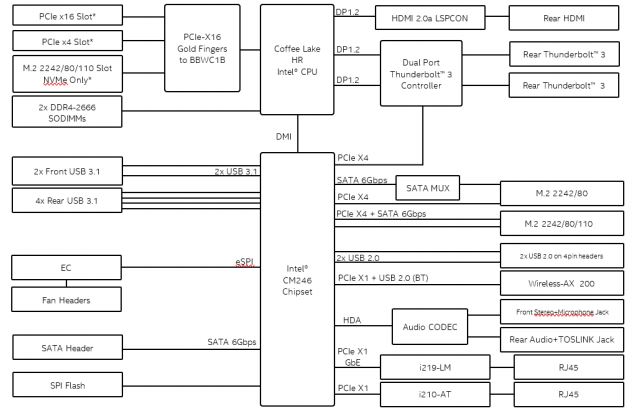

If we look at the system’s block diagram, we can see something very important.

The PCIe x16 edge connector of the NUC provides the PCIe lanes for the M.2 NVMe SSD slot, the PCIe x16 slot, and the PCIe x4 slot. If we want to use all three at once, the x16 link is bifurcated as 1×8 + 2×4, so we cannot run every slot at full speed at the same time. We also tried bifurcating the x16 slot into 4×4 for more NVMe storage. That did not work, but it may be a BIOS update away from working.
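To make the lane arithmetic concrete, here is a minimal sketch that checks whether a given slot configuration fits inside the single x16 link. The slot names and the `fits` helper are ours for illustration only; this is not how the BIOS decides bifurcation, just the budget math.

```python
# Lane budget sketch for the shared x16 edge connector.
# Slot names and helper functions are illustrative assumptions.
UPSTREAM_LANES = 16  # the single x16 link feeding all three slots


def lanes_used(split):
    """Total lanes requested by a slot -> lane-count mapping."""
    return sum(split.values())


def fits(split, upstream=UPSTREAM_LANES):
    """True if the requested widths fit within the upstream link."""
    return lanes_used(split) <= upstream


# The 1x8 + 2x4 split used when every slot is populated.
all_slots = {"x16 slot": 8, "x4 slot": 4, "M.2 slot": 4}

# What full width on every slot would need: 24 lanes, more than the link has.
full_width = {"x16 slot": 16, "x4 slot": 4, "M.2 slot": 4}
```

With these numbers, `fits(all_slots)` holds because the split uses exactly 16 lanes, while `fits(full_width)` does not, which is why the x16 slot drops to x8 once the other two slots are in use.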

Intel NUC9VXQNX Management

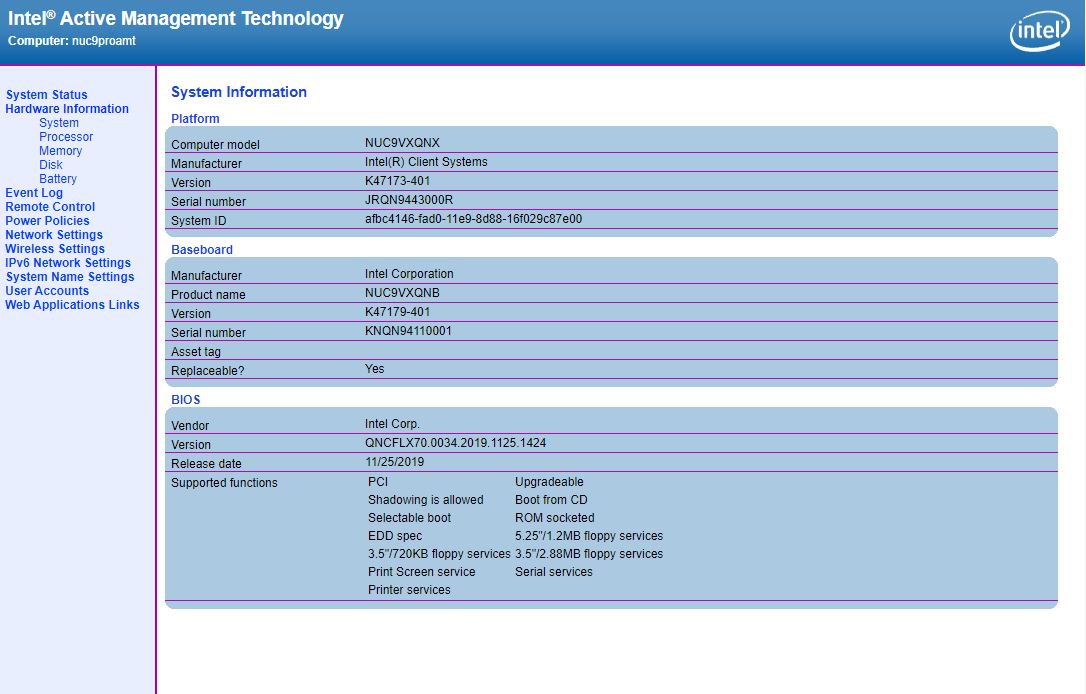

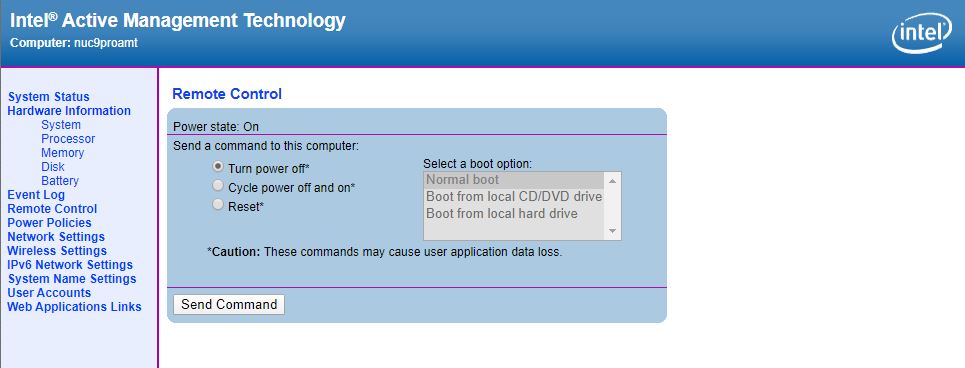

The Intel NUC9VXQNX kit uses Intel AMT, which allows for out-of-band management. For those who manage corporate desktops and notebooks, vPro and AMT will be very familiar. Intel has offered this as its client OOB management solution for so long that you can read some of my pieces on it from years ago, when I wrote for Tom’s Hardware: Intel vPro Technology: Patrick on Tom’s Hardware and Using Intel vPro to Remotely Power Cycle a Client PC.

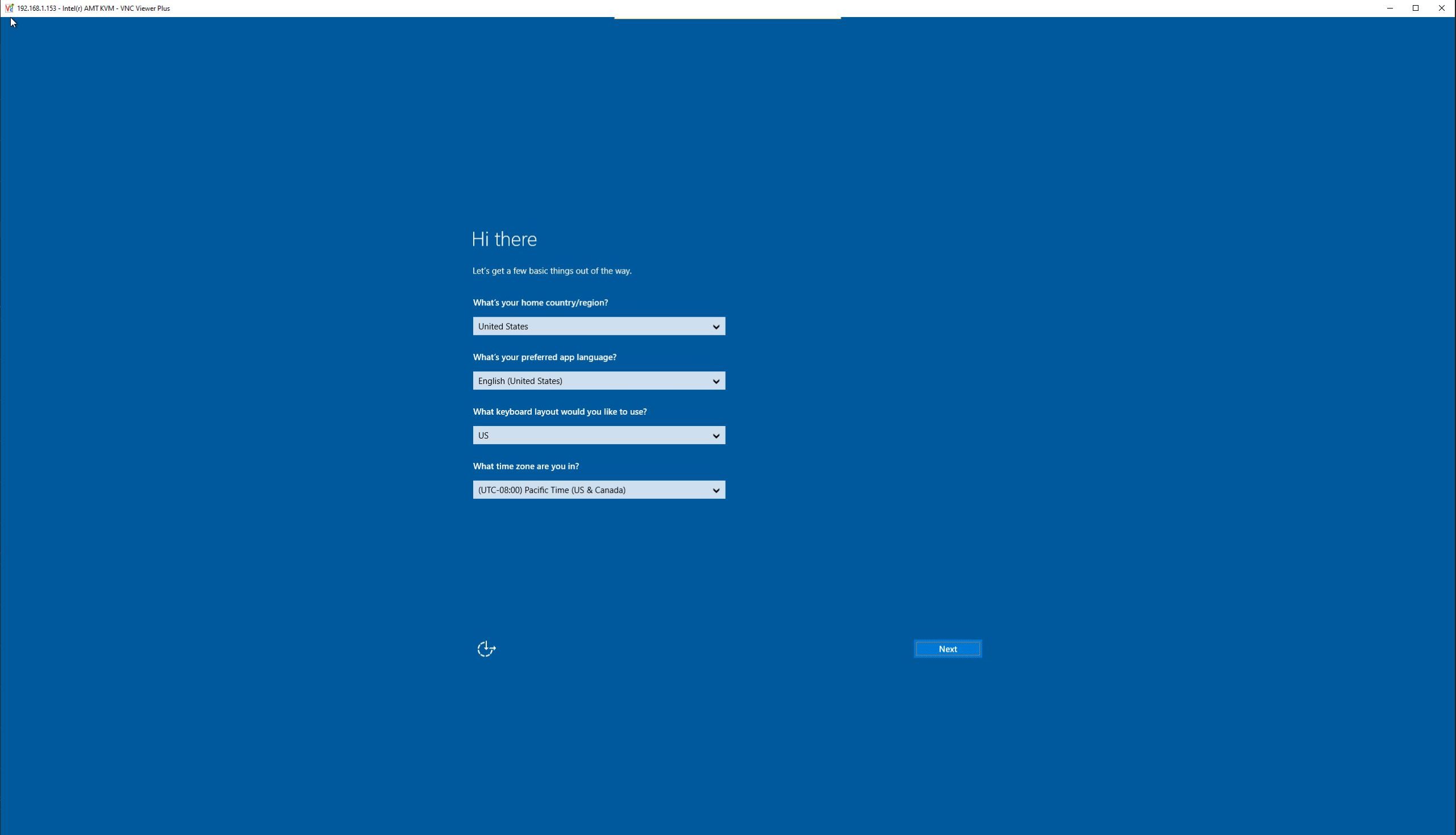

For those who are looking at the NUC9VXQNX to serve as a portable POC tool for enterprise software, which was common with older NUCs, this is not server IPMI/Redfish management. It is something different, although it is still an out-of-band management solution with integrations to desktop management tools. We could install Windows 10 Pro using the remote media and iKVM:

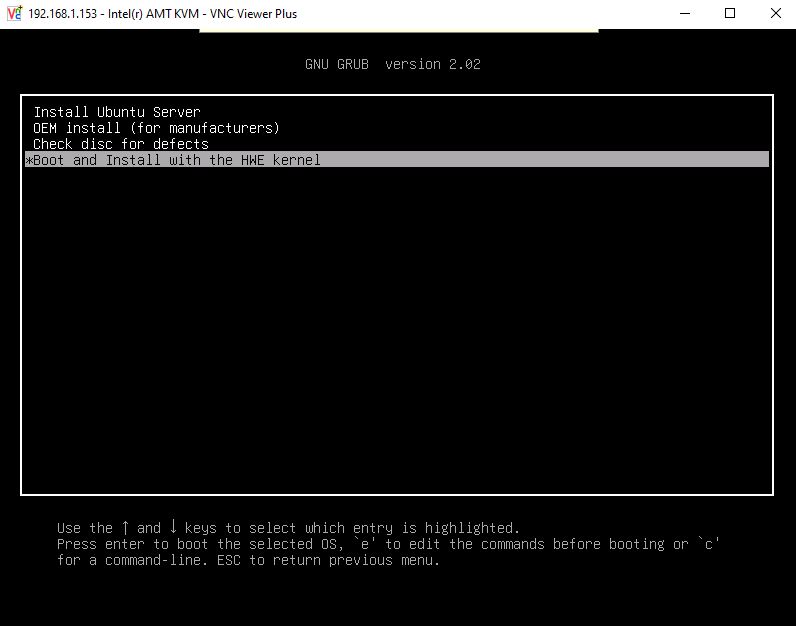

We could also install Ubuntu 18.04 LTS by using the remote power cycle and remote media features.

Using the iKVM requires a $110+ Real VNC Viewer Plus license, and one also has to enable the functionality on the system first. If you are setting up a unit or a handful of units manually, this is significantly more burdensome than standard server BMCs. Still, if you just need infrequent management access, this is a workable solution and far better than no solution, even if it lacks the provisioning features of server management. Of course, if you have a client PC management solution, you can use that to set up the NUC via AMT, which will be much easier. These are meant to be deployed as client devices, so this makes a lot of sense.
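Since the functionality has to be enabled before it can be used, a quick reachability check can save some head-scratching. Below is a minimal sketch, assuming the usual Intel AMT TCP ports (16992 and 16993 for the web interface, 5900 when KVM on the standard VNC port is turned on). The hostname in the comment is hypothetical, and an open port only tells you something is listening, not that AMT is fully provisioned.

```python
import socket

# Commonly documented Intel AMT TCP ports (an assumption for this sketch;
# 5900 is only open if KVM on the standard VNC port has been enabled).
AMT_PORTS = {
    16992: "AMT web interface (HTTP)",
    16993: "AMT web interface (HTTPS)",
    5900: "KVM on the standard VNC port",
}


def port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name lookup failures.
        return False


def probe_amt(host, ports=AMT_PORTS, timeout=2.0):
    """Map each port number to whether it accepted a TCP connection."""
    return {port: port_open(host, port, timeout) for port in ports}


# Example with a hypothetical hostname:
#   for port, is_open in probe_amt("nuc9.example.lan").items():
#       print(port, AMT_PORTS[port], "open" if is_open else "closed")
```

This only uses plain TCP connects, so it does not need any AMT credentials and does not change anything on the NUC.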

We will note that having this low-level access in the system does present a security attack surface. Still, having any remote access by definition is a potential security threat. On the other hand, many of our readers will find this very useful.

Intel NUC 9 Pro Power Consumption and Noise

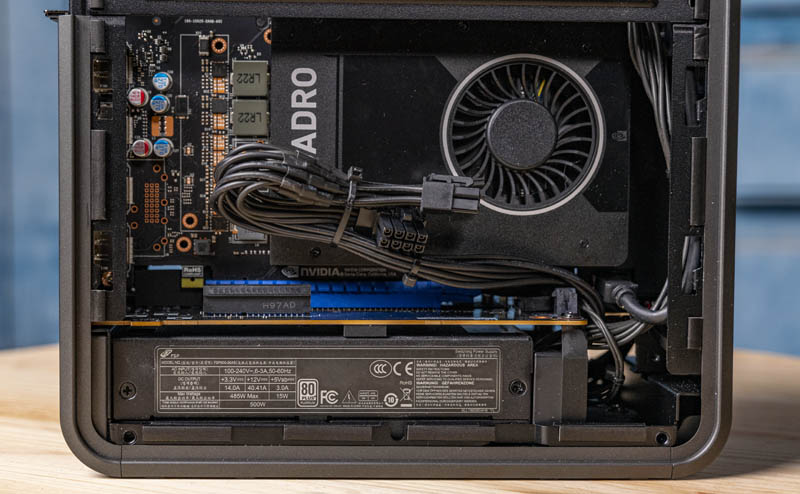

The Intel NUC 9 Pro NUC9VXQNX utilizes a 500W 80Plus Platinum PSU neatly tucked at the bottom of the system. This is important since it provides enough power to enable up to 225W GPUs.

In our test system, we found that the power consumption was relatively low, mostly due to the 45W TDP part. Without a GPU, even with NVMe SSDs and a high-speed NIC, you will not see power even close to 200W. We were getting sub-25W idle even with a 25GbE NIC and four NVMe SSDs, and we did not peak above 150W. Of course, adding a GPU changes this.
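As a rough sanity check on the PSU sizing, here is a small sketch that adds up the figures above. It assumes, pessimistically, that the system's peak without a GPU and the GPU's maximum draw happen at the same moment, and it ignores PSU efficiency and transient spikes, so treat the result as a ballpark rather than a measurement.

```python
# Back-of-the-envelope PSU headroom using the figures from this review.
PSU_WATTS = 500          # rated PSU output
GPU_MAX_WATTS = 225      # largest GPU the platform is specified to power
PEAK_NO_GPU_WATTS = 150  # upper bound we observed without a GPU


def headroom(psu_watts, *loads):
    """Watts left over after subtracting every load from the PSU rating."""
    return psu_watts - sum(loads)


# Worst case under the stated assumption: both loads peak together.
worst_case = headroom(PSU_WATTS, PEAK_NO_GPU_WATTS, GPU_MAX_WATTS)
```

Under those assumptions `worst_case` comes out to 125W of margin, which is consistent with the 500W unit being enough for a 225W GPU.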

In normal operation, the four fans (two chassis, one Xeon compute card, one PSU) are running but virtually silent. During our benchmark suite we could get them to spin up, but our sense is that under normal desktop and even light server duties this NUC platform will be effectively silent in most offices and homes.

Next, we are going to cover performance before getting to our final words.

beverly cove! you’re like the only person that’d pick up on the parallel

This reminds me a lot of the old (and new) PICMG style computers. Just in a consumer friendly form. That might be a fun comparison given how cheap they are now.

Patrick, did you try putting the compute board into a normal motherboard? Does it still work? That would be my use case, as a 2nd pc for streaming housed in the same case.

Please try it and let me know or post somewhere! I would really buy one if it did work.

Thanks

Great review Patrick! Very nice extra touch to relate it to the early prototypes as well!

Surprising to read that it is quiet. Isn’t that a 40mm fan in the PSU?

I’ve had 5 Mac minis over the years and I love the form factor, but ever since I wanted more GPU power I switched to larger stationary PCs, typically mATX. Something like this could tempt me to go back to small machines, but then the $1500+ price absolutely kills it. You could easily build a much more modular, powerful, quieter and cheaper mITX-based machine in a Dan A4 (which is even smaller than this) or Ncase M1.

The main advantage with this NUC would be having two PCIe slots, but unfortunately they are only PCIe 3.0, and sharing x16. With AMD Ryzen you can get a PCIe 4.0 x16 in mITX.

Alex, I wouldn’t be surprised if putting this compute board into a PCIe slot of another motherboard would fry something.

@Alex

I doubt it’s possible to plug the board in another motherboard, in fact I believe it likely to cause damage to one or both components (since in both cases the PCIe interface would provide power, unless you don’t plug the compute board into the PSU).

You could of course put the board in the same case as another motherboard provided you don’t connect it to a motherboard (for example a large case with only an ITX or mATX board installed). This sounds kinda intriguing actually: get a big tower, install an ITX board and put in one or two of these compute boards for a small cluster.

Thanks for the replies guys.

I would love a simple compute card like this that just sucked 75W of power from the PCI slot but had a jumper/switch that could make it independent (i.e. not search for other PCI boards, as is the case with this NUC).

Simply slot this in and have another full dedicated PC to encode my stream as it goes out live.

I’m sure there could be other uses … like having 4 or 5 of these in a HEDT system for a cluster? Who knows…

For me this will be perfect in my home studio (music): 1 PCIe card (Universal Audio DSP card), 3 or 4 SSDs, and Thunderbolt that will work with my Apollo interface. Hm, maybe do a Hackintosh also, we will see. Thanks for a good site.

This could be perfect for a small VSAN cluster.

Only downside I see here is memory support. If only it was possible to push in around 256GB of RAM, that would be great.

Isn’t Intel providing the baseboard specs and design guidelines to OEMs so they can make their own systems? I think I saw some at CES. I’d love to see what someone like Dell would do with this, with all the ready-built ecosystem they have.

I can also see a use case for using this in a rack, similar to a Supermicro Microcloud but instead of sleds, we just have cards. Also extremely reminiscent of a transputer!

Anyway, this is something that AMD should jump on too with their PCIe lane advantage. It is a no-brainer for them to adopt the baseboard and compute module functionality too. Just need a 2nd PCIe slot on the baseboard…

@AdditionalPylons The Dan A4 (7.2L) is significantly bigger than the NUC 9 at 4.95L. You might be thinking of the Velkase Velka 3 at 4.2L.

Though the design is fairly clever, I feel like Intel didn’t put a lot of effort into it.

1. Processor cards? Really? Vendor and platform lock-in?

2. They’re trying to emulate a “sandwich layout”, but they don’t seem to understand what makes the sandwich layout good. It’s not having your cooling system sandwiched in between 2 PCBs.

3. Why bother redesigning the industry-standard Flex-ATX PSU? Why not go further and design an even more compact PSU?

If size and expandability are all Intel has going for it, then the NUC 9 is a day late and a dollar short. The mini-ITX form factor has come a long way over the past 3 years, allowing enthusiasts to build PCs as small and as expandable as the NUC 9. Actually, it feels like most things Intel has released over the past 2 years have been lackluster. Hopefully Intel can drum up more interest in Lakefield or Alder Lake.

Nice machine and review. If the price weren’t so high, it would be much more tempting to get. At this price range, it’s got a lot of competition, if one didn’t mind going with physically larger servers. Question regarding the vPro/AMT functionality: is it possible to remotely install an OS (in particular ESXi) by mounting an iso on the network? Wondering if vPro/AMT allows the server to be completely managed remotely (including for fresh installations)? Wondering what I would be missing using vPro/AMT as compared to IPMI (such as HPE’s iLO).

@Ed Rota: Can you point me to a mini-ITX motherboard with 3+ PCIe slots and 2+ Thunderbolt ports?