The Intel NUC line started off as a family of low-power, very compact desktop PCs. Thanks to that combination, NUCs now power everything from conference room IT to personal desktops, and even portable proof-of-concept demos for high-end data center software. From those modest roots, Intel is taking the line to its next stage with the NUC 9 Pro kits, codenamed “Quartz Canyon.”

Intel sent us a unit powered by an 8-core Intel Xeon E-2286M to review. When we got it, the first thing that came to mind was not how revolutionary it was, but how similar it is to another Intel product we used in 2015 that was never publicly introduced. In this review, we are going to cover the capabilities of this NUC kit, as well as give a bit of a history lesson on where this design may have come from within Intel.

Intel NUC 9 Pro Video

If you want to hear more about this platform and some of the background commentary, you can check out the video that accompanies this review.

As usual, this written review has a lot more detail, but the video is an option for those who would rather listen.

Intel NUC9VXQNX “Quartz Canyon” Overview

The Intel NUC9VXQNX is a fairly compact unit measuring 9.37″ x 8.50″ x 3.77″ or 238mm x 216mm x 96mm. For some context, that is only a bit smaller than an HPE ProLiant MicroServer Gen10 Plus, which is based on a socketed Xeon E-2200 series processor.

Unlike the MicroServer Gen10 Plus, the NUC 9 Pro also fits an internal 500W power supply into that space.
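Small form factor systems are often compared by volume in liters. As a quick sketch of that arithmetic, using only the metric dimensions quoted above (the helper name is our own, for illustration):

```python
def volume_liters(length_mm: float, width_mm: float, height_mm: float) -> float:
    """Return the volume of a rectangular box in liters (1 L = 1,000,000 mm^3)."""
    return length_mm * width_mm * height_mm / 1_000_000


# Metric dimensions of the NUC9VXQNX as listed above.
nuc9 = volume_liters(238, 216, 96)
print(f"NUC 9 Pro: {nuc9:.2f} L")  # NUC 9 Pro: 4.94 L
```

This treats the chassis as a simple box, so it is an approximation of the enclosed volume, but it puts the NUC 9 Pro at just under 5 liters.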

In terms of front I/O, there is a front panel audio header along with two USB 3.1 and two USB 2.0 headers. There is even an SDXC (UHS-II capable) slot.

On the rear, there are two 1GbE NICs: one based on the Intel i219-LM and the other an Intel i210-AT port. There are also four USB 3.1 Gen2 ports, an HDMI 2.0a output, two Thunderbolt 3 ports, and an audio/optical combo port.

Something that is apparent from the rear is that the unit looks as though it is four PCIe expansion slots wide. That is because it essentially is.

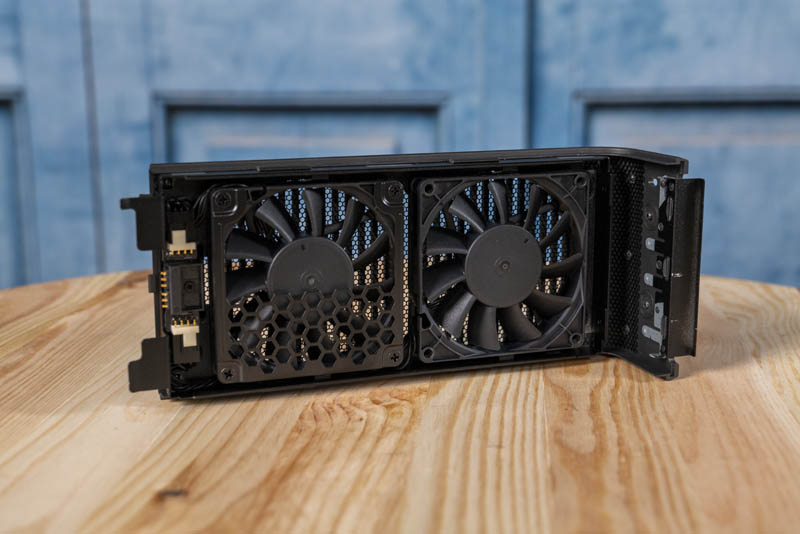

To open the system, the first step is removing the top panel, which houses two fans.

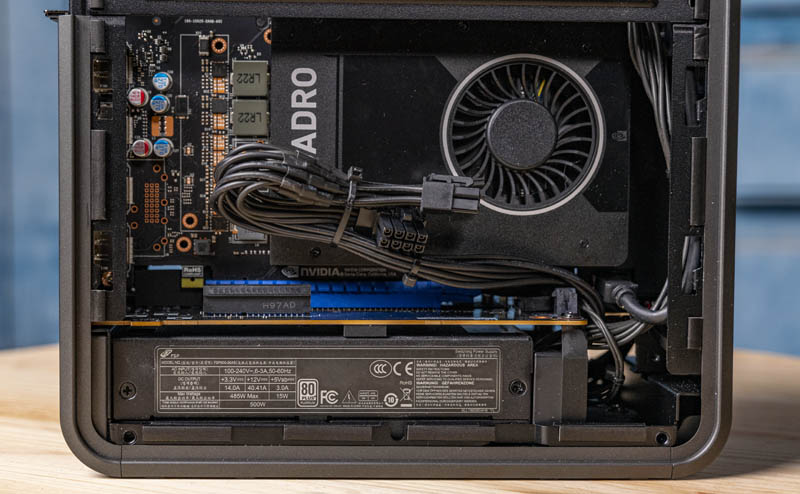

One can then remove the sides. Inside, we can see that our unit has a PNY NVIDIA Quadro P2200 GPU. This 5GB GPU offers four DisplayPort outputs, which are important for driving multi-monitor setups.

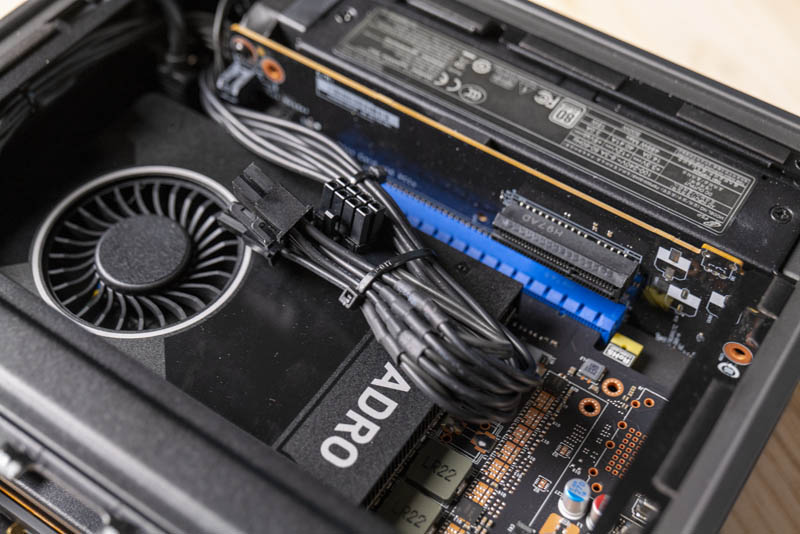

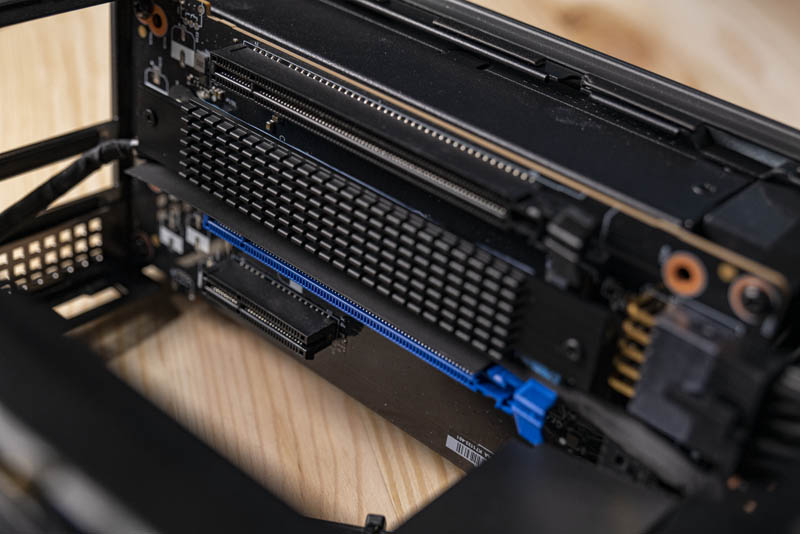

When we remove the GPU, we can see that it sits in a PCIe x16 slot, with a PCIe x4 slot next to it. This layout allows for a double-width GPU, and Intel says the chassis can take a GPU up to 8″ deep and 225W, powered by the internal 6+2-pin and 6-pin power cables.

The 8″ depth limit means one needs to be careful when shopping for GPUs, since many standard desktop GPUs are much longer. Also, a double-width GPU covers up the PCIe x4 slot.
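Intel’s stated GPU limits lend themselves to a simple pre-purchase checklist. The sketch below is a hypothetical helper of our own, not an Intel tool: it encodes only the three limits given above (8″ depth, double-width, 225W), and the example cards use made-up specifications rather than real products. It does not check cooler height, power connector placement, or other clearance details.

```python
from dataclasses import dataclass

# GPU bay limits for the NUC 9 Pro, as quoted from Intel in the text above.
MAX_LENGTH_IN = 8.0      # maximum card depth, inches
MAX_SLOT_WIDTH = 2       # double-width is the widest card that fits
MAX_BOARD_POWER_W = 225  # maximum GPU power Intel says the chassis supports


@dataclass
class Gpu:
    name: str
    length_in: float
    slot_width: int
    board_power_w: int


def gpu_fit_problems(gpu: Gpu) -> list[str]:
    """Return the limits a card violates; an empty list means it passes this check."""
    problems = []
    if gpu.length_in > MAX_LENGTH_IN:
        problems.append(f"length {gpu.length_in} in exceeds {MAX_LENGTH_IN} in")
    if gpu.slot_width > MAX_SLOT_WIDTH:
        problems.append(f"{gpu.slot_width}-slot card is wider than double-width")
    if gpu.board_power_w > MAX_BOARD_POWER_W:
        problems.append(f"{gpu.board_power_w} W exceeds {MAX_BOARD_POWER_W} W")
    return problems


def covers_x4_slot(gpu: Gpu) -> bool:
    """A double-width card in the x16 slot covers the adjacent PCIe x4 slot."""
    return gpu.slot_width >= 2


# Hypothetical example cards (illustrative specs, not real products).
compact = Gpu("compact dual-slot card", length_in=7.5, slot_width=2, board_power_w=200)
full_size = Gpu("full-length desktop card", length_in=10.5, slot_width=2, board_power_w=250)

for card in (compact, full_size):
    issues = gpu_fit_problems(card)
    status = "fits" if not issues else "; ".join(issues)
    print(f"{card.name}: {status} (covers x4 slot: {covers_x4_slot(card)})")
```

Returning a list of problems rather than a single yes/no makes it obvious which limit a candidate card misses, which is usually depth.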

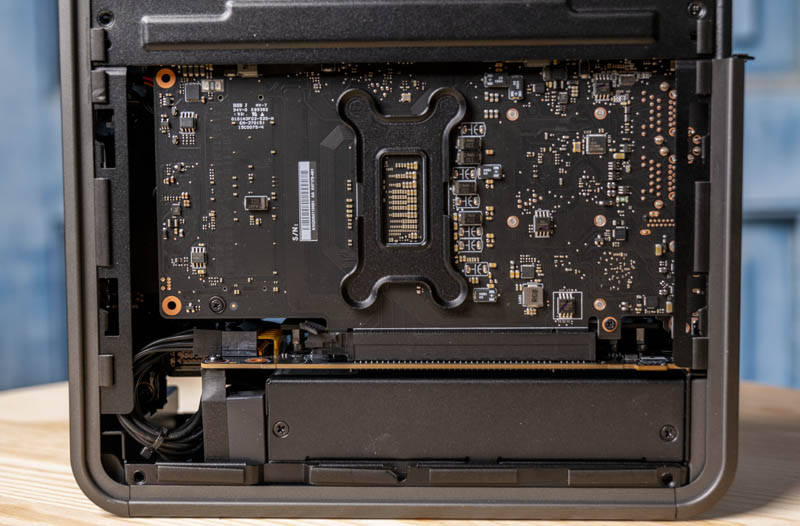

The photo also shows one of the more distinctive features of these Quartz Canyon NUCs: a PCIe card carrying the CPU, memory, cooling, and two NVMe SSDs. We will go into more detail on that module in a moment, along with why we have seen something like this before.

After unplugging a myriad of cables for front panel I/O and power, as well as the antenna leads for the Wi-Fi 6 AX200 (2.4Gbps) and Bluetooth 5, we can remove this Xeon-branded compute module just as we would a PCIe GPU.

Indeed, it has a PCIe 3.0 x16 connector that feeds the rest of the system with up to 16 PCIe lanes in total.

Underneath the module, we can find a PCIe x4 M.2 slot that can handle up to M.2 22110 (110mm) NVMe SSDs.

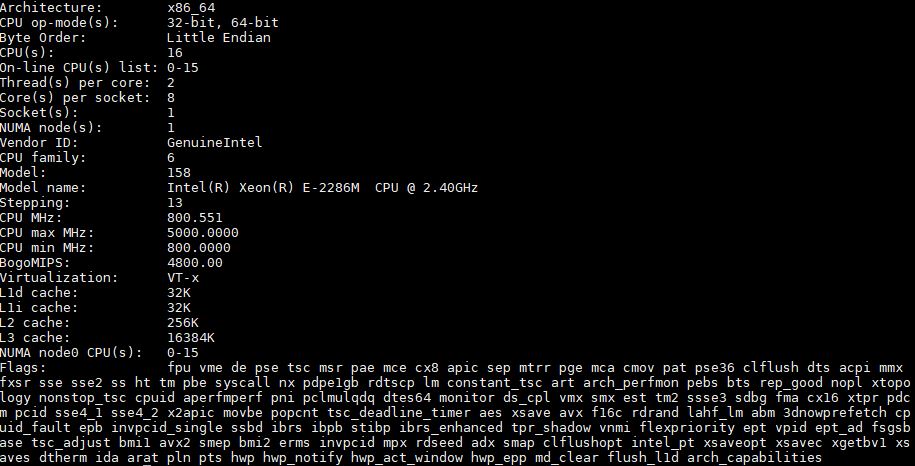

Opening the side cover of the module, we can see the copper cooling solution for the 8-core Intel Xeon E-2286M.

We often see this 45W TDP Xeon used as a high-end notebook part. We saw it, for example, in our Dell Precision 7540 with Intel Xeon and ECC Memory Review. As a mobile part, it also brings an integrated Intel P630 GPU, which drives the onboard display outputs.

The Intel Xeon E-2286M also has one of our least favorite product names. Elsewhere in the Xeon E-2100 and E-2200 series, a final digit of “6” denotes a 6-core part. Here we still have 8 cores, but with a lower clock speed range (2.4GHz-5.0GHz) and a lower TDP. This is a great CPU; we just wish it could be renamed.

Next to the CPU on one side are two DDR4 SODIMM slots. These support DDR4-2400 as well as DDR4-2666, and with the Xeon part we also get ECC support. In terms of capacity, this NUC can take up to 64GB at DDR4-2400 or 32GB at DDR4-2666.

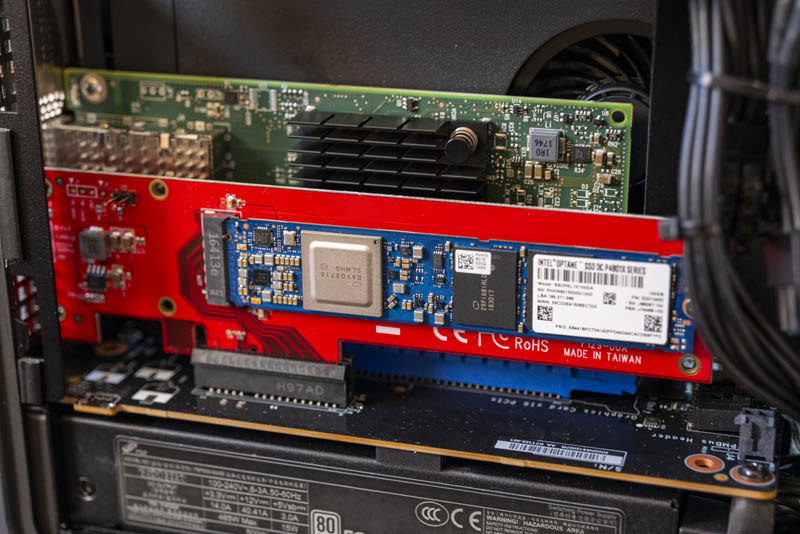

On the opposite side, we find two M.2 NVMe SSD slots. One is an M.2 22110 (110mm) slot, where we have an Intel H10 Optane plus NAND combo SSD installed. The other is an M.2 2280 slot.

One challenge is worth mentioning: the compute module connects to the rest of the system via a single PCIe Gen3 x16 link, while the expansion board has an M.2 NVMe slot, a PCIe x4 slot, and a PCIe x16 slot. That means there are 16 lanes to the compute module but 24 lanes’ worth of device slots on the board. As we will discuss in the topology section, the lanes are split either as 1x PCIe x16 or as 1x PCIe x8 + 2x PCIe x4 (the M.2 and x4 slots). While we have been primarily showing the GPU configuration, we also ran a setup with a PCIe x8 25GbE NIC plus an Intel Optane DC P4801X, which gave us 25GbE connectivity along with four NVMe SSDs in the chassis. That is an unusual combination for a system this size.

Overall, the package has room for the 8-core/16-thread CPU, three M.2 SSDs, 64GB of ECC memory, and a double-width GPU, all in an extremely compact chassis. The next question is whether this unit, at a list price of around $1565 without memory, SSDs, or a GPU, is worth this premium price tag.
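The lane arithmetic above can be sketched as a small model. This is our own simplified illustration, not Intel firmware behavior: the slot names are hypothetical labels, and we assume that in the single x16 mode the x4 slot and the baseboard M.2 slot receive no lanes, which is how we read the either/or split.

```python
UPSTREAM_LANES = 16  # PCIe Gen3 x16 link from the compute module to the baseboard

# Baseboard device slots and their maximum electrical widths (lanes).
SLOT_WIDTHS = {"x16 slot": 16, "x4 slot": 4, "M.2 slot": 4}

# The two lane splits described in the text (assumed: unused slots get 0 lanes).
MODES = {
    "1x x16": {"x16 slot": 16, "x4 slot": 0, "M.2 slot": 0},
    "1x x8 + 2x x4": {"x16 slot": 8, "x4 slot": 4, "M.2 slot": 4},
}


def check_mode(name: str, allocation: dict[str, int]) -> int:
    """Verify a lane split fits both the slots and the upstream link; return lanes used."""
    for slot, lanes in allocation.items():
        if lanes > SLOT_WIDTHS[slot]:
            raise ValueError(f"{name}: {slot} cannot take x{lanes}")
    used = sum(allocation.values())
    if used > UPSTREAM_LANES:
        raise ValueError(f"{name}: needs {used} lanes, only {UPSTREAM_LANES} available")
    return used


slot_total = sum(SLOT_WIDTHS.values())
print(f"Slot lanes on the baseboard: {slot_total}, upstream lanes: {UPSTREAM_LANES}")
for name, allocation in MODES.items():
    active = {slot: f"x{lanes}" for slot, lanes in allocation.items() if lanes}
    print(f"{name}: {check_mode(name, allocation)} lanes used -> {active}")
```

Running every slot at full width would need 24 lanes, so `check_mode` rejects that allocation; both of the splits described above use exactly the 16 lanes the compute module provides.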

Many are calling this completely revolutionary, but Intel has been putting Xeons of this class on compute cards with PCIe Gen3 connectivity for years. Next, we are going to take a look at some of that history before moving on with our review.

Beverly Cove! You’re like the only person who’d pick up on the parallel.

This reminds me a lot of the old (and new) PICMG-style computers, just in a consumer-friendly form. That might be a fun comparison given how cheap they are now.

Patrick, did you try putting the compute board into a normal motherboard? Does it still work? That would be my use case: a second PC for streaming, housed in the same case.

Please try it and let me know or post somewhere! I would really buy one if it did work.

Thanks

Great review Patrick! Very nice extra touch to relate it to the early prototypes as well!

Surprising to read that it is quiet. Isn’t that a 40mm fan in the PSU?

I’ve had five Mac minis over the years and I love the form factor, but ever since I wanted more GPU power, I’ve switched to larger stationary PCs, typically mATX. Something like this could tempt me to go back to small machines, but the $1500+ price absolutely kills it. You could easily build a more modular, more powerful, quieter, and cheaper mITX-based machine in a Dan A4 (which is even smaller than this) or an Ncase M1.

The main advantage of this NUC would be having two PCIe slots, but unfortunately they are only PCIe 3.0 and share x16. With AMD Ryzen, you can get PCIe 4.0 x16 in mITX.

Alex, I wouldn’t be surprised if putting this compute board into a PCIe of another motherboard would fry something.

@Alex

I doubt it’s possible to plug the board into another motherboard. In fact, I believe it would likely cause damage to one or both components (since in both cases the PCIe interface would provide power, unless you don’t plug the compute board into the PSU).

You could of course put the board in the same case as another motherboard, provided you don’t connect it to that motherboard (for example, a large case with only an ITX or mATX board installed). This actually sounds kind of intriguing: get a big tower, install an ITX board, and put in one or two of these compute boards for a small cluster.

Thanks for the replies guys.

I would love a simple compute card like this that just drew 75W of power from the PCIe slot but had a jumper/switch to make it independent (i.e., not search for other PCIe boards, as is the case with this NUC).

Simply slot this in and have another fully dedicated PC to encode my stream as it goes out live.

I’m sure there could be other uses, like having 4 or 5 of these in an HEDT system for a cluster? Who knows…

For me, this will be perfect in my home studio (music): one PCIe card (a Universal Audio DSP card), three or four SSDs, and Thunderbolt that will work with my Apollo interface. (Hmm, maybe a Hackintosh too; we will see.) Thanks for a good site.

This could be perfect for a small VSAN cluster.

The only downside I see here is memory support. If only it were possible to push in around 256GB of RAM, that would be great.

Isn’t Intel providing the baseboard specs and design guidelines to OEMs so they can build their own systems? I think I saw some at CES. I’d love to see what someone like Dell would do with this, given the ecosystem they have already built up.

I can also see a use case for using this in a rack, similar to a Supermicro Microcloud but instead of sleds, we just have cards. Also extremely reminiscent of a transputer!

Anyway, this is something AMD should jump on too. With their PCIe lane advantage, adopting the baseboard and compute module approach is a no-brainer for them. They would just need a second PCIe slot to the baseboard…

@AdditionalPylons The Dan A4 (7.2L) is significantly bigger than the NUC 9 at 4.95L. You might be thinking of the Velkase Velka 3, at 4.2L.

Though the design is fairly clever, I feel like Intel didn’t put a lot of effort into it.

1. Processor cards? Really? Vendor and platform lock-in?

2. They’re trying to emulate a “sandwich layout”, but they don’t seem to understand what makes the sandwich layout good. It’s not having your cooling system sandwiched in between 2 PCBs.

3. Why bother redesigning the industry-standard Flex-ATX PSU? Why not go further and design an even more compact PSU?

If size and expandability are all Intel has going for it, then the NUC 9 is a day late and a dollar short. The mini-ITX form factor has come a long way over the past 3 years, allowing enthusiasts to build PCs as small and as expandable as the NUC 9. Actually, it feels like most things Intel has released over the past 2 years have been lackluster. Hopefully Intel can drum up more interest in Lakefield or Alder Lake.

Nice machine and review. If the price weren’t so high, it would be much more tempting. At this price range it has a lot of competition, if one doesn’t mind going with physically larger servers. A question regarding the vPro/AMT functionality: is it possible to remotely install an OS (in particular ESXi) by mounting an ISO over the network? I’m wondering whether vPro/AMT allows the server to be completely managed remotely (including fresh installations), and what I would be missing using vPro/AMT compared to IPMI (such as HPE’s iLO).

@Ed Rota: Point me to a mini-ITX motherboard with 3+ PCIe slots and 2+ Thunderbolt ports?