At STH, we have been covering the Intel Gaudi 3 launch for some time. Alongside its Xeon 6900P launch, the company also announced that the Gaudi 3 AI accelerator is reaching general availability (GA). For Intel, this is a big deal since it needs to show that its AI offering is growing, and the news follows IBM Cloud recently saying it will deploy Gaudi 3.

We attended a pre-brief event last week in Oregon for this, so we are going to say this is sponsored.

Intel Gaudi 3 Goes GA for Scale-out AI Acceleration

Intel is fully on the AI bandwagon with AI ranging from the PC to data center clusters.

In April, we showed the Intel Gaudi 3 128GB HBM2e AI chip in the wild. The new chips are hitting GA in October with systems from several vendors. Dell has its PowerEdge XE9680, one of the least serviceable AI systems, but Dell has a big customer base.

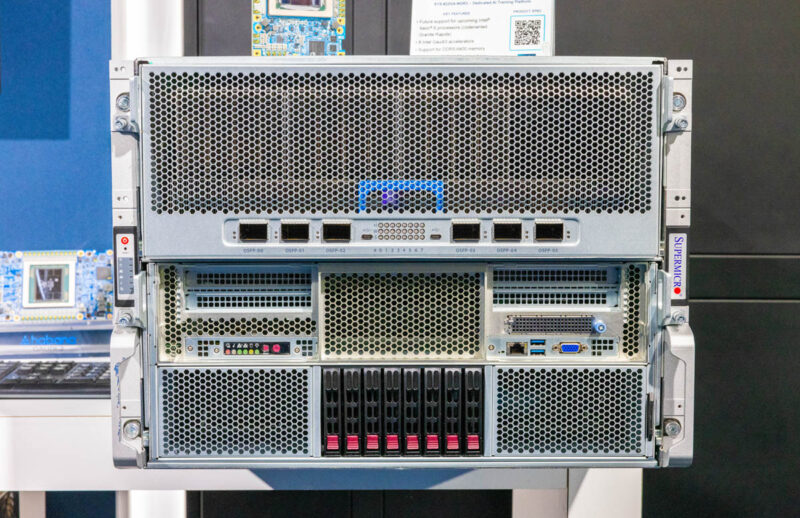

Supermicro showed off its X14 Gaudi 3 system in April 2024, and it was a functional system at that time. Other vendors brought cardboard and NVIDIA systems to Intel’s Analyst event in April, so the system we photographed was likely one of the first running and available models waiting for GA on the accelerators.

We also saw Wiwynn’s Gaudi 3 system in June, and other vendors have Gaudi 3 systems as well.

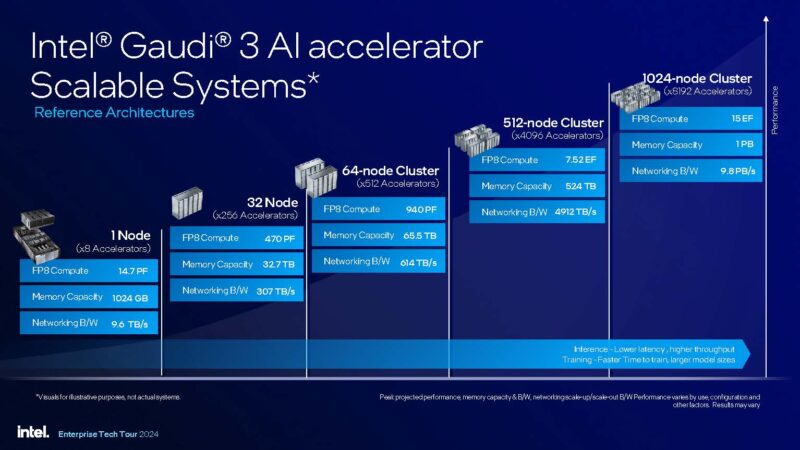

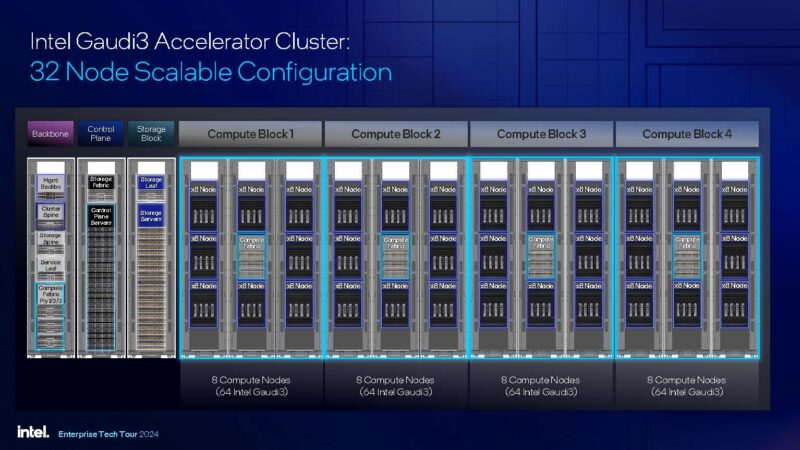

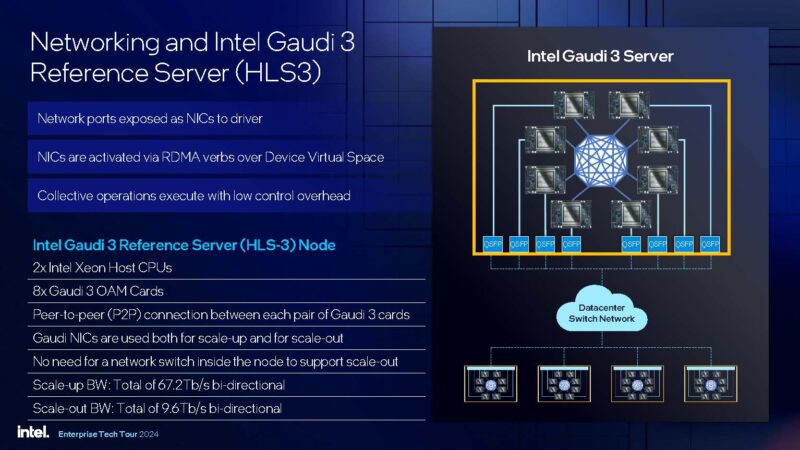

Systems are a big deal since not many of these are going to be deployed as single OAM modules. In fact, it would also be strange to simply see a single 8-GPU Gaudi 3 system deployed by itself these days. As a result, Intel is talking about building moderately sized clusters of up to 1024 nodes or 8192 accelerators.
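As a quick sanity check on those cluster figures, here is a minimal Python sketch of the arithmetic. It assumes eight accelerators per node, as in the 8-accelerator systems discussed here; the function names are made up for illustration and are not part of any Intel tooling.

```python
# Eight Gaudi 3 accelerators per node, matching the 8-accelerator systems
# described in the article. Function names are hypothetical.
ACCELERATORS_PER_NODE = 8


def accelerators_in_cluster(nodes: int, per_node: int = ACCELERATORS_PER_NODE) -> int:
    """Total accelerators in a cluster of `nodes` identical systems."""
    return nodes * per_node


def nodes_needed(accelerators: int, per_node: int = ACCELERATORS_PER_NODE) -> int:
    """Systems required to host `accelerators`, rounding up to whole nodes."""
    return -(-accelerators // per_node)  # ceiling division on integers


if __name__ == "__main__":
    print(accelerators_in_cluster(1024))  # 8192, the upper end Intel is discussing
    print(nodes_needed(256))              # 32 nodes for a 256-accelerator deployment
```

The 1024-node figure works out to exactly 8192 accelerators, so Intel's two numbers describe the same cluster.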

Intel is using Ethernet as a scale-out fabric and has a relatively moderate-density rack configuration taking 15 racks to hold the storage, networking, control plane, and 256 Gaudi 3 accelerators.

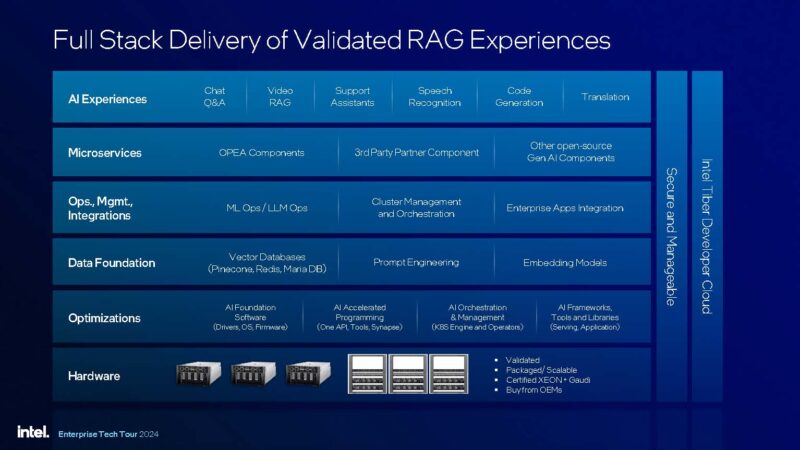

That is part of Intel’s plan to help customers and partners deliver validated RAG experiences for enterprises.

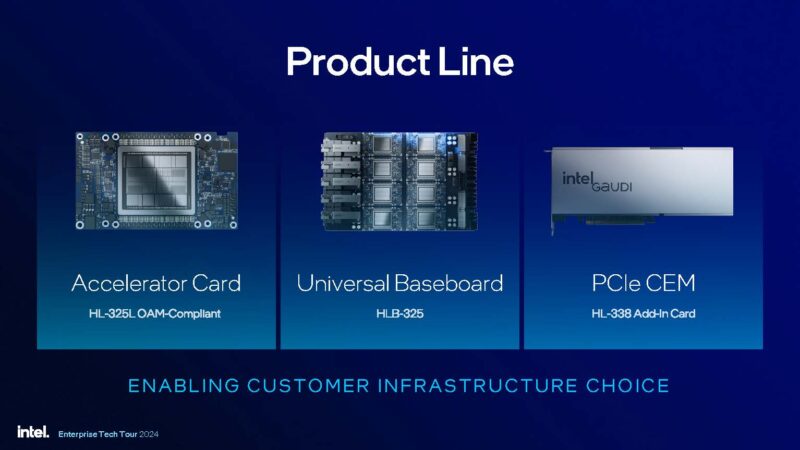

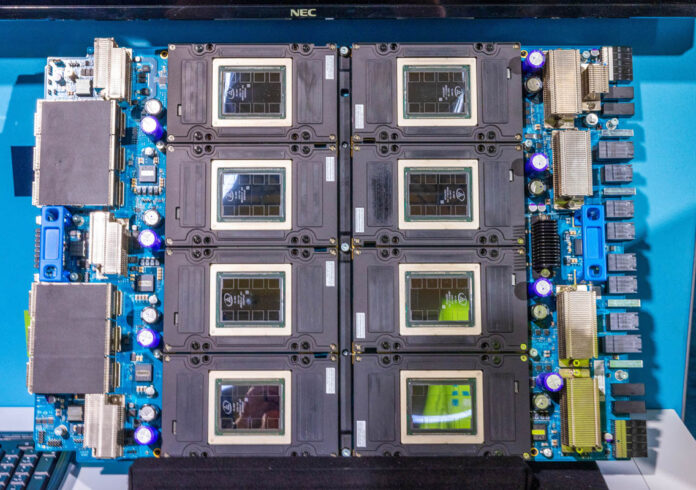

While we have focused on the HL-325L OAM card and the systems built around the HLB-325 UBB (shown in the cover image to this article), Intel also has a PCIe card.

The Intel HL-338 is a Gaudi 3 card in a PCIe CEM form factor. We should be very clear here that not every server will support this 128GB HBM2e card. It is a 600W TDP dual slot passively cooled card that requires a lot of chassis power and airflow.

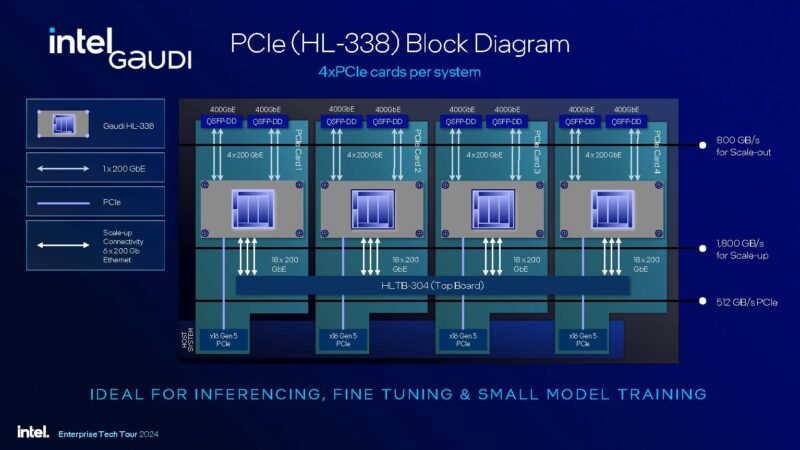

These cards can use QSFP-DD networking for two 400GbE links externally and then can use a backplane for local card-to-card transfers. The four-card block above is an interesting architecture.
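To put rough numbers on a four-card block, the sketch below multiplies out the per-card figures quoted above (600W TDP and two external 400GbE links per card). The `PcieCard` class, the helper names, and the 2000W budget in the example are hypothetical and only there to illustrate the check; real chassis limits depend on the server vendor.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PcieCard:
    """Per-card figures as quoted in the article for the HL-338."""
    tdp_watts: int = 600     # card TDP
    external_links: int = 2  # external network links per card
    link_gbps: int = 400     # 400GbE per link


def block_totals(cards: int, card: PcieCard = PcieCard()) -> tuple[int, int]:
    """Return (total TDP in watts, total external bandwidth in Gb/s)."""
    power = cards * card.tdp_watts
    bandwidth = cards * card.external_links * card.link_gbps
    return power, bandwidth


def fits_power_budget(cards: int, budget_watts: int, card: PcieCard = PcieCard()) -> bool:
    """True if the cards' combined TDP fits a chassis accelerator power budget.

    `budget_watts` is whatever the server vendor allots to add-in cards;
    it is an input the reader supplies, not a figure from Intel.
    """
    power, _ = block_totals(cards, card)
    return power <= budget_watts


if __name__ == "__main__":
    print(block_totals(4))             # (2400, 3200)
    print(fits_power_budget(4, 2000))  # False: a hypothetical 2000W budget is too small
```

Four cards come to 2400W of accelerator TDP alone, before CPUs, memory, and fans, which is why not every server can take them.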

Of course, NVIDIA is the big player these days in AI accelerators. Intel says, however, that its cards can be very competitive with the NVIDIA H100 both on a performance and performance per dollar basis. Realistically, Intel needs to discount its cards compared to the NVIDIA H100 given the current market dynamics, and it seems like these are notably less expensive.

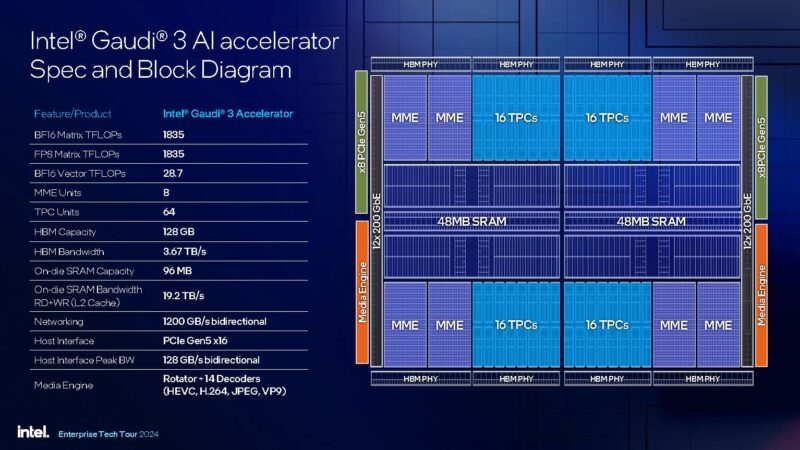

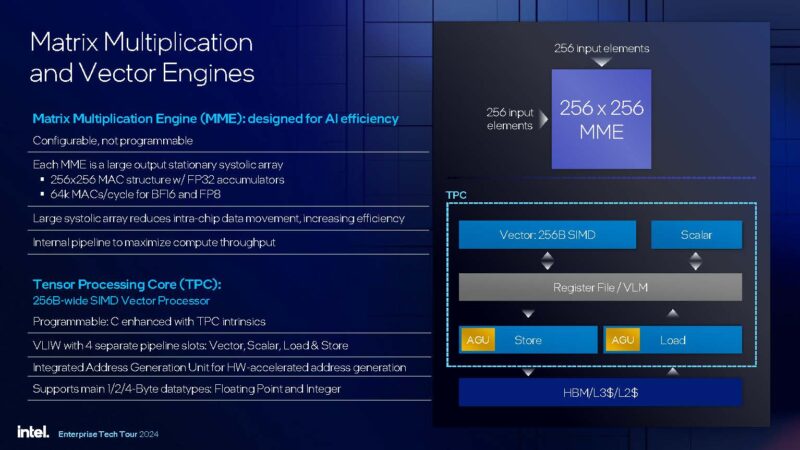

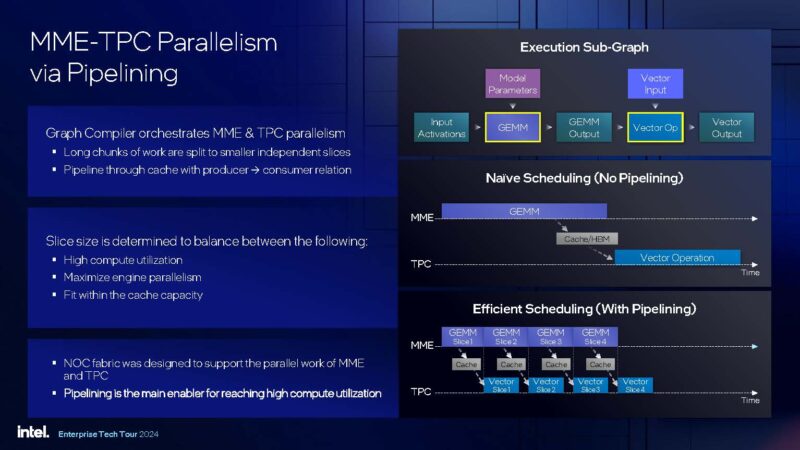

A few weeks ago, we went into detail on the Gaudi 3 architecture at Hot Chips 2024. Intel again showed a lot of that information on how the cards work.

We are just going to post the slides here since we went over this about a month ago.

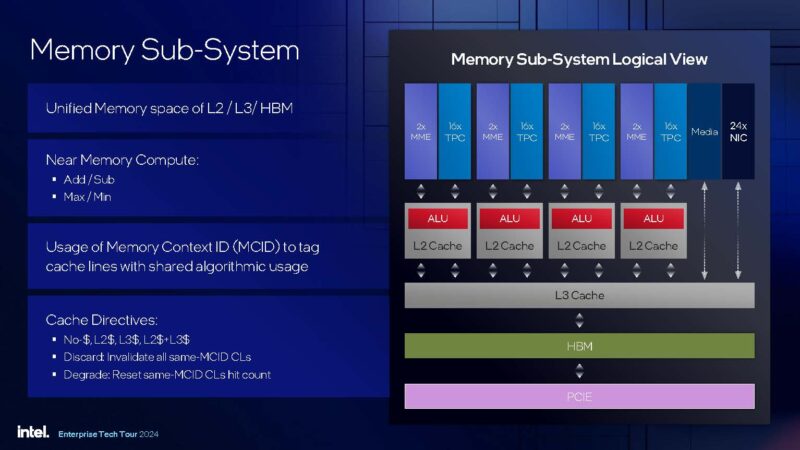

Gaudi 3 certainly puts a lot of emphasis on the memory subsystem, as is common in the space. The use of 200GbE for scaling out is one of the more interesting features. Most NVIDIA HGX H100 systems have a NIC per GPU, which adds cost and power consumption to a system. Plus, it creates another internal hop from GPU to NIC over PCIe. With Gaudi 3, the networking is onboard. A fun thought is that if you were to buy PCIe NICs with the same amount of bandwidth as the Gaudi 3 offers, you would likely spend as much, if not more, on the NICs than on the Gaudi 3.
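For a rough feel of that NIC comparison, here is a small Python sketch. It assumes 24 ports of 200GbE per accelerator, which comes from Intel's published Gaudi 3 specifications rather than from this article, and it treats all of them as comparable to external NIC bandwidth. In an eight-accelerator system, a share of those ports is typically used for links between accelerators inside the node, so read the result as an upper bound. The function names are hypothetical, and the NIC price is left as an input because no pricing is given here.

```python
# Assumption (not stated in this article): 24 x 200GbE ports per Gaudi 3,
# taken from Intel's published specifications.
GAUDI3_PORTS = 24
PORT_GBPS = 200


def gaudi3_aggregate_gbps(ports: int = GAUDI3_PORTS, port_gbps: int = PORT_GBPS) -> int:
    """Aggregate onboard Ethernet bandwidth of one accelerator, in Gb/s."""
    return ports * port_gbps


def nics_to_match(nic_gbps: int) -> int:
    """PCIe NICs of `nic_gbps` each needed to reach the same aggregate bandwidth."""
    return -(-gaudi3_aggregate_gbps() // nic_gbps)  # ceiling division


def nic_spend_to_match(nic_gbps: int, price_per_nic: float) -> float:
    """Total NIC spend for a reader-supplied price per NIC (no price is assumed here)."""
    return nics_to_match(nic_gbps) * price_per_nic


if __name__ == "__main__":
    print(gaudi3_aggregate_gbps())  # 4800
    print(nics_to_match(400))       # 12
```

Under that assumption, matching the onboard bandwidth would take a dozen 400GbE NICs per accelerator. Plug a street price into `nic_spend_to_match` to see how the NIC bill compares.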

Intel has been working with the Gaudi team since the Habana Labs acquisition, so this is now a third-generation product.

Here are the specs for the HLS3, the eight-GPU reference server.

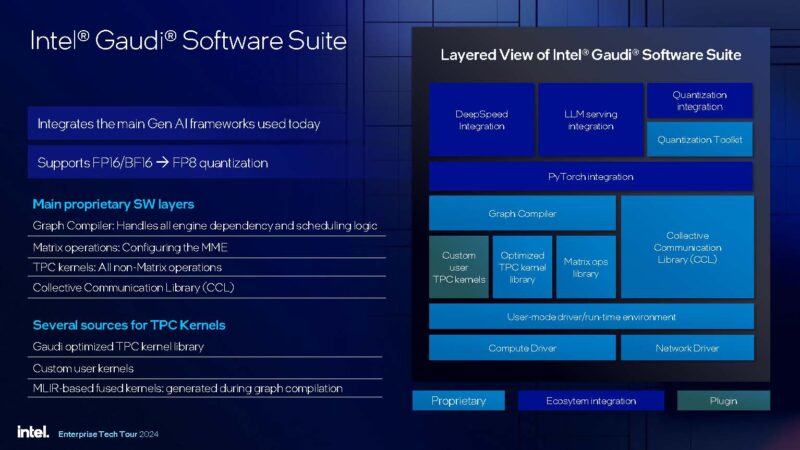

Intel has an entire software suite for the Gaudi line, as one would expect.

It also supports major frameworks.

Something fun is that this is available in the Intel Tiber Developer Cloud.

You might have seen the Intel Developer Cloud with Gaudi accelerators in our Touring the Intel AI Playground Inside the Intel Developer Cloud piece.

Final Words

Overall, it is great to see the Gaudi 3 hit general availability. Intel needs its AI accelerator business to take off, hopefully before Falcon Shores arrives. I asked whether Intel was looking to package end-to-end solutions so that companies could simply cart in clusters and have enterprise RAG applications running. It sounds like that is not the plan. Instead, Intel is looking to power those applications through partners. Hopefully, we get to show you more Gaudi 3 systems in the future.

All that external networking and the word DPU not mentioned once!

Products like this are why Intel should have continued development of the high-end switch ASICs from its Barefoot acquisition. It’d have been slick to include such a switch chip on the baseboard or inside the server chassis to internally link several baseboards while providing enough external bandwidth to feed everything. Similarly, Intel is leading in the photonics race, and it’d have been impressive to have all the external high-speed fiber links provided via a photonics chiplet while all the internal links leverage low-power KR baseboard interconnects. Stuff like this further enables Gaudi’s scale-out topology. Per chip, Intel can’t match NVIDIA, but it certainly can help customers build bigger clusters to offset that.