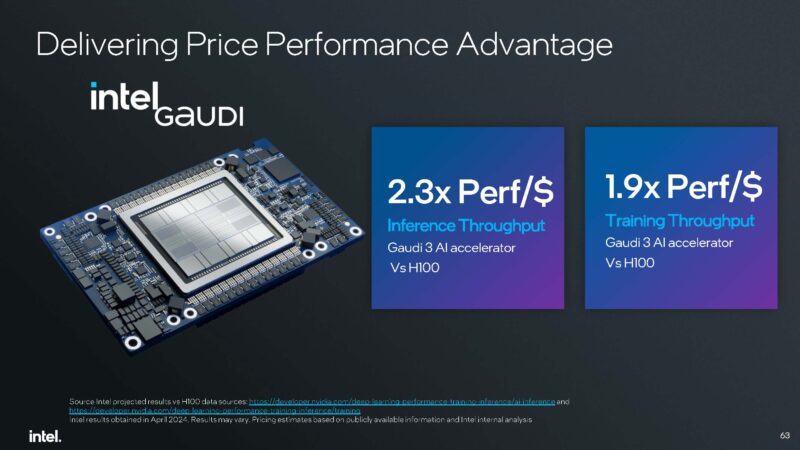

At Computex 2024 in Taipei, Intel made a bunch of announcements. Our Intel Xeon 6 6700E Sierra Forest piece is the one to read, as we go in-depth on the performance there. At the same time, since everything is AI, Intel also talked up its Gaudi 2 and Gaudi 3 accelerators. What is more, Intel put public list prices on them.

Intel Gaudi 2 8x OAM UBB at $65K, Gaudi 3 at $125K, Both Including Networking

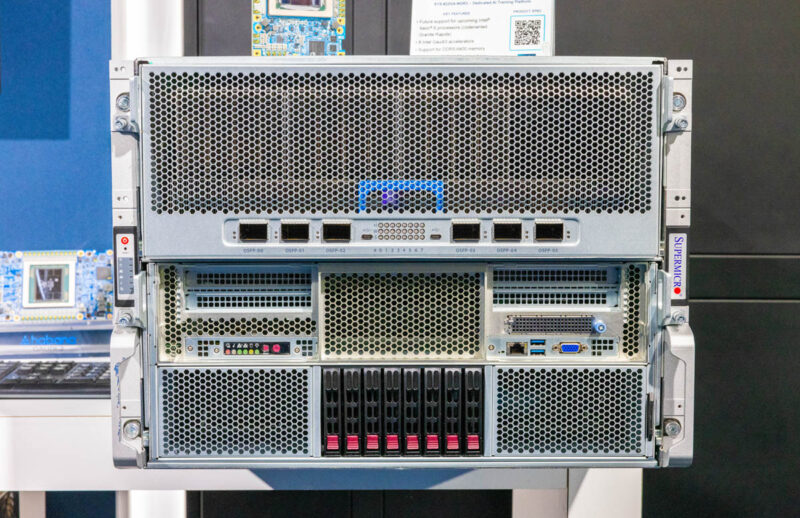

A few weeks ago, we found that Intel Gaudi 2 Complete Servers from Supermicro sell for $90K. Now we know the list price of the Gaudi 2 8x OAM UBB: $65K.

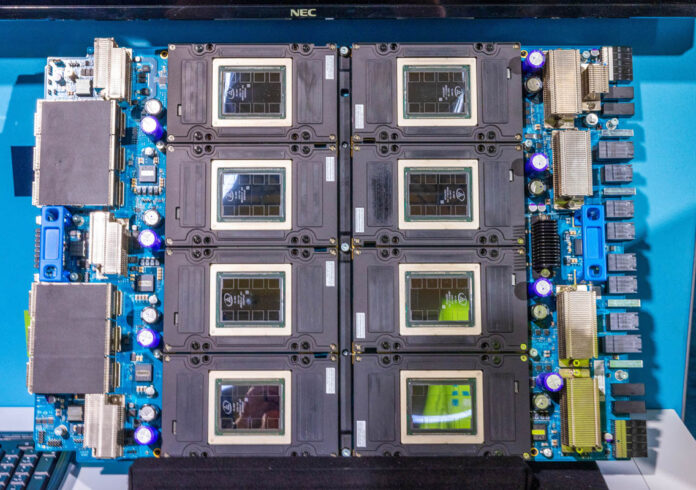

The Intel Gaudi 3 OAM UBB with eight accelerators is $125K list price.
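Dividing each list price by the eight OAM accelerators on the UBB gives a rough per-accelerator figure. This is a minimal sketch: the $65K and $125K list prices come from Intel's announcement, while the helper name `per_accelerator_price` is hypothetical, and the even split folds the baseboard's own cost into each accelerator's share. Actual quotes may differ from list.

```python
# Intel list prices for an 8x OAM UBB, as announced at Computex 2024 (USD).
UBB_LIST_PRICE_USD = {
    "Gaudi 2": 65_000,
    "Gaudi 3": 125_000,
}
ACCELERATORS_PER_UBB = 8  # each UBB carries eight OAM accelerators


def per_accelerator_price(ubb_price_usd: int, count: int = ACCELERATORS_PER_UBB) -> float:
    """Hypothetical helper: split a UBB list price evenly across its accelerators.

    The baseboard's own cost is folded into each accelerator's share.
    """
    return ubb_price_usd / count


for name, price in UBB_LIST_PRICE_USD.items():
    # Prints "Gaudi 2: $8,125 per accelerator" and "Gaudi 3: $15,625 per accelerator"
    print(f"{name}: ${per_accelerator_price(price):,.0f} per accelerator")
```

By that rough measure, a Gaudi 3 lists at a little under twice the price of a Gaudi 2.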

Something that many folks forget is that the Gaudi chips use Ethernet for the accelerator-to-accelerator interconnect.

The Ethernet comes directly from the AI accelerator itself, which means that one does not need a separate NIC, such as an NVIDIA ConnectX-7, for each accelerator.

Lower-cost accelerators, plus lower networking costs from not needing InfiniBand, mean that a Gaudi system can be much less expensive than the NVIDIA H100 standard.

Final Words

Given that many AI accelerators are supply-constrained, it is a great development to see a hard list price for AI chips. This has other impacts as well. If you are a company looking to purchase AI systems, this is a good starting point for evaluating system pricing. We now know that the $90K Supermicro deal is $25K for the server beyond the $65K Gaudi 2 OAM UBB. That is pretty cool. We wish more companies would put list prices on accelerators or assemblies.
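The $25K figure is simply the Supermicro complete-server price minus the Gaudi 2 UBB list price. The short sketch below does that subtraction and also shows what share of the system price the accelerator board represents. The helper name `server_overhead` is hypothetical, and the calculation assumes the Supermicro selling price and Intel's UBB list price are directly comparable, which real-world discounting may not bear out.

```python
SUPERMICRO_GAUDI2_SERVER_USD = 90_000  # complete Supermicro Gaudi 2 server price
GAUDI2_UBB_LIST_USD = 65_000           # Intel list price for the Gaudi 2 8x OAM UBB


def server_overhead(system_usd: int, ubb_usd: int) -> tuple[int, float]:
    """Hypothetical helper: return (dollars outside the UBB, UBB share of system price)."""
    overhead = system_usd - ubb_usd
    share = ubb_usd / system_usd
    return overhead, share


overhead, share = server_overhead(SUPERMICRO_GAUDI2_SERVER_USD, GAUDI2_UBB_LIST_USD)
print(f"Server beyond the UBB: ${overhead:,}")    # Server beyond the UBB: $25,000
print(f"UBB share of system price: {share:.1%}")  # UBB share of system price: 72.2%
```

In other words, roughly 72% of that system price is the accelerator baseboard, and the remaining $25K covers the rest of the server.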

For AI chip startups, Intel now has its price/performance number out there, including integration into an industry-standard form factor with scale-out Ethernet networking.

Let the games begin.

The reason NVIDIA uses InfiniBand is latency.

Yes, that is the key reason NVIDIA uses InfiniBand cards.

Is the emergence of hard list prices for AI accelerators a sign that the industry is moving towards greater transparency, or could it be a strategic move to create artificial scarcity? As companies begin to publish their pricing, what implications might this have for competition and innovation in the AI hardware market?

As companies like Intel reveal hard list prices for their AI accelerators, could this lead to price wars that ultimately harm innovation and quality in the industry? Or might it instead foster a more competitive environment that benefits consumers in the long run?