One of the key trends we started to see in the second half of 2017 and into 2018 is an increasing focus on NVMe storage. Prior to June/July 2017, hyper-scale companies and some storage array vendors were already all-in on NVMe storage, while other server and hyper-converged vendors were waiting for the new platform technology transition before moving to NVMe-focused storage arrays.

Market Background

When you look at modern SSD BOM pricing between SAS3, SATA III, and NVMe drives of the same capacity, the largest cost driver is NAND. There are a few implications to this. The first and most spoken about is the need to drive the cost of NAND down in order to hit a given capacity.

As newer server platforms based on Intel Xeon Scalable and AMD EPYC have hit the market, there are more designs that incorporate NVMe storage. Whereas building all-NVMe storage arrays in the past often required choosing from a limited number of base models or resorting to OEM customization, today robust NVMe arrays are relatively widespread and easy to implement. As a result, we are seeing a notable shift to NVMe arrays over their SAS and SATA counterparts, given how close NVMe SSD pricing now is to that of legacy interface peers.

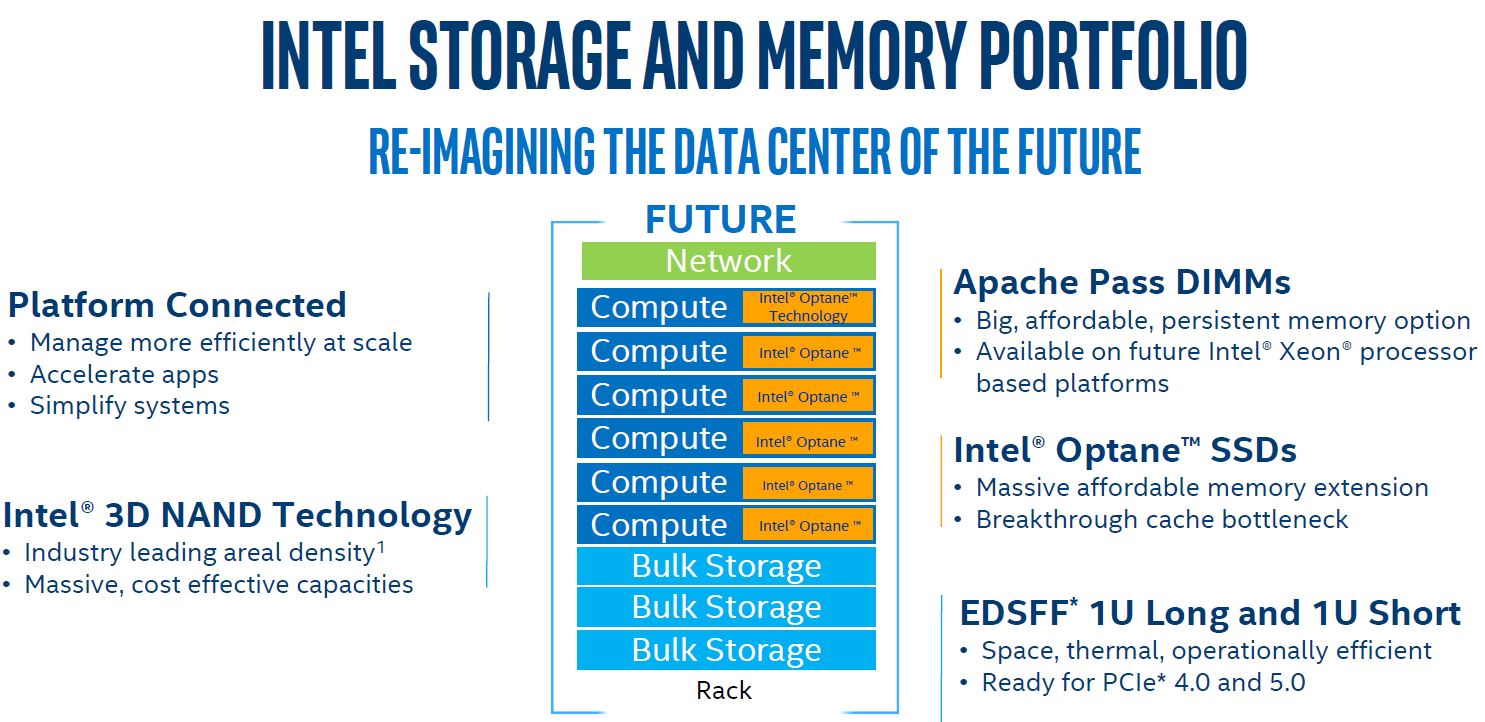

In our briefing, Intel had a number of interesting slides about its corporate vision, but we wanted to highlight its vision of the future.

The key is that Intel envisions Optane becoming the primary storage inside compute servers. For now, that means NVMe, even though NVMe storage performance took a massive hit from the Spectre and Meltdown patches. In the future, that may or will mean Apache Pass DIMMs that put 3D XPoint in the DIMM channels. Apache Pass has been delayed to Cascade Lake or to late 2018.

Bulk storage will essentially become NAND SSDs (e.g. the Intel DC P4510) and Intel’s vision for a data center is essentially a tiered/cached storage system.

The crux of this vision is that Optane will come down in price and NAND SSDs will push hard drives largely out of warm storage. For that to happen, NAND SSDs need to hit a higher density and be serviceable as large-scale primary storage with Optane acting as the read/write buffer.

With that market background in mind, it is time to take a look at the Intel DC P4510 that Intel is launching to address this market.

Intel DC P4510 in the Portfolio

Focused on maximizing capacity per NVMe slot as well as lowering the cost per TB of storage, Intel is launching the DC P4510 using its 64-layer 3D NAND technology, which helps maximize the capacity the company can produce while minimizing the cost per TB.

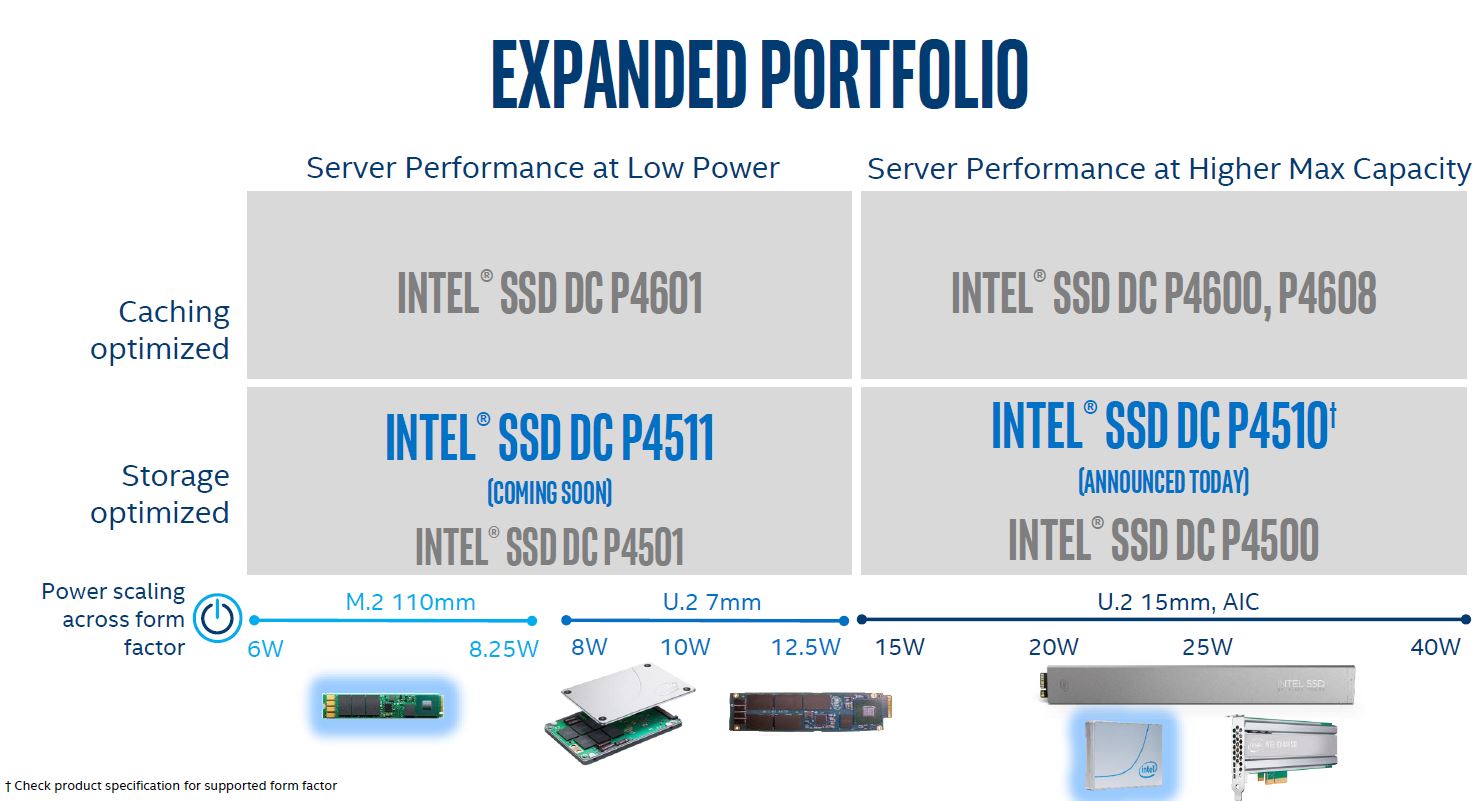

During the briefing, Intel first discussed specs, but we thought the portfolio slide would be an easier way to introduce what we are seeing.

Combined with the vision above, that NAND today can be a cache tier but is moving to a capacity tier, these are the drives meant to focus on storing a large amount of data at low cost. The Intel DC P4600, P4601, and P4608 series are instead focused on higher write endurance, potentially as cache drives today.

The other telling part of this slide is the way Intel is building its portfolio. For this capacity tier, Intel is offering 15mm U.2, add-in card, and EDSFF form factors. Clearly, the message here is that by adding more NAND packages on a larger PCB, Intel can maximize the amount of capacity per drive and controller to drive down costs. Smaller form factors will be serviced by the Intel DC P4511 series, which will range from M.2 to thin 7mm U.2 and EDSFF short form factors.

Intel makes these new model numbers largely to distinguish features. Where we can see the company going is towards a set of tiers. Let us call them Gold, Silver, and Bronze, where Gold is the high-endurance, high-performance Optane, Silver is the higher performing NAND DC P4600 series, and Bronze is the lower performing P4510 optimized for cost. Perhaps in that model Apache Pass becomes a Platinum line of SSDs.

Those are not official names, but Intel essentially has three tiers of NVMe SSDs and this occupies the lower tier from a performance perspective. Given that portfolio positioning, we expect these drives to be lower performance, but higher capacity and lower cost.

Intel DC P4510 Series Overview

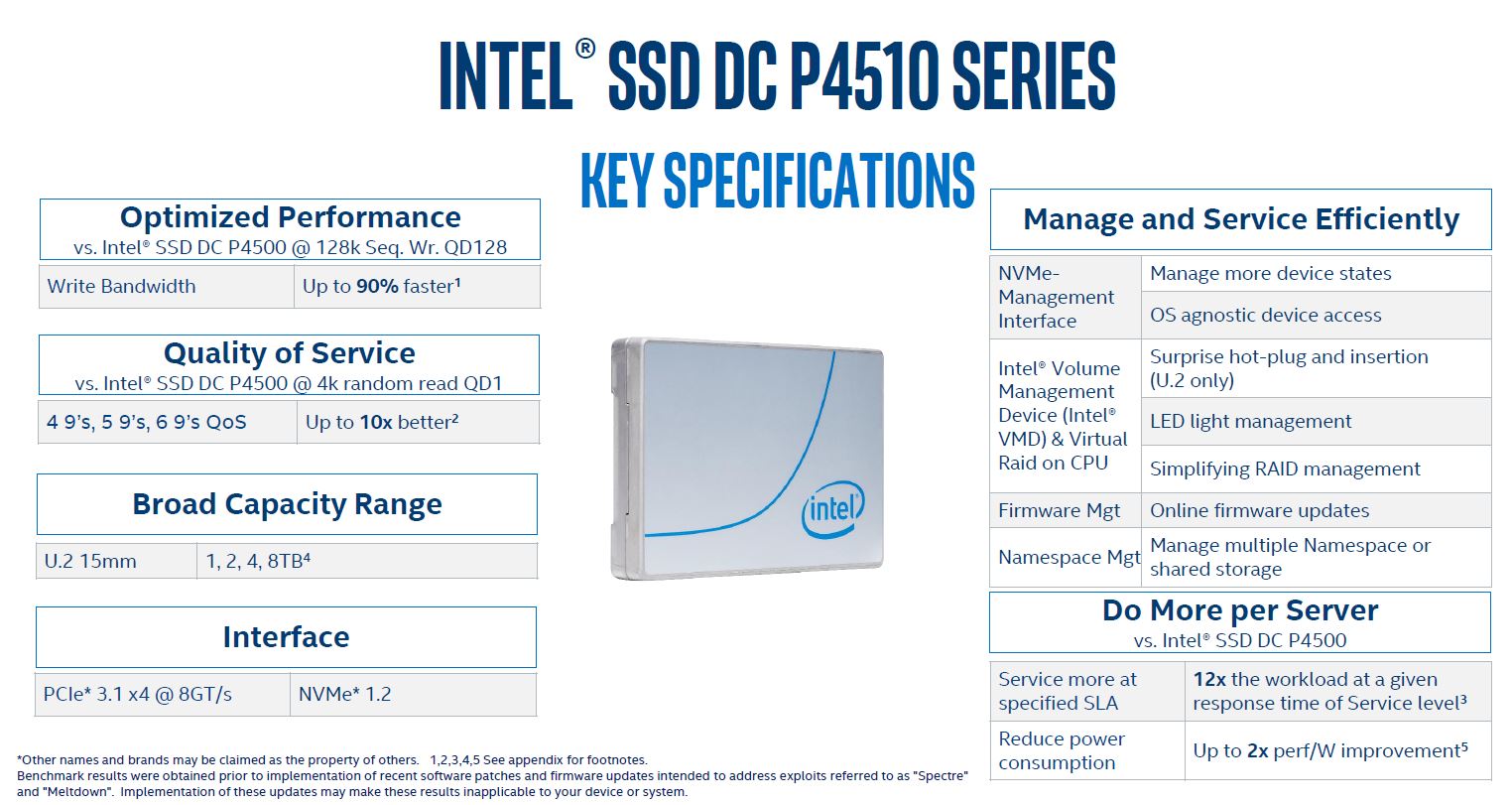

Intel’s newest drives range from 1TB to 8TB in capacity in the U.2 15mm form factor. That is perhaps the most important spec to glean from this slide.

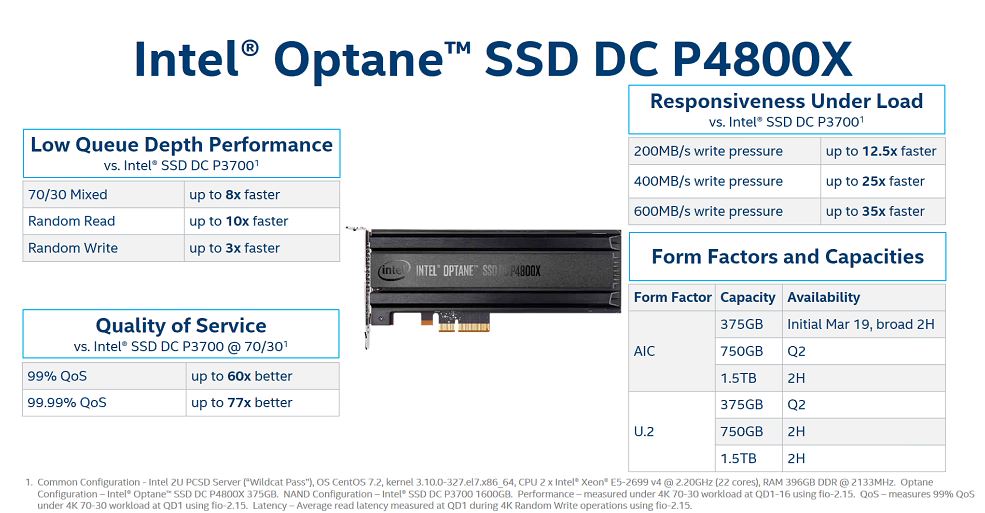

An extremely interesting point on this slide is that we are seeing less consistency in how specs are presented. For example, Intel is de-emphasizing standard metrics like maximum sequential read/write speeds, power consumption, MTBF, UBER rates, and IOPS at a given queue depth. Instead, Intel’s specs now show capacity, form factor, and interface, while the remaining key specs or features that are highlighted change from drive to drive. For context, here was the P4800X slide, which was not labeled as specs:

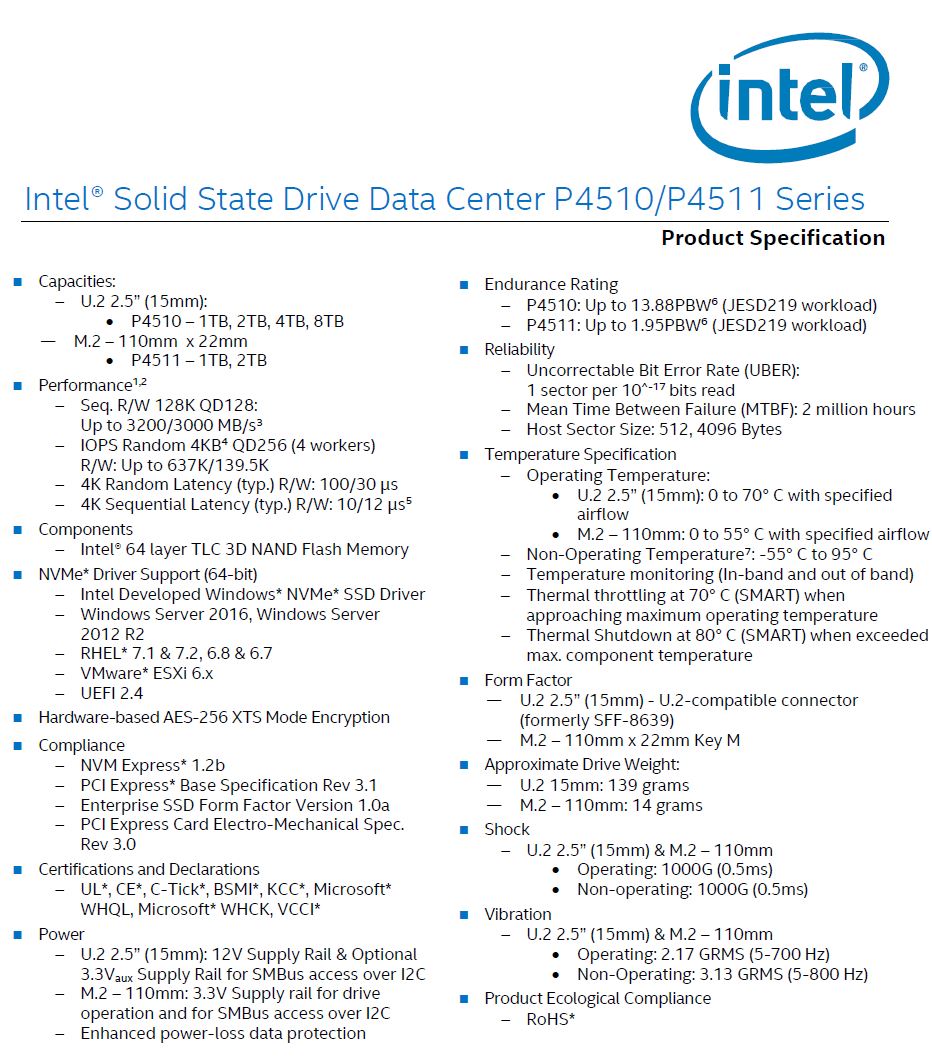

That change makes sense, as the specs vary by model. On many of the traditional metrics, NVMe SSD makers have been pushing against the limits of the PCIe Gen 3 bus for some time. Before the briefing, Intel sent the Intel DC P4510 and the upcoming M.2 1TB and 2TB P4511 specs in a PDF. Here is the relevant excerpt:

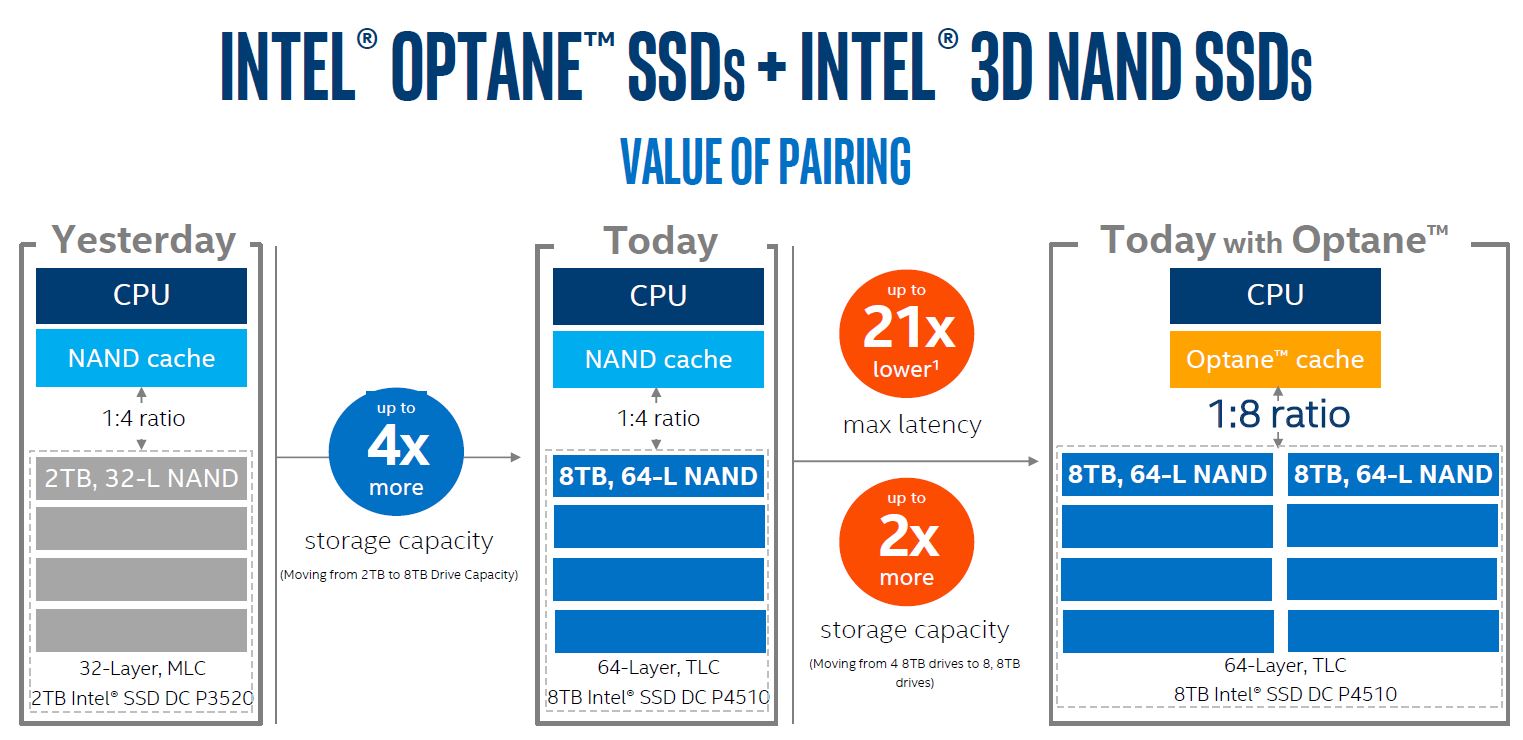

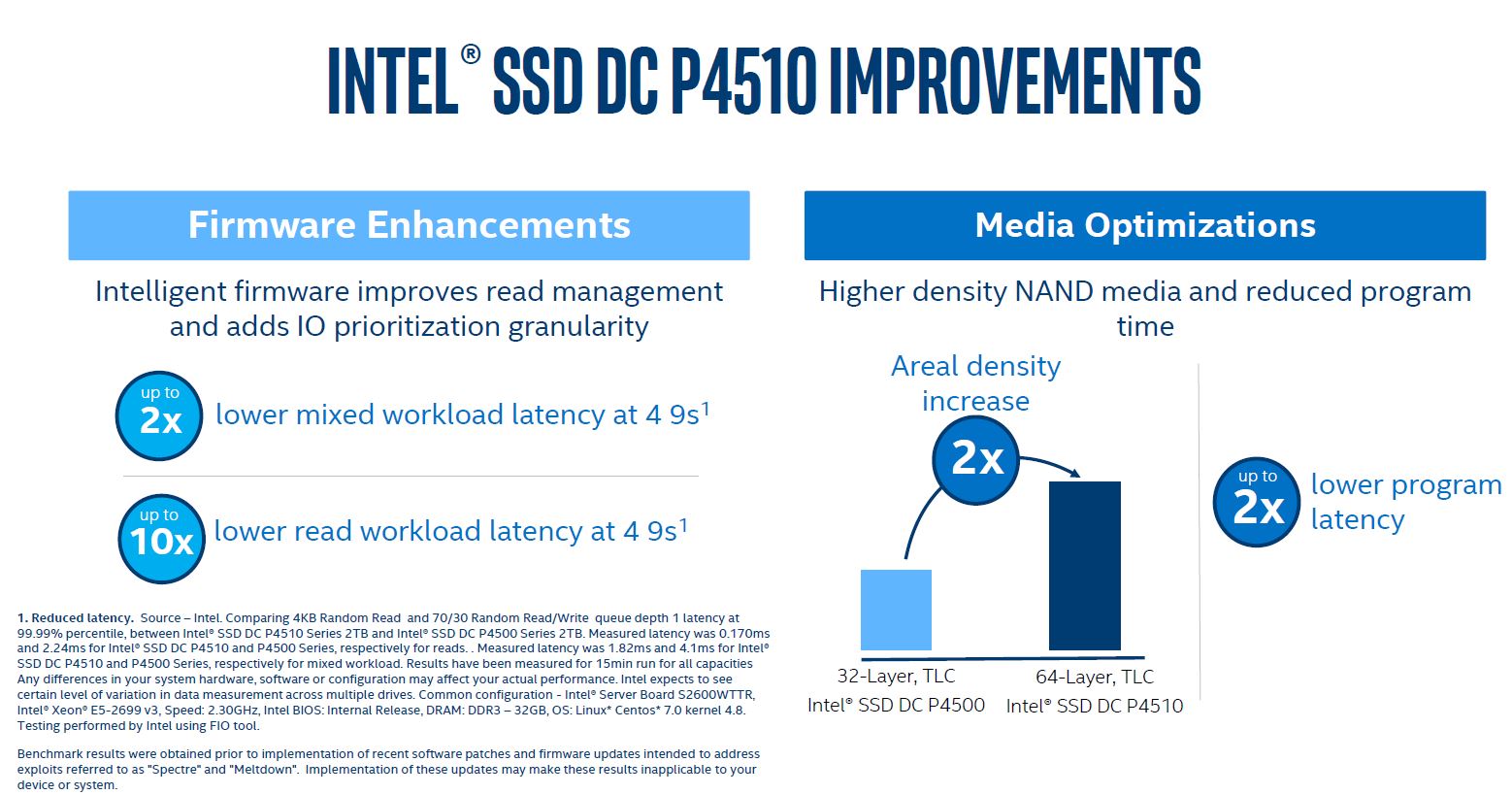

In our briefing, Intel focused quite a bit on the generational improvements from the DC P4500 to the P4510. Essentially, as Intel moved from 32-layer TLC NAND to 64-layer TLC NAND media, it was able to get higher density and more performance.

The net impact is that these drives perform better. One piece of context here is that these tests still carry disclaimers that they were run before the Spectre and Meltdown patches, which negatively impacted fast NVMe storage.

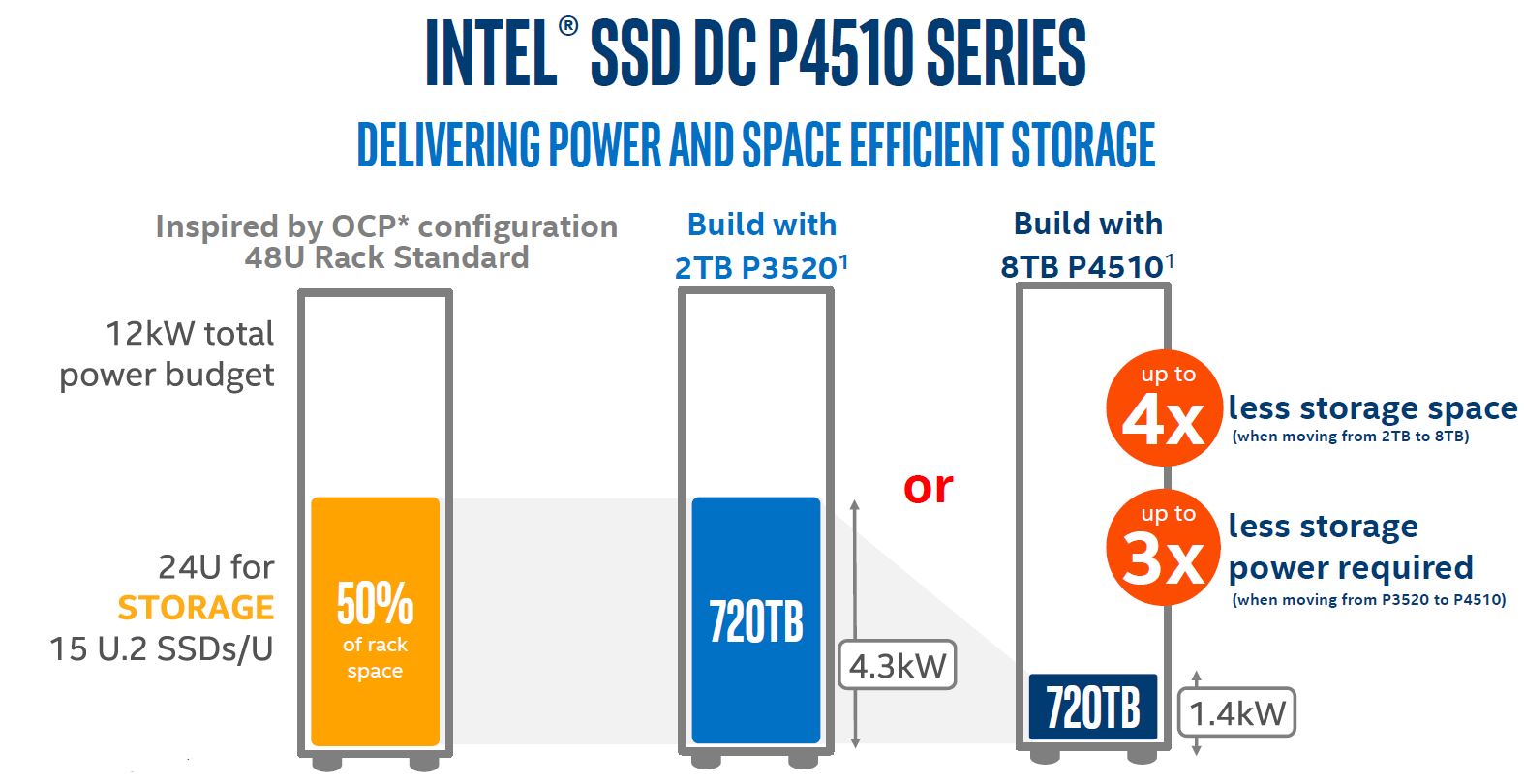

A bigger impact is on the capacity side. By increasing the capacity by 4x (2TB to 8TB), one can use fewer drives to reach a given capacity, and therefore require less power and space.

This chart illustrates the power and space savings but it omits an important factor: performance. Moving to fewer drives in fewer chassis means less PCIe bandwidth from the drives to the hosts accessing that capacity and fewer network ports assigned to that storage. Realistically what it means is not that companies are going to use less space for storage. Instead, it means that racks can have 4x the storage density.
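To make that trade-off concrete, here is a minimal sketch of the arithmetic. The 64TB target and the per-drive throughput figure are hypothetical placeholders chosen for illustration, not Intel specifications, and the sketch assumes both drive sizes deliver the same per-drive throughput, which will not hold exactly across generations.

```python
import math


def drives_needed(target_tb: float, drive_tb: float) -> int:
    """Whole drives required to reach a target raw capacity."""
    return math.ceil(target_tb / drive_tb)


def aggregate_bandwidth(drive_count: int, per_drive_gbps: float) -> float:
    """Naive aggregate drive throughput in GB/s (ignores host and network limits)."""
    return drive_count * per_drive_gbps


# Hypothetical inputs for illustration only.
TARGET_TB = 64          # assumed raw capacity target
PER_DRIVE_GBPS = 3.0    # assumed placeholder throughput, not a datasheet value

for drive_tb in (2, 8):
    count = drives_needed(TARGET_TB, drive_tb)
    bandwidth = aggregate_bandwidth(count, PER_DRIVE_GBPS)
    print(f"{drive_tb}TB drives: {count} drives, {bandwidth:.1f} GB/s aggregate")
```

Under these assumptions, moving from 2TB to 8TB drives cuts the drive count to a quarter, and the naive aggregate drive bandwidth available to reach that capacity drops by the same factor.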

Intel DC P4510 OS Testing

One of the key items we saw missing from the official spec sheet is Ubuntu support. While not officially supported, we have the drives running under Ubuntu 16.04.3 LTS, 17.10, and an early development snapshot of Ubuntu 18.04 LTS out of the box. Ubuntu is a huge Linux distribution, so we wanted our readers to have that bit of information. We also managed to use the 8TB drive with FreeBSD 11.1-RELEASE, SLES 12 SP3, openSUSE Leap 42.3, CentOS 7.2 and 7.4, and Citrix XenServer 7.2 and 7.3.

Essentially, most modern OSes support NVMe drives out of the box these days. Most of the early review tech press uses Windows or RHEL/CentOS, so we wanted to double-check with compatibility testing that more OSes are supported. Luckily, we had a multi-boot rig online to help with this, even given our short testing period.

More performance focused numbers will be in our follow-up review.

Final Words and What is Next

Given that we had a fairly short testing window, we decided to publish this as a news piece. We will be following up with a piece on single-drive and multi-drive VROC performance in servers, since Intel also sent us a key, along with multi-drive RAID figures and potentially some additional real-world figures from clusters we have set up in DemoEval.

What you may notice is that Intel is emphasizing performance in this generation relative to its older generation drives, while de-emphasizing many standard performance metrics. We wholeheartedly agree with this approach. These are capacity-focused SSDs that are competing against SAS3 and SATA III SSDs, along with hard disks in some cases. As capacities grow, DWPD ratings are less of a concern than total write capacity, and we rarely see even 400-800GB SSDs hit 0.3 DWPD. Write speeds are important to some extent, but as these are designed to be a capacity tier, we are focused on read speeds and read latency rather than write speeds.
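The DWPD point can be illustrated with some quick arithmetic. This is a rough sketch rather than a rating method: the five-year service life is an assumption on our part, and the capacities and the 0.3 DWPD figure are simply the examples from the paragraph above, not datasheet endurance ratings.

```python
def total_writes_tb(capacity_tb: float, dwpd: float, years: float = 5.0) -> float:
    """Total data written (TB) at a given drive-writes-per-day rate.

    The 5-year default is an assumed service life, not a quoted warranty term.
    """
    return capacity_tb * dwpd * 365 * years


def equivalent_dwpd(daily_writes_tb: float, capacity_tb: float) -> float:
    """DWPD that a given daily write volume represents on a drive of this size."""
    return daily_writes_tb / capacity_tb


# An 800GB drive written at 0.3 DWPD absorbs this much data per day.
daily_tb = 0.8 * 0.3

# The same daily workload on an 8TB drive is a far smaller fraction of the drive.
print(f"Daily writes: {daily_tb:.2f} TB")
print(f"Equivalent DWPD on 8TB: {equivalent_dwpd(daily_tb, 8):.3f}")
print(f"8TB at 0.3 DWPD over 5 years: {total_writes_tb(8, 0.3):.0f} TB written")
```

The same daily write volume that amounts to 0.3 DWPD on an 800GB drive is a tenth of that on an 8TB drive, which is why total write capacity is the more useful lens as drives grow.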

UBER 1 sector per 10^-17 bits read.

Marketing department makes datasheets?

I’ll take a box of those 8TB U.2 drives please. Also intel crapped on broadcom (LSI + avago) in one of their slides, but the 94xx cards seem a good fit for this with mixed mode sas/sata/u.2 HBAs with built-in pcie switching. (4 x U.2 on x8 card for starters, apparently many more with upcoming expanders)

I really want to ditch all spinning rust for my next storage server build.