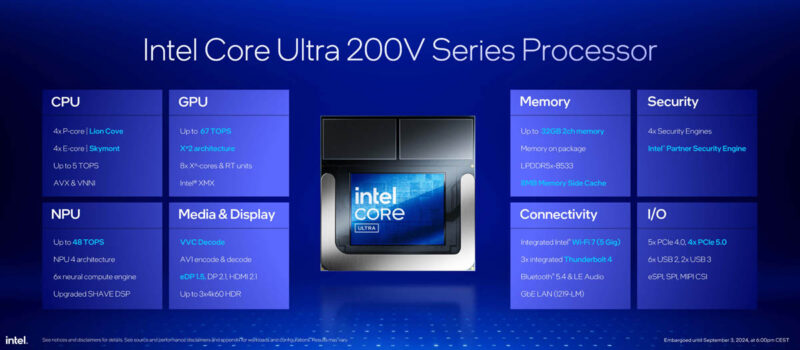

Last week, we explored more architectural details around Intel Lunar Lake for AI PCs at Hot Chips 2024. Now, we have the official launch. Intel is radically changing its design, bringing memory on-package, discarding Hyper-Threading, and lowering core counts in this generation. There is a new, faster GPU, faster NPU for AI, and new core designs. This is a big deal.

Intel Core Ultra 200 Series Lunar Lake Launched

Some Intel CPU launches are small incremental updates. This is not one of them. Intel is completely overhauling its mobile lineup.

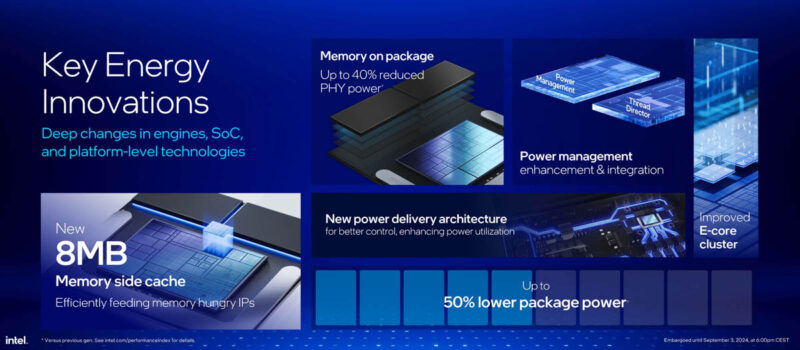

This is not necessarily going to blow the previous generation away on every performance metric, but the big thing to keep in mind is that Intel is focused on lower power. One example is bringing memory onto the package, which uses less power than driving signals off the package and through a motherboard.
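For intuition on why shorter on-package traces save power, here is the standard first-order model of dynamic (switching) power for a driven signal line. Applying it to the memory interface this way is our own simplifying assumption, not an Intel figure:

```latex
P_{\text{dyn}} \approx \alpha \, C \, V^{2} \, f
```

Here $\alpha$ is the activity factor (how often the line toggles), $C$ is the capacitance being switched (trace, pins, and receiver), $V$ is the signal swing, and $f$ is the toggle frequency. Moving DRAM onto the package shortens the traces, which lowers $C$. That makes it cheaper to drive the same memory bandwidth.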

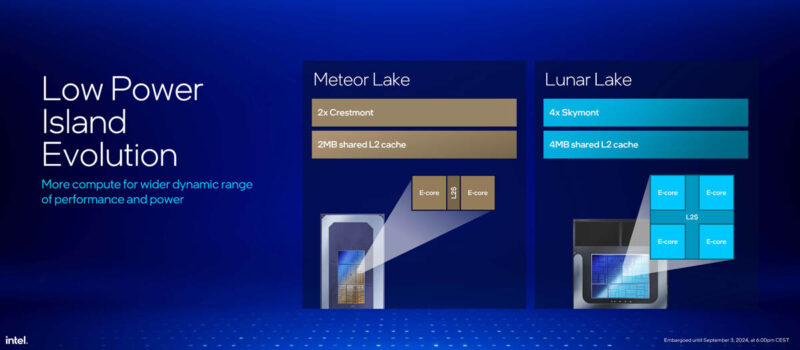

Another big change is the low-power island. Lunar Lake doubles its core count and cache and also updates the E-cores from Crestmont to Skymont. One thing worth noting is that Intel is introducing new E-cores on the client side in the middle of the launch of its Crestmont-based Sierra Forest Xeon 6 CPUs. It feels like the data center folks need to up their cadence.

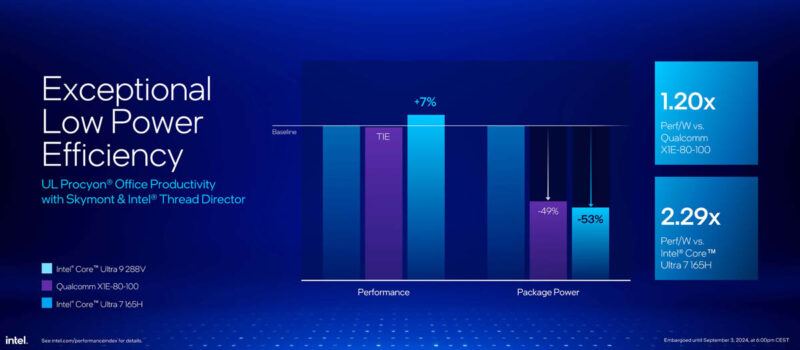

The impact is that Intel can match or exceed Qualcomm in power efficiency, delivering similar or better results at around the same power. It can also deliver performance similar to the previous generation at around half the power.

Of course, that is application-dependent, but it also extends to gaming.
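Equal performance at half the power works out to roughly double the performance per watt. The minimal sketch below shows that arithmetic. The scores and wattages are hypothetical, normalized placeholders, not Intel's measurements.

```python
def perf_per_watt(score: float, watts: float) -> float:
    """Benchmark score divided by package power (higher is more efficient)."""
    return score / watts

# Hypothetical, normalized numbers for illustration only (not Intel data).
prev_gen = perf_per_watt(score=100.0, watts=30.0)
lunar_lake = perf_per_watt(score=100.0, watts=15.0)  # same score at ~half the power

gain = lunar_lake / prev_gen
print(f"Efficiency gain: {gain:.1f}x")  # Efficiency gain: 2.0x
```

Because the claim holds performance constant, the gain depends only on the power ratio. That is why "half the power" translates directly into about 2x efficiency.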

Here is the generation-on-generation power decrease.

Intel also says it is now beating not just AMD but also Qualcomm in battery life. This is a disaster for Qualcomm, since power efficiency has been the main selling point of its parts over Intel and AMD. Qualcomm needs to show it can still win, because if you have used a Snapdragon X Elite device, you know there are still things that do not run on Arm.

Here is a look at the new core updates.

Here is the big one: Intel is not using Hyper-Threading. AMD swears by SMT, but as we went over in the Hot Chips 2024 presentation, Intel said that removing HT helped with the overall integration of the chip and, therefore, it was the right decision to remove it. It also helped with the single-thread performance of the design. This is an interesting one, but it needs to be noted that Intel has a long history with Hyper-Threading, so removing the feature was unlikely to be one done at a whim.

Intel says it has a new lower latency fabric on the SoC.

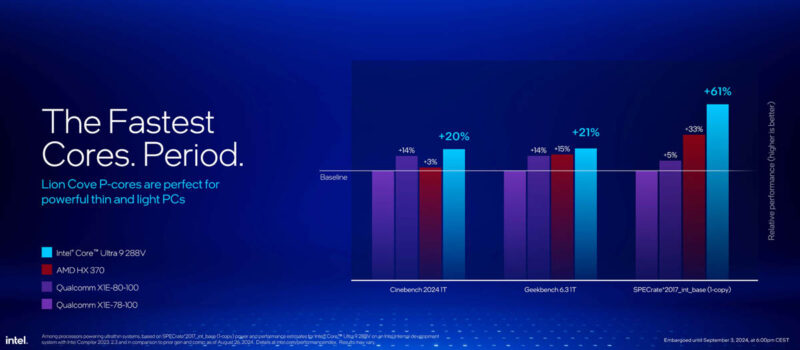

In terms of P-cores, Intel says that it now has the fastest P-cores.

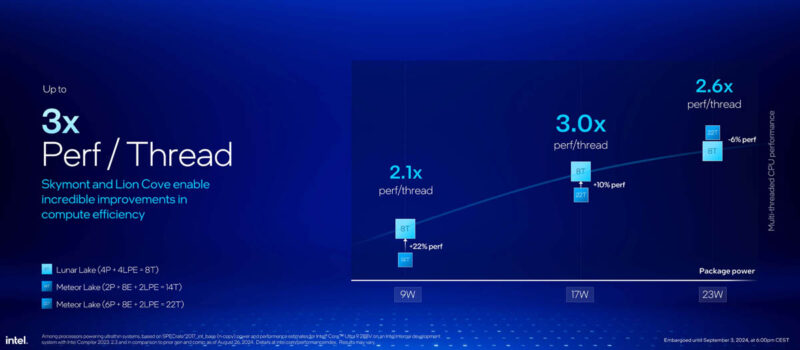

While Intel is not focusing as much on total SoC performance, it is putting a lot of effort into showing off per-thread performance. Lunar Lake 200V has four P-cores, four E-cores, and only eight threads. That is significantly fewer threads than the previous generation, which had 22.
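The thread gap comes from both fewer cores and the loss of Hyper-Threading. The sketch below counts threads per core cluster. The Meteor Lake layout (6 Hyper-Threaded P-cores, 8 E-cores, 2 low-power E-cores, as on parts like the Core Ultra 7 155H) is our assumption about which previous-generation chip the 22-thread figure refers to.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class CoreCluster:
    name: str
    cores: int
    threads_per_core: int  # 2 with Hyper-Threading, 1 without

def total_threads(clusters: list[CoreCluster]) -> int:
    """Sum hardware threads across all core clusters."""
    return sum(c.cores * c.threads_per_core for c in clusters)

# Assumed Meteor Lake-H layout: only the P-cores have Hyper-Threading.
meteor_lake = [
    CoreCluster("P-core", 6, 2),
    CoreCluster("E-core", 8, 1),
    CoreCluster("LP E-core", 2, 1),
]
# Lunar Lake 200V: 4 P-cores (no Hyper-Threading) + 4 E-cores in the low-power island.
lunar_lake = [
    CoreCluster("P-core", 4, 1),
    CoreCluster("E-core", 4, 1),
]

print(total_threads(meteor_lake))  # 22
print(total_threads(lunar_lake))   # 8
```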

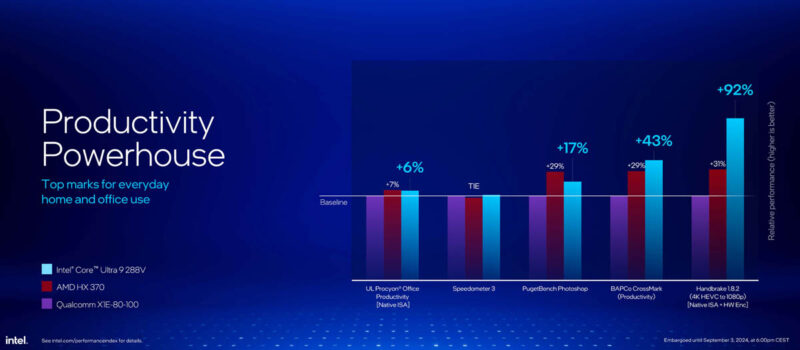

Still, Intel says this 4+4 layout ranges from roughly even with to much faster than Qualcomm and AMD. The other way to read this slide is that Intel's own testing shows AMD significantly faster than Qualcomm in many cases.

Intel says that the new E-cores are 68% faster than the previous generation.

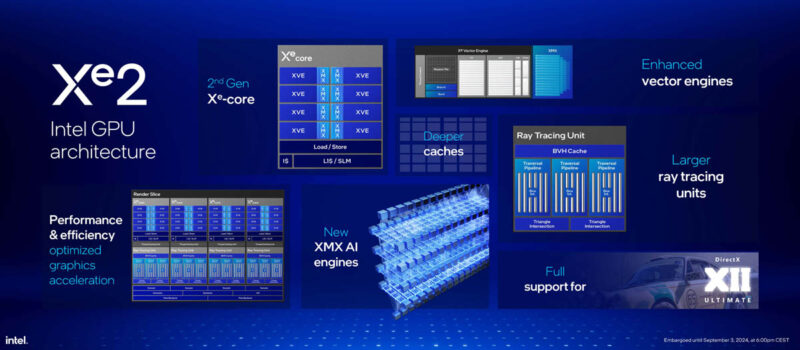

Another big change is the Intel Xe2 graphics. This is the new generation with new Xe cores and new XMX AI engines.

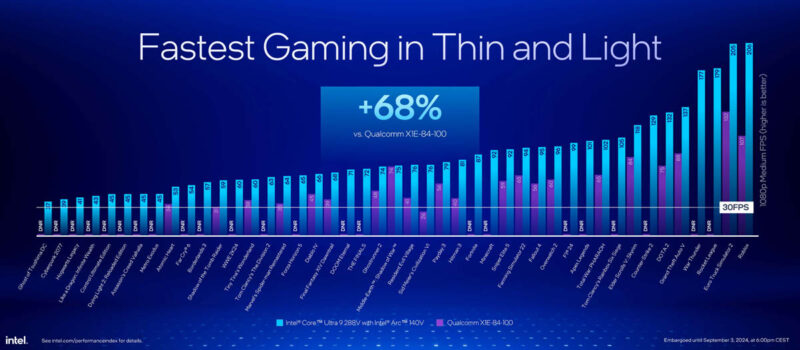

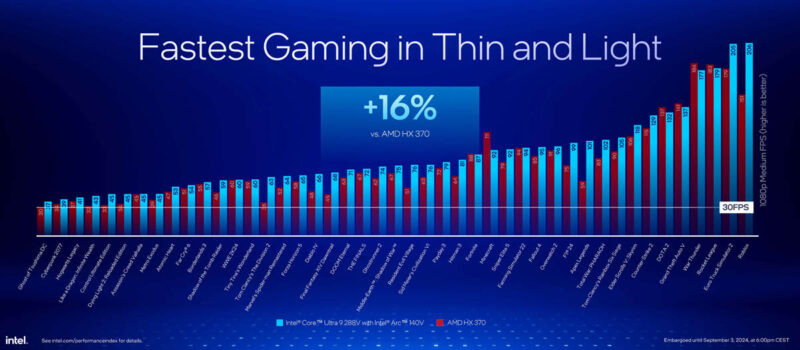

Intel says that its new graphics are faster than Qualcomm's, and that Qualcomm's platform cannot run all games.

Intel also says it is faster than the new AMD Ryzen AI 9 HX 370, but this is a much closer comparison. The chart below shows normal competition. The chart above is a symptom of what has made Qualcomm Windows PCs hard to recommend.

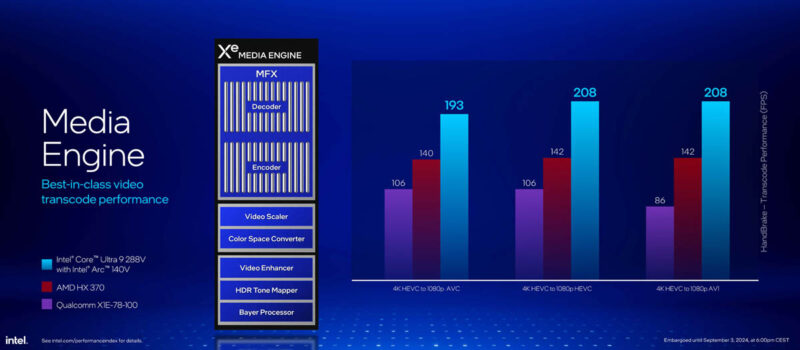

Intel is upgrading the Xe Media Engine with features like VVC (H.266). VVC is only a ~10% improvement over AV1 in file size, but it has other features, such as support for 360-degree panoramic viewports. VVC support is decode-only, not encode.

Here is the media engine performance comparison. Intel typically has a good media engine.

Here is the graphics summary for the new parts.

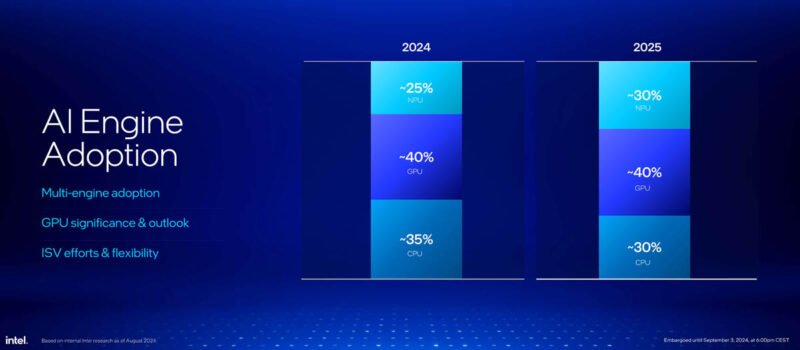

One cool slide Intel showed covered AI engine adoption. It highlights the growing importance of the NPU relative to the CPU.

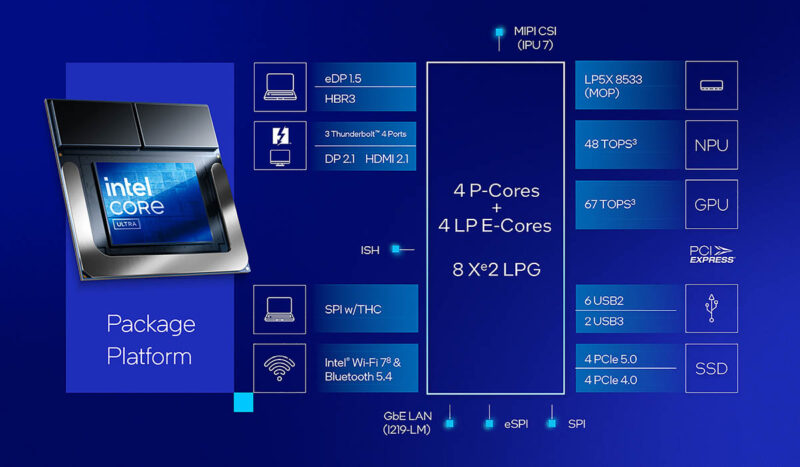

Intel says Lunar Lake delivers 120 platform TOPS: 67 TOPS from the iGPU, 48 TOPS from the NPU, and 5 TOPS from the CPU.
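The "platform TOPS" figure is the sum of the per-engine peak numbers Intel quotes. The short sketch below reproduces that total and shows each engine's share. It uses only the figures above and says nothing about how workloads are scheduled across engines in practice.

```python
# Per-engine peak TOPS figures as quoted by Intel for Lunar Lake.
engine_tops = {"iGPU": 67, "NPU": 48, "CPU": 5}

platform_tops = sum(engine_tops.values())
print(platform_tops)  # 120

# Share of the headline number contributed by each engine.
for engine, tops in engine_tops.items():
    print(f"{engine}: {tops / platform_tops:.0%}")
# iGPU: 56%
# NPU: 40%
# CPU: 4%
```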

Here is the Intel versus Qualcomm performance summary for creative applications. Features like Lightroom AI Denoise and Raw Processing, along with transcription and captioning, are ones we use every week at STH, and they can take a long time to run.

With the new platform, Intel is pushing WiFi 7 and Thunderbolt 4.

Here is the overall summary of the new chip. Memory tops out at 32GB, and there are 16GB models as well. If you want 64GB, that is not in the cards here.

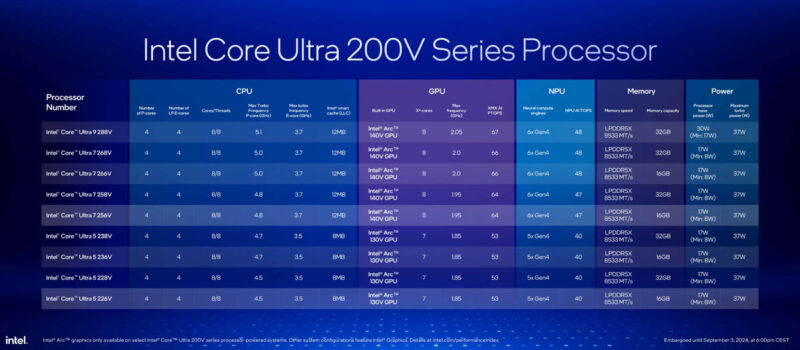

Here is the SKU stack. You will notice that SKUs often come in nearly identical pairs until you get to the Memory section, where one offers 16GB and the other 32GB. In previous generations, this might have been only five SKUs, but putting memory on-package adds a variant for each memory capacity.
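With on-package memory, capacity becomes part of the SKU rather than something the OEM chooses at build time. The SKU count therefore grows as a product of base configurations and memory options. The minimal sketch below uses five placeholder base configurations (echoing the "five SKUs" figure) and two capacities. The names are hypothetical, not Intel's model numbers, and a real stack need not fill every combination.

```python
from itertools import product

# Hypothetical base configurations (core, GPU, and clock bins); placeholder names.
base_configs = ["config_a", "config_b", "config_c", "config_d", "config_e"]
memory_options_gb = [16, 32]

# Every base configuration can be paired with every on-package memory capacity.
skus = [f"{cfg}-{mem}GB" for cfg, mem in product(base_configs, memory_options_gb)]

print(len(skus))  # 10
print(skus[:2])   # ['config_a-16GB', 'config_a-32GB']
```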

One could perhaps hope that Intel will simplify its SKU stack with the onboard memory offerings because it has suffered from SKU proliferation in the past.

Final Words

Lunar Lake for notebooks looks super cool. Intel seems to have touched just about everything in this processor. AMD still seems competitive, especially if you want a higher thread count. Qualcomm looks like it needs a new chip fast: when your platform has lingering compatibility challenges, simply being close on performance and power is not enough. Lunar Lake is also really interesting for folks who use low-power CPUs for non-traditional notebook tasks, such as mini PCs or mini servers (some of the folks in other divisions at Intel watch our mini PC series and do this). For them, Lunar Lake looks like a much better power and performance solution. At the same time, one loses the option of memory capacities above 32GB and of higher core counts. For those who assign cores to VMs or tasks, simply having fewer cores is not better. That is what is driving the P-core/E-core split on the server side.

Still, Lunar Lake seems to be Intel getting its mobile mojo back.

The memory change is interesting. Sure, it'll be lower power, so the laptops will last longer. But the 32GB cap is going to hurt the home server/mini PC market (probably a drop far below their notice in revenue). I'm guessing those buyers will be shunted off to AMD-based solutions.

The memory then is a 32GB cap on workstation-class laptops… unless there's some way to add SODIMM/LPCAMM2 as well as the 32GB? Maybe this is just for this SKU, and perhaps they will have parts that don't integrate the DRAM on package, but they likely won't be doing an on-die and off-die memory controller on the same chip anytime soon. I'd guess the latency shift between on-package and on-mainboard DRAM would be detrimental.

Still… people got used to having fixed-memory laptops, so I'd guess they won't care much about this on mainstream products. I've got 2x32GB SODIMMs for VMs on my laptop, so this won't be a product for me, but 90% of those I support wouldn't notice. A 64GB SKU would be welcome, however, and would move that to 99% of my end users. I'm assuming this is on-package and not on-die memory. Intel is still very good at on-package setups, better than TSMC from what I can tell. It would be fascinating to run not just DDR5, but also GDDR7X on package for the GPU. Most iGPUs are easily starved for memory bandwidth, so this should help that iGPU even with plain DDR5.

From what I’ve heard Arrow Lake based solutions will be the expandable memory options in the future.

Lunar Lake is thin-and-light only.

“Intel has a long history with Hyper-Threading, so removing the feature was unlikely to be one done at a whim.”

It wasn't done on a whim. Power efficiency is probably a nice side benefit, but I'm sure one of the primary drivers was security. Remove HT, remove most of the attack vectors on modern Intel CPUs/SoCs.

Intel is moving in the right direction, especially with on-package memory, and high-clocked at that. AMD should be doing this as well. Most notebooks have soldered memory anyway, and 32GB will cover almost all of the market. Still, there are the silly E-cores. I suppose a 4-core CPU just would not sell, so the marketing-cores are still present.

200V gives them a lot more ceiling for degradation than 1.5V does.

I’m assuming they will still offer expandable RAM in the form of SODIMMs or LPCAMM2 on Arrow Lake-class CPUs. That means desktops, mini PCs, or high performance mobile CPUs like the HX series.

Very interesting. Timing-wise Intel really needs this, but I can’t help but think that the market would fare better if more software developers were tempted/forced to consider ARM as an option. With Lunar Lake they can just continue business as usual and delay the switch and Intel/MS can cling to their majority market share for a while longer.

@MOSFET, Just wait until they learn about HVDC! =)

This looks very interesting. Many AMD-based (thin and light) devices used soldered LPDDR5X to increase signal integrity and achieve higher memory clocks and this just brings that to the next level.

Funnily enough, AMD used to build embedded GPUs with the GDDR5 memory packages on the interposer, right next to the GPU, just like here, back in 2011. This was called the Radeon E6760. They could do something exciting with a 12-16-core part with a large integrated GPU and a 256-bit LPDDR5X memory bus with the chips on the interposer again.

“Intel said that removing HT helped with the overall integration of the chip and, therefore, it was the right decision to remove it.”

Not to mention that hyperthreading is the source of most if not all of the Spectre-type exploits. No more performance sapping workarounds.