In San Francisco, California, today, Intel announced a new big data platform: the third release of the Intel Distribution for Apache Hadoop software. Intel sees Hadoop as a major application for its Xeon and Atom processors in the near future as data volumes grow rapidly. One statistic Intel’s Boyd Davis highlighted at the event is that the world now generates 1PB of data every 11 seconds.
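To put the 1PB-every-11-seconds figure in perspective, here is a small back-of-the-envelope sketch that converts it into more familiar rates. It assumes decimal (SI) units (1PB = 10^15 bytes) and a 365-day year; the `data_rates` helper is purely illustrative and not something Intel presented.

```python
# Hypothetical helper: convert "1 PB every N seconds" into other rates.
# Assumes decimal (SI) units: 1 PB = 10**15 bytes, 1 TB = 10**12 bytes, etc.
TB, PB, EB, ZB = 10**12, 10**15, 10**18, 10**21
SECONDS_PER_DAY = 24 * 60 * 60
SECONDS_PER_YEAR = 365 * SECONDS_PER_DAY  # ignores leap years


def data_rates(seconds_per_pb: float = 11.0) -> dict:
    """Return TB/s, EB/day and ZB/year for one petabyte every `seconds_per_pb` seconds."""
    bytes_per_second = PB / seconds_per_pb
    return {
        "TB_per_s": bytes_per_second / TB,
        "EB_per_day": bytes_per_second * SECONDS_PER_DAY / EB,
        "ZB_per_year": bytes_per_second * SECONDS_PER_YEAR / ZB,
    }


if __name__ == "__main__":
    # Print each rate rounded to two decimal places.
    for unit, value in data_rates().items():
        print(f"{value:.2f} {unit}")
```

Under these assumptions, the statistic works out to roughly 91TB every second, or about 7.85EB per day.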

Intel’s goal is for its solutions, from processors to solid state disks to networking, to help process and gain insights from that data. Big data is driven to a large extent by open source technology. Lustre, Hadoop, and OpenStack are major players democratizing big data applications, and Intel is using these technologies to build a platform to tackle the big data problem.

Intel first shared its view and goals for the Intel Distribution for Apache Hadoop Software v3.

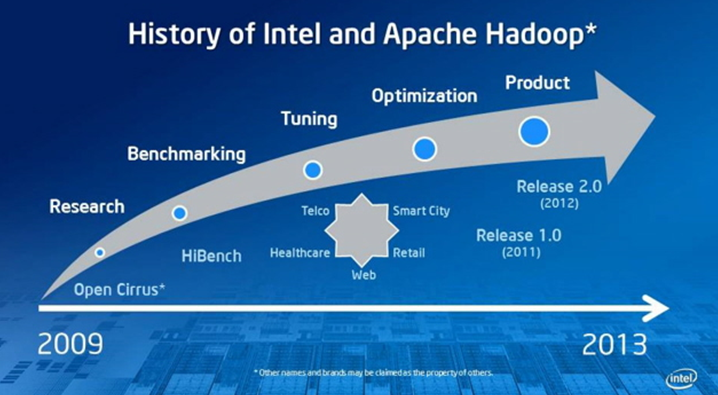

Intel is looking to provide performance, security and resiliency in the Apache Hadoop framework. The company also shared a brief roadmap of its work in this area. One thing Boyd Davis highlighted was that this big data effort was largely driven by requests from, and support by, customers in China such as China Mobile and China Telecom.

Intel wants to change the perception of Hadoop as a batch-only, non-real-time processing framework and extend it to real-time analytics. The company also intends to play horizontally with a consistent framework rather than going deep into application-specific or industry vertical solutions.

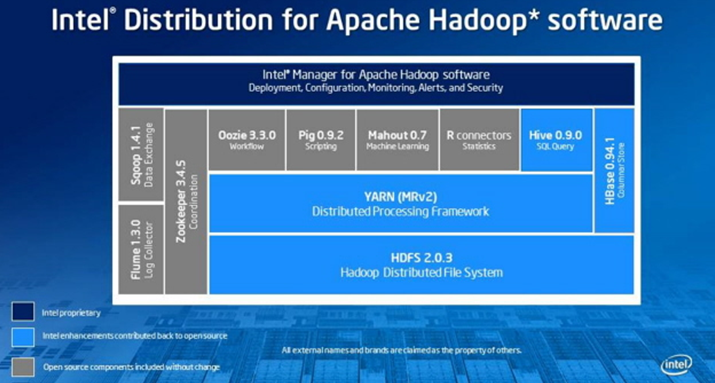

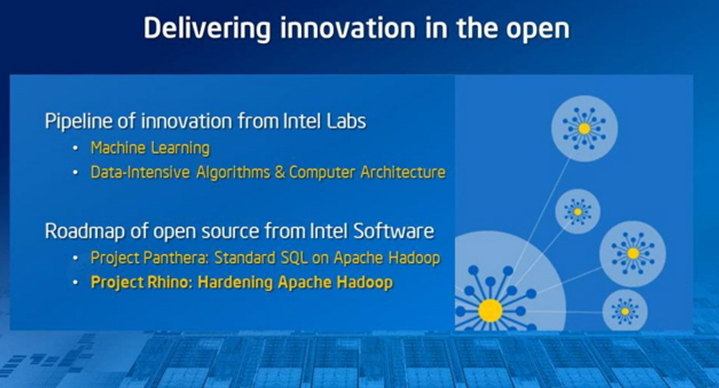

Intel is planning to release almost everything to the open source community. The one piece Intel is keeping proprietary is Intel Manager for Apache Hadoop, its management framework.

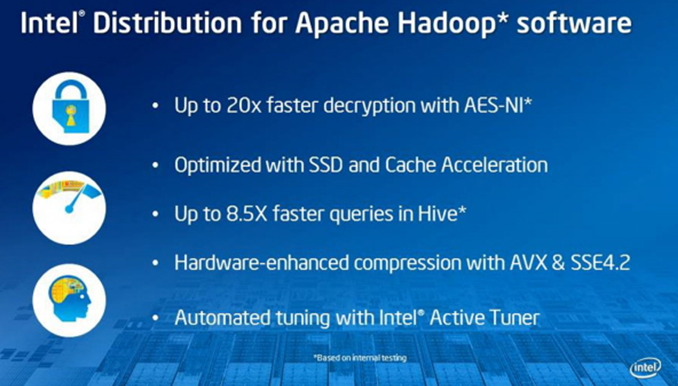

Intel highlighted several features it uses to accelerate Hadoop: AES encryption acceleration in the Hadoop framework using Intel AES-NI, available on the Intel Xeon 5600 series and later; an SSD caching solution; and Intel Active Tuner, which uses machine learning to run “Hadoop on Hadoop” and suggest performance tweaks. Intel stated that this tuning could yield as much as a 30% performance improvement.

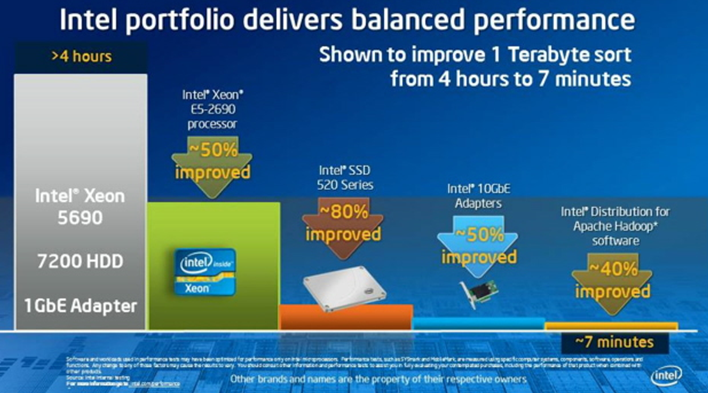

Intel showed how its portfolio of technologies accelerates the Hadoop stack. Here is Intel’s breakdown of the performance gained from each component upgrade in the ten-machine cluster.

Intel has a number of projects in the works, including adding standard SQL support to Hadoop and securing deployments with AES encryption.

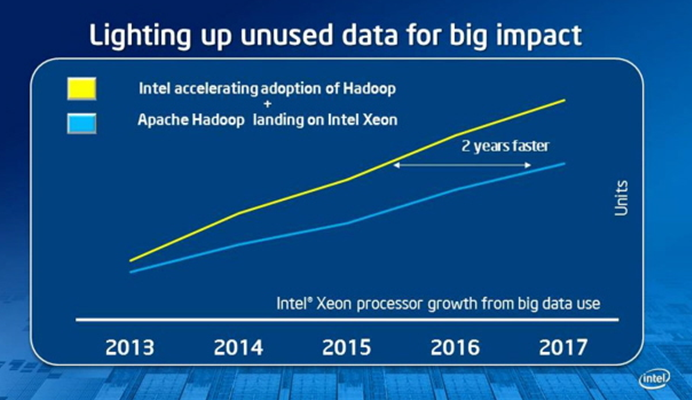

Intel’s goal is to make Hadoop more accessible and thereby increase its use across the industry. Of course, Intel also hopes that increasing adoption of Hadoop clusters will help it sell more chips.


Intel Big Data and Apache Hadoop Conclusion

Overall, STH is seeing more users than ever building cloud computing labs for Hadoop and big data applications. Intel is clearly betting that by pushing Hadoop forward with its technologies, it can keep ARM vendors and AMD out of a growing market that will consume many CPUs. For those unfamiliar, the goal of these applications is to sort through the seas of information we now generate and turn raw data into useful information. This is seen as a growing market segment that will require far more computing power than the world currently has. The goal is to transform that information in real time while coping with ever-growing volumes of data.