Inspur NF8260M5 Power Consumption

Our Inspur NF8260M5 test server used a quadruple power supply configuration, and we wanted to measure how it performed using the Intel Xeon Platinum 8276L CPUs.

- Idle: 0.77kW

- STH 70% Load: 1.1kW

- 100% Load: 1.3kW

- Maximum Recorded: 1.5kW

Compared to dual-socket systems this may seem high, but one must remember that this is a 112-core system with 6.75TB of memory in its DIMM channels and 24 storage devices. This is a very large system indeed.
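For a rough sense of scale, the readings above can be divided by the core count. This is only a back-of-the-envelope sketch: the `watts_per_core` helper is a hypothetical name, and the result is whole-system wall power per core (memory, storage, and fans included), not a CPU-only figure.

```python
# Power readings from the list above, converted from kW to W.
READINGS_W = {
    "Idle": 770,
    "STH 70% Load": 1100,
    "100% Load": 1300,
    "Maximum Recorded": 1500,
}
CORES = 112  # core count stated in the review


def watts_per_core(total_watts: float, cores: int) -> float:
    """Hypothetical helper: whole-system wall power divided by core count."""
    return total_watts / cores


for label, watts in READINGS_W.items():
    print(f"{label}: {watts_per_core(watts, CORES):.1f} W per core")
```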

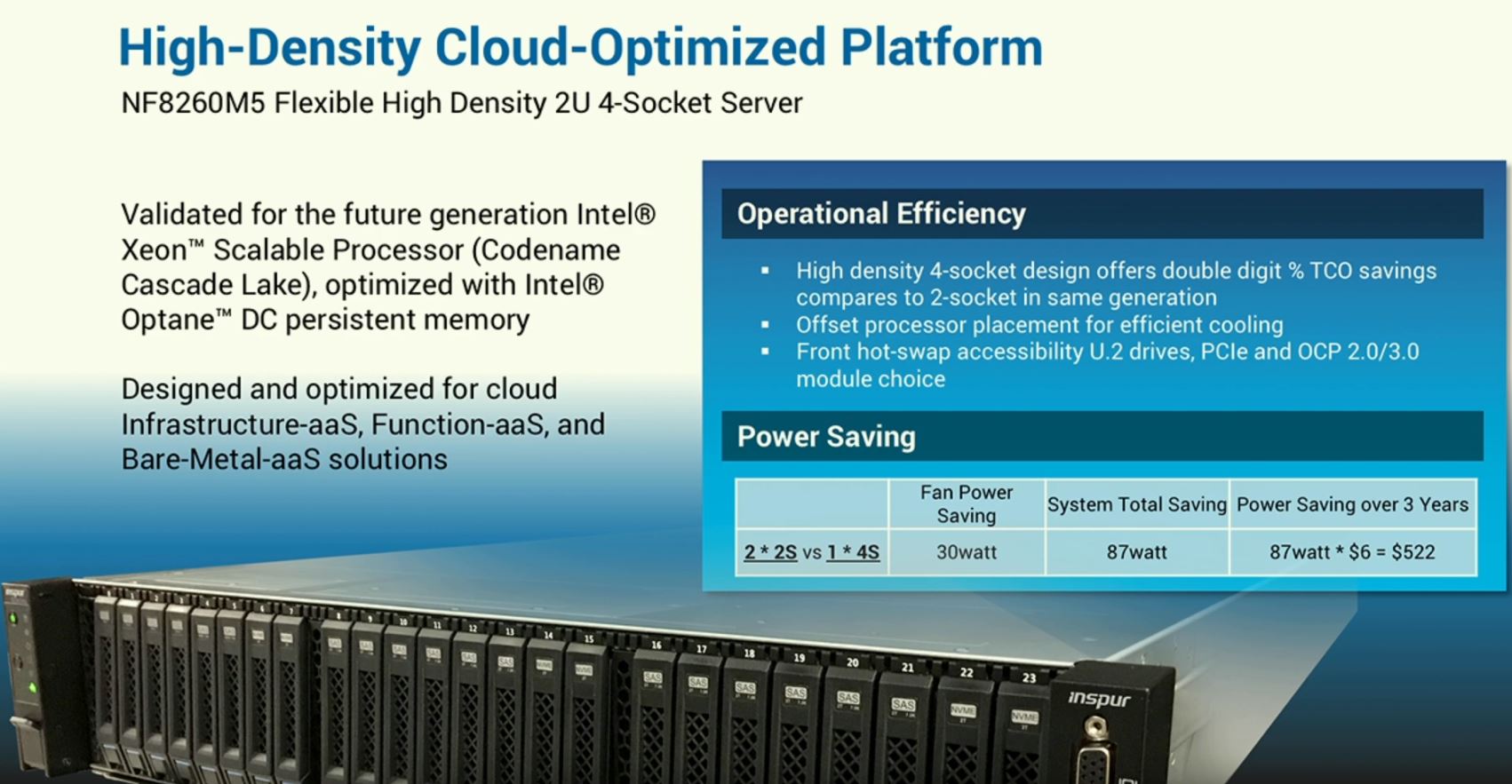

Inspur claims that the 4-socket design saves around 87W over two dual-socket servers, yielding around $522 in three-year TCO savings. We were told at OCP Summit 2019 that this $6 per watt figure is from one of Inspur’s CSP customers. For 2U4N testing, we have a methodology to validate this type of claim; see How We Test 2U 4-Node System Power Consumption. We do not have that for four-socket servers but may add this in the future.
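The two numbers in Inspur's claim are consistent with each other, as a quick check shows. This is a minimal sketch that assumes the $6 per watt figure already covers the full three-year period; the `tco_savings` function name is ours, not Inspur's.

```python
def tco_savings(watts_saved: float, dollars_per_watt: float) -> float:
    """Hypothetical helper: TCO savings as watts saved times a per-watt cost."""
    return watts_saved * dollars_per_watt


# 87W saved at the quoted $6 per watt over three years.
savings = tco_savings(87, 6)
print(f"Three-year TCO savings: ${savings:.0f}")  # prints "Three-year TCO savings: $522"
```

A different per-watt cost, for example from another facility's power and cooling rates, would scale the result linearly.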

Note these results were taken using a 208V Schneider Electric / APC PDU at 17.7C and 72% RH. Our testing window shown here had a +/- 0.3C and +/- 2% RH variance.

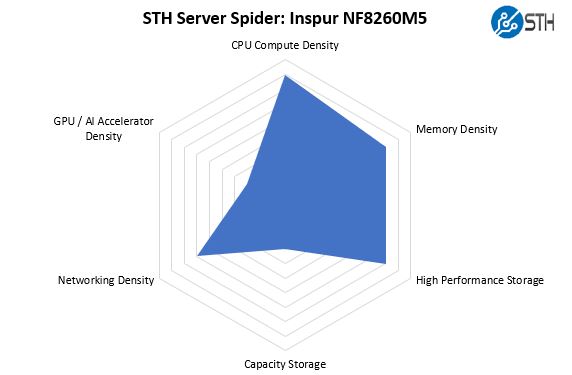

STH Server Spider: Inspur NF8260M5

In the second half of 2018, we introduced the STH Server Spider as a quick reference to where a server system’s aptitude lies. Our goal is to start giving a quick visual depiction of the types of parameters that a server is targeted at.

As you can see, the Inspur Systems NF8260M5 is designed to provide a large node in a 2U form factor. While 2U4N designs offer more compute density in terms of cores per U, the 2U 4-socket design is very dense for a 4-socket server. This gives 48 DIMM slots for DDR4 or Optane DCPMM in a single 2U chassis. The Inspur NF8260M5 has the ability to house GPUs internally, and externally via expansion chassis. In 2U it does not have the same GPU capacity as some dedicated GPU offerings like the Inspur Systems NF5468M5 4U 8x GPU server we reviewed.

Final Words

There are two aspects to this review. The first is that the Inspur Systems NF8260M5 offers a strong 4-socket platform for the CSP market. After using the server for some time, we see how Inspur and Intel designed the system for broader use. Using industry-standard BMC and management functionality, OCP NICs instead of proprietary NICs, and standard components, we see this as very attractive to a class of customers who want to use open platforms to manage their infrastructure. For example, if you wanted an OpenStack private cloud or Kubernetes cluster with 4-socket nodes, this is the type of design you would want.

We found the performance to be excellent and the server to be highly serviceable. Inspur has a vision for this platform well beyond the single node that includes external JBOFs and JBOGs for building massive x86 nodes. We think that the OCP community will find interesting uses for the Crane Mountain platform.

Good review but a huge market for Optane-equipped systems is databases.

Can you at least put up some Redis numbers or other DB-centric numbers?

Yep. MongoDB and Redis, nginx are the most important. Maybe some php benches with caching & etc…

From what I read that’s what they’re doing using memory mode and redis in VMs on the last chart. Maybe app direct next time?

Can you guys do more OCP server reviews? Nobody else is doing anything with them outside of hyperscale. I’d like to know when they’re ready for more than super 7 deployment

It’s hard. What if we wanted to buy 2 of these or 10? I can’t here with Inspur.

I’m also for having STH do OCP server reviews. Ya’ll do the most server reviews and OCP is growing to the point that maybe we’d have to deploy in 36 months and start planning in the next year or two.

Why is half the ram inaccessible?

How’s it inaccessible? You can see it all clearly there?