Inspur NF8260M5 Storage Performance

We tested a few different NVMe storage configurations because NVMe storage is one of the Inspur Systems NF8260M5's key differentiation points. Previous-generation servers often used a single NVMe storage device, if any at all. There are eight SAS3/SATA bays available, but given the system's design we assume those are used for OS and bulk storage. Instead, we are testing the four NVMe drives that will likely be used for high-performance storage.

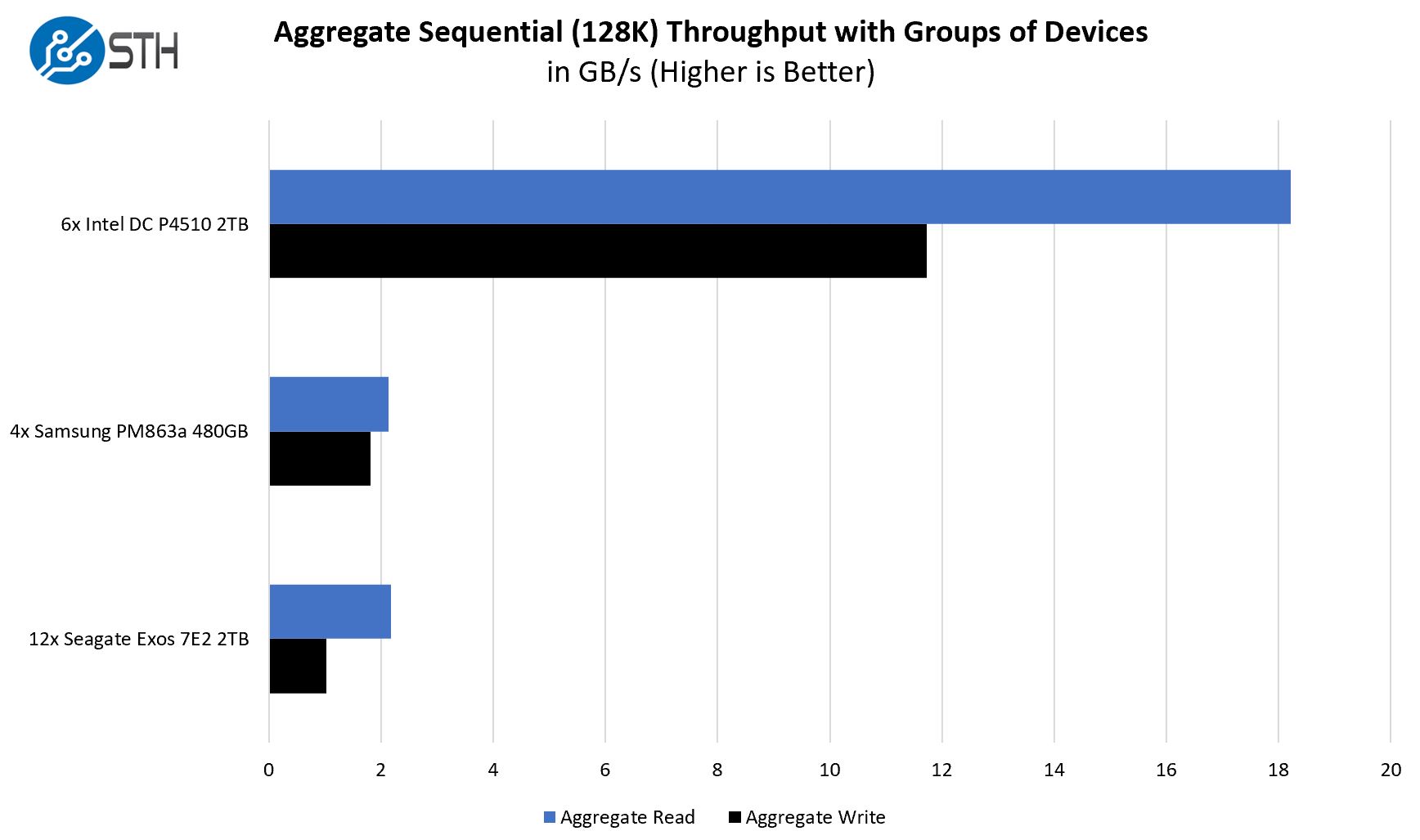

Here one can see the impressive performance of the NVMe SSDs. In the context of arrays, NVMe SSDs clearly outpace their SAS and SATA counterparts while still offering excellent capacity.
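For a rough sense of why arrays favor NVMe, here is a back-of-the-envelope sketch of interface bandwidth ceilings. It assumes the NVMe drives are PCIe 3.0 x4 devices (the link width is not stated here), counts only line encoding (8b/10b for SATA III and SAS3, 128b/130b for PCIe 3.0), and ignores protocol overhead, drive limits, and the HBA or PCIe uplink behind the bays. The results are theoretical ceilings, not measured results.

```python
# Back-of-the-envelope interface ceilings. Assumptions: SATA III and SAS3 links
# use 8b/10b encoding; NVMe drives are PCIe 3.0 x4 (8 GT/s per lane, 128b/130b).
# Protocol overhead, drive limits, and HBA/PCIe uplinks are ignored.
INTERFACES = {
    "SATA III": (6.0, 8 / 10),  # (line rate in Gb/s, encoding efficiency)
    "SAS3": (12.0, 8 / 10),
    "NVMe (PCIe 3.0 x4)": (4 * 8.0, 128 / 130),
}


def per_drive_mb_s(interface: str) -> float:
    """Usable link bandwidth for one drive, in MB/s (decimal units)."""
    line_rate_gbps, efficiency = INTERFACES[interface]
    return line_rate_gbps * efficiency * 1000 / 8


def array_ceiling_gb_s(interface: str, drives: int) -> float:
    """Upper bound for a striped array: drive count x per-drive link bandwidth, in GB/s."""
    return drives * per_drive_mb_s(interface) / 1000


if __name__ == "__main__":
    # Drive counts mirror the eight SAS3/SATA bays and four NVMe drives in this system.
    for interface, drives in (("SATA III", 8), ("SAS3", 8), ("NVMe (PCIe 3.0 x4)", 4)):
        print(f"{drives} x {interface}: {array_ceiling_gb_s(interface, drives):.2f} GB/s")
```

On link bandwidth alone, four NVMe drives (about 15.75 GB/s) already clear eight SAS3 drives (9.60 GB/s) and eight SATA drives (4.80 GB/s).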

With the Inspur NF8260M5's flexible storage backplane and rear I/O riser configuration, one can configure the system to host a large number of NVMe SSDs. In the coming months, NVMe SSDs will continue to displace both SATA and SAS3 alternatives, which leaves this chassis well equipped for where the server storage market is heading.

As shown earlier in this review, we are also testing this system with Optane DCPMM. In AppDirect mode, the DCPMMs can yield well over 100GB/s of storage throughput. We focused our performance testing on Memory Mode; however, over the next 12 to 18 months we expect more applications to be able to use DCPMM in AppDirect mode, which would greatly enhance the storage story of the NF8260M5.
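To illustrate what "using DCPMM in AppDirect mode" means for an application, here is a minimal Python sketch of the common pattern: memory-map a file on a DAX-mounted filesystem and update it with ordinary stores instead of block I/O. The path `/mnt/pmem0/example.dat` and the helper `write_record` are hypothetical, and the sketch assumes a DCPMM namespace in fsdax mode mounted with `-o dax`. Production code would more typically use PMDK's libpmem, which can flush CPU caches from user space rather than calling `msync()`.

```python
import mmap
import os

# Hypothetical location: a file on a filesystem mounted with `-o dax` on a DCPMM
# namespace configured in fsdax mode (AppDirect). On an ordinary filesystem the
# same code still runs, but stores go through the page cache instead of PMem.
DEFAULT_PATH = "/mnt/pmem0/example.dat"


def write_record(payload: bytes, offset: int = 0, path: str = DEFAULT_PATH,
                 size: int = 4096) -> bytes:
    """Store `payload` at `offset` in a memory-mapped file and flush it.

    Returns the bytes read back through the mapping.
    """
    if offset < 0 or offset + len(payload) > size:
        raise ValueError("payload does not fit in the mapped region")
    fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o644)
    try:
        # The mapping must not extend past end of file; grow the file if needed
        # (never shrink it, so existing data beyond `size` is preserved).
        if os.fstat(fd).st_size < size:
            os.ftruncate(fd, size)
        with mmap.mmap(fd, size) as mm:
            mm[offset:offset + len(payload)] = payload  # ordinary memory stores
            mm.flush()  # msync(): ask the kernel to persist the mapped range
            return bytes(mm[offset:offset + len(payload)])
    finally:
        os.close(fd)
```

On a DAX mount, a call such as `write_record(b"hello", offset=16)` updates persistent memory directly, with no read/write system calls on the data path.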

Inspur NF8260M5 Networking Performance

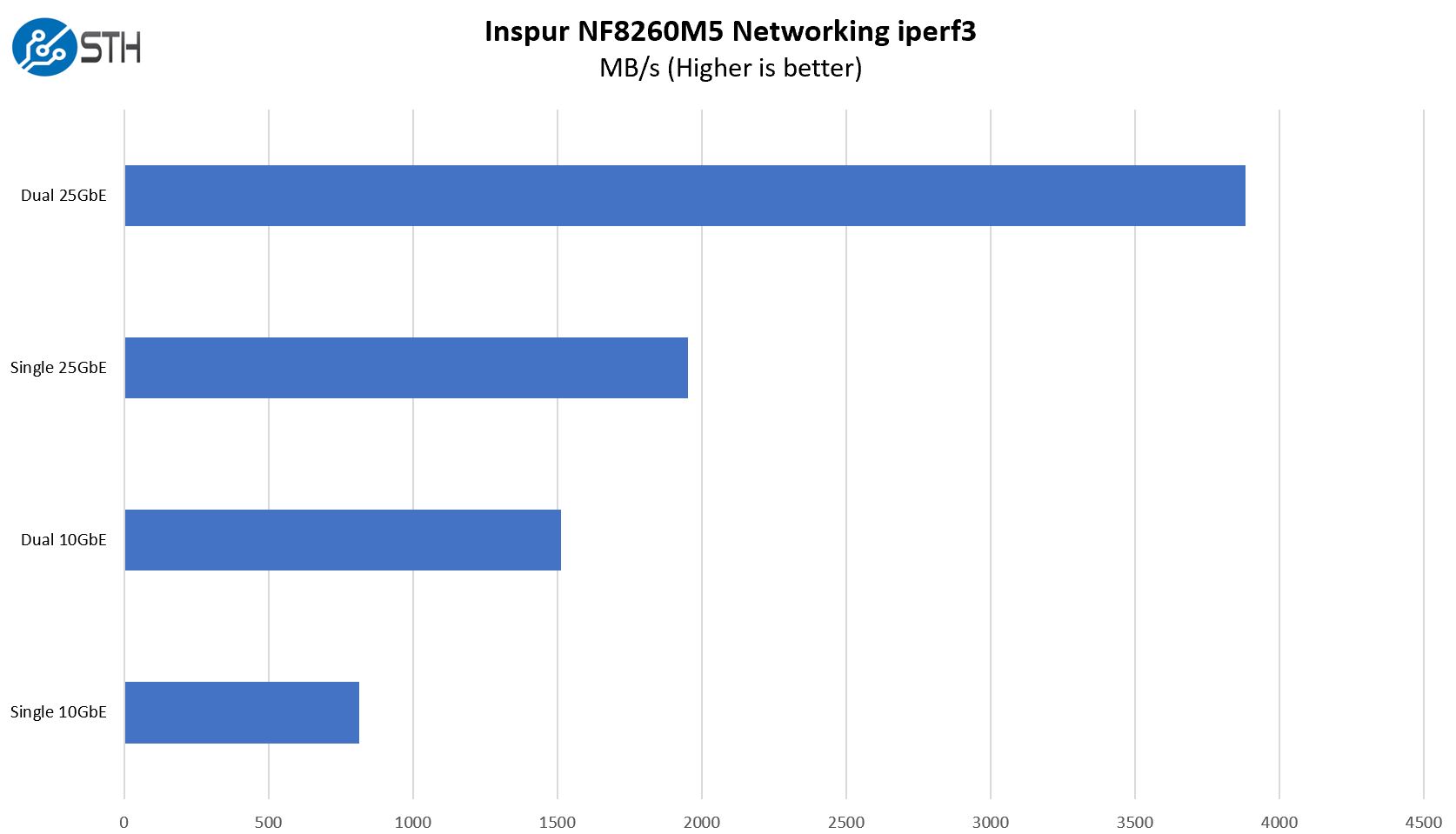

We used the Inspur Systems NF8260M5 with a dual-port Mellanox ConnectX-4 Lx 25GbE OCP NIC. The server itself supports riser configurations for additional networking; this is simply all we could fit in our test system.

Networking is an important aspect, as CSPs are commonly deploying 25GbE infrastructure, and many deep learning clusters use EDR InfiniBand as their fabric of choice, or 100GbE, to move data from the network to GPUs. We would be tempted to move to 100GbE if this system had GPUs attached and more riser slots.
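The bandwidth gap between the dual-port 25GbE OCP NIC and a 100GbE or EDR InfiniBand upgrade is easy to quantify. This sketch uses nominal per-port data rates and ignores framing and protocol overhead; the link table and the `aggregate_gb_s` helper are illustrative assumptions, not anything from Inspur or Mellanox.

```python
# Nominal per-port data rates in Gb/s.
# EDR InfiniBand 4x carries 100 Gb/s of data (4 lanes x 25 Gb/s after 64b/66b).
LINK_GBPS = {
    "25GbE": 25.0,
    "100GbE": 100.0,
    "EDR InfiniBand 4x": 100.0,
}


def aggregate_gb_s(link: str, ports: int) -> float:
    """Raw aggregate bandwidth across `ports` links in GB/s, before overhead."""
    if ports < 1:
        raise ValueError("ports must be at least 1")
    return LINK_GBPS[link] * ports / 8


if __name__ == "__main__":
    print(f"2 x 25GbE (test NIC): {aggregate_gb_s('25GbE', 2):.2f} GB/s")   # 6.25 GB/s
    print(f"1 x 100GbE: {aggregate_gb_s('100GbE', 1):.2f} GB/s")            # 12.50 GB/s
    print(f"1 x EDR InfiniBand: {aggregate_gb_s('EDR InfiniBand 4x', 1):.2f} GB/s")  # 12.50 GB/s
```

The 6.25 GB/s figure for two 25GbE ports assumes traffic is spread across both; with a typical LACP bond, any single flow is hashed onto one port and tops out at 25 Gb/s.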

Next, we are going to take a look at the Inspur Systems NF8260M5 power consumption before looking at the STH Server Spider for the system and concluding with our final words.

Good review, but databases are a huge market for Optane-equipped systems.

Can you at least put up some Redis numbers or other DB-centric numbers?

Yep. MongoDB, Redis, and nginx are the most important. Maybe some PHP benchmarks with caching, etc.

From what I read, that's what they're doing with Memory Mode and Redis in VMs on the last chart. Maybe AppDirect next time?

Can you guys do more OCP server reviews? Nobody else is doing anything with them outside of hyperscale. I'd like to know when they're ready for more than Super 7 deployments.

It’s hard. What if we wanted to buy 2 of these or 10? I can’t here with Inspur.

I'm also for having STH do OCP server reviews. Y'all do the most server reviews, and OCP is growing to the point that we might have to deploy it in 36 months and start planning in the next year or two.

Why is half the ram inaccessible?

How’s it inaccessible? You can see it all clearly there?