The Inspur Systems NF8260M5 is a four-socket Intel Xeon server with a unique twist. Inspur and Intel jointly developed the "Crane Mountain" platform specifically for the cloud service provider (CSP) market. While many of the four-socket systems we review are developed for enterprises, this is the first 4P server we have seen designed specifically for CSPs. Inspur and Intel are going a step further by contributing the design to the Open Compute Project (OCP) community so that others can build on it.

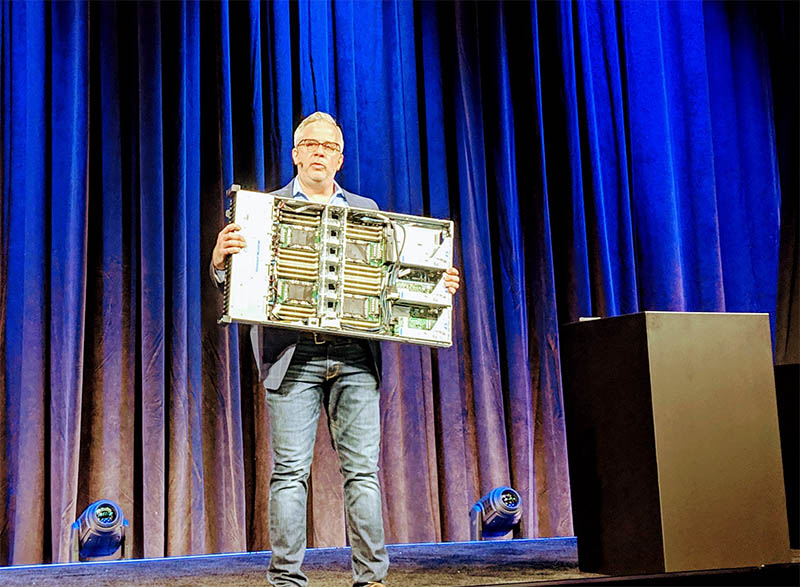

Our introduction to the Inspur Systems NF8260M5 came from Intel's Jason Waxman, who held a system up on stage during Intel's keynote at OCP Summit 2019. He noted at the time that the platform would support 2nd Gen Intel Xeon Scalable processors and Intel Optane DC Persistent Memory.

After the show, we quickly got one into our test lab, and this review shows what the OCP contribution has to offer.

Inspur Systems NF8260M5 Overview

Starting with the packaging, this is a 2U server with 24x 2.5″ hot-swap bays up front. We will discuss storage shortly; however, the form factor itself is a big deal. Previous-generation Intel Xeon E7 series quad-socket servers were often 4U designs. Newer quad-socket designs like the Inspur Systems NF8260M5 are 2U, effectively doubling socket density per rack unit for these scale-up platforms.
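To put the density point in numbers, here is a quick sketch comparing sockets per rack for a 4U versus a 2U quad-socket chassis. The 42U rack height and the assumption that every rack unit holds a server (no switches, PDUs, or cable management) are ours, for illustration only.

```python
# Assumption (illustrative only): a 42U rack filled entirely with servers,
# ignoring space needed for switches, PDUs, and cable management.
RACK_UNITS = 42
SOCKETS_PER_SERVER = 4  # quad-socket systems


def sockets_per_rack(server_height_u: int, rack_units: int = RACK_UNITS) -> int:
    """Return how many CPU sockets fit in a rack for a given chassis height."""
    servers = rack_units // server_height_u  # whole servers only
    return servers * SOCKETS_PER_SERVER


four_u = sockets_per_rack(4)  # older Xeon E7-style 4U quad-socket chassis
two_u = sockets_per_rack(2)   # 2U quad-socket chassis such as the NF8260M5

print(f"4U: {four_u} sockets, 2U: {two_u} sockets")  # 4U: 40 sockets, 2U: 84 sockets
```

Per rack unit the gain is exactly 2x; the 84 versus 40 result lands slightly above 2x only because 42 is not evenly divisible by 4.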

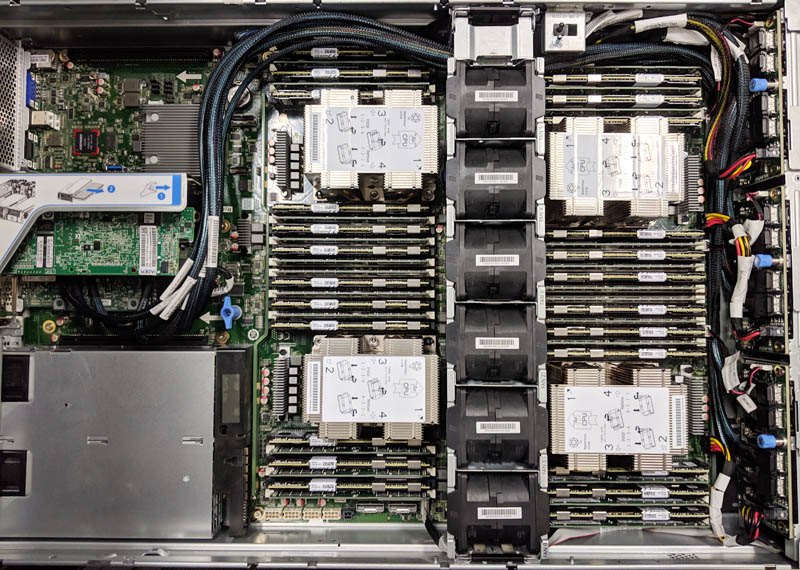

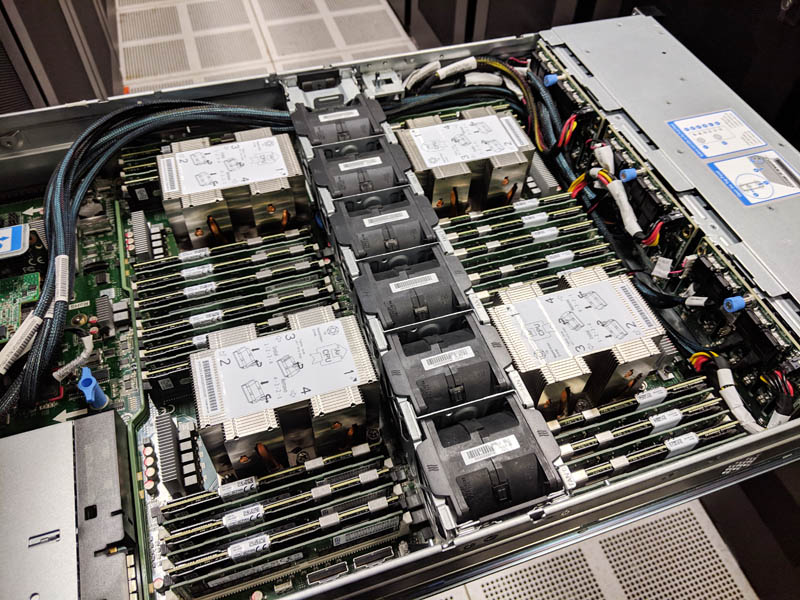

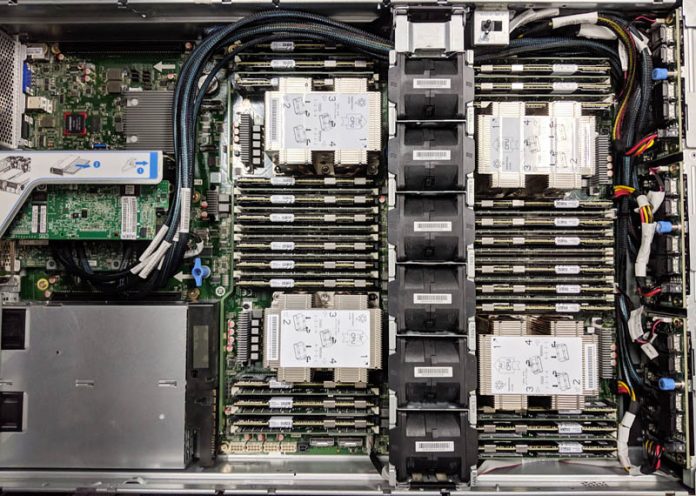

Inside, we find the heart of the system: four Intel Xeon Scalable CPUs. These can be either first or second generation Intel Xeon Scalable processors; in our test system, we used a range of second generation options. Higher-end SKUs top out at 28 cores and 56 threads, meaning the entire system can handle up to 112 cores and 224 threads.
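The system-wide totals follow directly from the per-socket counts. This small sketch just makes the multiplication explicit, assuming Hyper-Threading is enabled (two threads per core).

```python
SOCKETS = 4
CORES_PER_CPU = 28    # top-end SKU core count cited in the text
THREADS_PER_CORE = 2  # assumes Hyper-Threading is enabled


def system_totals(sockets: int, cores_per_cpu: int, threads_per_core: int) -> tuple[int, int]:
    """Return (total cores, total hardware threads) across all sockets."""
    cores = sockets * cores_per_cpu
    return cores, cores * threads_per_core


total_cores, total_threads = system_totals(SOCKETS, CORES_PER_CPU, THREADS_PER_CORE)
print(f"{total_cores} cores, {total_threads} threads")  # 112 cores, 224 threads
```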

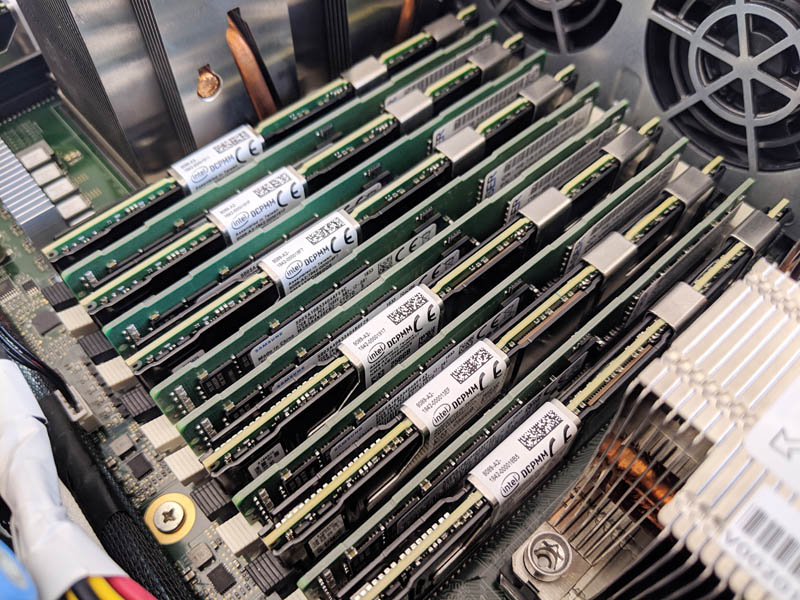

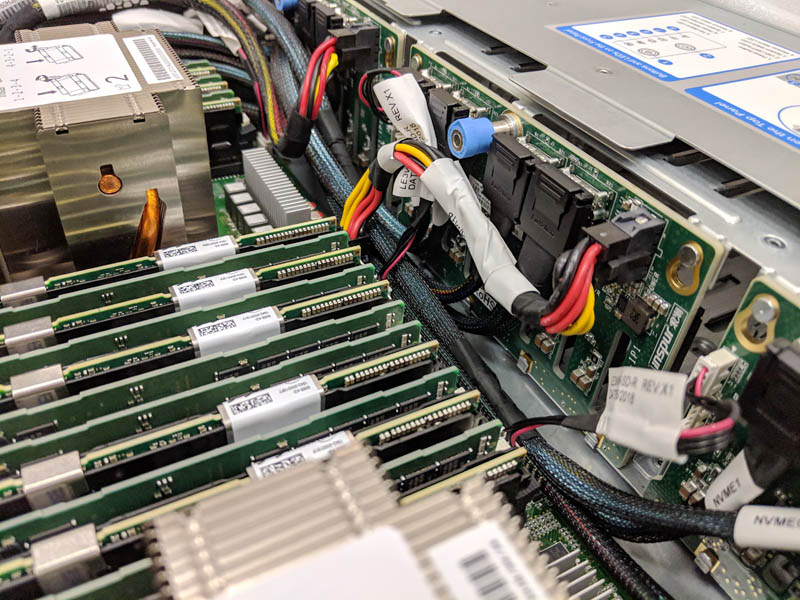

Each CPU is flanked by up to twelve DIMM slots, for 48 DIMM slots in total. Using 128GB LRDIMMs, the system supports up to 6TB of memory, or up to 12TB with the 256GB LRDIMMs now coming to market. The system also supports Intel Optane DC Persistent Memory Modules (Optane DCPMM).

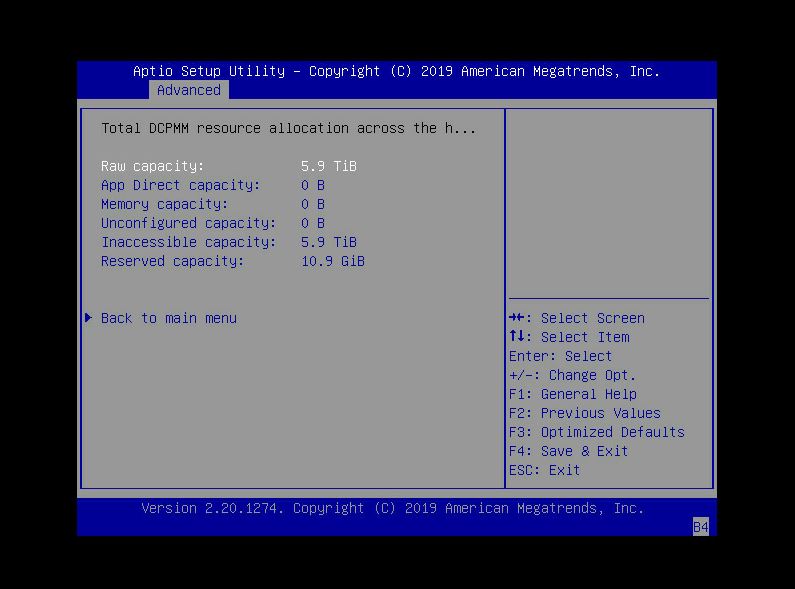

These modules combine the persistence of storage such as NVMe SSDs with higher speed and lower latency, since they sit in the DRAM channels. Our system, for example, has 24x 32GB DDR4 RDIMMs along with 24x 256GB Optane DCPMMs for a combined 6.75TB of memory. That is absolutely massive.
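The capacity figures above are simple sums over the populated slots. The sketch below reproduces them; the `DimmGroup` type and the configuration labels are our own illustrative names, and capacities are reported in binary terabytes (1TB = 1024GB), which is how the 6TB, 12TB, and 6.75TB figures work out.

```python
from dataclasses import dataclass


@dataclass
class DimmGroup:
    """A set of identical modules (hypothetical helper type for this sketch)."""
    count: int
    size_gb: int
    kind: str


def total_tb(groups: list[DimmGroup]) -> float:
    """Sum installed capacity across module groups, in binary TB (1024GB)."""
    return sum(g.count * g.size_gb for g in groups) / 1024


DIMM_SLOTS = 4 * 12  # four CPUs, twelve slots each

configs = {
    "48x 128GB LRDIMM": [DimmGroup(DIMM_SLOTS, 128, "LRDIMM")],
    "48x 256GB LRDIMM": [DimmGroup(DIMM_SLOTS, 256, "LRDIMM")],
    "Review unit": [
        DimmGroup(24, 32, "DDR4 RDIMM"),
        DimmGroup(24, 256, "Optane DCPMM"),
    ],
}

for name, groups in configs.items():
    print(f"{name}: {total_tb(groups):.2f}TB")
# 48x 128GB LRDIMM: 6.00TB
# 48x 256GB LRDIMM: 12.00TB
# Review unit: 6.75TB
```

The 6.75TB figure is raw installed capacity. If all of the DCPMM capacity is provisioned in Memory Mode, the DRAM acts as a cache in front of the Optane modules, so the operating system would see the 6TB of Optane capacity rather than the full sum.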

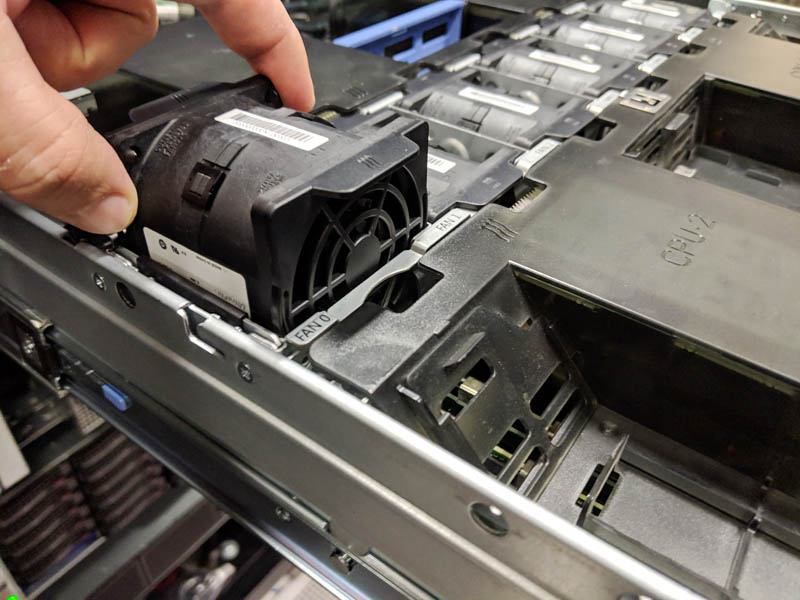

In the middle of the chassis, we find six hot-swap fans cooling this massive system.

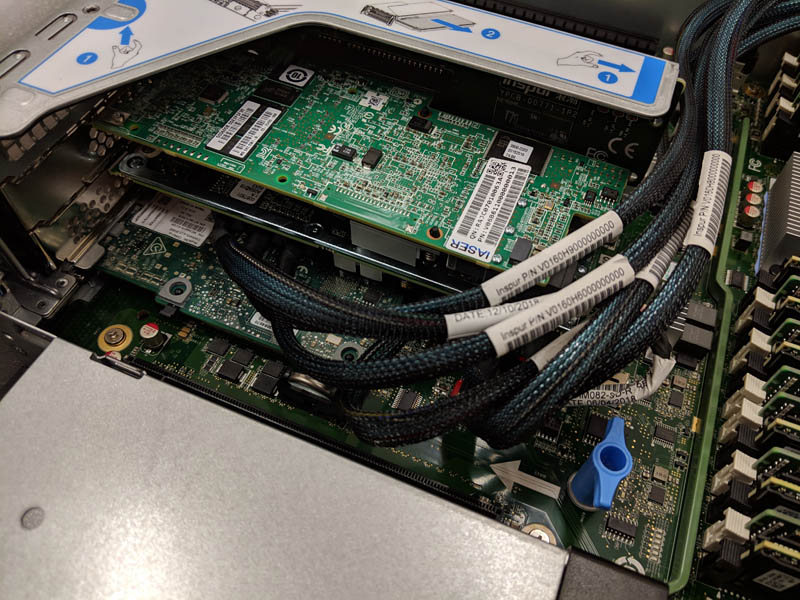

Rear I/O is handled mainly via risers, with three sets of risers across the chassis. The Inspur Systems NF8260M5 also has an OCP mezzanine slot for networking that does not use the risers. Our single-riser configuration was enough to handle the full storage configuration of our system.

Storage is split into three sets of eight hot-swap bays, each serviced by its own PCB. This lets the NF8260M5 use NVMe, SAS/SATA, or combined backplanes depending on configuration needs.

Power is provided by two power supplies. Our test unit shipped with 800W PSUs, which do not provide redundancy for our configuration. Inspur offers 1.3kW, 1.6kW, and 2kW versions, which we would recommend if you are configuring a similar system.
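With two PSUs, a configuration is only redundant if a single PSU can carry the whole system load after the other fails. The sketch below checks that condition for the PSU ratings mentioned above against a hypothetical 1,100W load; that load figure is our assumption for illustration, not a measurement from this review.

```python
# PSU ratings mentioned in the text, in watts.
PSU_RATINGS_W = [800, 1300, 1600, 2000]


def is_redundant(psu_rating_w: int, load_w: float, psu_count: int = 2) -> bool:
    """N+1 check: the remaining PSUs must carry the full load after one fails."""
    return (psu_count - 1) * psu_rating_w >= load_w


# Hypothetical load, for illustration only (not measured on our system).
hypothetical_load_w = 1100.0

for rating in PSU_RATINGS_W:
    print(f"{rating}W: redundant={is_redundant(rating, hypothetical_load_w)}")
# 800W: redundant=False
# 1300W: redundant=True
# 1600W: redundant=True
# 2000W: redundant=True
```

In practice, one would size against the measured peak draw of the actual configuration, plus some headroom, rather than a single assumed number.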

Aside from the expansion risers and the OCP mezzanine slot, rear I/O is limited to a management RJ-45 network port, two USB 3.0 ports, and legacy VGA and serial ports.

On a quick usability note, the Inspur NF8260M5 can be serviced while extended on its rails. Some lower-end units must be removed from the rack entirely for service.

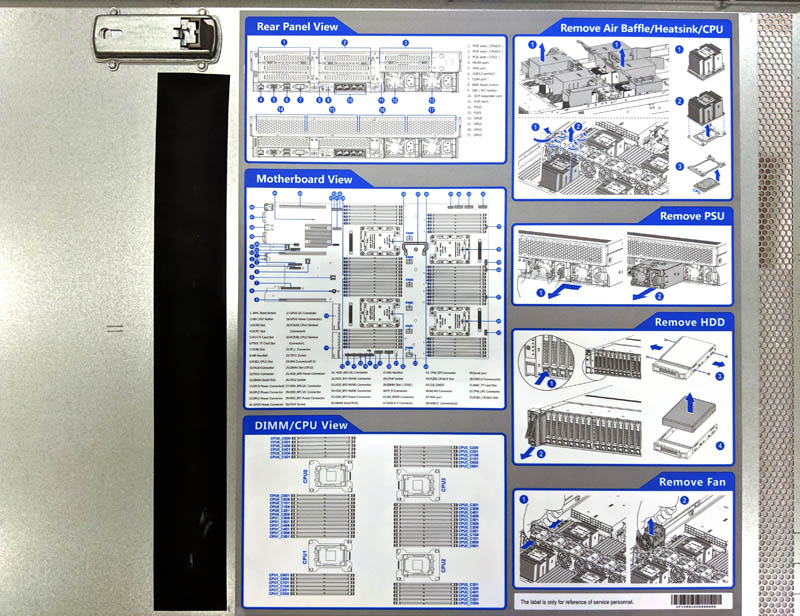

The top cover has a nice latching mechanism and good documentation of the system's main internal features. This is something we now expect from top-tier servers.

Overall, this streamlined hardware design worked well for us in testing.

Next, we are going to take a look at the Inspur Systems NF8260M5 test configuration and management, before continuing with our review.
