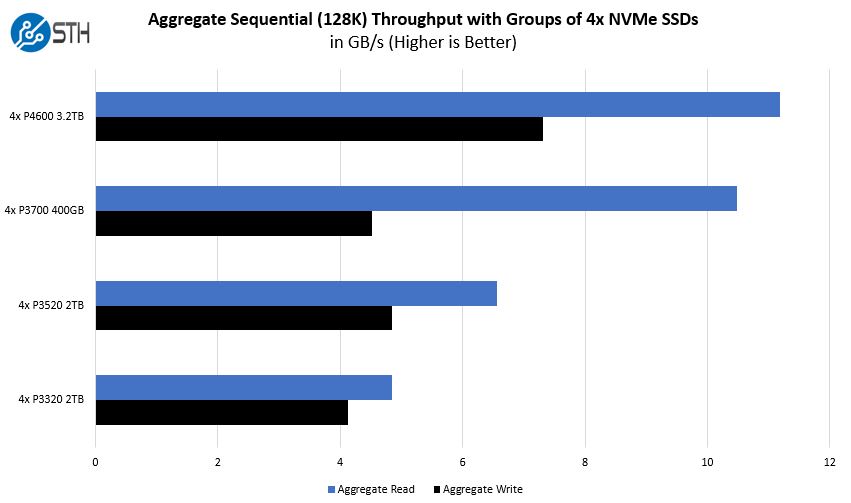

Inspur NF5468M5 Storage Performance

We tested a few different NVMe storage configurations because this is one of the Inspur Systems NF5468M5’s key points of differentiation. Previous-generation servers often used a single NVMe storage device, if any at all. There are eight SAS3/SATA bays available, but we are assuming those are used for OS and bulk storage given the system’s design. Instead, we are testing the four NVMe drives that will likely be used for high-performance storage.

Here we see impressive performance. With the Intel Xeon E5-2600 V4 generation of PCIe-based deep learning training servers, one was typically limited to a single PCIe slot for NVMe storage. That meant a single device, and in that generation the single NVMe device was generally 1.6TB to 2TB in size.

We are using four 3.2TB devices here, which are not even the highest-capacity U.2 NVMe SSDs on the market. Even so, we have 12.8TB of NVMe storage, or over six times what we saw from high-end previous-generation systems. That means one can store more data locally. What is more, one has more local NVMe bandwidth feeding the GPUs, which can lead to higher efficiency in many scenarios.
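The capacity comparison above is simple arithmetic, and a minimal sketch makes it easy to check. The numbers are the ones quoted in this review; the variable and function names are illustrative assumptions, not part of any tool we used.

```python
# Figures quoted in the review; the names here are illustrative only.
DRIVES = 4                       # U.2 NVMe drives tested
DRIVE_TB = 3.2                   # capacity of each drive, in TB
PREV_GEN_TB_RANGE = (1.6, 2.0)   # typical single NVMe device on the prior generation


def total_capacity_tb(drives, drive_tb):
    """Total raw NVMe capacity in TB."""
    return drives * drive_tb


def capacity_ratio(total_tb, baseline_tb):
    """How many times larger the new capacity is than the baseline."""
    return total_tb / baseline_tb


total = total_capacity_tb(DRIVES, DRIVE_TB)
for baseline in PREV_GEN_TB_RANGE:
    ratio = capacity_ratio(total, baseline)
    print(f"{total:.1f}TB vs {baseline:.1f}TB -> {ratio:.1f}x")
```

Against the 2TB end of the previous-generation range the ratio works out to 6.4x, which is where the "over six times" figure comes from. Against a 1.6TB device the gap is larger still.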

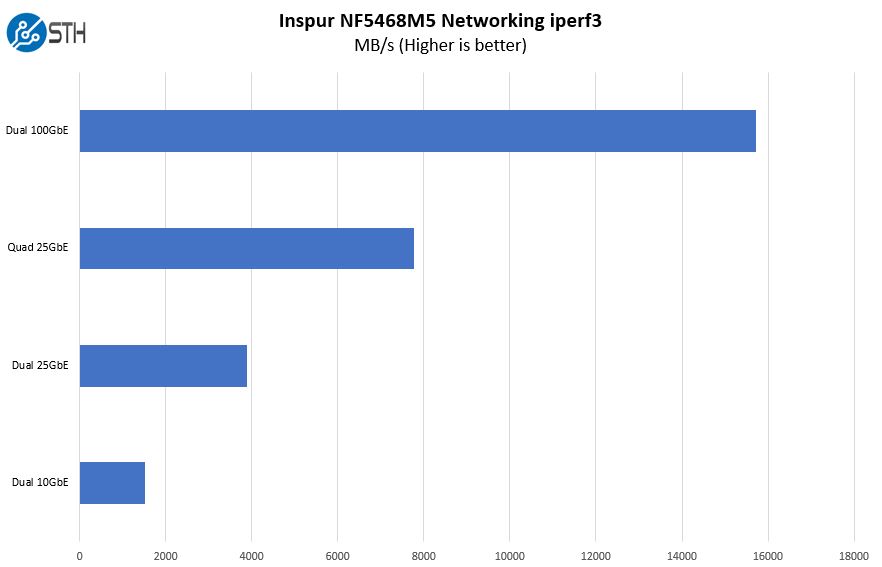

Inspur NF5468M5 Networking Performance

We loaded the Inspur Systems NF5468M5 with a number of NICs. For the main networking NICs, we used Mellanox ConnectX-5 100GbE / EDR InfiniBand NICs, and we also used the dual Mellanox ConnectX-4 Lx 25GbE NICs.

Networking is an important aspect, as CSPs are commonly deploying 25GbE infrastructure, and many deep learning clusters use either EDR InfiniBand or 100GbE as their fabric of choice for moving data from the network to GPUs.

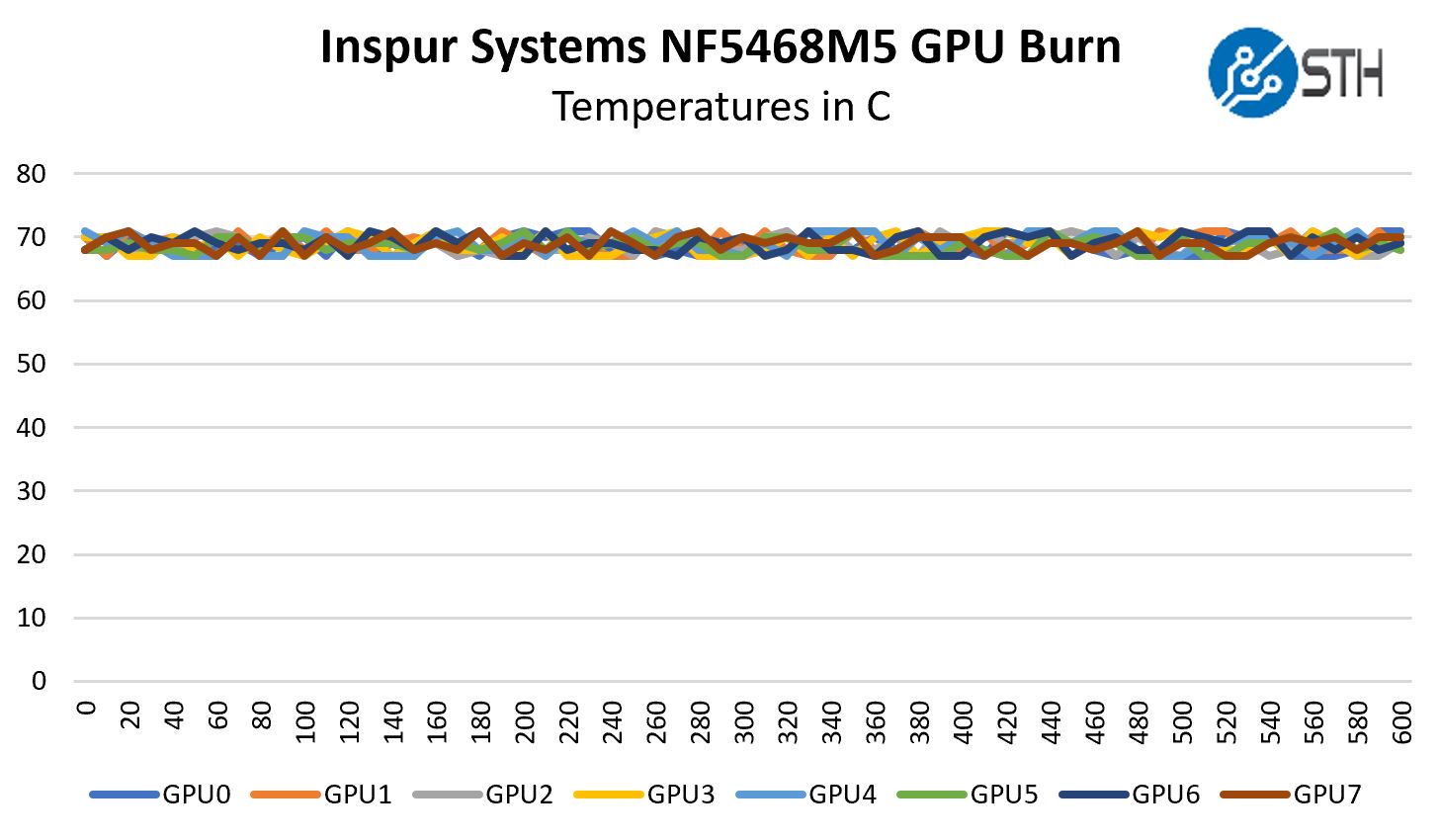

Inspur Systems NF5468M5 GPU Burn

One of the areas we wanted to test with this solution was its ability to effectively cool eight NVIDIA Tesla V100 32GB GPUs. We ran GPU Burn and took samples.

Overall, the system cooling kept the GPUs in acceptable ranges. This performance takes more than just fans. Consistent performance over time requires that aspects such as cable routing and airflow over the NICs be designed properly. The Inspur Systems NF5468M5 scores well with GPU Burn, which is a worst-case scenario.
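For readers who want to collect similar temperature samples on their own systems, here is a minimal sketch of one way to do it. It is an assumption on our part about a reasonable approach, not a description of the exact tooling behind the results above. It polls `nvidia-smi` with its `--query-gpu` option while a load such as GPU Burn runs in another session. The function names are hypothetical.

```python
import subprocess
import time

# nvidia-smi query that prints one "index, temperature" line per GPU.
QUERY = [
    "nvidia-smi",
    "--query-gpu=index,temperature.gpu",
    "--format=csv,noheader,nounits",
]


def parse_temperatures(csv_text):
    """Parse 'index, temperature' lines into {gpu_index: temp_celsius}."""
    temps = {}
    for line in csv_text.strip().splitlines():
        index, temp = (field.strip() for field in line.split(","))
        temps[int(index)] = int(temp)
    return temps


def sample_temperatures(samples, interval_s):
    """Poll nvidia-smi `samples` times, sleeping `interval_s` between polls."""
    readings = []
    for _ in range(samples):
        result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
        readings.append(parse_temperatures(result.stdout))
        time.sleep(interval_s)
    return readings


def peak_per_gpu(readings):
    """Return the highest temperature observed for each GPU."""
    peaks = {}
    for reading in readings:
        for gpu, temp in reading.items():
            peaks[gpu] = max(temp, peaks.get(gpu, temp))
    return peaks
```

Calling `peak_per_gpu(sample_temperatures(60, 5.0))` during a burn run would report the hottest reading per GPU over roughly five minutes. The sample count and interval here are arbitrary and should be adjusted to match the length of the test.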

Next, we are going to take a look at the Inspur Systems NF5468M5 power consumption before looking at the STH Server Spider for the system and concluding with our final words.

Ya’ll are doing some amazing reviews. Let us know when the server is translated on par with Dell.

How wonderful this product review is! So practical and justice!

Amazing. For us to consider Inspur in Europe English translation needs to be perfect since we have people from 11 different first languages in IT. Our corporate standard since we are international is English. Since English isn’t my first language I know why so early of that looks a little off. They need to hire you or someone to do that final read and editing and we would be able to consider them.

The system looks great. Do more of these reviews

Thanks for the review, would love to see a comparison with MI60 in a similar setup.

Great review! This looks like better hardware than the Supermicro GPU servers we use.

Can we see a review of the Asus ESC8000 as well? I have not found any other gpu compute designer that offers the choice in bios between single and dual root such as Asus does.

Hi Matthias – we have two ASUS platforms in the lab that are being reviewed, but not the ASUS ESC8000. I will ask.

How is the performance affected by CVE‑2019‑5665 through CVE‑2019‑5671 and CVE‑2018‑6260?

P2P bandwidth testing result is incorrect, above result should be from NVLINK P100 GPU server not PCIE V100.