Inspur NF5488A5 Power Consumption

Our Inspur NF5488A5 test server used a quad 3kW power supply configuration.

We managed to get up to 5.2kW, which is more than our standard 30A 208V Schneider Electric / APC PDU can handle. We actually had to draw power from two circuits to keep the server online. Testing was done at 17.6C and 72% RH, and our testing window shown here had a +/- 0.3C and +/- 2% RH variance.

One probably would not want to deploy a server this way, split across two circuits, but it highlights the need to use higher-power racks with servers like these. Ten of these systems occupying 40U of rack space would use >50kW, which is why we often see empty spaces in racks with these servers.
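As a rough sanity check on those figures, here is a minimal Python sketch of the arithmetic. It assumes a single-phase circuit, a power factor near 1, and the common 80% continuous-load derating; the derating factor and the function names are our own illustrative assumptions, not something read off the PDU or the server.

```python
import math

# Measured peak draw and rack layout taken from the text above.
PEAK_KW_PER_SERVER = 5.2
SERVERS_PER_RACK = 10
RACK_UNITS_USED = 40


def circuit_capacity_kw(volts: float, amps: float, derate: float = 0.8) -> float:
    """Continuous capacity of one circuit in kW.

    Assumes single-phase power with a power factor of ~1 and an 80%
    continuous-load derating (an assumption, adjust for your facility).
    """
    return volts * amps * derate / 1000.0


def circuits_needed(load_kw: float, capacity_kw: float) -> int:
    """Smallest whole number of circuits that can carry the load."""
    return math.ceil(load_kw / capacity_kw)


def rack_power_kw(servers: int, kw_per_server: float) -> float:
    """Total rack draw if every server hits its peak at the same time."""
    return servers * kw_per_server


capacity = circuit_capacity_kw(208, 30)
needed = circuits_needed(PEAK_KW_PER_SERVER, capacity)
rack_kw = rack_power_kw(SERVERS_PER_RACK, PEAK_KW_PER_SERVER)
units_per_server = RACK_UNITS_USED // SERVERS_PER_RACK

print(f"Usable capacity per 30A 208V circuit: {capacity:.2f} kW")
print(f"Circuits needed for one server at peak: {needed}")
print(f"Rack power for {SERVERS_PER_RACK} servers: {rack_kw:.1f} kW")
print(f"Rack units per server: {units_per_server}U")
```

Under these assumptions a single circuit falls just short of the 5.2kW peak, which lines up with needing a second circuit, and ten servers at peak land a little above 50kW.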

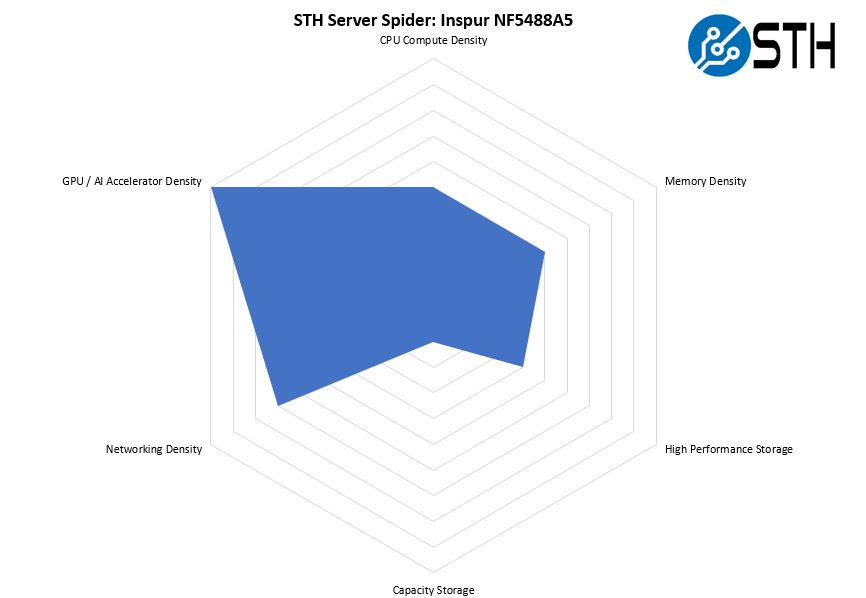

STH Server Spider Inspur NF5488A5

In the second half of 2018, we introduced the STH Server Spider as a quick reference to where a server system’s aptitude lies. Our goal is to start giving a quick visual depiction of the types of parameters that a server is targeted at.

The Inspur Systems NF5488A5 is a solution designed around providing great A100 performance in a flexible configuration. We get more cores in this generation and significantly better performance overall.

Final Words

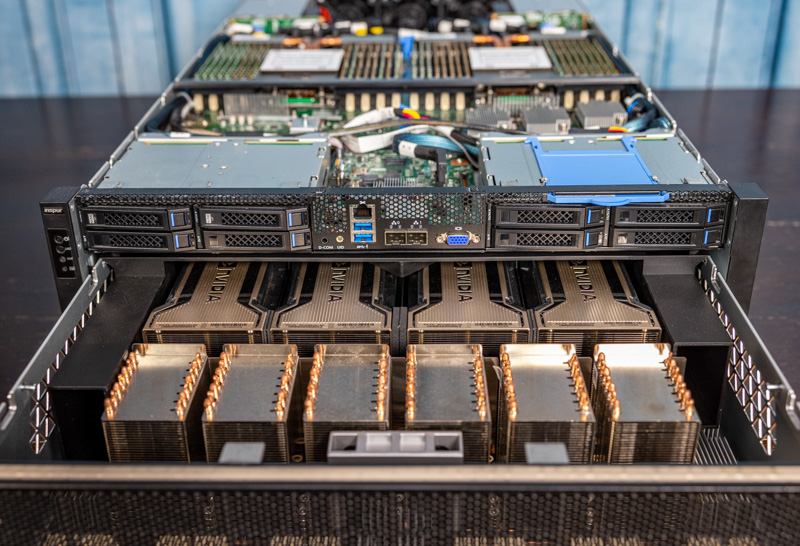

Overall, this is a very cool system. If you are building a training cluster these days, getting a higher-end NVIDIA HGX A100 8 GPU system is the way to go. While the per-node cost is higher than on PCIe-based systems, that is not the best way to look at it. Generally, we are seeing cluster-level pricing in the 10-20% higher range for clusters based on machines like the Inspur NF5488A5, but we are also seeing better performance.

The ability to handle higher-power A100 GPUs in the 400-500W range and the NVSwitch topology means that we get more performance per node. After all, the networking fabric, storage, CPUs, RAM, and so forth are largely similar, so only a small portion of the cost is higher when going with the HGX A100 8 GPU solutions. That is a key reason that the market has shifted to this form factor from being PCIe dominated a few years ago.

The Inspur NF5488A5 is a solid server. It is built in a highly serviceable fashion. It performed well for us. From the exterior, it may look like a small upgrade over the previous generation NF5488M5 that we reviewed. At the end of the day, we get much faster CPUs, GPUs, and networking (with PCIe Gen4). We also get more memory capacity and bandwidth. Overall, it is just a better platform and not by a small increment.

We upgraded from the DGX-2 (initially with Volta, then Volta Next) to a DGX A100 with 16 A100 GPUs back in January. Our system is Nvidia branded, not Inspur. Next step is to swap out the inferior AMDs for some American-made Xeon Ice Lake SP.

DGX-2 came after the Pascal based DGX-1. So has nothing to do with 8 or 16 GPUs.

Performance is not even remotely comparable: a FEA sim that took 63 min on a 16 GPU Volta Next DGX-2 is now done in 4 minutes, and with a 50% increase in fidelity / complexity it only takes a minute longer than that. 4-5 min is near real time, allowing our iterative engineering process to fully utilize the engineer’s intuition: a 5 min penalty vs 63-64 min.

DGX-1 was available with V100s. DGX-2 is entirely to do with doubling the GPU count.

Nvidia ditched Intel for failing to deliver on multiple levels, and will likely go ARM in a year or two.

The HGX platform can come in 4, 8, or 16 GPU configs, as Patrick showed with the connectors on the board for linking a 2nd backplane above. Ice Lake A100 servers don’t make much sense; they simply don’t have enough PCIe lanes or cores to compete at the 16 GPU level.

There will be a couple of 8x A100 Icelake servers coming out but no 16.

It would be nice if in EVERY system review, you included the BIOS vendor and any BIOS features of particular interest. My experience with server systems is that a good 33% to 50% of the “system stability” comes from the quality of the BIOS on the system, and the other 67% to 50% comes from the quality of the hardware design, manufacturing process, support, etc. It is like you are not reviewing about 1/2 of the product. Thanks.