Inspur Systems NF5488A5 Management

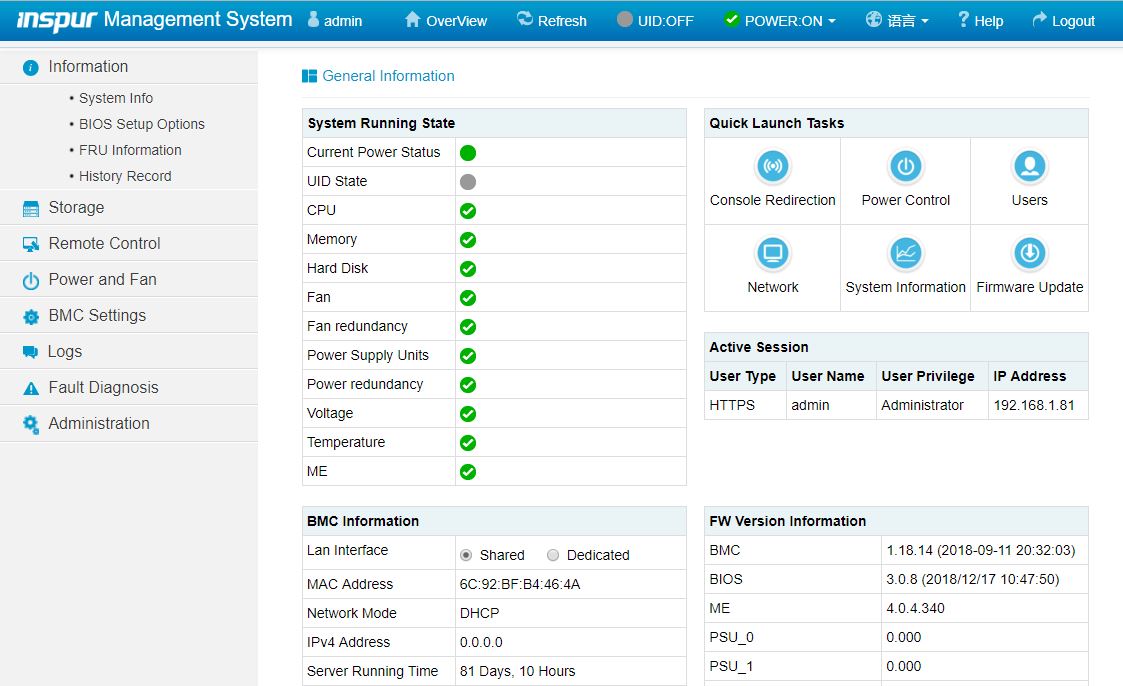

Inspur’s primary management is via IPMI and Redfish APIs, which is what most hyperscale and CSP customers will use to manage their systems. We have covered this before, so we will keep this to a recap. Inspur also includes a robust, customized web management platform with its management solution.

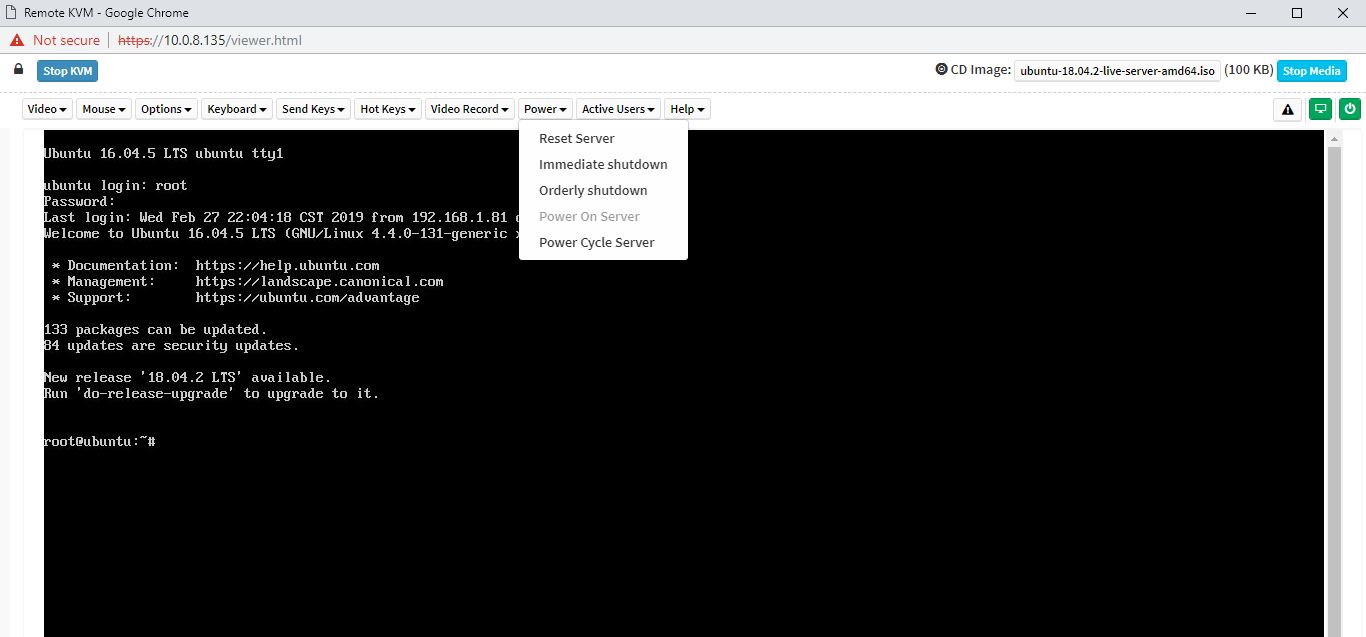

There are key features we would expect from any modern server. These include the ability to power cycle a system and remotely mount virtual media. Inspur also has an HTML5 iKVM solution that has these features included. Some other server vendors do not have a fully-featured HTML5 iKVM that includes virtual media support as of this review being published.
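For readers who script these actions instead of using the web interface, power control is typically exposed through the standard DMTF Redfish `ComputerSystem.Reset` action. The snippet below is a minimal sketch that only builds the HTTP request and does not send it. The host `bmc.example.com`, the system ID `1`, and the helper name `build_reset_request` are assumptions for illustration, not details confirmed for Inspur’s BMC.

```python
import json
import urllib.request

# A subset of the reset types defined by the DMTF Redfish ComputerSystem schema.
# A given BMC may support only some of them.
RESET_TYPES = {
    "On", "ForceOff", "GracefulShutdown",
    "GracefulRestart", "ForceRestart", "PowerCycle",
}


def build_reset_request(bmc_host, system_id="1", reset_type="PowerCycle"):
    """Build (but do not send) a Redfish ComputerSystem.Reset request.

    bmc_host and system_id are placeholders; the real system ID can be
    discovered by reading /redfish/v1/Systems on the BMC.
    """
    if reset_type not in RESET_TYPES:
        raise ValueError(f"unsupported reset type: {reset_type}")
    url = (f"https://{bmc_host}/redfish/v1/Systems/{system_id}"
           "/Actions/ComputerSystem.Reset")
    body = json.dumps({"ResetType": reset_type}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Content-Type": "application/json"},
    )


req = build_reset_request("bmc.example.com")
print(req.get_method(), req.full_url)
```

Actually sending the request would also need authentication (HTTP basic auth or a Redfish session token) and TLS handling appropriate to the BMC’s certificate, both of which are left out of this sketch.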

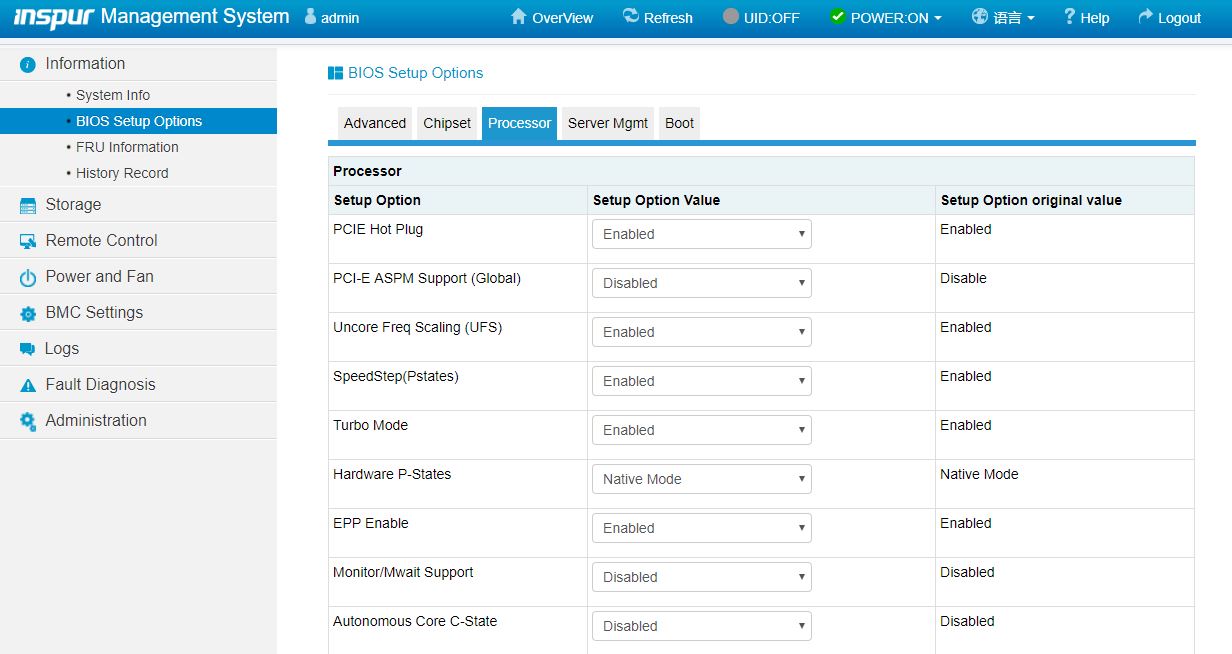

Another feature worth noting is the ability to set BIOS settings via the web interface. That is a feature we see in solutions from top-tier vendors like Dell EMC, HPE, and Lenovo, but one that many vendors in the market do not have.
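The same kind of change can often be scripted. In the DMTF Redfish model, pending BIOS changes are written as a PATCH of an `Attributes` object to the BIOS settings resource. The sketch below only assembles the path and JSON body. The `/Bios/Settings` path is a common convention (the authoritative location is advertised by the `@Redfish.Settings` annotation on the `Bios` resource), and `ExampleSetting` is a made-up attribute name, since real attribute names are vendor-specific and we have not verified them for this system.

```python
import json


def build_bios_patch(system_id, attributes):
    """Return (path, JSON body) for a Redfish BIOS settings PATCH.

    system_id and the attribute names are placeholders; real attribute
    names come from the BMC's Bios resource and its attribute registry.
    """
    if not attributes:
        raise ValueError("no attributes to change")
    # Common location for pending BIOS settings; check @Redfish.Settings
    # on /redfish/v1/Systems/<id>/Bios for the actual URI.
    path = f"/redfish/v1/Systems/{system_id}/Bios/Settings"
    body = json.dumps({"Attributes": attributes}, sort_keys=True)
    return path, body


# "ExampleSetting" is a hypothetical attribute used only for illustration.
path, body = build_bios_patch("1", {"ExampleSetting": "Enabled"})
print("PATCH", path)
print(body)
```

BIOS changes made this way are normally staged and applied on the next reboot, which is why the request targets a settings resource and not the live `Bios` resource.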

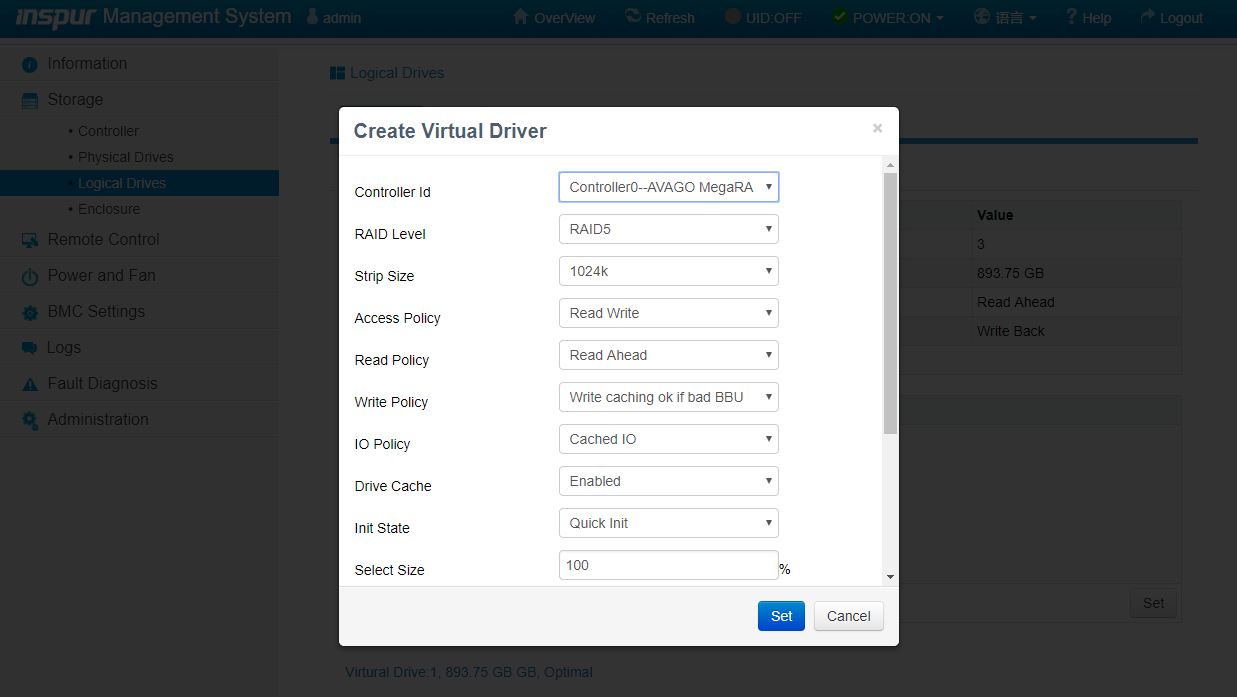

Another web management feature that differentiates Inspur from lower-tier OEMs is the ability to create virtual disks and manage storage directly from the web management interface. Some solutions allow administrators to do this via Redfish APIs, but not web management. This is another great inclusion here.
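For context on the Redfish route mentioned above, volume creation in the DMTF Redfish storage model is generally a POST to a storage controller’s `Volumes` collection. The following is a rough sketch of what such a request body can look like, assuming the BMC accepts the standard `RAIDType` and `Links.Drives` properties. The storage ID, drive URIs, and the helper name `build_volume_request` are hypothetical, and implementations differ a great deal in which properties they require.

```python
import json


def build_volume_request(system_id, storage_id, drive_uris, raid_type="RAID1"):
    """Return (path, body) for a Redfish volume-creation POST.

    All identifiers are placeholders; real storage IDs and drive URIs
    must be read from the BMC's Storage resources.
    """
    if not drive_uris:
        raise ValueError("at least one drive is required")
    path = f"/redfish/v1/Systems/{system_id}/Storage/{storage_id}/Volumes"
    body = {
        "RAIDType": raid_type,
        # Each member drive is referenced by its Redfish URI.
        "Links": {"Drives": [{"@odata.id": uri} for uri in drive_uris]},
    }
    return path, body


# Hypothetical storage controller and drive URIs, for illustration only.
path, body = build_volume_request(
    "1",
    "ExampleController",
    ["/redfish/v1/Chassis/1/Drives/0", "/redfish/v1/Chassis/1/Drives/1"],
)
print("POST", path)
print(json.dumps(body, indent=2))
```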

Based on comments in our previous articles, many of our readers have not used an Inspur Systems server and therefore have not seen the management interface. We have an 8-minute video that clicks through the Inspur Systems management interface as a quick tour.

It is certainly not the most entertaining subject. However, if you are considering these systems, you may want to know what the web management interface looks like on each machine, and that tour can be helpful.

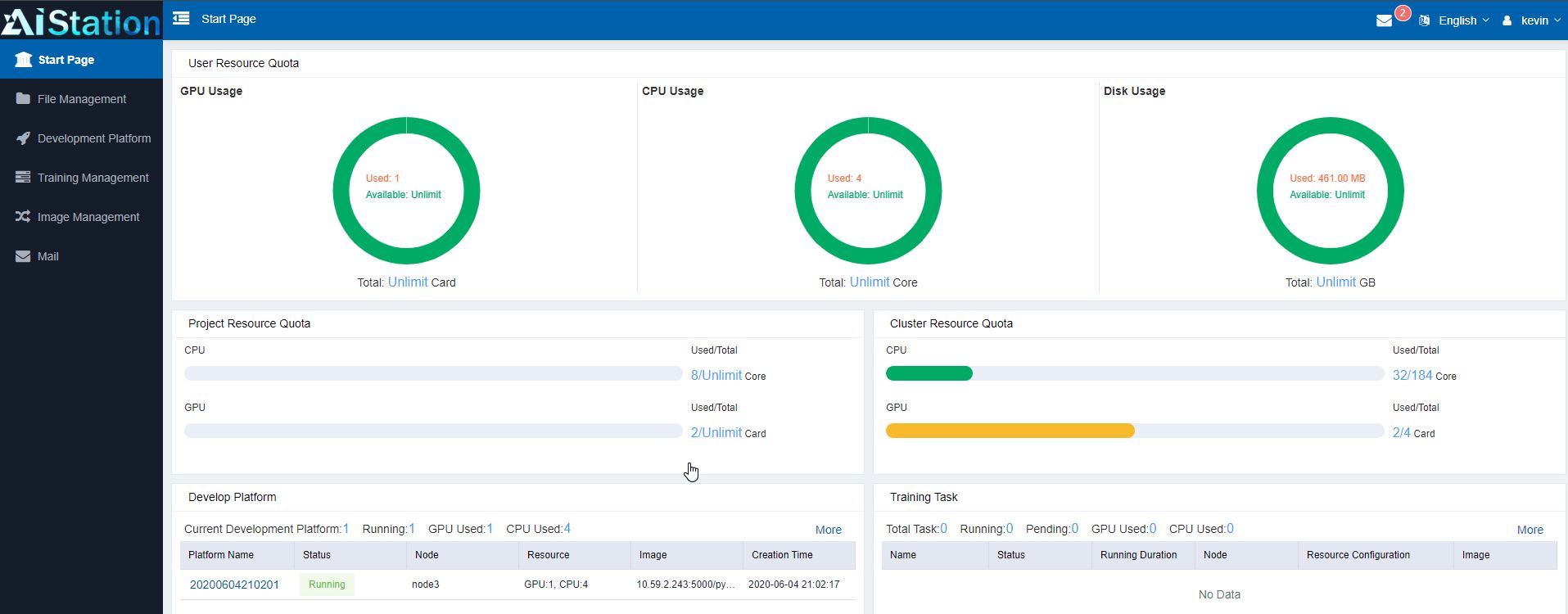

In addition to the web management interface, we have also covered Inspur AIStation for AI Cluster Operations Management Solution.

Inspur has an entire cluster-level solution to manage models, data, users, as well as the machines that they run on. For an AI training server like this, that is an important capability. We are going to direct you to that article for more on this solution.

Next, we are going to get to power consumption, the STH Server Spider, and our final words.

We upgraded from the DGX-2 (initially with Volta, then Volta Next) to a DGX A100 with 16 A100 GPUs back in January. Our system is Nvidia branded, not Inspur. Next step is to swap out the inferior AMDs for some American-made Xeon Ice Lake SP.

DGX-2 came after the Pascal-based DGX-1, so it has nothing to do with 8 or 16 GPUs.

Performance is not even remotely comparable: an FEA sim that took 63 min on a 16 GPU Volta Next DGX-2 is now done in 4 minutes, and with a 50% increase in fidelity/complexity it only takes a minute longer than that. 4-5 min is near real time, allowing our iterative engineering process to fully utilize the engineer’s intuition. That is a 5 min penalty vs. 63-64 min.

DGX-1 was available with V100s. DGX-2 is entirely to do with doubling the GPU count.

Nvidia ditched Intel for failing to deliver on multiple levels, and will likely go ARM in a year or two.

The HGX platform can come in 4, 8, or 16 GPU configs, as Patrick showed above with the connectors on the board for linking a 2nd backplane. Ice Lake A100 servers don’t make much sense; they simply don’t have enough PCIe lanes or cores to compete at the 16 GPU level.

There will be a couple of 8x A100 Ice Lake servers coming out, but no 16.

It would be nice if, in EVERY system review, you included the BIOS vendor and any BIOS features of particular interest. My experience with server systems is that a good 33% to 50% of the “system stability” comes from the quality of the BIOS on the system, and the other 67% to 50% comes from the quality of the hardware design, manufacturing process, support, etc. It is like you are not reviewing about 1/2 of the product. Thanks.