A Few Additional Features

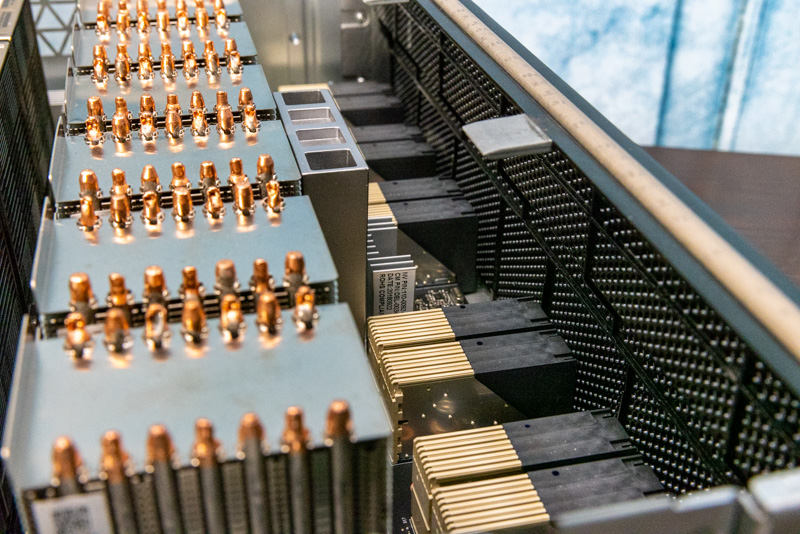

We also wanted to show off a few fun points here that you may have never seen on the NVIDIA A100 platform. First, we keep calling it the NVIDIA HGX A100 8 GPU “Delta” platform. One can see that the assembly actually has this branding.

Branding on the previous generation V100 part was “HGX-2”. That was because, with the V100 version, there was an option to expand to a second board using connectors at the other edge of the board. The HGX A100 8 GPU still has connectors here, although we have not seen a DGX-2/HGX-2 announcement.

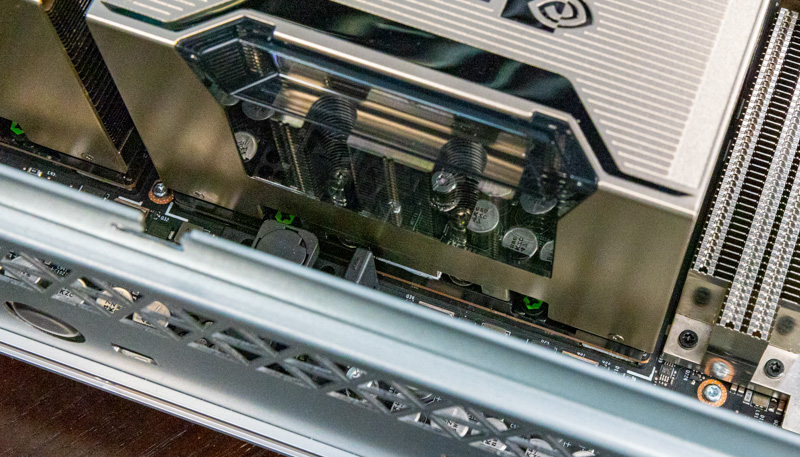

Personally, I think the NVIDIA green V100s look more visually appealing than the new branding. NVIDIA has not stripped all of the green though. The SXM4 screws are capped in NVIDIA green paint.

Those were just a few fun features of the platform that we saw in the NF5488A5, but are not often seen elsewhere.

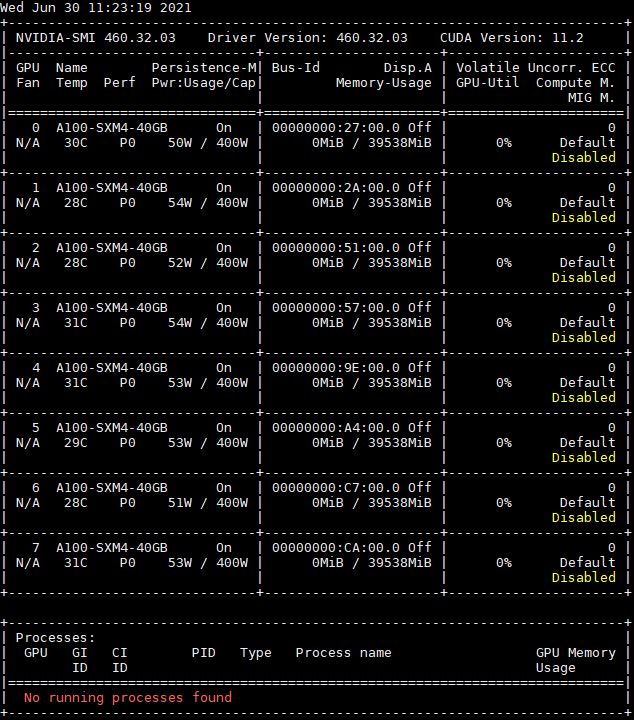

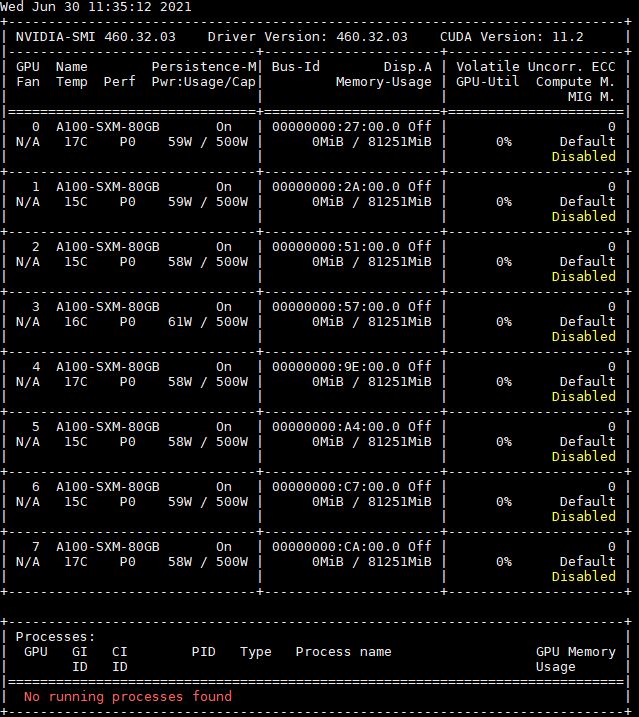

NVIDIA A100 GPU Options

We will have a bit on the 40GB 400W v. 80GB 500W GPUs coming in a different server review, but we just wanted to show two screenshots. The Inspur NF5488A5 has several configurations. One can get the original 40GB 400W GPUs.

Or one can go up to the new 80GB HBM2E 500W GPUs.

What we will simply note here is that the 400W 40GB and 80GB models are a lot easier to integrate into data centers. Inspur does have 500W NF5488A5 options, but we would strongly suggest discussing that type of installation with Inspur first.
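To put the integration point in rough numbers, here is a minimal sketch of the GPU-only power budget for one system. It assumes only the 8 GPU count and the 400W and 500W figures mentioned above. The function name is ours for illustration, and CPUs, NVSwitches, fans, and power supply losses are deliberately left out, so real system power will be higher.

```python
# GPU-only power budget for an 8-GPU HGX A100 assembly.
# The GPU count and the 400W / 500W figures come from the text above;
# everything else in the chassis (CPUs, NVSwitches, fans, PSU losses)
# is intentionally excluded from this sketch.
GPUS_PER_SYSTEM = 8


def gpu_power_budget_watts(tdp_watts: int, gpu_count: int = GPUS_PER_SYSTEM) -> int:
    """Return the combined GPU TDP for one system, in watts."""
    return tdp_watts * gpu_count


budget_400w = gpu_power_budget_watts(400)
budget_500w = gpu_power_budget_watts(500)
extra_watts = budget_500w - budget_400w

print(f"8x 400W GPUs: {budget_400w} W")
print(f"8x 500W GPUs: {budget_500w} W")
print(f"Extra GPU power per system: {extra_watts} W ({extra_watts / budget_400w:.0%} more)")
```

That extra GPU power per chassis, multiplied across a rack, is the kind of figure worth bringing to a power and cooling discussion before ordering.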

Why the Inspur Systems NF5488A5 v. NVIDIA DGX

This is a question we get often. The NVIDIA DGX A100 is a fixed configuration and NVIDIA often uses it to get a higher margin on newly released GPU configurations. In contrast, the Inspur NF5488A5 is designed to be configurable. One can get AMD EPYC 7003 Milan CPUs, different memory and storage configurations, and so forth.

Both systems use the NVIDIA HGX A100 8 GPU Delta assembly, so performance is fairly similar as we showed with the MLPerf benchmarks.

There are reasons beyond the hardware. In 2019 we had a piece, “Visiting the Inspur Intelligent Factory Where Robots Make Cloud Servers”, where I actually toured an Inspur factory in Jinan, China. Here is Inspur’s robot-driven factory.

Inspur is a server vendor that sells hyper-scale clusters to customers. That is not how NVIDIA is currently set up to sell. As a result, there are simple reasons beyond price and configurability that extend to the setup and delivery of systems as well.

This is one where we are just going to say that our testing confirmed what one would expect. Faster GPUs and faster interconnects yield better performance, the degree of which depends on the application.

Inspur Systems NF5488A5 Performance

In terms of performance, we originally penned this article with a comparison to the M5 V100 version. The challenge was that our software stack was not the same due to the time that had passed between the reviews. On the CPU side, where we have a very stable software stack, we saw similar performance to our Rome test platform. Charts comparing previous generation EPYC 7002 “Rome” CPUs to baseline numbers that were all within +/- 1.5% were good to show the NF5488A5 could cool the chips, but they were not necessarily exciting. As part of that process, we then turned to MLPerf and wanted to show a few comparisons using this system.

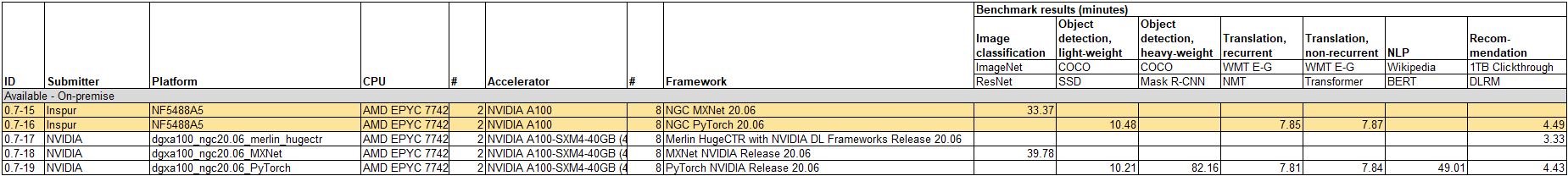

First, using MLPerf v0.7 Training methodology, we can see that this system with 40GB 400W A100 GPUs performs very similarly to the NVIDIA DGX A100, and in cases like the ResNet image classification, the Inspur system actually beats the NVIDIA DGX A100.

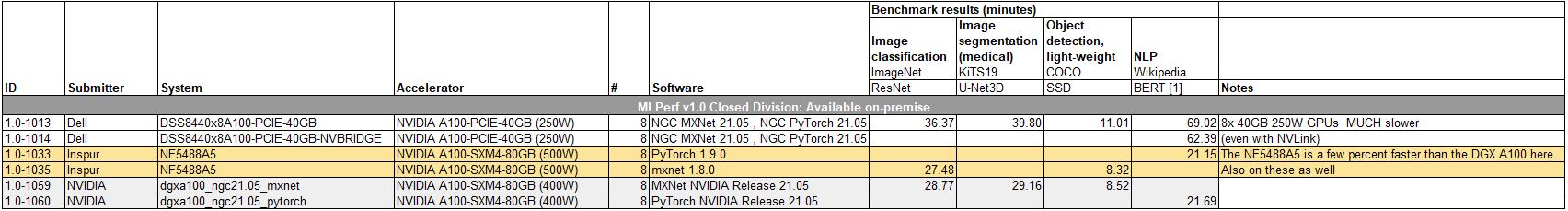

Next, with MLPerf Training v1.0 there were some fairly interesting results comparing to a few different options.

Here we can see this system is configured with the 500W 80GB GPUs and AMD EPYC 7003 Milan series CPUs. As a result, it is able to out-perform the DGX A100 640GB model with 400W 80GB A100s. That result highlights a key value proposition of the NF5488A5. The ability to customize with higher TDP A100s and newer CPUs than NVIDIA offers means that one can get more performance.

The other big one to note here is just how enormous the gap is between the Inspur A5’s SXM4 A100s and Dell’s PCIe-based system. The Delta platform does cost more with the NVSwitches and such, but the performance gap is huge. At a cluster level, this is what is driving major AI shops to adopt the Delta platform over the PCIe solutions. Dell, for its part, offers PCIe and Redstone in its public PowerEdge offerings, but not the higher-end Delta platform that Inspur offers.

We have an upcoming piece with two other Delta-based A100 systems that we were going to compare here using Linpack. The result on the system comparable to Inspur’s was within +/-2% on a 5-run average basis. Check that article once we are able to disclose results, but we are going to call that a virtual tie. That makes sense, and the performance variance may have come from the different ambient temperature and humidity in the facilities.
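For readers who want to apply the same kind of check to their own runs, here is a minimal sketch of a 5-run average comparison against a +/-2% band. The function names and the scores are hypothetical placeholders for illustration only. They are not measured Linpack results from any system in this review.

```python
from statistics import mean
from typing import Sequence


def percent_difference(runs_a: Sequence[float], runs_b: Sequence[float]) -> float:
    """Percent difference of the mean of runs_b relative to the mean of runs_a."""
    baseline = mean(runs_a)
    return (mean(runs_b) - baseline) / baseline * 100.0


def is_virtual_tie(
    runs_a: Sequence[float],
    runs_b: Sequence[float],
    tolerance_pct: float = 2.0,
) -> bool:
    """True when the two run averages differ by no more than tolerance_pct."""
    return abs(percent_difference(runs_a, runs_b)) <= tolerance_pct


# Placeholder scores only, in arbitrary units; NOT measured results.
system_a_runs = [100.0, 101.0, 99.0, 100.5, 99.5]
system_b_runs = [101.0, 102.0, 100.0, 101.5, 100.5]

delta = percent_difference(system_a_runs, system_b_runs)
print(f"Difference between 5-run averages: {delta:+.2f}%")
print(f"Virtual tie at +/-2%: {is_virtual_tie(system_a_runs, system_b_runs)}")
```

Averaging several runs before comparing helps keep one noisy run, for example from a warm facility, from deciding the outcome.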

Next, we are going to look at the management, followed by power consumption and our final thoughts.

We upgraded from the DGX-2 (initially with Volta, then Volta Next) with a DGX A100 – with 16 A100 GPUs back in January – Our system is Nvidia branded, not inspur. Next step is to extricate the inferior AMDs with some American made Xeon Ice Lake SP.

DGX-2 came after the Pascal based DGX-1. So has nothing to do with 8 or 16 GPUs.

Performance is not even remotely comparable – a FEA sim that took 63 min on a 16 GPU Volta Next DGX-2 is now done in 4 minutes – with an increase in fidelity / complexity of 50% only take a minute longer than that. 4-5 min is near real time – allowing our iterative engineering process to fully utilize the Engineer’s intuition…. 5 min penalty vs 63-64 min.

DGX-1 was available with V100s. DGX-2 is entirely to do with doubling the GPU count.

Nvidia ditched Intel for failing to deliver on multiple levels, and will likely go ARM in a year or two.

The HGX platform can come in 4-8-16 gpu configs as Patrick showed the connectors on the board for linking a 2nd backplane above. Icelake A100s servers don’t make much sense, they simply don’t have enough pcie lanes or cores to compete at the 16 GPU level.

There will be a couple of 8x A100 Icelake servers coming out but no 16.

It would be nice if in EVERY system review, you include the BIOS vendor, and any BIOS features of particular interest. My experience with server systems is that a good 33% to 50% of the “system stability” comes from the quality of the BIOS on the system, and the other 66% to 50% comes from the quality of the hardware design, manufacturing process, support, etc. It is like you are not reviewing about 1/2 of the product. Thanks.