Inspur NF5180M6 Power Consumption

Our system came equipped with the 1.3kW 80Plus Platinum power supply option. Again, Inspur offers 500W-2kW options in Platinum and Titanium efficiency ratings.

The system peaked just above 1kW with Platinum 8380s. It also supports multiple GPUs and other options that we did not have populated, so higher-end configurations could easily surpass the 1.3kW figure. At the same time, lower-end Xeon Silver configurations may look at the 500W PSUs for better efficiency.
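To put the PSU sizing in perspective, here is a minimal sketch of the load-fraction arithmetic. It assumes a round 1000W peak draw (our measured peak was just above that) and a single PSU carrying the full load, which ignores load sharing in redundant configurations. The function name is ours for illustration only.

```python
def psu_load_fraction(draw_watts: float, psu_rating_watts: float) -> float:
    """Return the share of a PSU's rated output used by a given draw."""
    if psu_rating_watts <= 0:
        raise ValueError("PSU rating must be positive")
    return draw_watts / psu_rating_watts


# Assumption: a round 1000W stands in for the "just above 1kW" peak.
peak_draw_w = 1000.0

# 1.3kW is the PSU in our test system; 2kW is the top of Inspur's range.
for rating_w in (1300.0, 2000.0):
    load = psu_load_fraction(peak_draw_w, rating_w)
    print(f"{rating_w:.0f}W PSU at {peak_draw_w:.0f}W draw: {load:.0%} load")
```

The same calculation is why a low-power configuration may be better served by a smaller PSU: a light load on a large supply sits at a very low load fraction, where efficiency typically falls off.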

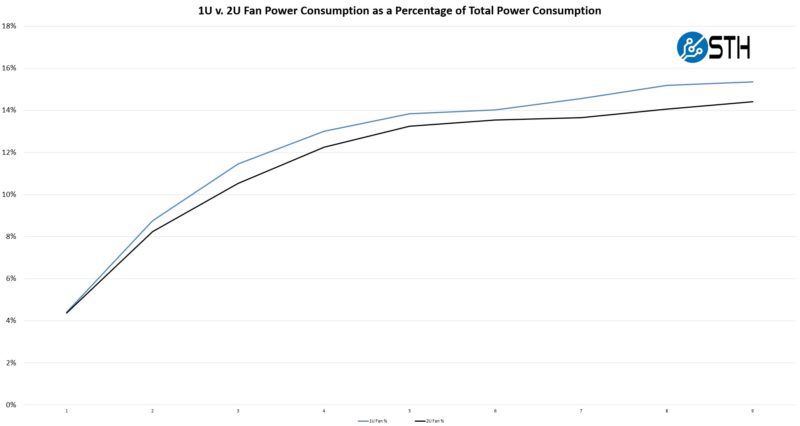

Since we used this system in our 1U vs. 2U testing, along with Inspur’s 2U alternative, we were able to generate some really cool data. For example, we could see how much power the fans in each system used while running the same workloads:

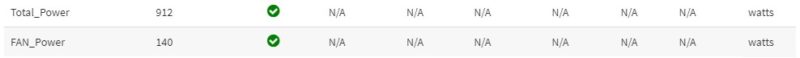

Inspur makes fan power consumption available through IPMI on these platforms.

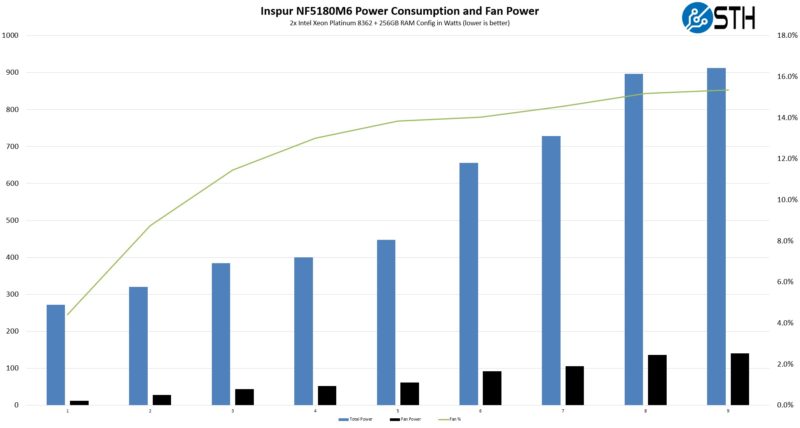

Looking at total power consumption and fan power across a number of different workloads, we can see that by the 800-900W range, fans already accounted for roughly 15% of total system power.
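As a rough illustration of what that share means in watts, here is a small sketch. The 15% share and the 800-900W range come from the observation above; the function names are ours, and the output is a back-of-the-envelope estimate rather than a measured value.

```python
def fan_power_watts(total_system_watts: float, fan_share: float) -> float:
    """Estimate fan power from total system power and the fan share of it."""
    if not 0.0 <= fan_share <= 1.0:
        raise ValueError("fan_share must be between 0 and 1")
    return total_system_watts * fan_share


def fan_share(fan_watts: float, total_system_watts: float) -> float:
    """Compute the fan share of total system power from two readings."""
    if total_system_watts <= 0:
        raise ValueError("total system power must be positive")
    return fan_watts / total_system_watts


# Approximate share observed in the 800-900W range.
approx_share = 0.15

for total_w in (800.0, 900.0):
    estimate_w = fan_power_watts(total_w, approx_share)
    print(f"{total_w:.0f}W system: about {estimate_w:.0f}W going to fans")
```

In other words, at these power levels something on the order of 120-135W is being spent just moving air.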

If you want to learn more, we suggest checking out our Deep Dive into Lowering Server Power Consumption video.

You will notice a familiar view as the cover of that video as well. We do not always get to look at pairs of systems like these back-to-back so this was a treat to get to do.

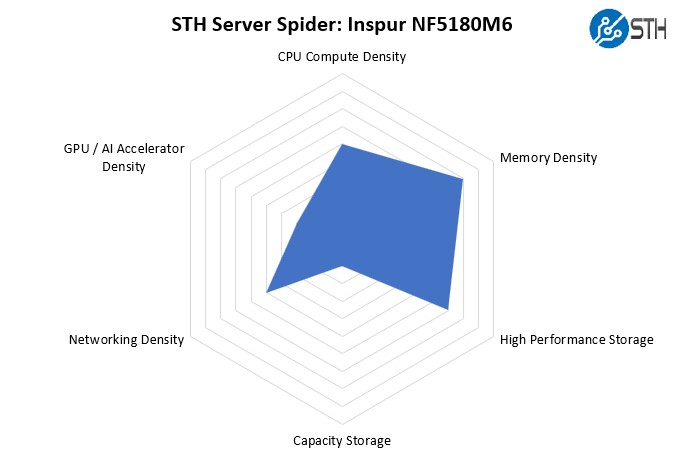

STH Server Spider: Inspur NF5180M6

In the second half of 2018, we introduced the STH Server Spider as a quick reference to where a server system’s aptitude lies. Our goal is to start giving a quick visual depiction of the types of parameters that a server is targeted at.

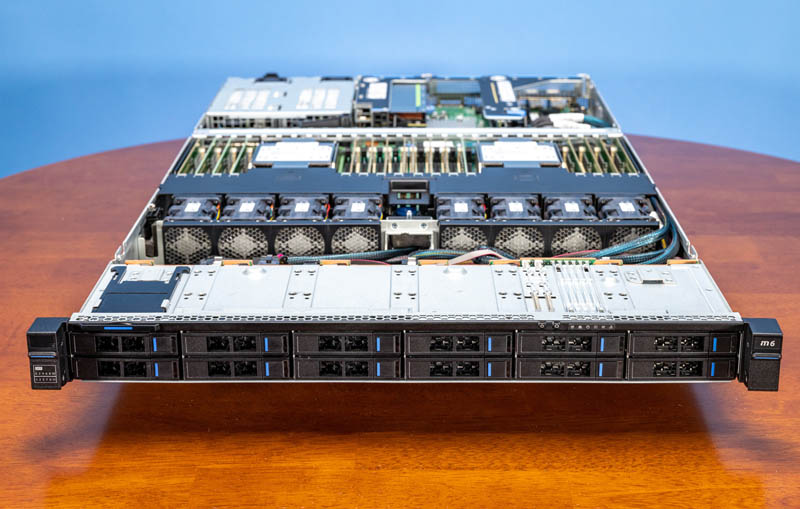

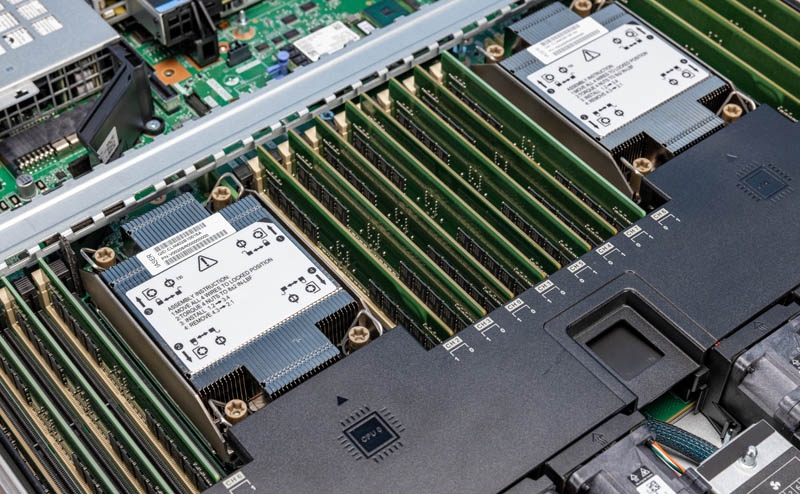

This system is really designed as a 1U server with quite a bit of flexibility. The main benefit of this system over the 2U is that it allows one to greatly increase the CPU and memory density in a rack. The trade-off is that slightly more power is used and we also get less physical space to house accelerators and storage devices. That makes a lot of sense and it is the exact trade-off that one would expect to make with a 1U system. We will quickly note that the EDSFF E1.S version of this server would actually have high storage density and high-performance storage. We just were not testing that version of the system.

Final Words

The Inspur Systems NF5180M6 is exactly what we would expect a mainstream 1U Intel Xeon server from the company to be. It is built to leverage the same base motherboard platform and technologies used in the 2U server. That drives quantity and therefore more use and investment in quality. Inspur is also leveraging many open standards and largely stays away from proprietary connectors, which is great.

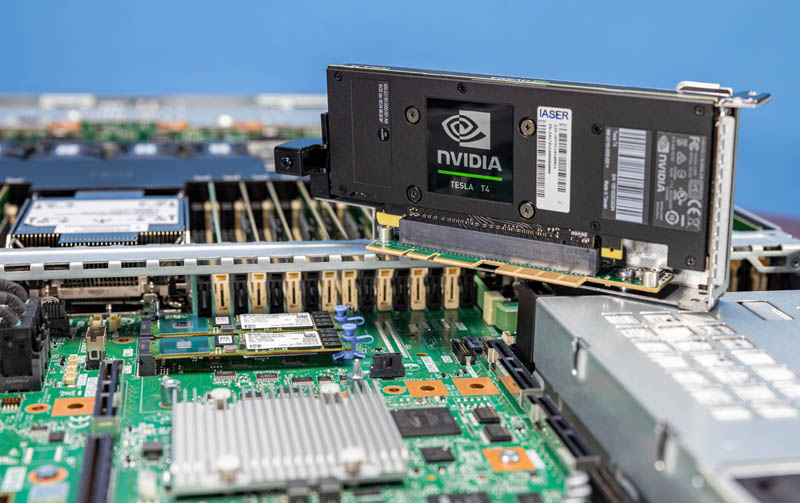

We really liked the ability of the system to handle cooling for the NVIDIA T4. Adding inferencing accelerators is becoming more common, even with onboard AI inference acceleration. Having the ability to cool these accelerators in the 1U form factor easily is great.

This was an exciting server for another reason. Namely, we were able to test this 1U platform against a nearly identical 2U platform to really see the impacts of the denser chassis. That is something that is rarely done in the industry so it was great to get the opportunity to gather data and present it to our readers with this platform.

Overall, the Inspur NF5180M6 worked well for us. From our perspective, being able to increase density while maintaining relatively similar performance and power consumption shows that the NF5180M6 is from a top 3 worldwide server vendor. The Inspur engineering team did a great job putting together a well-designed 1U mainstream dual Intel Xeon server with the NF5180M6.

Leaving a suggestion: upload higher-res images on reviews!

They look very soft on higher dpi displays and you can barely zoom on them :(

Is the front storage options diagram from Inspur directly? The image for 10x 2.5″ and 12x 2.5″ seem to be swapped.

Jim – it is, and you are correct.

The only thing missing might be a swappable BMC, or perhaps just the application processor part of the BMC. They tend to get outdated much faster than the rest of the hardware, and even if they were using OpenBMC as a software stack it would severely limit what the machine can do in its second or third life after it’s been written off by the first enterprise owner.