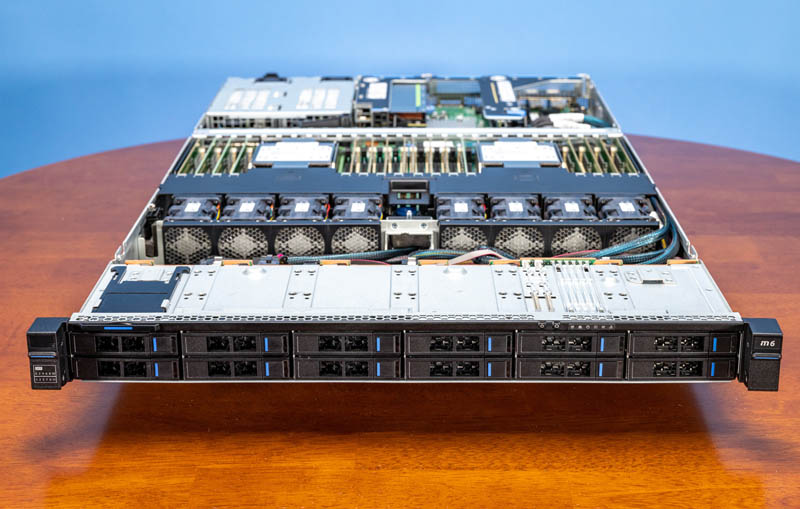

Inspur NF5180M6 Internal Hardware Overview

Now that we have gone over the exterior of the system, let us move through the system from the front to the rear.

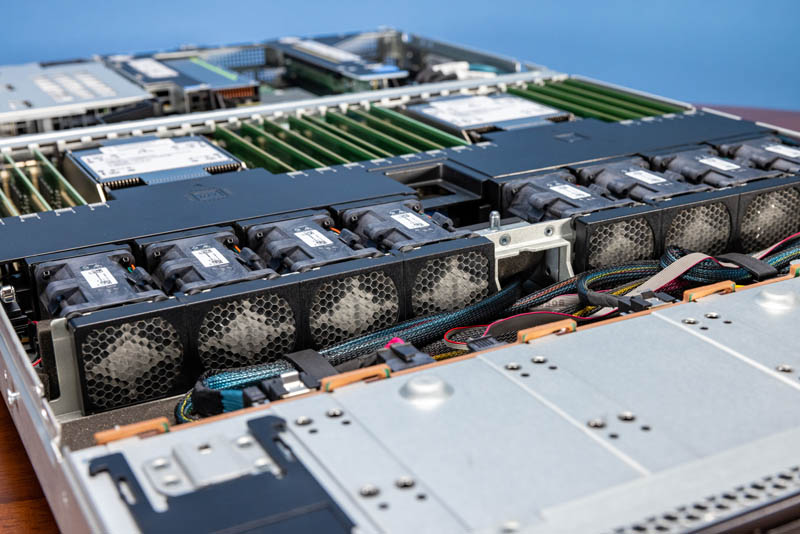

Behind the drive backplanes, which support SATA, SAS, and NVMe, we have the chassis fans. Here we can see the same metal honeycomb airflow guide design that we saw on the 2U server.

The fans themselves are hot-swappable dual-fan modules. Many 1U servers lack hot-swap fans because of physical space constraints, so Inspur including the feature, with a well-designed implementation, is a nice touch here.

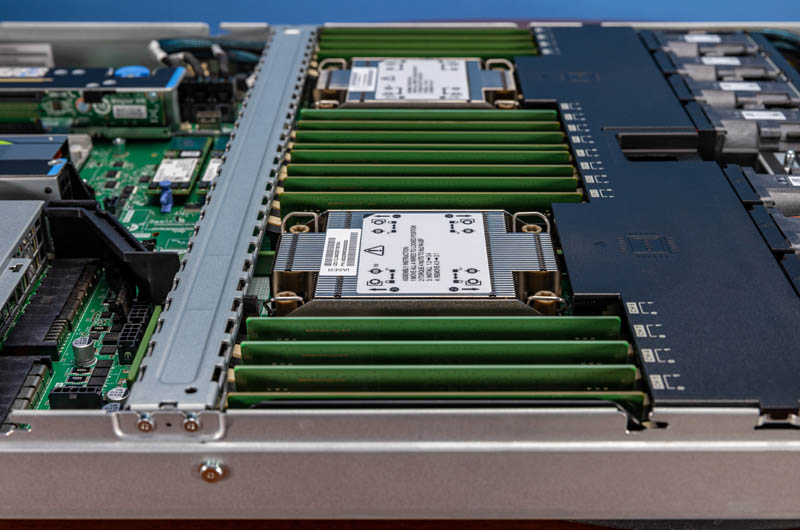

Fan airflow is directed via an airflow guide. This airflow guide has the CPU sockets and DIMM slots labeled and is fairly small. One of the nice features is that it is very easy to remove and install, which cannot be said for all competing systems.

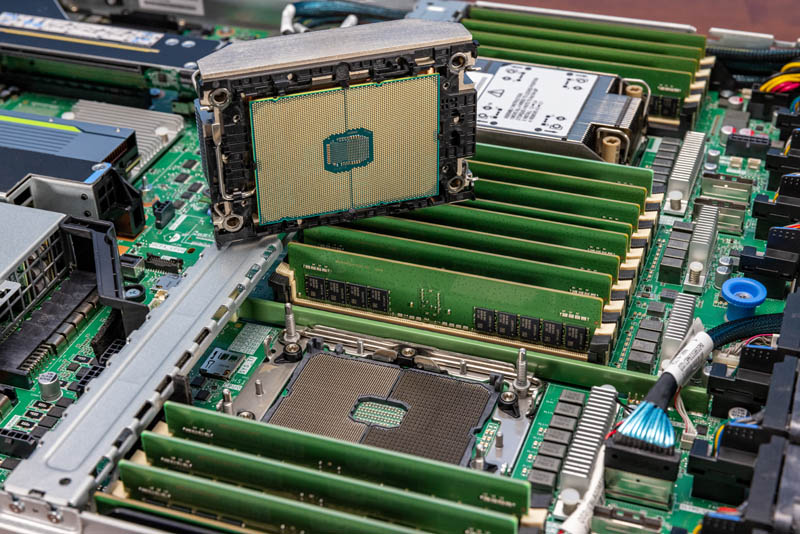

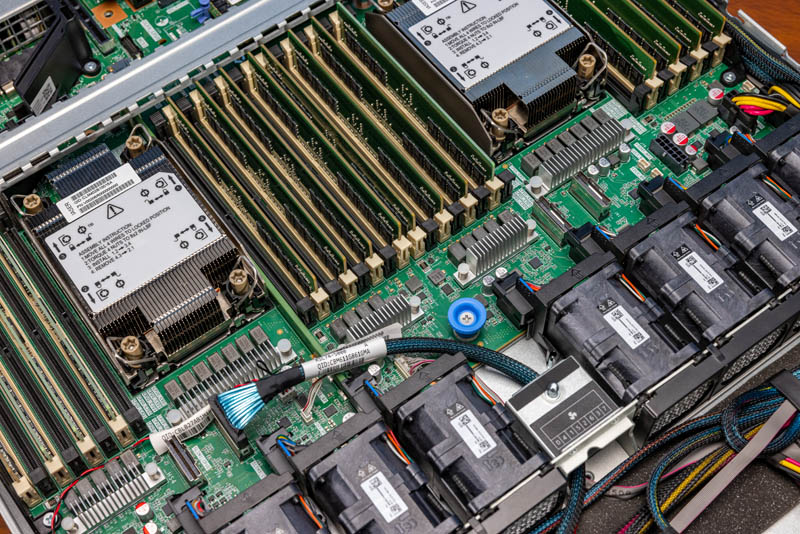

Since this is a 3rd Generation Intel Xeon Scalable server, we have eight memory channels per CPU and two DIMMs per channel. With two CPUs, that gives the server the ability to support up to 32 DIMMs. Inspur can also support higher-power Intel Optane PMem modules here.
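The slot count follows directly from the figures above. As a quick sanity check, here is a minimal sketch of that arithmetic; the function and parameter names are ours for illustration and do not come from any Inspur or Intel tool.

```python
def max_dimm_slots(sockets: int, channels_per_socket: int, dimms_per_channel: int) -> int:
    """Total DIMM slots = sockets x memory channels per socket x DIMMs per channel."""
    return sockets * channels_per_socket * dimms_per_channel


# Figures from the text: two CPUs, eight channels per CPU, two DIMMs per channel.
nf5180m6_slots = max_dimm_slots(sockets=2, channels_per_socket=8, dimms_per_channel=2)
print(nf5180m6_slots)  # 32
```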

Inspur’s solution supports high-end Intel Xeon Platinum SKUs with top-bin TDPs. Some 1U servers cannot handle 270W TDP CPUs like the Xeon Platinum 8380, but Inspur does in this design.

One small item worth noting is what is between the fans and the CPU and memory. Here we can see several PCIe headers that can be used to enable NVMe on the front storage. If this were an EDSFF or other configuration where more PCIe lanes in the front are needed, we would expect to see cables running from this area, through the center channel, and to the backplane. Having the center cable channel between the fans also helps with cable management versus having to bring all of the cables to the side of the chassis and around the fans.

Between the CPUs and memory and the OCP NIC 3.0 slot, there are a number of headers for SATA and power. Locating these near the edge of the chassis also helps with cable routing.

The risers we showed earlier are tool-less, and one can see the area from a bit further back as we remove the riser and take a step back.

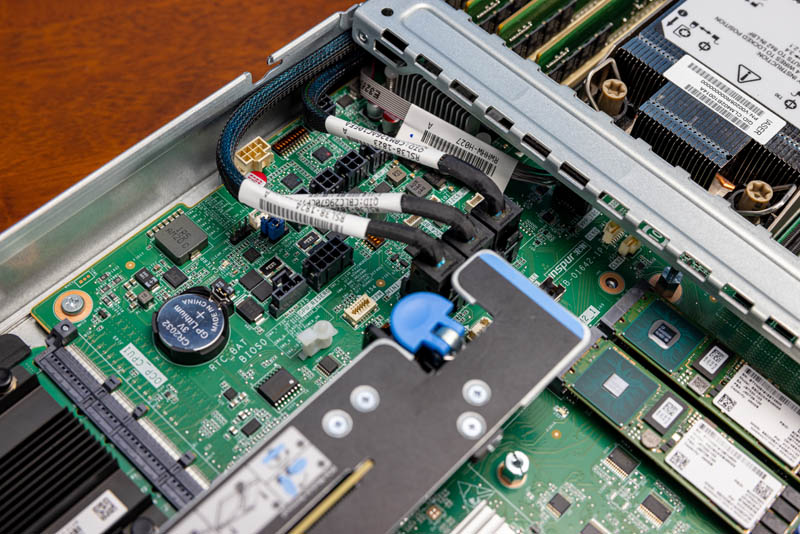

The motherboard in this system is very similar to the one we saw in the Inspur NF5280M6. Perhaps the biggest difference is that the 2U server had an internal OCP header for a SAS mezzanine card. In this system, we get two M.2 SSDs to use for boot duties.

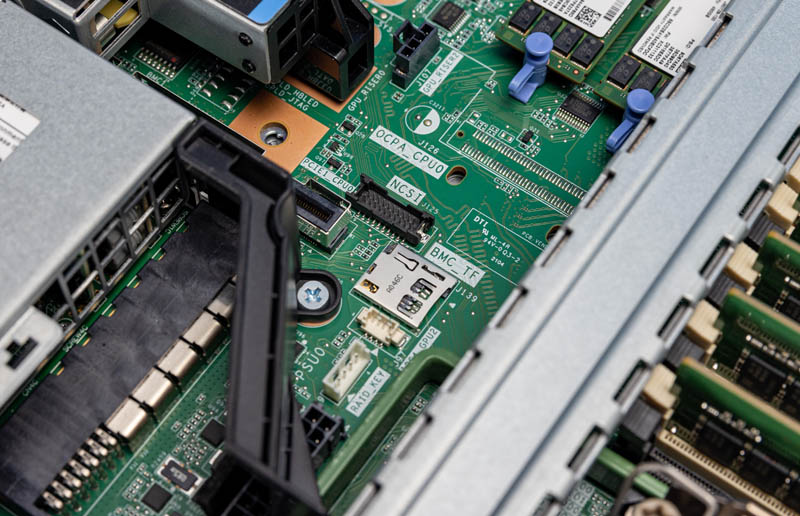

As with the 2U version, we also get the MicroSD card slots for the system and BMC.

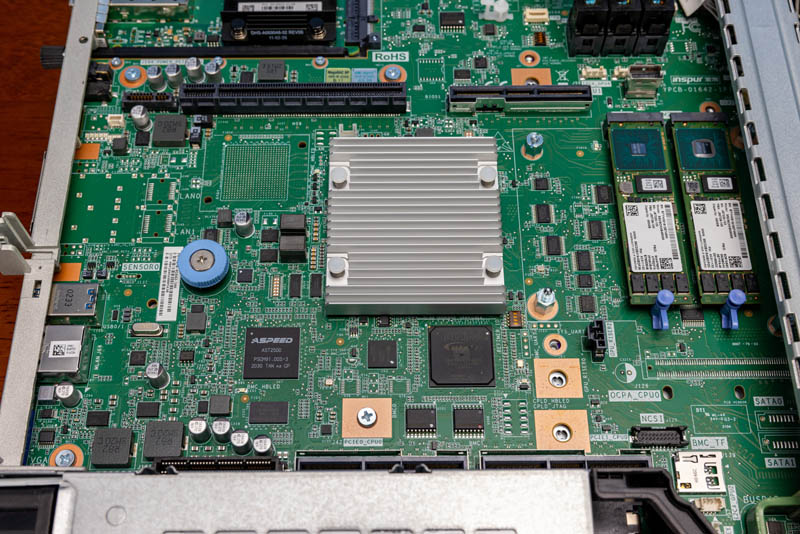

Here we see a few interesting points with the risers removed. First, the large heatsink is the Lewisburg Refresh PCH. Under that, we have the ASPEED BMC and the Intel-Altera FPGA for the system.

One can also see pads for a NIC as well as two SFP cages that are unpopulated on this system. Inspur has a few options, like onboard networking, that can be configured but that our test system does not utilize. This is similar to how this system has pads for the internal SAS controller, but those are not populated, and instead we have two M.2 SSDs. The same base motherboard has these small customizations available.

Next, we are going to get to the block diagram to see how this is all connected, then we will get to the management and performance.

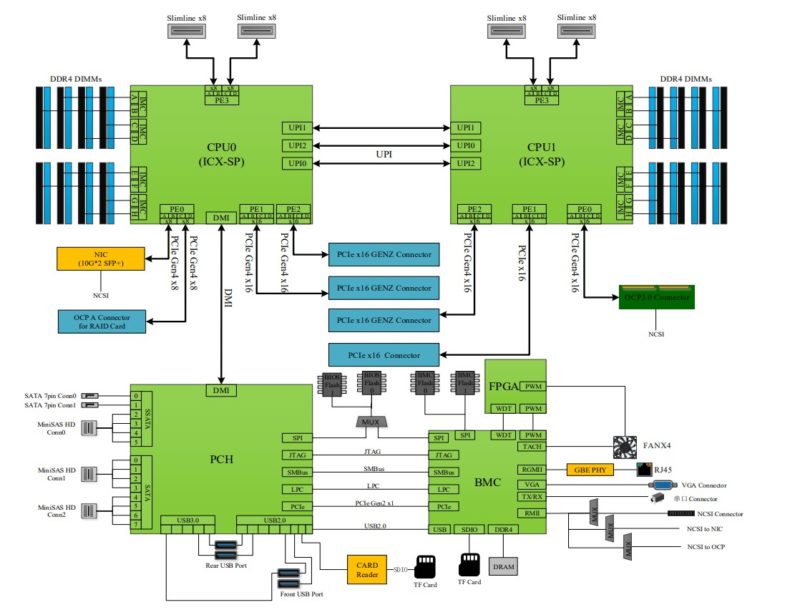

Inspur NF5180M6 Block Diagram

Since there are a lot of configuration options in the NF5180M6, it is worth showing the block diagram.

Here we can see the FPGA is being used for things like PWM fan control, and also that there are dual system and BMC firmware images. The PCH only provides PCIe for BMC management, while the rest of the PCIe is handled by the two Xeon CPUs.

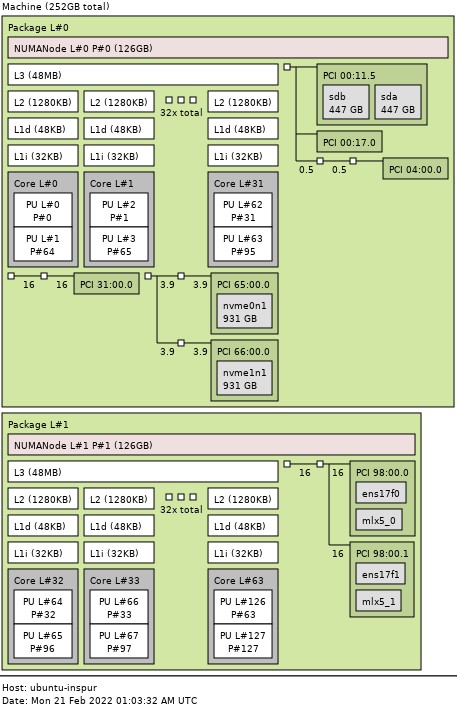

Above is an example of the topology of the system as you saw it in the photos, with Intel Xeon Platinum 8362 CPUs. We tried a number of different configurations, but this screenshot is from the one that we have photos of.

Next, let us look at management.

Inspur Systems NF5180M6 Management

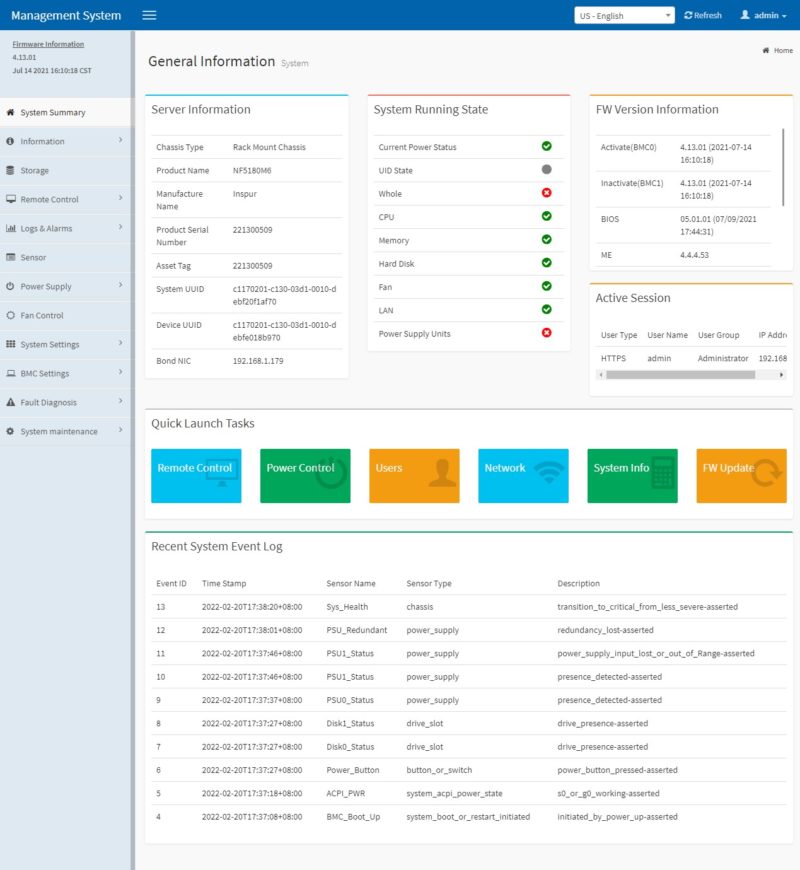

Inspur’s primary management is via IPMI and Redfish APIs. That is what most hyperscale and CSP customers will utilize to manage their systems. We have shown this before, so we are not going to go into too much extra detail, but we will have a recap here. Inspur also includes a robust and customized web management platform with its management solution.
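For readers who have not scripted against a BMC before, here is a minimal sketch of what Redfish-style automation can look like. It uses only the standard DMTF Redfish paths (`/redfish/v1/Systems` and the `ComputerSystem.Reset` action) and builds the requests without sending them. The BMC address, the credentials, and the system ID `"1"` are placeholders we made up, and we have not verified which resources or reset types this particular BMC exposes.

```python
import base64
import json
import urllib.request


def redfish_request(bmc_host, path, username, password, body=None):
    """Build (but do not send) an HTTPS Redfish request using HTTP Basic auth."""
    url = f"https://{bmc_host}{path}"
    data = json.dumps(body).encode("utf-8") if body is not None else None
    method = "POST" if body is not None else "GET"
    req = urllib.request.Request(url, data=data, method=method)
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    req.add_header("Authorization", f"Basic {token}")
    req.add_header("Accept", "application/json")
    if data is not None:
        req.add_header("Content-Type", "application/json")
    return req


def list_systems_request(bmc_host, username, password):
    """GET the standard Redfish systems collection."""
    return redfish_request(bmc_host, "/redfish/v1/Systems", username, password)


def reset_request(bmc_host, system_id, username, password, reset_type="ForceRestart"):
    """POST the standard ComputerSystem.Reset action.

    system_id is BMC-specific (assumption: discover it from the systems collection),
    and the supported reset_type values vary by implementation.
    """
    path = f"/redfish/v1/Systems/{system_id}/Actions/ComputerSystem.Reset"
    return redfish_request(bmc_host, path, username, password, {"ResetType": reset_type})


# Placeholder address and credentials, for illustration only.
req = reset_request("192.0.2.10", "1", "admin", "changeme")
print(req.get_method(), req.full_url)
```

Actually sending one of these would mean passing the request to `urllib.request.urlopen` with TLS settings appropriate for the BMC's certificate, which we leave out of the sketch.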

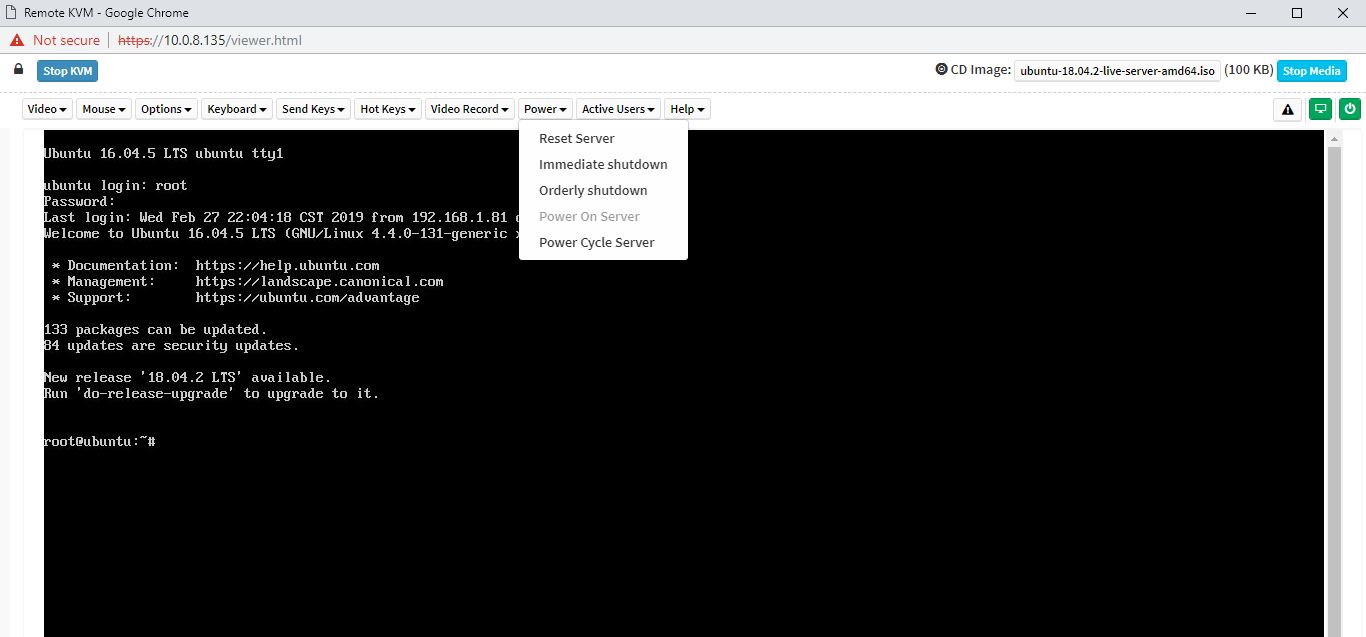

There are key features we would expect from any modern server. These include the ability to power cycle a system and remotely mount virtual media. Inspur also has an HTML5 iKVM solution that has these features included. Some other server vendors do not have a fully-featured HTML5 iKVM with virtual media support as of this review being published.

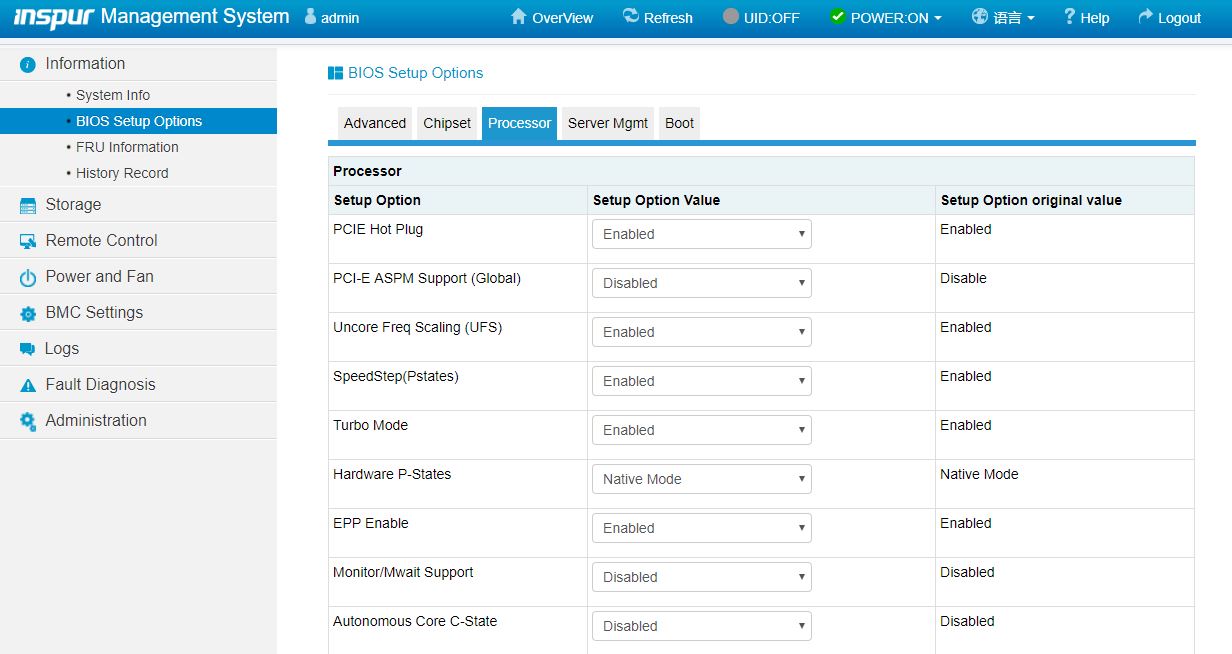

Another feature worth noting is the ability to set BIOS settings via the web interface. That is a feature we see in solutions from top-tier vendors like Dell EMC, HPE, and Lenovo, but one that many vendors in the market do not offer.

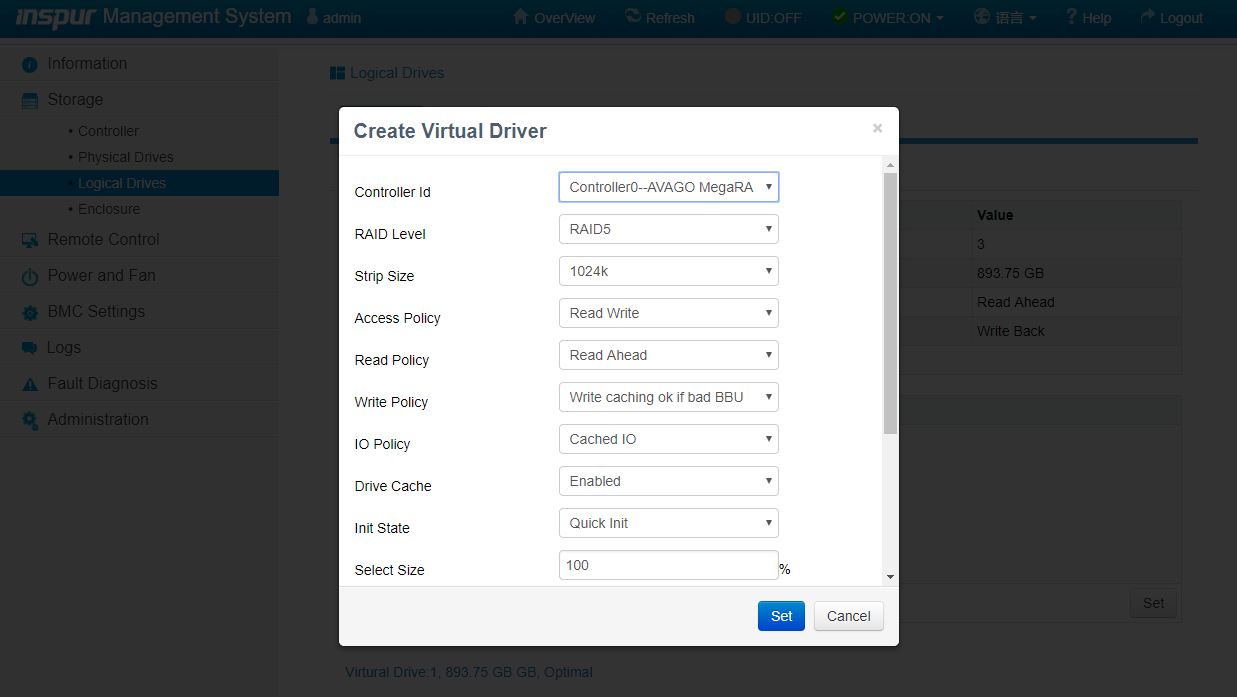

Another web management feature that differentiates Inspur from lower-tier OEMs is the ability to create virtual disks and manage storage directly from the web management interface. Some solutions allow administrators to do this via Redfish APIs, but not web management. This is another great inclusion here.

Based on comments in our previous articles, many of our readers have not used an Inspur Systems server and therefore have not seen the management interface. We have an 8-minute video clicking through the interface and doing a quick tour of the Inspur Systems management interface:

It is certainly not the most entertaining subject. However, if you are considering these systems, you may want to know what the web management interface looks like on each machine, and that tour can be helpful.

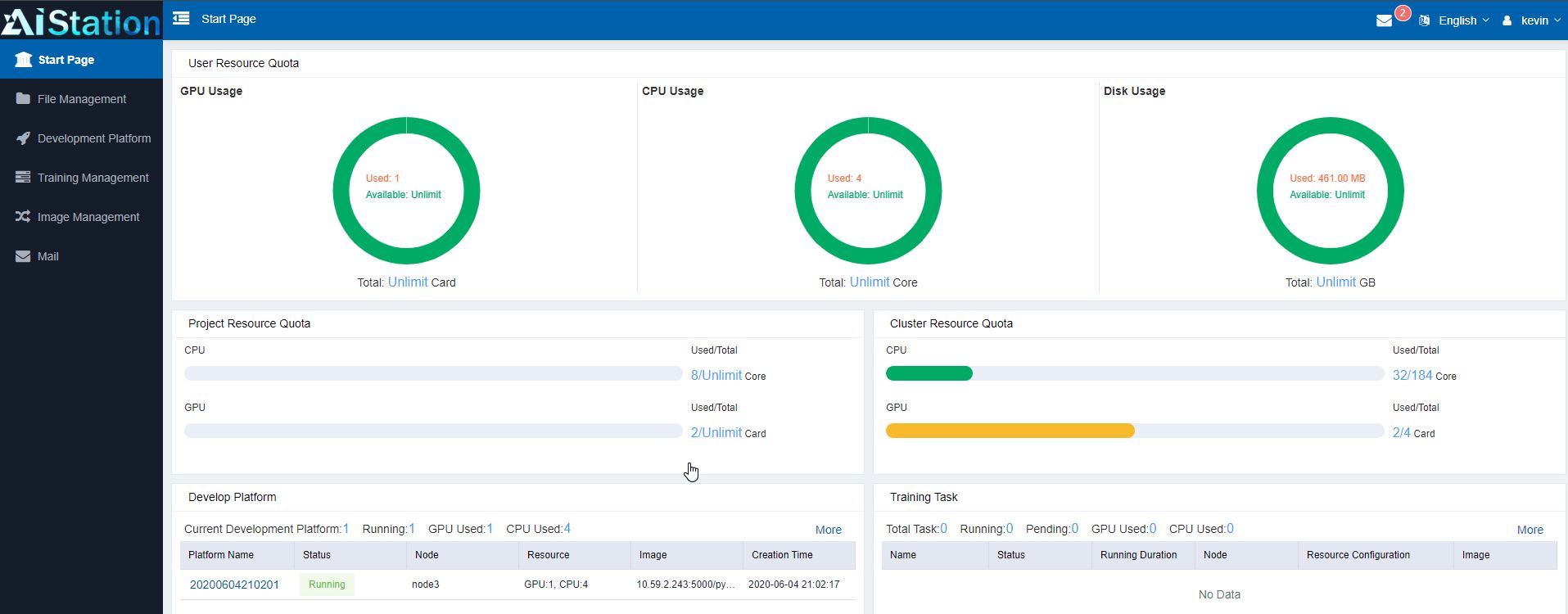

In addition to the web management interface, we have also covered Inspur AIStation for AI Cluster Operations Management Solution.

Inspur has an entire cluster-level solution to manage models, data, users, as well as the machines that they run on. For an AI training server like this, that is an important capability. We are going to direct you to that article for more on this solution.

Next, let us discuss performance.

Inspur NF5180M6 Performance

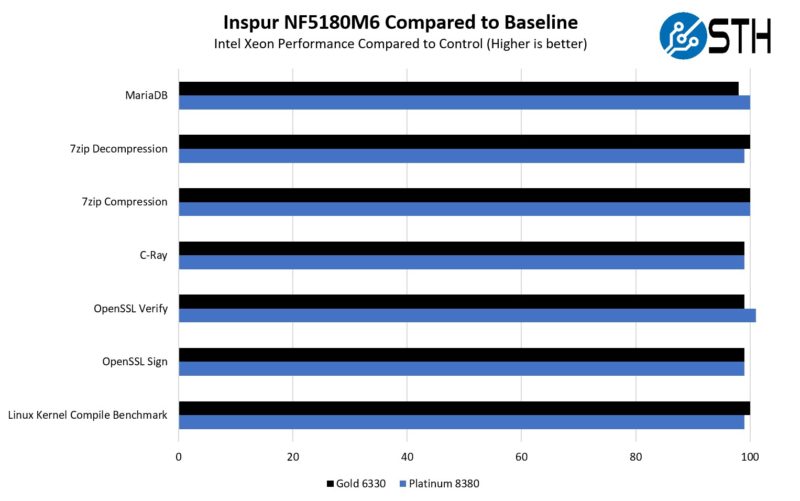

In terms of performance, we wanted to test higher-end SKUs to ensure the system has adequate cooling. Modern systems, if they have insufficient cooling, will see CPUs throttle down and that will have a negative impact on performance. Verifying the top-end of the CPU TDP range helps us see if the server has enough cooling to run the entire SKU stack at full performance. As such, we just wanted to do a quick comparison to our baseline with processors to ensure performance and cooling were acceptable.
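As a side note for readers who want to check for throttling on their own systems, here is a minimal sketch, assuming a Linux host on Intel CPUs where the kernel exposes per-CPU `thermal_throttle` counters in sysfs. This is not the method used for the results in this review, and the function names are ours. The idea is to snapshot the counters before and after a load run and see whether any of them increased.

```python
from pathlib import Path


def read_throttle_counts(cpu_root="/sys/devices/system/cpu"):
    """Return {cpu_name: {counter_name: count}} from the sysfs thermal_throttle counters."""
    counts = {}
    pattern = "cpu[0-9]*/thermal_throttle/*_throttle_count"
    for counter in sorted(Path(cpu_root).glob(pattern)):
        cpu = counter.parent.parent.name  # e.g. "cpu0"
        counts.setdefault(cpu, {})[counter.name] = int(counter.read_text().strip())
    return counts


def throttled_during(before, after):
    """List CPUs where any throttle counter increased between two snapshots."""
    return sorted(
        cpu
        for cpu, counters in after.items()
        if any(value > before.get(cpu, {}).get(name, 0) for name, value in counters.items())
    )


if __name__ == "__main__":
    snapshot = read_throttle_counts()
    # An empty result means the counters are not exposed on this machine.
    print(f"Read throttle counters for {len(snapshot)} logical CPUs")
```

In practice, one would call `read_throttle_counts()` once before a benchmark and once after, then pass both snapshots to `throttled_during()`.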

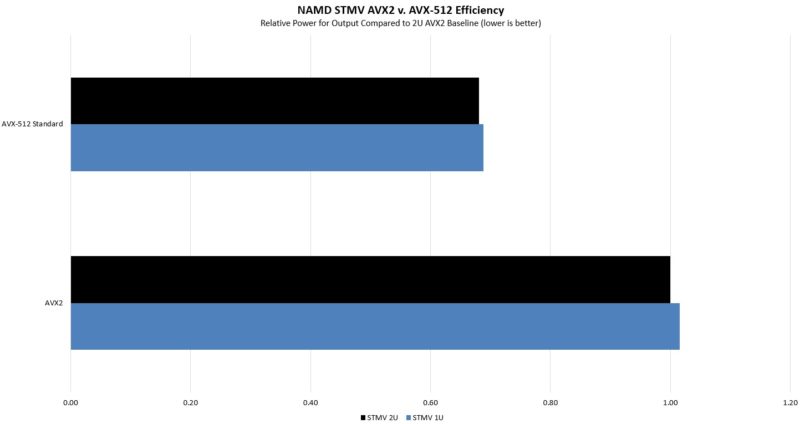

Just looking at a higher-end AVX2/AVX-512 workload, we see that the 1U and 2U versions of the Inspur servers are very close in terms of performance. While the 2U NF5280M6 was slightly faster, achieving twice the rack density in the 1U platform offsets this very small performance delta.
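To make the density argument concrete, here is a small sketch of the per-rack-unit arithmetic. The 0.98 ratio below is a hypothetical placeholder and not a measured result from this review; substitute the actual ratio of 1U to 2U performance for your workload.

```python
def throughput_per_rack_unit(relative_perf: float, rack_units: int) -> float:
    """Node performance divided by the rack units the node occupies."""
    return relative_perf / rack_units


def density_advantage_1u(perf_1u_vs_2u: float) -> float:
    """Per-rack-unit throughput of the 1U node relative to the 2U node.

    perf_1u_vs_2u is the 1U node's performance as a fraction of the 2U node's.
    A result above 1.0 means the 1U platform delivers more per rack unit.
    """
    return throughput_per_rack_unit(perf_1u_vs_2u, 1) / throughput_per_rack_unit(1.0, 2)


# Hypothetical placeholder: the 1U node at 98% of the 2U node's performance.
print(density_advantage_1u(0.98))
```

Under this simple model, the 1U node only has to deliver more than half of the 2U node's performance to come out ahead per rack unit, which is why a small delta is easily offset. It ignores power, cooling, and expansion differences between the two chassis.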

Since we had the NVIDIA T4 in the system, we just ran it in the 1U platform to ensure that the accelerator is getting proper cooling.

We found the same here. Overall, it seems like the NF5180M6 is performing as it should. We should also note that, since this is an Intel Xeon Scalable “Ice Lake” system, there are accelerators for AI inference, crypto offload, and HPC that we recently looked at. You can see that piece here.

Those same acceleration concepts apply to Ice Lake Xeon servers like this Inspur server.

Next, we are going to get to power consumption, the STH Server Spider, and our final words.

Leaving a suggestion: upload higher-res images on reviews!

They look very soft on higher dpi displays and you can barely zoom on them :(

Is the front storage options diagram from Inspur directly? The image for 10x 2.5″ and 12x 2.5″ seem to be swapped.

Jim – it is, and you are correct.

The only thing missing might be a swappable BMC, or perhaps just the application processor part of the BMC. They tend to get outdated much faster than the rest of the hardware, and even if they were using OpenBMC as a software stack it would severely limit what the machine can do in its second or third life after it’s been written off by the first enterprise owner.