Inspur NF3180A6 Power Consumption

Power in this system is provided by two 1.3kW 80Plus Platinum power supplies in a redundant 1+1 configuration.

We fairly consistently saw idle power of around 200W and maximum power under 700W in this configuration. That is a lot of hardware to feed, with a top-bin CPU, 16x DIMMs, and more. Still, we can imagine higher-end configurations in this chassis, especially with the E1.S options.
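As a rough sanity check on headroom, the figures above can be turned into per-PSU load fractions. This is a minimal sketch, not a measurement: it treats "around 200W" and "under 700W" as exactly 200W and 700W, and it assumes the two supplies share load evenly when both are healthy, which the review did not verify. The function and constant names are illustrative.

```python
# Rough PSU headroom estimate for a 1+1 redundant configuration.
# Assumptions (not measured in the review): the two supplies share load
# evenly, and the quoted figures are rounded to exactly 200 W / 700 W.

PSU_RATED_W = 1300.0   # rated output of one power supply
IDLE_W = 200.0         # approximate observed idle draw
MAX_W = 700.0          # approximate observed maximum draw


def psu_load_fraction(system_draw_w, rated_w=PSU_RATED_W, active_psus=1):
    """Return the fraction of rated capacity each active PSU carries."""
    if active_psus < 1:
        raise ValueError("at least one PSU must be active")
    return system_draw_w / (rated_w * active_psus)


for label, draw in (("idle", IDLE_W), ("max", MAX_W)):
    shared = psu_load_fraction(draw, active_psus=2)    # both PSUs healthy
    failover = psu_load_fraction(draw, active_psus=1)  # one PSU failed
    print(f"{label}: {shared:.0%} per PSU shared, {failover:.0%} on a single PSU")
```

Under these assumptions, even with one supply failed, the remaining unit would carry the full observed maximum at a little over half of its rating, which is consistent with there being room for higher-end configurations.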

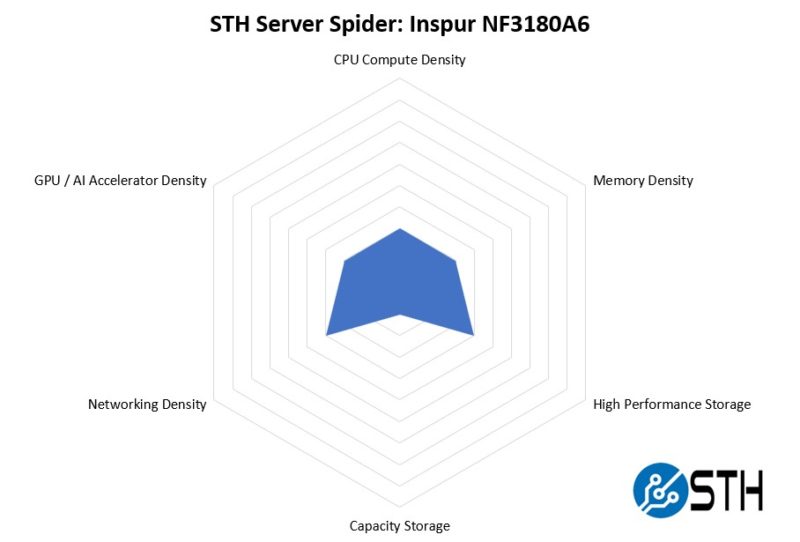

STH Server Spider: Inspur NF3180A6

In the second half of 2018, we introduced the STH Server Spider as a quick reference to where a server system’s aptitude lies. Our goal is to start giving a quick visual depiction of the types of parameters that a server is targeted at.

The Inspur NF3180A6 is not necessarily the densest server these days as a single socket 1U server. At the same time, it offers a reasonably flexible platform in a 1U configuration. Features like 16x DDR4-3200 DIMM support help produce a well-rounded platform. That also means this is a platform targeting more general-purpose workloads instead of focusing on GPU, storage, CPU, memory, or networking density.

Final Words

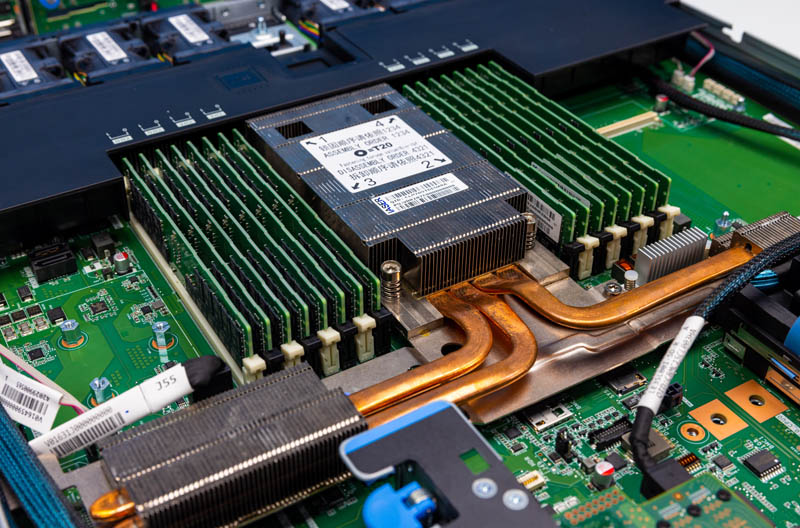

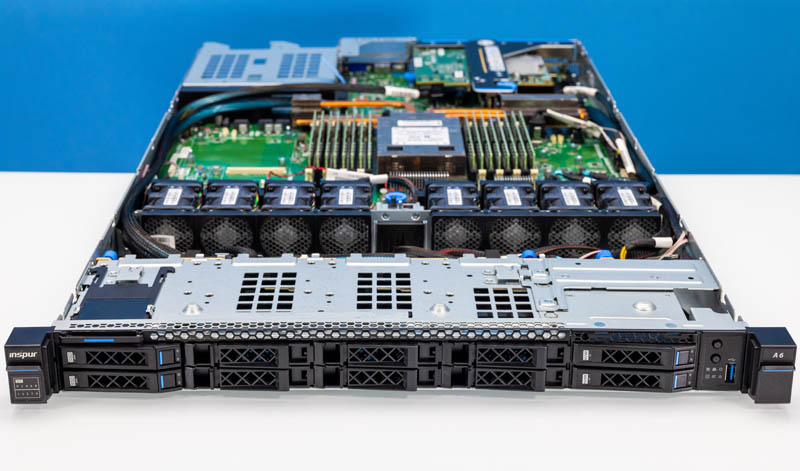

Overall, this was a really interesting platform to review. The server’s design language is distinctively Inspur, leaning into Open Compute Project principles. At the same time, there were fun features like this large heatsink that allowed the server to cool a top-end AMD EPYC 7763 in a 1U server.

It is also an interesting design case. AMD EPYC 9004 “Genoa” has launched at this point, but the “Milan” generation is a lot less expensive with DDR4, PCIe Gen4, and lower-power CPUs. The single socket platform may be more desirable to organizations given the reduced complexity. Some major hyper-scalers are starting to deploy single-socket designs in many new servers, so this is part of a broader industry trend.

Still, the Inspur NF3180A6 is a solid platform that worked well in our testing. By using AMD EPYC, it is lower-cost than other options in the market while still being very capable.

Is this a “your mileage can and will vary; better do your benchmarking and validating” type of question, or is there at least an approximate consensus on the breakdown in roles between contemporary Milan-based systems and contemporary Genoa ones?

RAM is certainly cheaper per GB on the former; but that’s a false economy if the cores are starved for bandwidth and end up being seriously handicapped; and there are some nice-to-have generational improvements on Genoa.

Is choosing to buy Milan today a slightly niche move (if perhaps quite a common niche, like memcached, where you need plenty of RAM but don’t get much extra credit for RAM performance beyond what the network interfaces can expose)? Or is it still very much something one might do for, say, general VM farming so long as there isn’t any HPC going on, because it’s still plenty fast and not having your VM hosts run out of RAM is of central importance?

Interesting, it looks like the OCP 3.0 slot for the NIC is fed via cables to those PCIe headers at the front of the motherboard. I know we’ve been seeing this on a lot of the PCIe Gen 5 boards, and I’m sure it will only get more prevalent as it’s probably more economical in the end.

One thing I’ve wondered is whether we will get to a point where the OCP/PCIe card edge connectors are abandoned, and all that cards have is power connectors and slimline cable connectors. The big downside is that those connectors are way less robust than the current ‘fingers’.

I think AMD and Intel are still shipping more Milan and Ice than Genoa and Sapphire. Servers are not like the consumer side where demand shifts almost immediately with the new generation.