The Inspur Electronic Information NF3180A6 is a really interesting server for the company. Many of the Inspur servers we have reviewed over the years focus on maximum AI compute performance, CPU density, or edge use cases. The NF3180A6 is instead a more general-purpose 1U AMD EPYC 7003 server, focused on lower per-node pricing rather than on achieving the highest density per rack unit.

Inspur NF3180A6 External Hardware Overview

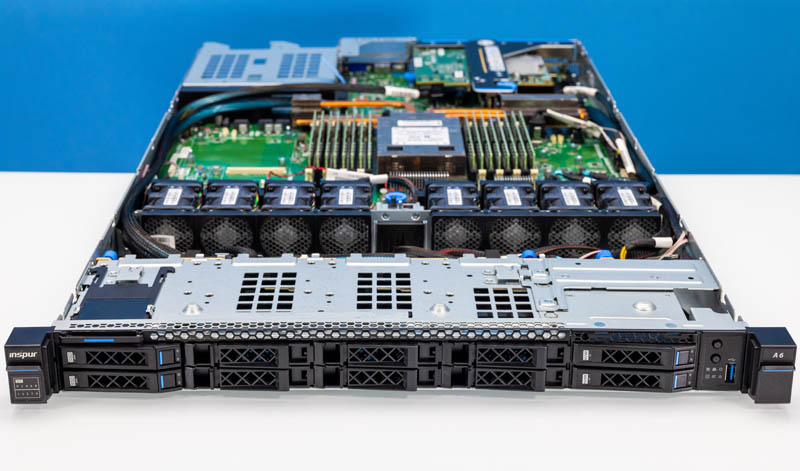

The system is a 1U platform with just under 31″ of depth. We are looking at a 10x 2.5″ configuration, but there are also 4x 3.5″, 16x E1.S, and 32x E1.S configurations. The longer configuration option adds around 1.2″ of depth, coming in just under 33″.

On the left front, we have the A6 model number, a USB port, power buttons, status LEDs, and NVMe SSDs. The “A” stands for AMD, although Inspur has changed this designation for its AMD EPYC Genoa platforms.

On the right side, we get the service tag, SATA (optionally SAS) bays, and drive status LEDs.

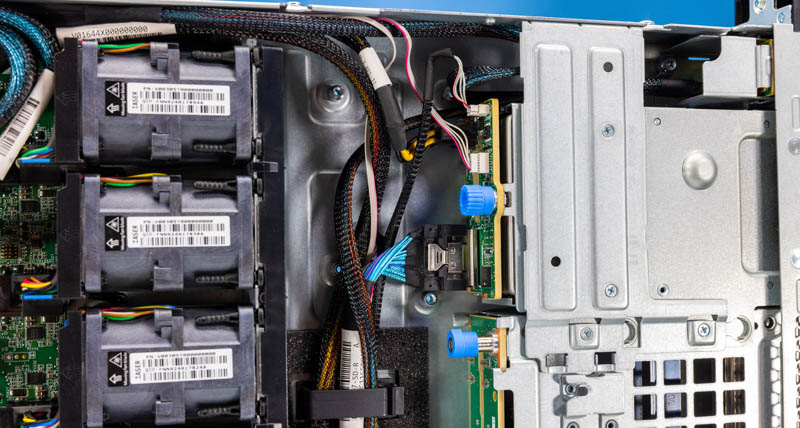

To even out the external/internal overview sections, here is a look at the NVMe backplane, which in our server supports only two NVMe SSDs.

Here is the SATA backplane. These backplanes are designed to be easily swapped. The other change needed would be the cabling, but there are more storage options than the one we are looking at here.

Moving to the rear of the server, we have a fairly standard Inspur 1U layout.

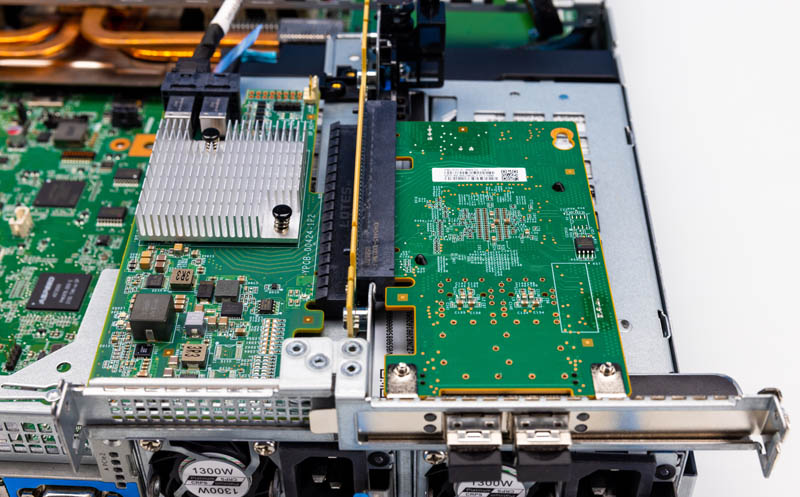

On the left side, we have networking in this configuration. There is a full-height expansion card on top with an OCP NIC 3.0 slot on the bottom.

PCIe card expansion happens via this riser. Here we can see the NIC along with the Broadcom SAS3008 controller.

In the center of the server, we have two USB 3 ports and a VGA port for local KVM cart management. There is also an IPMI port for out-of-band management that we will discuss more in our management section.

The power supplies are 1.3kW 80Plus Platinum CRPS units. Inspur offers both higher- and lower-wattage power supplies depending on the configuration.

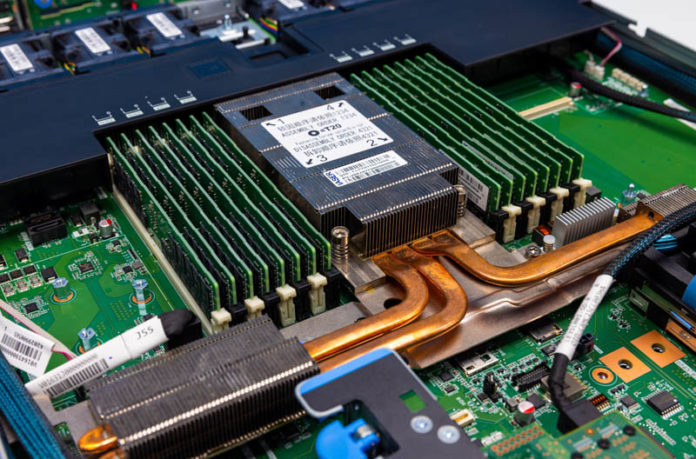

Next, let us get inside the system for our internal hardware overview.

Is this a “your mileage can and will vary; better do your own benchmarking and validation” type question, or is there at least an approximate consensus on how roles break down between contemporary Milan-based systems and contemporary Genoa ones?

RAM is certainly cheaper per GB on the former, but that’s a false economy if the cores are starved for bandwidth and end up seriously handicapped. There are also some nice-to-have generational improvements on Genoa.
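For a rough sense of the bandwidth gap being discussed, here is a back-of-the-envelope sketch. It assumes theoretical peak figures only: 8 channels of DDR4-3200 for EPYC 7003 (Milan) versus 12 channels of DDR5-4800 for EPYC 9004 (Genoa), 8 bytes per transfer per channel, and the top core count of each generation (64 and 96). The function name `peak_bandwidth_gbs` is illustrative, and sustained real-world bandwidth is always lower than these peaks.

```python
def peak_bandwidth_gbs(channels: int, mts: int, bus_bytes: int = 8) -> float:
    """Theoretical peak DRAM bandwidth per socket in GB/s (decimal units).

    channels  -- populated memory channels per socket
    mts       -- transfer rate in megatransfers per second
    bus_bytes -- data bytes moved per transfer per channel (64-bit = 8)
    """
    return channels * mts * bus_bytes / 1000


# Assumed platform figures: (memory channels, MT/s, max cores per socket)
platforms = {
    "EPYC 7003 (Milan), DDR4-3200": (8, 3200, 64),
    "EPYC 9004 (Genoa), DDR5-4800": (12, 4800, 96),
}

for name, (channels, mts, cores) in platforms.items():
    bw = peak_bandwidth_gbs(channels, mts)
    # Per-core share assumes every core is active and the top-core-count SKU
    print(f"{name}: {bw:.1f} GB/s per socket, {bw / cores:.2f} GB/s per core at {cores} cores")
```

Under those assumptions, Genoa offers about 2.25x the peak socket bandwidth (460.8 vs 204.8 GB/s), or roughly 4.8 vs 3.2 GB/s per core at the top core counts. Lower-core-count SKUs change the per-core picture considerably, which is part of why the answer depends on the workload.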

Is choosing to buy Milan today a slightly niche move (if perhaps quite a common niche, like memcached, where you need plenty of RAM but don’t get much extra credit for RAM performance beyond what the network interfaces can expose)? Or is it still very much something one might do for, say, general VM farming, so long as there isn’t any HPC going on, because it’s still plenty fast and not having your VM hosts run out of RAM is of central importance?

Interesting, it looks like the OCP 3.0 slot for the NIC is fed via cables from those PCIe headers at the front of the motherboard. I know we’ve been seeing this on a lot of the PCIe Gen 5 boards, and I’m sure it will only get more prevalent, as it’s probably more economical in the end.

One thing I’ve wondered is whether we will get to a point where OCP/PCIe card-edge connectors are abandoned and cards have only power connectors and slimline cable connectors. The big downside is that those connectors are far less robust than the current ‘fingers’.

I think AMD and Intel are still shipping more Milan and Ice Lake than Genoa and Sapphire Rapids. Servers are not like the consumer side, where demand shifts almost immediately with a new generation.