Inspur NE5260M5 Internal Hardware Overview

Opening the lid, we can see the power distribution board and storage on the left, two riser assemblies in the middle, and an airflow guide directing air through the chassis.

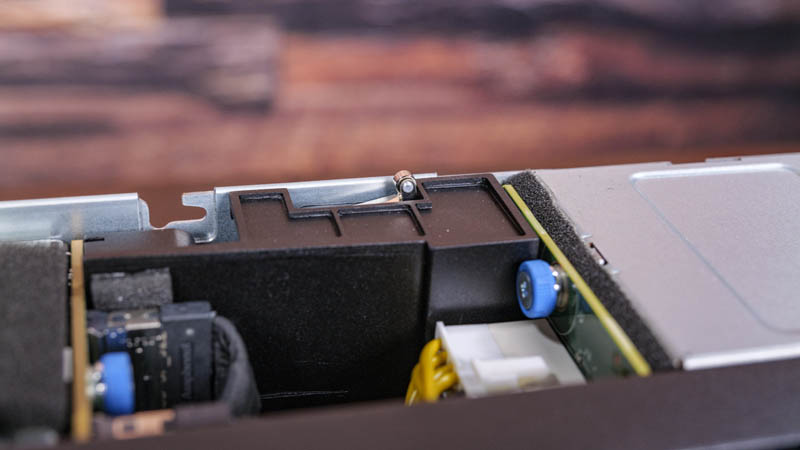

With edge servers, chassis intrusion is a big deal. Instead of having a small plastic switch for chassis intrusion, the NE5260M5 has a robust metal arm that detects if the chassis has been opened.

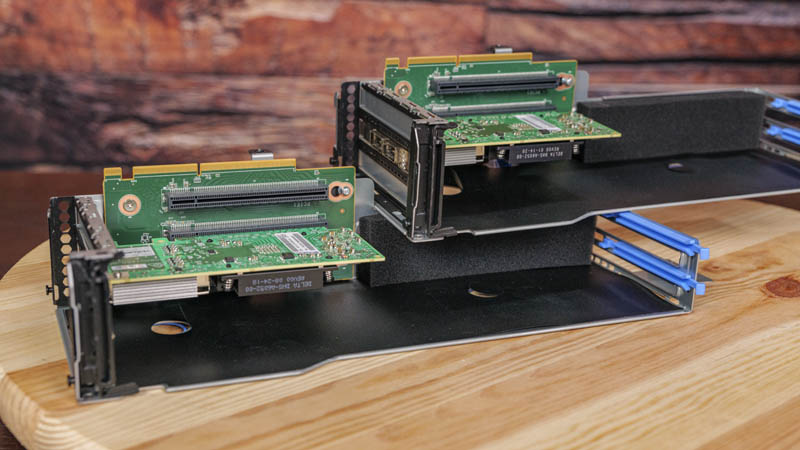

The risers running through the middle of the chassis are designed to house a number of different configuration options. In our test unit, we have two NVIDIA-Mellanox NICs. However, the system is NVIDIA EGX certified, so it is also designed for a number of GPUs. Our system has two PCIe 3.0 x16 slots in each riser, for four in total. There is another configuration option with one PCIe x16 and two PCIe x8 slots per riser, yielding six PCIe slots in total. We have heard a popular configuration is to use this system with several NVIDIA T4 GPUs for edge inferencing.
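To make the slot arithmetic concrete, here is a minimal Python sketch of the two riser options described above. The class and function names are hypothetical, and it assumes every slot is PCIe 3.0 and electrically wired to its stated width, which the review confirms only for the x16 slots in our test unit. The per-lane figure comes from the PCIe 3.0 signaling rate (8 GT/s with 128b/130b encoding) and is a theoretical ceiling, not a measured result.

```python
from dataclasses import dataclass

# PCIe 3.0: 8 GT/s per lane, 128b/130b encoding, 8 bits per byte.
# Theoretical GB/s per lane in one direction (not a measured figure).
PCIE3_GBS_PER_LANE = 8 * 128 / 130 / 8

# The chassis holds two riser assemblies.
RISERS_PER_SYSTEM = 2


@dataclass(frozen=True)
class RiserConfig:
    """Hypothetical model of one riser option; names are illustrative."""
    name: str
    slot_widths: tuple  # lane width of each slot on a single riser


def summarize(cfg: RiserConfig) -> dict:
    """Return system-wide slot count, lane count, and theoretical bandwidth."""
    slots = len(cfg.slot_widths) * RISERS_PER_SYSTEM
    lanes = sum(cfg.slot_widths) * RISERS_PER_SYSTEM
    return {
        "slots": slots,
        "lanes": lanes,
        "theoretical_gbs_per_direction": round(lanes * PCIE3_GBS_PER_LANE, 1),
    }


# Assumption: widths below are electrical widths, all PCIe 3.0.
AS_TESTED = RiserConfig("2 x16 per riser", (16, 16))
ALTERNATE = RiserConfig("1 x16 + 2 x8 per riser", (16, 8, 8))

if __name__ == "__main__":
    for cfg in (AS_TESTED, ALTERNATE):
        print(cfg.name, summarize(cfg))
```

Under these assumptions, both options expose the same number of lanes in total; the alternate layout trades slot width for slot count, which suits several smaller cards.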

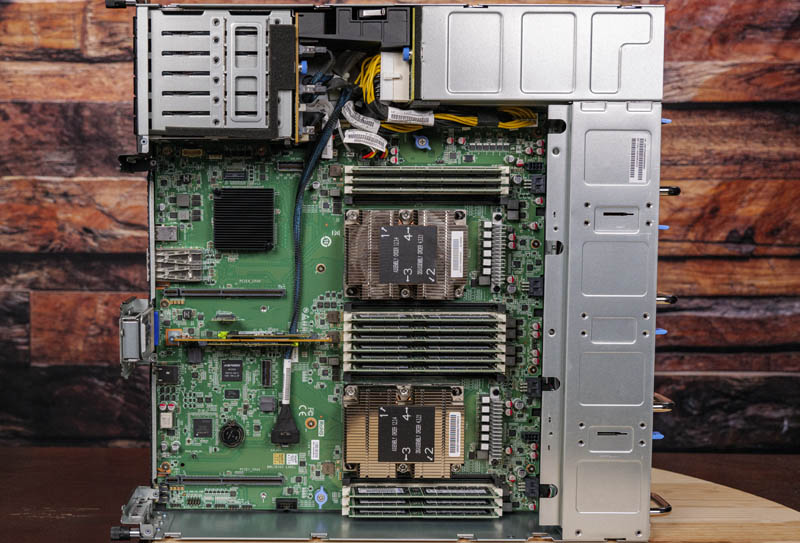

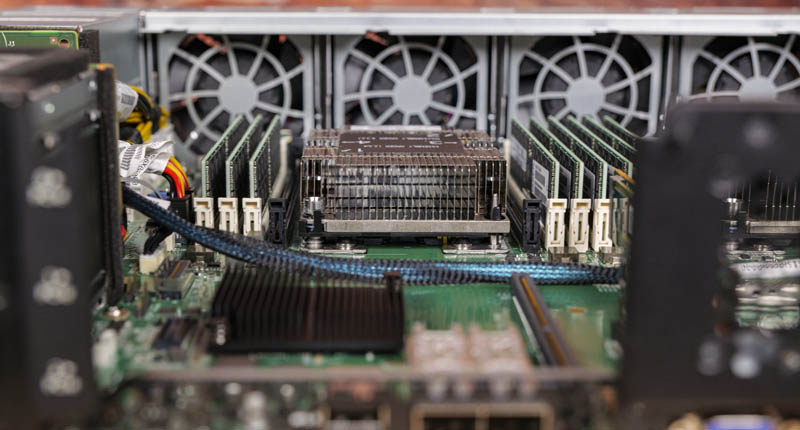

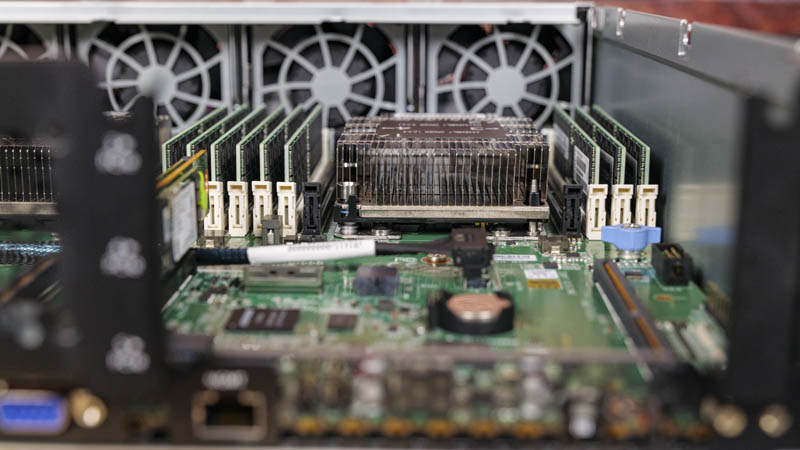

With the air baffle and risers removed, we can see the inside of the NE5260M5. This is a fairly standard layout for CPUs and PCIe expansion, but the rest of the chassis has been modified to make it significantly shorter-depth.

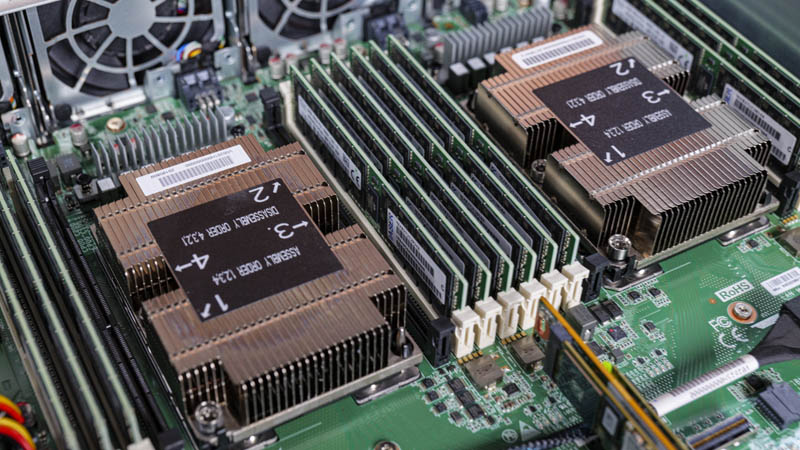

Powering the server, we see two Intel Xeon Scalable CPUs. This system takes first- and second-gen Xeon Scalable CPUs, including Refresh SKUs. Each CPU gets eight DDR4 DIMM slots, for sixteen in total. These CPUs have six memory channels each, so eight slots per socket is an unusual count. It almost seems as though this layout was done intentionally, given we know the next-generation Intel Ice Lake-SP will have eight DDR4 channels.
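As a rough illustration of why eight slots per socket stands out, the sketch below spreads DIMM slots across memory channels as evenly as possible. The helper name is hypothetical, and the even-spread rule is an assumption: the review does not say which channels on this board actually carry the second slot. The six-channel figure applies to first- and second-gen Xeon Scalable, and the eight-channel figure to Ice Lake-SP.

```python
def dimms_per_channel(slots: int, channels: int) -> list:
    """Spread DIMM slots across channels as evenly as possible.

    Hypothetical helper: the real board routing may differ.
    """
    base, extra = divmod(slots, channels)
    # The first `extra` channels receive one additional slot.
    return [base + 1 if i < extra else base for i in range(channels)]


if __name__ == "__main__":
    # Six channels (first- and second-gen Xeon Scalable), eight slots.
    print(dimms_per_channel(8, 6))  # [2, 2, 1, 1, 1, 1]
    # Eight channels (Ice Lake-SP), eight slots.
    print(dimms_per_channel(8, 8))  # [1, 1, 1, 1, 1, 1, 1, 1]
```

With six channels, two of them end up with two DIMMs while the rest have one. With eight channels, the same eight slots map to exactly one DIMM per channel.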

The PCH options are the Intel C622 or C627. Inspur is utilizing the Lewisburg PCH’s 10GbE capabilities. The Intel C627 adds Intel QuickAssist Technology (QAT) to the platform for those deployments that need crypto and compression acceleration. This 10GbE option is popular for lowering system costs, but it is something we saw Intel remove in the 3rd Generation Intel Xeon Scalable Cooper Lake Lewisburg Refresh PCH.

Inside the system, one can see the airflow design. The large fan modules are designed to push a high volume of air through the chassis to cool hot CPUs and expansion cards such as FPGAs, SmartNICs, or GPUs.

The air baffle is designed to direct airflow both over the CPU heatsinks and through to the expansion card areas, while limiting airflow to the storage and power distribution areas.

Here is the view through the left riser location. In systems like these, we sometimes see TDP limitations on the CPUs. With Inspur’s design, the NE5260M5 can support the full Xeon stack up to 205W TDP CPUs.

Here is a view from the right riser location. One can quickly see how that air baffle is designed to direct airflow through these sections to cool important parts.

On the storage side, we wanted to quickly show the backplane here. Despite the compact size, the Inspur NE5260M5 has a focus on serviceability. A great example from the storage backplane is that one can see a blue thumbscrew on the PCB. One can also see a hook for the PCB on the left edge. This type of design minimizes the number of screws that must be turned and thus speeds field service. In edge servers, speed and ease of service are key differentiators.

Storage is not limited to the 2.5″ bays and PCIe slots. Inspur also has a dual M.2 SSD boot drive slot in the system on a small riser PCB. This is a common way to deliver boot devices in modern servers, as SSDs are very reliable and one can put redundant boot devices inside the system rather than using a hot-swap bay.

While you may see a TPM module above, something that caught our attention was the lack of an OCP NIC slot. Most of the Inspur servers we have tested have had either an OCP 2.0 or OCP 3.0 NIC slot, so we were slightly surprised not to see one here.

Now that we have looked at the system’s hardware, it is time to test the server.

We’re looking to deploy this form factor in 2021. It’s great that STH is reviewing this class of system.

Hello, I’m the EE of this server. It also has several SKUs that support 6 bays of 2.5” NVMe SSDs, 4 bays NVMe + 2 bays SATA, or 2 bays NVMe + 4 bays SATA. Just letting readers know, thanks!