Inspur NE3412M5 Internal Hardware Overview

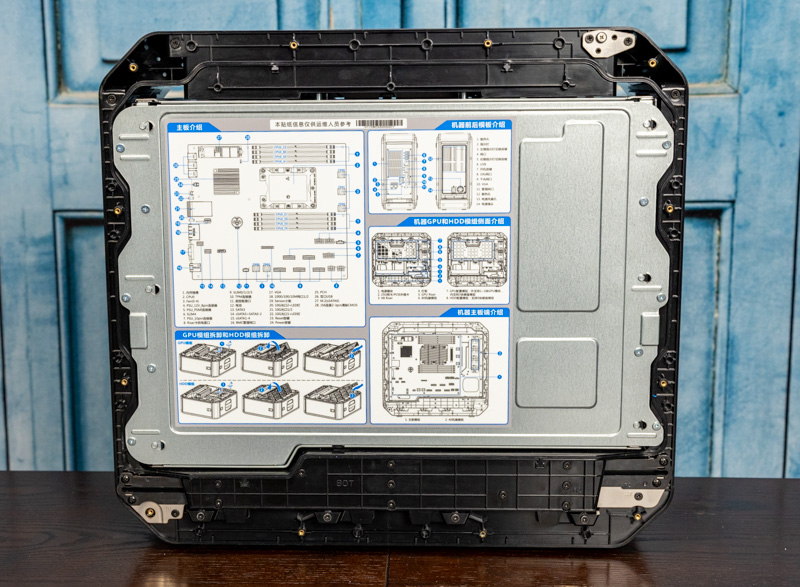

Pulling a panel off, one can see that the external plastic is not the only layer of protection: inside, the motherboard sits in its own metal compartment. Here we also get a nice service guide. Since the unit we are reviewing is a configuration that major Chinese hyperscalers use as an edge device, similar to Amazon AWS Snowball and other similar boxes, the service guide is in Chinese. From what we understand, this can be swapped to a different language if necessary. Again, we are not discussing which major hyperscaler is using this machine, but this is a popular edge deployment system.

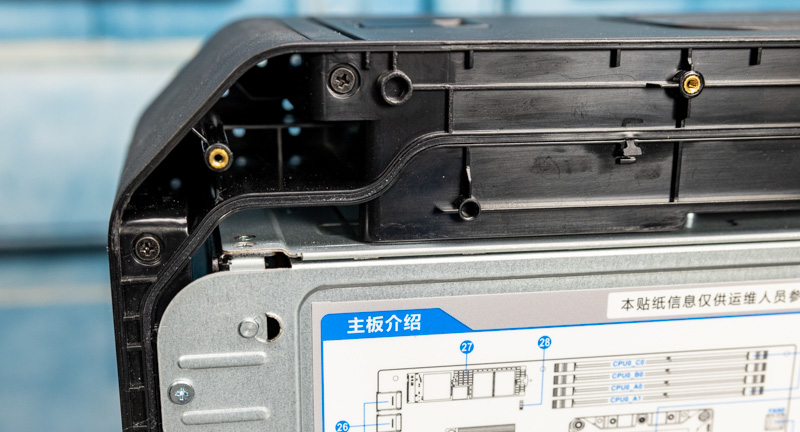

When we showed the screws holding the side panel on, one item we wanted to note is that the external plastic is only one level of protection. There is also an internal gasket that runs around the internal compartment to keep moisture out of the system. This gasket sits slightly inside the exterior shell, so it is protected by a hard plastic buffer around the system.

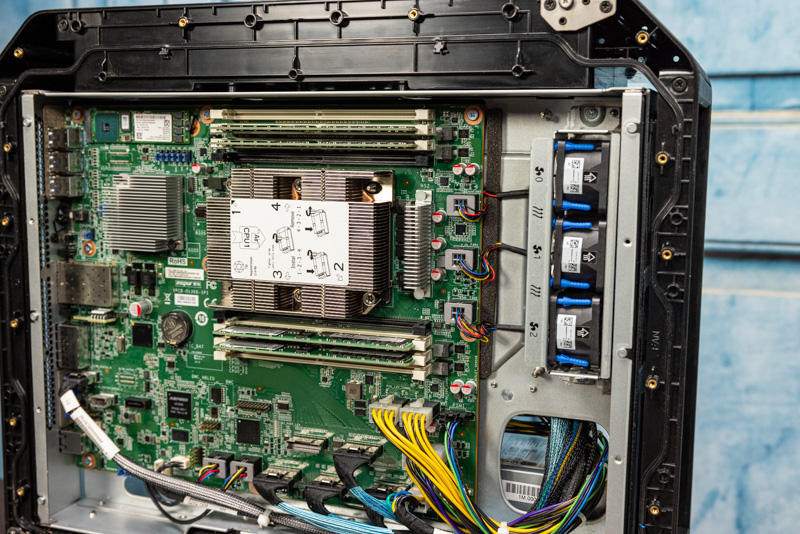

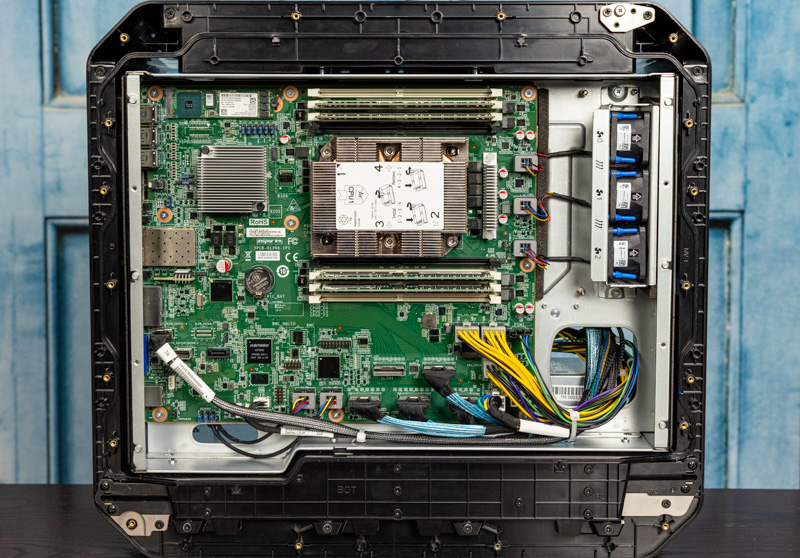

Removing the primary motherboard area cover shows how this side of the system is laid out. The CPU and memory area is covered with an airflow guide. Behind that are the PCH, the M.2 storage, and the ports. Below this area, we have the BMC along with both power and PCIe connectors.

The fans sit on their own partition secured by two screws. One notable difference is that these fans pull air from inside the chassis and exhaust it outside the chassis.

The fans also have more robust power connectors than the typical 4-pin PWM fan headers we see in servers.

The system itself is a single-socket Intel Xeon Scalable (first and second generation) design with six-channel memory and eight DIMM slots. As one would expect on an edge server like this, the heatsink is passive, so the chassis fans can provide redundant cooling.
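With six memory channels but eight DIMM slots, the channels cannot all have the same number of slots, which matters when deciding how to populate the board. The sketch below is a small Python illustration that assumes (this is not confirmed from the board photos) two channels with two slots each and four channels with one slot; `SLOTS_PER_CHANNEL`, `balanced_dimm_counts`, and `capacity_gb` are hypothetical names used only for illustration.

```python
# ASSUMPTION: hypothetical layout of 8 DIMM slots over 6 channels,
# with two channels at two DIMMs per channel and four channels at one.
SLOTS_PER_CHANNEL = [2, 2, 1, 1, 1, 1]


def balanced_dimm_counts(slots_per_channel):
    """Return total DIMM counts that put the same number of DIMMs on every channel."""
    # An equal per-channel population is capped by the channel with the fewest slots.
    max_even = min(slots_per_channel)
    return [n * len(slots_per_channel) for n in range(1, max_even + 1)]


def capacity_gb(dimm_count, dimm_size_gb, slots_per_channel=SLOTS_PER_CHANNEL):
    """Total memory for a given DIMM count, rejecting counts that exceed the slots."""
    total_slots = sum(slots_per_channel)
    if not 0 <= dimm_count <= total_slots:
        raise ValueError(f"{dimm_count} DIMMs do not fit in {total_slots} slots")
    return dimm_count * dimm_size_gb


if __name__ == "__main__":
    print(balanced_dimm_counts(SLOTS_PER_CHANNEL))  # [6]
    print(capacity_gb(6, 32), capacity_gb(8, 32))  # 192 256
```

Under that assumed layout, only a six-DIMM population places one DIMM on every channel; filling all eight slots adds capacity but leaves two channels carrying an extra DIMM.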

In front of the CPU and memory portion, we have an M.2 storage slot that will usually be occupied by a boot SSD. The heatsink below it covers the PCH. The ports are on the left side of the system here.

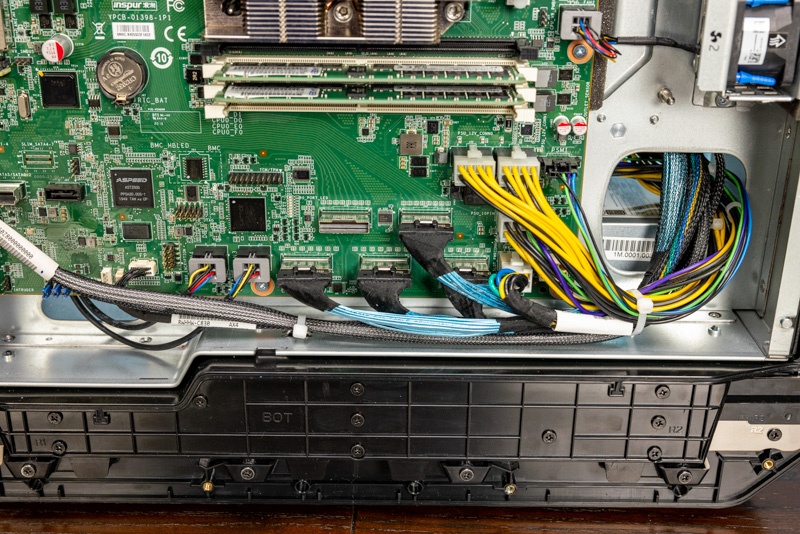

Below this area, we have the ASPEED AST2500 BMC along with high-density SATA connectors and a traditional 7-pin SATA port.

Below the CPU area is a section dedicated to cabled power and PCIe connections. There are no traditional PCIe x16 slots on the motherboard, so instead cables attach here and run to I/O on the other side of the system. This area gets less airflow, so it makes sense to use it for cable connectors.

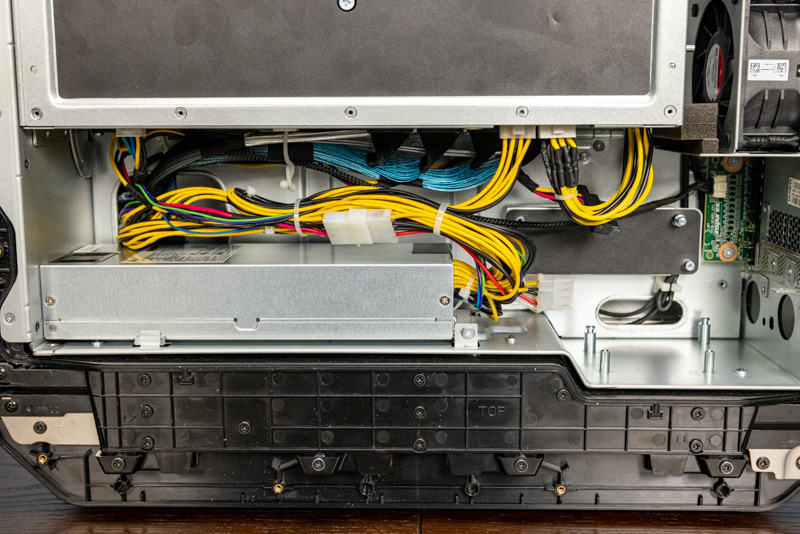

The other side of the system is very different. The top portion is a secured metal casing for expansion. This section also houses the power supply and receives both cooling and power.

First, the big feature. The peripheral section unscrews and unlatches, then swivels out, locking in either the up or down position (shown above and below). This peripheral section has room for three 3.5″ drives for capacity or redundancy. There is also a PCIe section for up to two cards. In our test system, we used a single double-width GPU. One can see the fans directing air into this peripheral section.

Underneath, we can see the PCIe and SATA cables, along with power, entering the peripheral section. We can also see the FSP PSU below.

As we mentioned earlier, this area has an empty I/O expansion slot below the fans. Our system did not have it populated, but it seems as though a PCI mezzanine option could be placed here. Another interesting feature is that the bottom has two round holes and some mounting points. The faceplate on our system had blanks over these two holes, but we could see them being used for a variety of applications.

Overall, a lot of engineering went into this solution. Our test unit has traveled to multiple continents and has clearly seen a lot of use. Still, it worked on the first startup.

Next, let us look at the system's performance and management.
