Inspur i24 Node Overview

The Inspur i24 is aimed at higher-density but lower-power hyper-converged deployments. One can see this immediately when looking at the node.

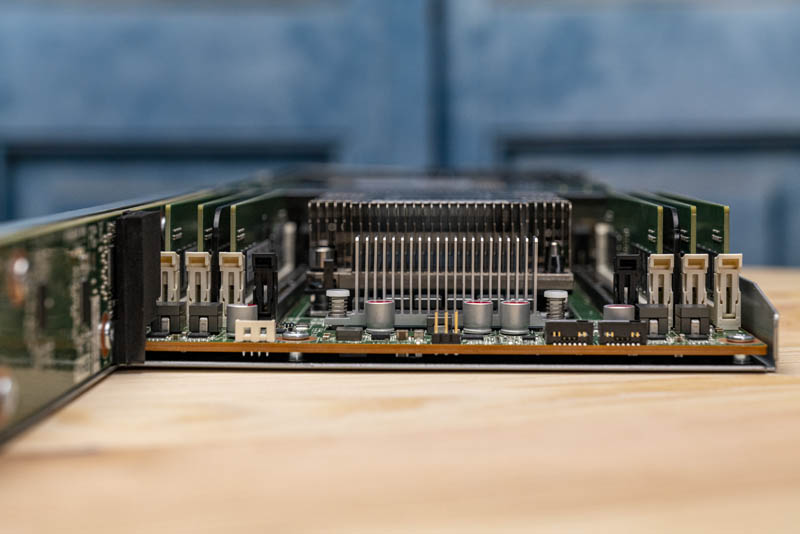

There are two sockets, each rated for CPUs of up to 165W TDP. Each CPU gets eight DIMM slots, for 16 DIMMs per node, and the system supports Intel Optane DCPMM (now Optane PMem 100). Some systems cram a full set of 24 DIMMs and 205W TDP CPUs into each node, but those systems also need higher-density fan arrays, which means they tend to be less power-efficient due to the thermal challenges.
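To put that trade-off in chassis-level terms, here is a rough back-of-the-envelope sketch. It assumes a fully populated 2U4N chassis with four nodes and uses only the slot counts and TDP ratings quoted above. The helper name `chassis_totals` is ours for illustration, and rated CPU TDP is only a proxy for actual power draw.

```python
# Back-of-the-envelope density comparison for a 2U4N chassis.
# Assumption: four identical, fully populated nodes per chassis.
NODES_PER_CHASSIS = 4
SOCKETS_PER_NODE = 2


def chassis_totals(dimms_per_socket: int, cpu_tdp_w: int) -> dict:
    """Return per-node and per-chassis DIMM counts and total rated CPU TDP."""
    dimms_per_node = dimms_per_socket * SOCKETS_PER_NODE
    return {
        "dimms_per_node": dimms_per_node,
        "dimms_per_chassis": dimms_per_node * NODES_PER_CHASSIS,
        "cpu_tdp_per_chassis_w": cpu_tdp_w * SOCKETS_PER_NODE * NODES_PER_CHASSIS,
    }


# The i24 as described: 8 DIMMs per CPU, up to 165W TDP per CPU.
i24 = chassis_totals(dimms_per_socket=8, cpu_tdp_w=165)

# A denser competitor as described: 24 DIMMs per node (12 per CPU), 205W TDP CPUs.
dense = chassis_totals(dimms_per_socket=12, cpu_tdp_w=205)

print(i24)
print(dense)
print("Extra rated CPU TDP per chassis (W):",
      dense["cpu_tdp_per_chassis_w"] - i24["cpu_tdp_per_chassis_w"])
```

Under these assumptions, the denser configuration adds 32 DIMM slots per chassis at the cost of 320W more rated CPU TDP that the fans have to deal with.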

Between the CPUs, Inspur is using a hard plastic airflow guide. Some competitive systems use thin airflow guides that are susceptible to getting caught when installing a node into a chassis. This is a very nice design.

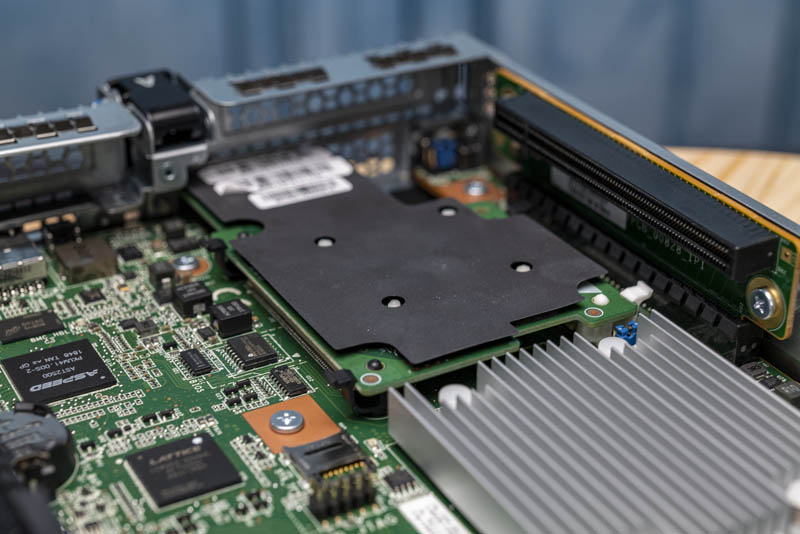

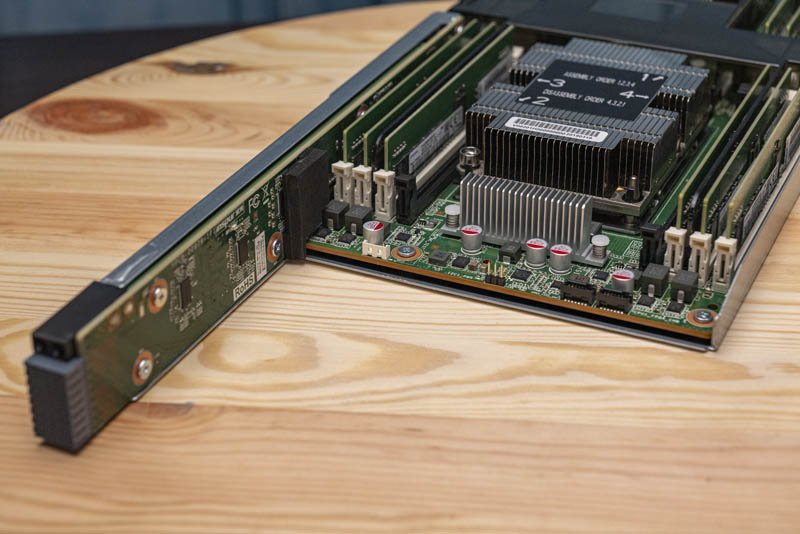

Something that is absolutely excellent in the Inspur i24 is the PCIe expansion. Inspur has a solid configuration consisting of an OCP NIC 2.0 slot for networking plus two PCIe Gen3 x16 low-profile slots for expansion, for three slots in total. Some other 2U4N systems we have used only have two expansion slots.

What makes this class-leading is the ease of servicing the PCIe slots. One pulls a tab with the node label. After that is complete, one can simply pull up on the riser and remove it from the node. In some competitive solutions, removing a riser requires taking out four or more screws. Further, each riser can be removed independently, while many competitive solutions have one riser that must always be removed first. After having tested many 2U4N solutions, this is most likely the best design we have seen to date.

There is a riser slot that is not occupied in our system. Inspur has an option to add M.2 boot drives to this location.

The rear I/O of each node may look a little barren. It is mostly focused on providing the three PCIe/OCP expansion slot I/O panels across three quadrants. The fourth has a single RJ45 management port along with a high-density connector. This high-density connector uses a dongle that provides VGA and USB connectivity. Other than that single management port, there are no 1/10GbE ports onboard the system. Instead, one will configure the OCP NIC 2.0 mezzanine slot for that functionality.

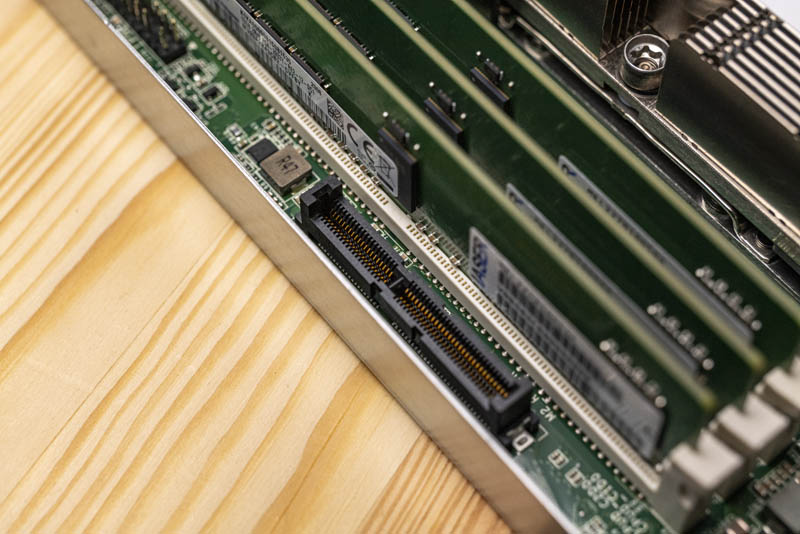

One other item we wanted to note quickly is the midplane connector. We have seen some systems where the SATA cables sprawl all over the node, which can be a slight annoyance when servicing memory modules. Here, the i24 has excellent cable routing, and the cables are routed into a channel on the node's midplane connector arm, which keeps them secure. This is another small design step, but it shows attention to detail in the design.

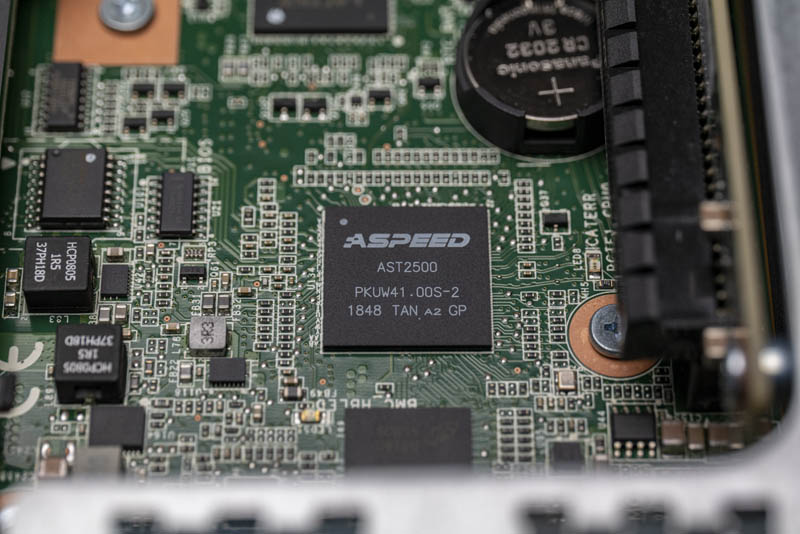

Each node gets its own ASPEED AST2500 BMC, which has some integration with the chassis-level AST1250 CMC we showed earlier.

Overall, this is a very well-designed hardware solution from Inspur.

Next, we are going to take a look at the BMC and CMC management aspects. We will then test these servers to see how well they perform.

The units should also support the new Series 200 Intel Optane DCPMMs, but only in AppDirect (not Memory) mode.
