At SC19, Inspur showed off a cooling solution that it believes can deliver lower costs and higher performance for next-generation servers. The company also had GPU servers on display, but most notably it showed its Intel Nervana NNP-T solutions in both OAM and PCIe form factors. Alan Chang, deputy GM of Inspur's server business, showed me around the company's booth, and I asked him to tell our readers more about both of those solutions, plus one wildcard: Inspur Power Systems. Inspur also designs and builds systems for Power CPUs, and I wanted to cover those solutions for the first time on STH.

Inspur Evaporative Cooling Solution

First, I asked Alan to show us around the company's Natural Circulation Evaporative Cooling Solution, which you can see us standing around in the cover image. Here is what he told me:

AC: One of the things you see on the show floor at SC19 is that immersion cooling is all over the place. There are also companies using cold plate cooling. We are showing our Natural Circulation Evaporative Cooling Technology (ECT). With traditional liquid cooling methods, you have to change a lot in your data center building. With ECT, you do not. You can add a condenser at the top of your rack, and then you do not need to do anything else to the building because the system is self-contained.

This is a 2U 4-node server being cooled using ECT. Once the liquid inside reaches 60°C, it evaporates and turns into vapor.

The vapor then rises to what we call the condenser at the top of the rack. This is much like the condenser in your refrigerator or car. The condenser cools the vapor and turns it back into liquid, which is then returned to the server. This is a closed-loop, self-sustaining solution.

Imagine this. We have a customer with a high-altitude data center. At altitude, the air density is lower, so cooling with fans is less effective and the fans have to work extra hard. In a high-altitude, high-temperature area, using fans to cool dense systems does not work.

We have one deployment in a data center in China at a high altitude, similar to Denver, where the air density is very low. There, a server cooled with air conditioning consumes around 1900W, including cooling. Once we deployed Evaporative Cooling, we saved over 200W. That is over 10.5%.

Another benefit, and another amazing part of this, is that we can sustain higher boost clocks.
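As a quick check on the figures Alan quoted, the savings can be worked out by taking the roughly 1900W air-cooled draw (including cooling) as the baseline. Since he said "over 200W" and "around 1900W", treat the result as an approximate lower bound rather than an exact measurement:

```latex
\frac{\Delta P_{\text{saved}}}{P_{\text{air}}} \approx \frac{200\,\mathrm{W}}{1900\,\mathrm{W}} \approx 0.105 \approx 10.5\%
```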

I asked Alan whether that was the real production condenser on the show floor. He explained that it was just a demonstration unit for SC19 and that the full version is larger and integrated.

Inspur NNP-T in OAM and PCIe Form Factors

I have spoken with Alan about the OAM form factor and Inspur's UBB for OAM before. You can read more in our Interview with Alan Chang of Inspur on OCP Regional Summit 2019. At SC19, the company showed its platform with the Intel Nervana NNP-T OAM module.

I asked Alan whether OAM is the form factor that any accelerator company wanting to sell into the hyperscale market will use, and he said, "absolutely."

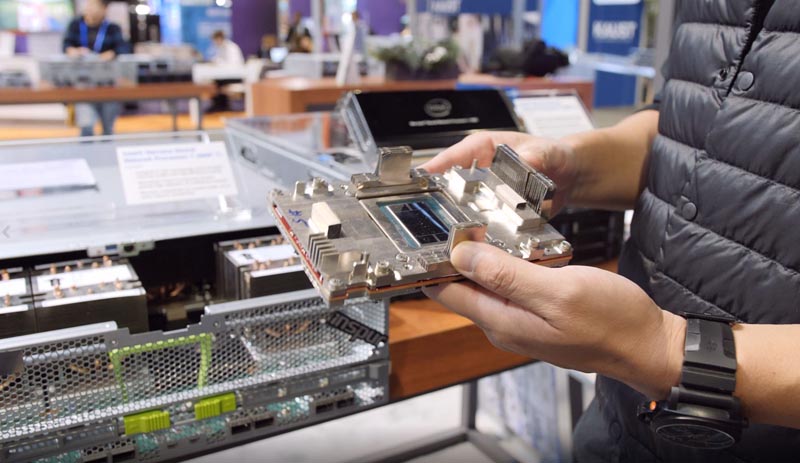

We then shifted to the Intel Nervana NNP-T PCIe module. He said:

AC: For those who already use GPGPU systems, there is the double-width NNP-T PCIe card, which integrates into existing systems. Each card has four external links that you can use to connect to another box.

There are also two internal connectors for linking cards inside a server. That means you can build many different topologies, and we have a lot of customers who want different topologies. With these interconnects, you can build 8, 16, 32, or even 128 card topologies. That has a lot of people very excited.

You will notice in the background that the NNP-T PCIe card is still labeled the NNP L-1000, since Intel changed the branding on these a few times in 2019. You will also see that the card was being shown with the Inspur Systems NF5468M5 that STH reviewed earlier this year.

Inspur POWER Systems

Inspur has a joint venture to sell Power systems. We have not covered them much on STH, but I have seen them in action, for example during the Inspur Partner Forum 2019 trip. I asked Alan to give our readers a quick overview of the solutions.

AC: One of the biggest barriers to Power systems gaining a large market share today is cost, partly because of economies of scale. So what we did was share a lot of system infrastructure with the x86 side. That allows the end customer to pick whichever solution they feel is better for their application.

For example, we have GPU systems where the GPU board, the backplanes, and the enclosure are the same between Power and x86. The only changes are the Power CPUs and motherboards. In the future, we may change the GPU board so it can take either PCIe or NVLink directly, not just PCIe as it does today.

Final Words

I want to thank Alan for chatting with me again at SC19. Inspur is pushing on a lot of fronts, and that is leading to market share growth. The company has been growing consistently and is now solidly in third place in both unit volume and revenue in the latest IDC 3Q19 Quarterly Server Tracker. IDC's numbers include both x86 and Inspur Power Systems servers, so I wanted to show off a few of the Power servers as well.
