The Rabbit of HPE

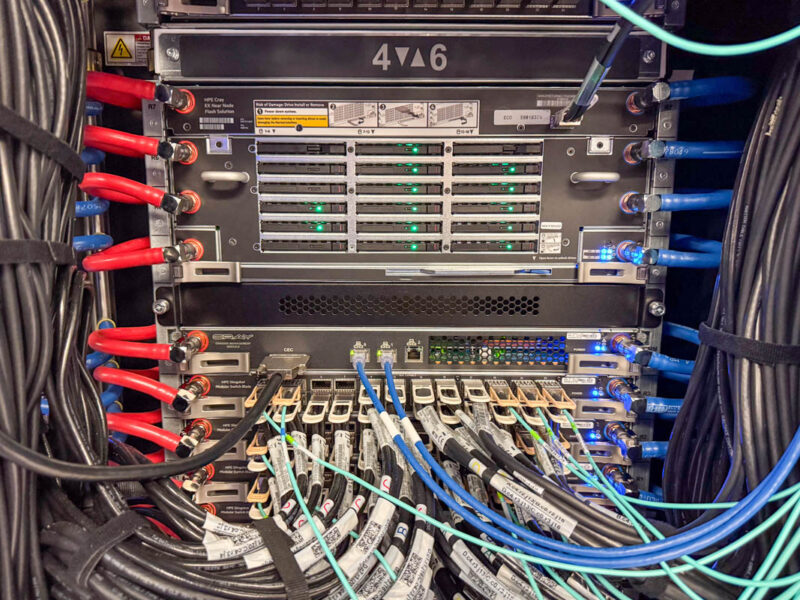

El Capitan had a feature I did not know about until today. Perhaps I was sleeping on this one. Here is a close-up of the Slingshot interconnect side. You can see that this is also liquid cooled and that the Slingshot switch trays only occupy the bottom half of the space shown here. The folks at LLNL said that their codes do not require the entire HPE Slingshot area to be populated. Instead, they have enough bandwidth with it half-populated, which leaves extra space.

In that top section, instead of just leaving it blank, there is the “Rabbit.” The Rabbit houses a total of 18x NVMe SSDs and is liquid cooled just like the rest of the system.

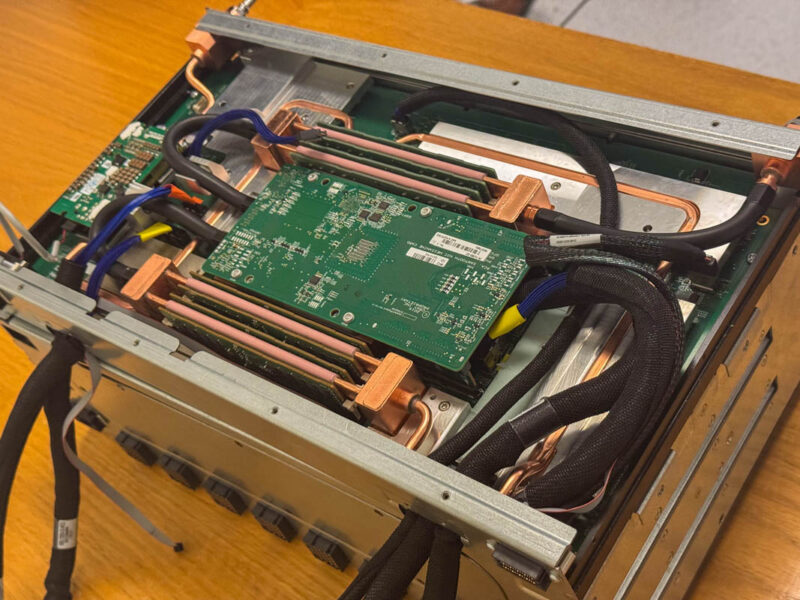

We got to see inside the system, and we saw something other than an APU. Instead, there was a CPU that looks like an AMD EPYC 7003 Milan part, which feels about right given the AMD MI300A’s generation. Unlike the APU, the Rabbit’s CPU had DIMMs with what looks like liquid-cooled DDR4 memory. Like the standard blades, everything is liquid cooled, so there are not any fans in the system.

There are a number of PCIe cables as well. Apparently the Rabbit can operate either as a standalone server with lots of storage for things like data preparation tasks, or as shared storage within the cluster.

It was hard not to feel like the Rabbit might be the most over-engineered single-socket storage server around.

Final Words

This was one of those really cool experiences where I got to go behind the scenes at a large cluster. While it is less than half the size that the xAI Colossus cluster was when we filmed it at 100,000 GPUs in September, it is also worth noting that systems like this are still huge and are done on a fraction of the budget of a 100,000 plus GPU system.

I still have a few more photos and some video I need to go through, perhaps on the plane to Taipei this weekend. You may see a weekend piece in the Substack with higher resolution photos and a bit more detail if I find something interesting going through them. The video will likely make it to the STH Labs shorts channel.

A big thank you is in order to the LLNL, DoE, NNSA, HPE, and AMD teams for making this trip possible. Or more specifically, thank you for letting me grab some shots before El Capitan gets to its classified mission. It is always great to see large-scale systems since they are all-too-often hidden from cameras.

I was a system administrator for a supercomputer center at Lockheed in the late 80s. We had a Cray X-MP, a Y-MP, a Connection Machine and several DEC VAX computers as front end compute nodes. It was an exciting time with so much raw computational power that today can be had in a smartphone.

Your visit to Aurora brought back lots of great memories.

But you had to leave your packet capture gear at home, right?

Some seriously nice kit, thanks for sharing.

If you ever find yourself in Lugano, Switzerland then you could attempt a visit to CSCS to see their HPC/Cray machines

You are missing a ‘Us’ in the title of yhis article :)

And I can’t spell ‘this’ :(

The Rabbit software is entirely open source

https://nearnodeflash.github.io/latest/

Any insights into why AMD won this vs NVIDIA back in 2020?

Probably one good reason is that AMD committed to the HPC market and FP64 while NVIDIA has been focused on mixed precision